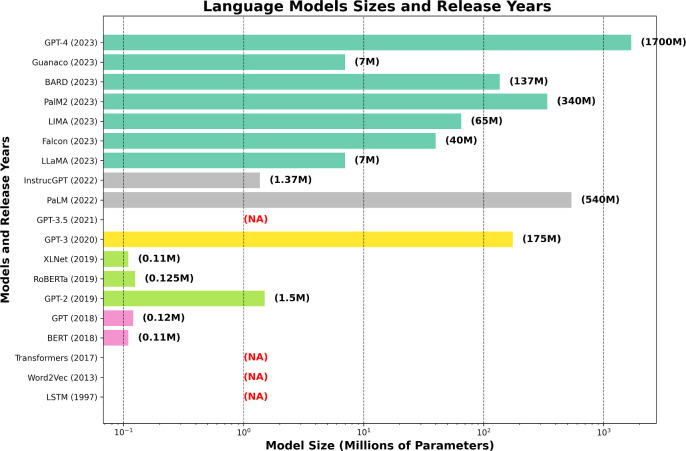

Figure 1.

The bar chart traces the evolution of several influential language models, beginning with long short-term memory (LSTM) in 1997. Models released in the same year share a color, and ‘NA’ marks models whose sizes are undisclosed or not applicable. The chart shows a sharp increase in the parameter counts of language models in recent years. Some models, such as the generative pre-trained transformer (GPT) models by OpenAI and LLaMA (Large Language Model Meta AI) by Meta AI, have scaled up considerably to enhance their capabilities, whereas others, such as the pathways language model (PaLM) by Google, have employed diverse strategies to balance performance gains with efficiency, resulting in more compact model sizes.