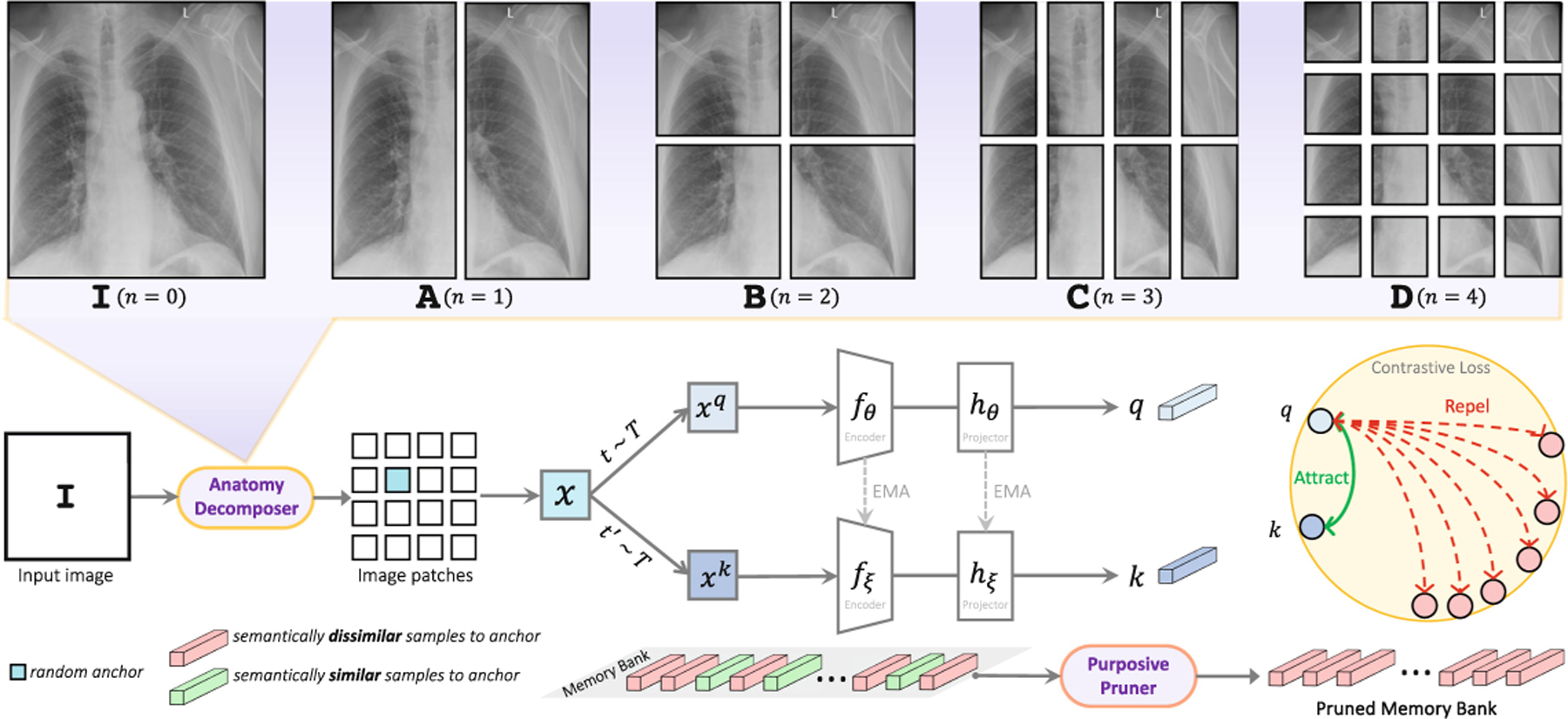

Fig. 2.

Our self-supervised learning (SSL) strategy gradually decomposes and perceives the anatomy in a coarse-to-fine manner. Our Anatomy Decomposer (AD) decomposes the anatomy into a hierarchy of parts and moves to a finer granularity level at each training stage, so that increasingly fine-grained anatomical structures are presented to the model as input. Given an input image, we pass it to AD to obtain a random anchor $x$. We augment $x$ to generate two views (positive samples) and pass them to two encoders to obtain their features. To avoid semantic collision in the training objective, our Purposive Pruner removes from the memory bank those anatomical structures, drawn from other images, that are semantically similar to anchor $x$. The contrastive loss is then computed from the positive samples' features and the pruned memory bank. The figure shows pretraining at a single granularity level.
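The pipeline in the caption can be sketched in plain Python. This is a minimal, hypothetical illustration and not the authors' implementation. The names `decompose`, `prune_memory_bank`, and `info_nce` are introduced here for illustration. Several details are assumptions: each granularity level splits the image into a 2**level by 2**level grid, the pruner drops memory-bank entries whose cosine similarity to the anchor's feature exceeds a threshold, and the loss is a MoCo-style InfoNCE with temperature 0.2. Features are plain lists standing in for encoder outputs.

```python
import math
import random


def decompose(height, width, level, rng):
    """Hypothetical anatomy decomposer: split the image into a
    2**level x 2**level grid and return one random cell (top, left, h, w)
    as the anchor. Higher levels give smaller, finer-grained anchors."""
    cells = 2 ** level
    h, w = height // cells, width // cells
    row, col = rng.randrange(cells), rng.randrange(cells)
    return (row * h, col * w, h, w)


def normalize(v):
    """Scale a feature vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def prune_memory_bank(anchor_feat, bank, threshold):
    """Hypothetical purposive pruner (assumed thresholding rule): drop bank
    entries too similar to the anchor so that they are not used as negatives."""
    return [k for k in bank if cosine(anchor_feat, k) <= threshold]


def info_nce(query, positive, negatives, temperature=0.2):
    """MoCo-style InfoNCE loss for one query; the positive logit is index 0."""
    logits = [cosine(query, positive) / temperature]
    logits += [cosine(query, k) / temperature for k in negatives]
    m = max(logits)  # subtract the max for a numerically stable log-sum-exp
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[0]


# Usage: features of the two augmented views and a toy memory bank.
rng = random.Random(0)
print(decompose(512, 512, level=2, rng=rng))  # a 128 x 128 anchor box
q, k_pos = [1.0, 0.1, 0.0], [0.9, 0.2, 0.0]
bank = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pruned = prune_memory_bank(q, bank, threshold=0.9)
print(len(pruned))  # 2: the near-duplicate [1, 0, 0] is removed
print(info_nce(q, k_pos, pruned) < info_nce(q, k_pos, bank))  # True
```

Pruning only removes negatives, so for a fixed query and positive it can only lower the loss. The removed entries are the ones that would otherwise push apart semantically similar anatomical structures, which is the semantic collision the caption refers to.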