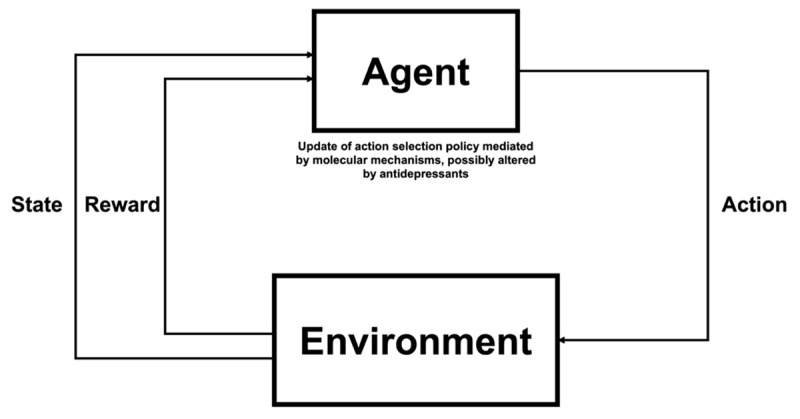

Figure 1.

Schematic diagram illustrating the RL framework. At every time step, the agent receives information about the state it is in (i.e., a representation of its current environment, such as the speed and position of the car when driving) and the amount of reward it has received. Based on this feedback, the agent adjusts its action selection policy with the aim of maximising the amount of reward obtained in the future.
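
The loop in Figure 1 can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the figure: the `ToyEnvironment` class (a five-position track with a reward at the far end), the `train` function, the tabular Q-learning update, and the parameter values `alpha`, `gamma` and `epsilon`. It is one possible instance of the framework, not a definitive implementation.

```python
import random


class ToyEnvironment:
    """Hypothetical 1-D track with states 0..4; reaching state 4 yields reward 1."""

    def __init__(self, n_states=5):
        self.n_states = n_states
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right (clipped to the track).
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.n_states - 1)
        done = self.state == self.n_states - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def train(episodes=200, max_steps=100, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Run the agent-environment loop with a tabular Q-learning agent (assumed)."""
    rng = random.Random(seed)
    env = ToyEnvironment()
    # q[state][action] is the agent's estimate of future discounted reward.
    q = [[0.0, 0.0] for _ in range(env.n_states)]

    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            # Policy: explore with probability epsilon or on ties, else act greedily.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1

            # The environment returns the new state and the reward received.
            next_state, reward, done = env.step(action)

            # The agent adjusts its estimates (and hence its policy) from this feedback.
            future = 0.0 if done else gamma * max(q[next_state])
            q[state][action] += alpha * (reward + future - q[state][action])

            state = next_state
            if done:
                break
    return q


if __name__ == "__main__":
    for s, (left, right) in enumerate(train()):
        print(f"state {s}: Q(left)={left:.3f}  Q(right)={right:.3f}")
```

In this sketch the state is a single integer, whereas a driving example would use a richer representation such as speed and position; the structure of the loop (observe state and reward, then update the policy) is the same.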