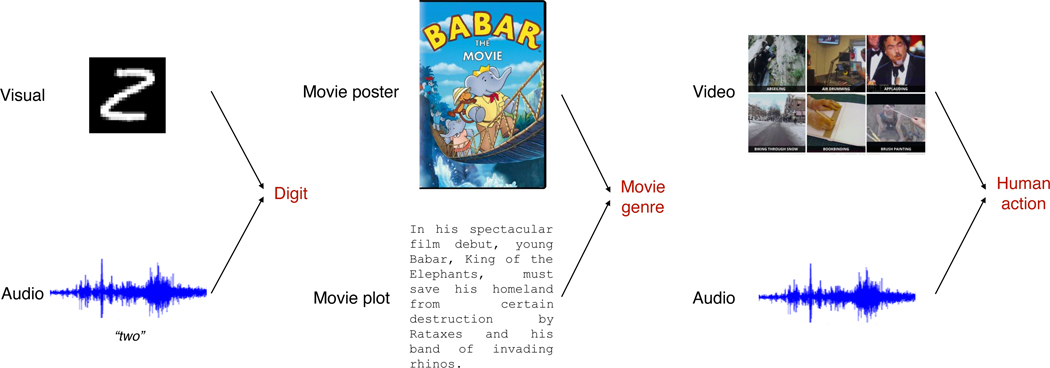

Figure 11: Multimedia. A significant body of research in multimodal learning has been fueled by the wide availability of multimedia data (language, image, video, and audio) on the internet. MultiBench includes three popular large-scale multimedia datasets of varying size and difficulty: (1) Audio-Visual MNIST (AV-MNIST) [161] is assembled from images of handwritten digits [88] and audio samples of spoken digits [94]; (2) Multimodal IMDb (MM-IMDb) [8] uses movie titles, metadata, and posters for multi-label classification over a set of movie genres; and (3) Kinetics [80] contains 306,245 video clips, each with video and audio, annotated for 400 human actions. To ease experimentation, we split Kinetics into small and large partitions (see Appendix C). Figure adapted from [8, 80].