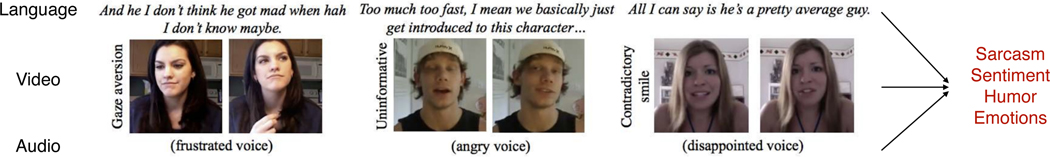

Figure 6:

Affective computing studies the perception of human affective states (emotions, sentiment, and personalities) from naturally displayed multimodal signals spanning language (spoken words), visual (facial expressions, gestures), and acoustic (prosody, speech tone) modalities [124]. MultiBench contains 4 datasets in this category, which involve fusing language, video, and audio time-series data to predict sentiment (CMU-MOSI [181] and CMU-MOSEI [183]), emotions (CMU-MOSEI [183]), humor (UR-FUNNY [64]), and sarcasm (MUStARD [24]).