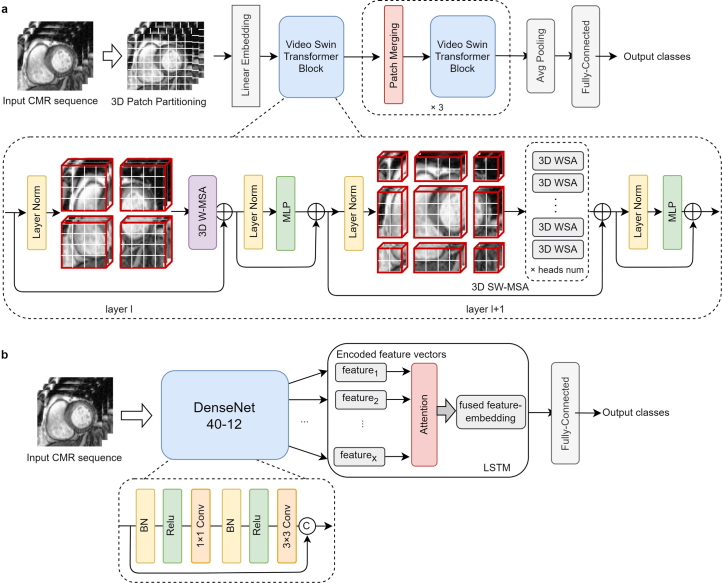

Extended Data Fig. 3. Schematic overview of Video-based Swin Transformer and CNN-LSTM frameworks for CMR interpretation.

a, Framework of the video-based Swin Transformer (VST). The model takes a cardiac MRI sequence as input, extracts distinct features through the global self-attention and shifted-window mechanisms inherent in VST, and outputs a classification score. 3D W-MSA, 3D window-based multi-head self-attention module; 3D SW-MSA, 3D shifted-window-based multi-head self-attention module; WSA, window self-attention module. b, Framework of the conventional CNN-LSTM (long short-term memory) model. Each CMR slice is encoded by the DenseNet-40–12 CNN (layers = 40; growth rate = 12) into a feature vector (feature i). These feature vectors are fed sequentially into the LSTM encoder, which uses a soft attention layer to learn a weighted-average embedding of all slices.
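The soft attention step in panel b can be illustrated with a minimal sketch. It assumes a single learned scoring vector `w` applied to each per-slice LSTM output, followed by a softmax over slices. The caption does not specify the scoring function, so the linear score, the function names and the toy inputs are all hypothetical, and this is not the authors' implementation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention_pool(hidden_states, w):
    """Weighted average of per-slice vectors.

    hidden_states: list of per-slice vectors (e.g. LSTM outputs), equal length.
    w: hypothetical scoring vector; the score of slice i is dot(w, h_i).
    Returns (pooled_embedding, attention_weights).
    """
    # One scalar relevance score per slice (assumed linear scoring).
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in hidden_states]
    # Normalise scores so the weights are positive and sum to 1.
    weights = softmax(scores)
    dim = len(hidden_states[0])
    # Weighted average across slices, computed per feature dimension.
    pooled = [
        sum(a * h[d] for a, h in zip(weights, hidden_states))
        for d in range(dim)
    ]
    return pooled, weights


# Toy example: two slices with 2-dimensional features.
slices = [[1.0, 0.0], [0.0, 1.0]]
pooled, weights = soft_attention_pool(slices, w=[0.0, 0.0])
print(weights, pooled)
```

With a zero scoring vector every slice receives the same weight, so the pooled embedding reduces to the plain mean of the slice vectors. A trained `w` would instead shift weight toward the slices most informative for the classification.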