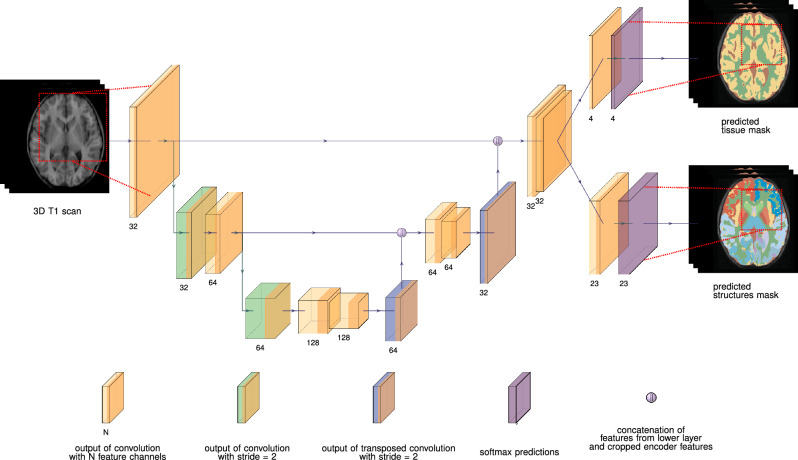

Figure 1.

The deep learning model processes a 3D T1-weighted image with a single-input, dual-output 3D convolutional neural network (CNN) that produces estimated multi-label masks for brain tissues (background, white matter, gray matter, cerebrospinal fluid) and for brain structures (background plus 22 brain structures). The CNN is based on the widely used 3D U-Net architecture and operates on 3D patches of the input scan. Each convolutional layer uses kernels, except the two convolutional layers before the softmax layers, which use kernels. Weight normalization and leaky ReLU activations (slope = 0.20) are used. The output patches have dimensions of voxels, smaller than the input patches' dimensions ( voxels) because the network uses valid (unpadded) convolutions; this mitigates off-patch-center bias.
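Why valid convolutions shrink the output patch can be checked with simple per-axis arithmetic. The sketch below is illustrative only. The figure's actual kernel and patch sizes are not given here, so the layout is hypothetical: two convolutions per level, 3-voxel kernels, 2× pooling and upsampling, and size-preserving 1-voxel convolutions before the softmax layers. The function name `valid_unet_output_size` is also hypothetical. Each valid convolution with kernel size k removes k − 1 voxels along an axis, and pooling requires evenly divisible feature maps.

```python
from typing import Optional


def valid_unet_output_size(
    input_size: int,
    depth: int = 3,
    convs_per_level: int = 2,
    kernel: int = 3,
    pool: int = 2,
) -> Optional[int]:
    """Per-axis output size of a U-Net built from valid (unpadded) convolutions.

    Hypothetical layout (an assumption, not the paper's exact network):
    `depth` encoder levels of `convs_per_level` convolutions + downsampling,
    a bottleneck block, then `depth` decoder levels of upsampling +
    `convs_per_level` convolutions. 1-voxel convolutions before the softmax
    do not change the size. Returns None if the input size is incompatible.
    """
    shrink = convs_per_level * (kernel - 1)  # voxels lost per conv block
    size = input_size

    # Encoder: lose `shrink` voxels, then downsample by `pool`.
    for _ in range(depth):
        size -= shrink
        if size <= 0 or size % pool != 0:
            return None  # pooling would not tile the feature map evenly
        size //= pool

    # Bottleneck conv block.
    size -= shrink
    if size <= 0:
        return None

    # Decoder: upsample by `pool`, then another valid conv block.
    # (Skip-connection features are cropped to match; they do not change size.)
    for _ in range(depth):
        size = size * pool - shrink
        if size <= 0:
            return None
    return size


# A cubic 3D patch: apply the same computation to each axis.
patch_in = (116, 116, 116)
patch_out = tuple(valid_unet_output_size(s) for s in patch_in)
print(patch_in, "->", patch_out)
```

With `depth=4`, an input axis of 572 voxels yields 388, matching the sizes reported for the original 2D U-Net with valid convolutions. Every output voxel therefore sees a full receptive field inside the input patch, so no prediction depends on zero padding at the patch border.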