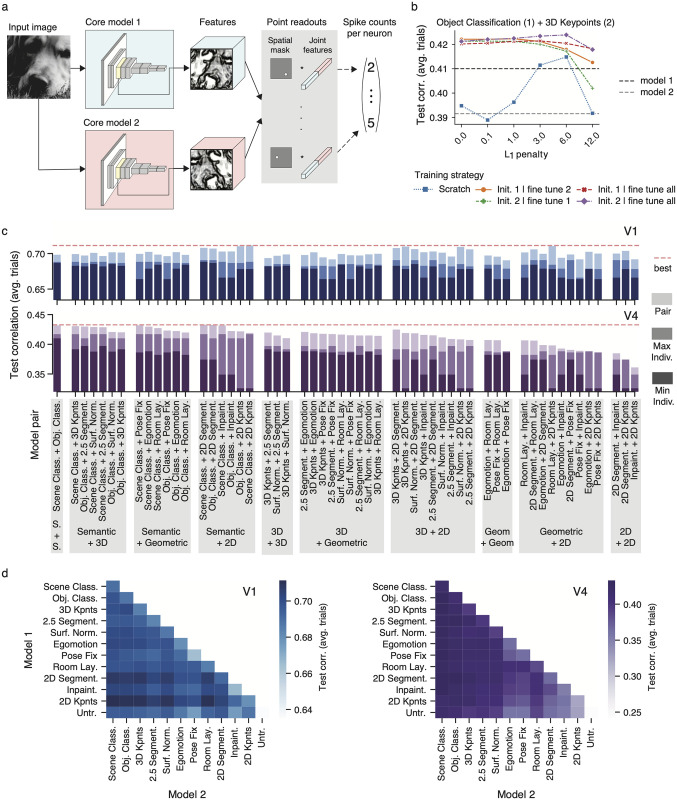

Fig 3. Jointly reading from pairs of tasks.

a, Modeling approach. We modified the methods described in Fig 1 to read out neural responses from two feature spaces simultaneously. We forwarded the same input image, at the same scale, to two pretrained taskonomy networks, extracted the features at the same layer, and concatenated them. We then learned a point readout [22] for each neuron, always keeping the cores’ weights frozen during training. b, Comparison of training strategies when jointly reading from object classification (core 1) and 3D keypoints (core 2). The increased dimensionality of the concatenated features makes it harder to find global optima, so we compared, under several regularization strengths (L1 penalty), five training strategies, four of which leverage the optimal readout weights (selected on the validation set) of the individual task models we trained earlier. We trained from scratch (i.e., initialized the readout weights at random), initialized the readout of core 1 (2) and finetuned only the readout of core 2 (1), and initialized the readout of core 1 (2) and finetuned all readout weights. The individual task-model performances are shown as dotted lines for comparison. c, Comparison of pair task models in V1 (top) and V4 (bottom). Pairs are grouped by the task-group identities of their members. The darkest (intermediate) color saturation shows the worse (better) individual task model of the pair, and the lightest color saturation shows the pair model. The highest-performing pair model is marked with a red dotted line to facilitate comparisons. Performance is measured as the correlation between model predictions and trial-averaged responses. The baseline in all bar plots is the performance of the untrained network. d, Heat map of pair task models’ performance in V1 (left) and V4 (right). In addition to the pairs in c, the heat map includes pair models with identical members (diagonal) and pairs built between each task and the untrained network (bottom row).
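The five strategies in panel b can be illustrated with a minimal NumPy sketch. This is not our implementation. It assumes a plain linear readout in place of the point readout of [22], reads "finetuned the readout of core 2 (1)" as holding the other core's readout fixed at its individual optimum, and initializes any non-pretrained readout at random. All names (`init_joint_readout`, `train_step`, the strategy labels) are hypothetical.

```python
import numpy as np

# Hypothetical labels for the five strategies compared in panel b.
STRATEGIES = (
    "scratch",          # random init, train everything
    "init1_train2",     # core-1 readout at its optimum (fixed), train core-2 readout
    "init2_train1",     # core-2 readout at its optimum (fixed), train core-1 readout
    "init1_train_all",  # core-1 readout at its optimum, train everything
    "init2_train_all",  # core-2 readout at its optimum, train everything
)

def init_joint_readout(w1_opt, w2_opt, strategy, rng, scale=0.01):
    """Return (weights, trainable_mask) for the concatenated feature space.

    w1_opt, w2_opt: individually optimal readouts, shape (n_features_k, n_neurons).
    """
    if strategy not in STRATEGIES:
        raise ValueError(f"unknown strategy: {strategy}")
    use1 = strategy.startswith("init1")
    use2 = strategy.startswith("init2")
    # Assumption: readouts that are not warm-started begin at small random values.
    w1 = w1_opt.copy() if use1 else scale * rng.standard_normal(w1_opt.shape)
    w2 = w2_opt.copy() if use2 else scale * rng.standard_normal(w2_opt.shape)
    train_all = strategy == "scratch" or strategy.endswith("train_all")
    # A warm-started readout stays fixed unless all weights are finetuned.
    m1 = np.ones_like(w1) if (train_all or use2) else np.zeros_like(w1)
    m2 = np.ones_like(w2) if (train_all or use1) else np.zeros_like(w2)
    return np.concatenate([w1, w2], axis=0), np.concatenate([m1, m2], axis=0)

def train_step(w, mask, feats, resp, lr=1e-2, l1=1e-3):
    """One masked (sub)gradient step on mean squared error plus an L1 penalty.

    feats: concatenated frozen-core features, shape (n_images, n_feat1 + n_feat2).
    resp:  neural responses, shape (n_images, n_neurons).
    """
    pred = feats @ w
    grad = feats.T @ (pred - resp) / feats.shape[0] + l1 * np.sign(w)
    return w - lr * mask * grad
```

The cores never appear in the update: only the readout weights receive gradients, which mirrors keeping the cores frozen. The joint input would be formed as `np.concatenate([f1, f2], axis=1)` from the two cores' features for the same image.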