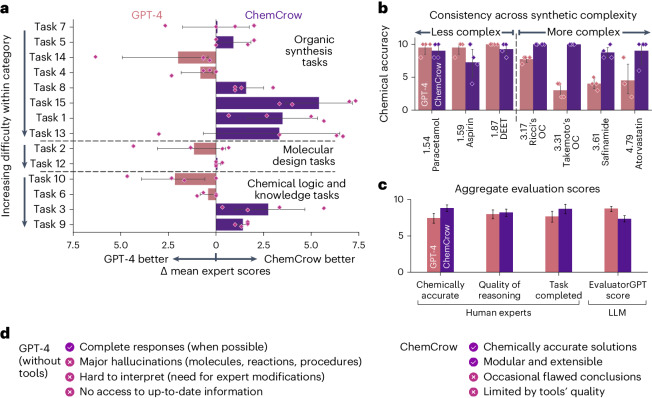

Fig. 4. Evaluation results.

Comparative performance of GPT-4 and ChemCrow across a range of tasks. a, Per-task preference. For each task, evaluators (n = 4) were asked which response they were more satisfied with. Tasks are split into three categories (synthesis, molecular design and chemical logic) and are sorted by difficulty within each category. b, Mean chemical accuracy (factuality) of responses across human evaluators (n = 4) in organic synthesis tasks, sorted by the synthetic accessibility of the targets. c, Aggregate results for each metric from human evaluators across all tasks (n = 56), compared with EvaluatorGPT scores (n = 14). Error bars represent 95% confidence intervals. d, Checkboxes highlight the strengths and flaws of each system, as determined by inspecting the observations left by the evaluators.