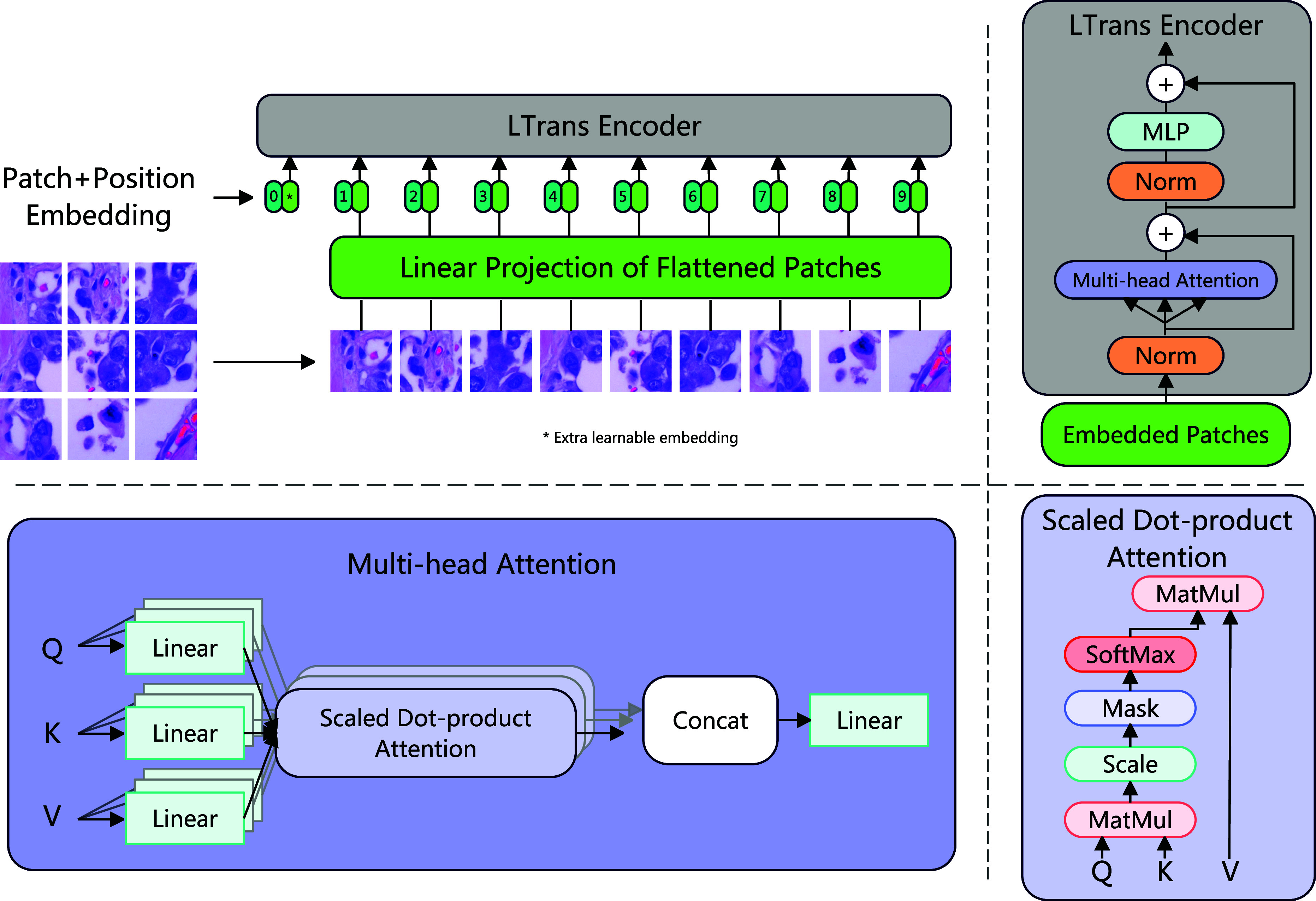

Figure 3.

Architecture of the LTrans. First, the original image is reshaped into a sequence of flattened 2D patches, which are passed through a linear projection with trainable parameters. The embedded patches are then fed into the LTrans, which consists of two normalization layers, two residual connections, a multi-head attention module, and an MLP. The details of the multi-head attention module are also shown in this figure.
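To make the data flow in the caption concrete, the following is a minimal NumPy sketch of one such block, not the authors' implementation. Several details are assumptions, since the caption does not specify them: normalization is applied before each sub-layer (as in the standard Vision Transformer), the normalization has no learnable scale or shift, the MLP has a single hidden layer with ReLU, biases and position embeddings are omitted, and the names `patchify`, `ltrans_block`, and the parameter dictionary keys are hypothetical.

```python
import numpy as np

def patchify(image, patch):
    """Reshape an (H, W, C) image into (N, patch*patch*C) flattened 2D patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must divide evenly into patches"
    x = image.reshape(h // patch, patch, w // patch, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return x.reshape(-1, patch * patch * c)

def layer_norm(x, eps=1e-6):
    """Normalize each token over its feature axis (no learnable scale/shift here)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def multi_head_attention(x, wq, wk, wv, wo, heads):
    """Scaled dot-product self-attention over N tokens with `heads` heads."""
    n, d = x.shape
    dh = d // heads

    def split(t):  # (N, D) -> (heads, N, dh)
        return t.reshape(n, heads, dh).transpose(1, 0, 2)

    q, k, v = split(x @ wq), split(x @ wk), split(x @ wv)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))  # (heads, N, N)
    out = (attn @ v).transpose(1, 0, 2).reshape(n, d)       # concatenate heads
    return out @ wo

def ltrans_block(x, p, heads):
    """Two normalizations, two residual connections, attention, and an MLP.
    Pre-norm ordering and the ReLU activation are assumptions."""
    x = x + multi_head_attention(layer_norm(x), p["wq"], p["wk"], p["wv"], p["wo"], heads)
    hidden = np.maximum(layer_norm(x) @ p["w1"], 0.0)
    return x + hidden @ p["w2"]

# Usage with arbitrary illustrative sizes (not taken from the paper).
rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32, 3))
patch, dim, heads = 8, 64, 4
w_embed = rng.standard_normal((patch * patch * 3, dim)) * 0.02  # linear projection
tokens = patchify(image, patch) @ w_embed                       # (16, 64)
params = {k: rng.standard_normal((dim, dim)) * 0.02 for k in ("wq", "wk", "wv", "wo")}
params["w1"] = rng.standard_normal((dim, 4 * dim)) * 0.02
params["w2"] = rng.standard_normal((4 * dim, dim)) * 0.02
out = ltrans_block(tokens, params, heads)
print(out.shape)  # (16, 64)
```

The block preserves the token shape, so several such blocks could be stacked; the two `x + ...` lines correspond to the two residual connections named in the caption.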