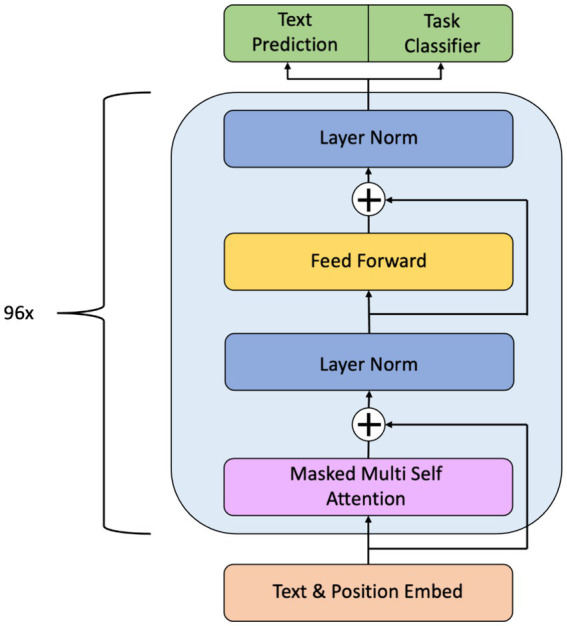

Figure 1.

Overall architecture of GPT-3. The model is a stack of transformer decoder layers, each of which further refines the representation of the input text. The embedding layer converts the input tokens into numerical vectors. Within each decoder layer, the masked multi-head self-attention sublayer captures the relationships between tokens in the input sequence, with each token attending only to itself and earlier tokens; the feed-forward sublayer captures complex patterns in the resulting representations; and the normalization layer stabilizes training.
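To make the roles of the components named in the caption concrete, the following is a minimal NumPy sketch of an embedding step followed by a single decoder layer. It is an illustration under stated assumptions, not the actual GPT-3 implementation: the tiny dimensions, the random weights, the helper names (`embed`, `decoder_block`, and so on), the learned positional table, the GELU activation, the placement of normalization before each sublayer, and the omission of biases and of learned scale/shift in the normalization are all choices made here for brevity.

```python
import numpy as np

rng = np.random.default_rng(0)

def embed(tokens, tok_emb, pos_emb):
    # Embedding layer: map token ids to vectors and add position vectors (assumed learned).
    return tok_emb[tokens] + pos_emb[: len(tokens)]

def layer_norm(x, eps=1e-5):
    # Normalization: zero mean, unit variance per token (learned scale/shift omitted).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def masked_self_attention(x, w_qkv, w_out, n_heads):
    seq_len, d_model = x.shape
    d_head = d_model // n_heads
    q, k, v = np.split(x @ w_qkv, 3, axis=-1)  # each (seq_len, d_model)

    def split_heads(t):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(q), split_heads(k), split_heads(v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    # Causal mask: position i may not attend to positions j > i.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    weights = softmax(scores)  # (n_heads, seq_len, seq_len)
    merged = (weights @ v).transpose(1, 0, 2).reshape(seq_len, d_model)
    return merged @ w_out, weights

def gelu(x):
    # Tanh approximation of GELU (activation choice is an assumption of this sketch).
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))

def feed_forward(x, w_up, w_down):
    # Position-wise feed-forward network applied to every token independently.
    return gelu(x @ w_up) @ w_down

def decoder_block(x, p, n_heads):
    # Normalize, apply the sublayer, then add the result back (residual connection).
    attn_out, weights = masked_self_attention(
        layer_norm(x), p["w_qkv"], p["w_out"], n_heads
    )
    x = x + attn_out
    x = x + feed_forward(layer_norm(x), p["w_up"], p["w_down"])
    return x, weights

# Toy sizes, chosen only for illustration.
vocab_size, max_len, d_model, n_heads = 50, 8, 16, 4
tok_emb = rng.normal(0.0, 0.02, (vocab_size, d_model))
pos_emb = rng.normal(0.0, 0.02, (max_len, d_model))
params = {
    "w_qkv": rng.normal(0.0, 0.02, (d_model, 3 * d_model)),
    "w_out": rng.normal(0.0, 0.02, (d_model, d_model)),
    "w_up": rng.normal(0.0, 0.02, (d_model, 4 * d_model)),
    "w_down": rng.normal(0.0, 0.02, (4 * d_model, d_model)),
}

tokens = np.array([3, 14, 15, 9, 2])  # hypothetical token ids
out, weights = decoder_block(embed(tokens, tok_emb, pos_emb), params, n_heads)
print(out.shape, weights.shape)
```

A full model would stack many such blocks and project the final vectors back onto the vocabulary. The causal mask is what makes the layer a decoder: changing a later token leaves the outputs at all earlier positions unchanged.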