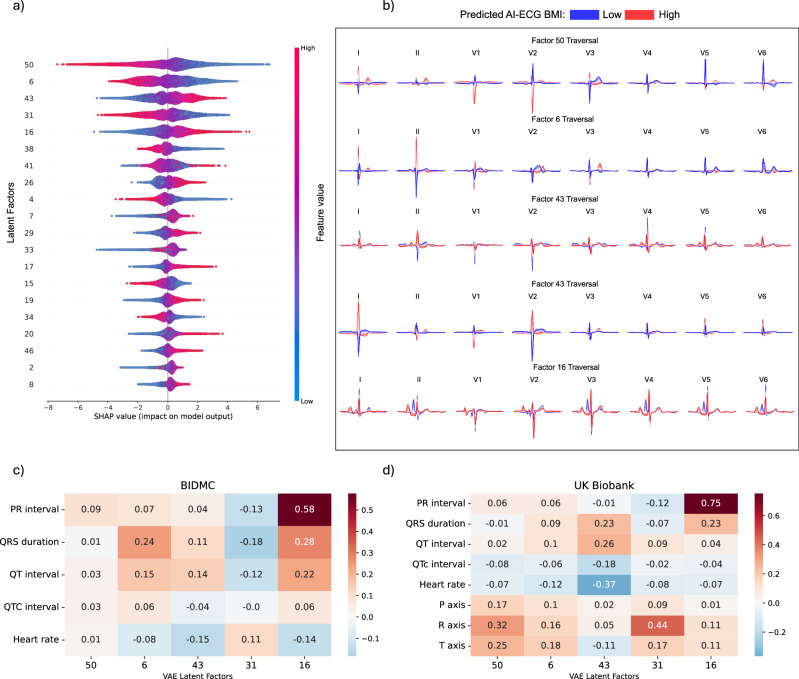

Fig. 9. Explainable AI in ECG morphology.

Explainable ECG morphology: An XGBoost model was trained on variational autoencoder (VAE)-derived latent factors to estimate the AI-ECG-derived BMI predictions. a Depicts a beeswarm plot of the 20 most influential latent factors, ordered by feature importance derived from the SHAP (SHapley Additive exPlanations) values. Each dot represents a SHAP value for a specific latent factor, showing both how strongly that factor influences the AI-ECG BMI prediction and in which direction. For example, for latent factor 50, lower values of the latent factor (in blue) have a positive impact on the AI-ECG BMI estimation, resulting in higher BMI predictions, while higher values (in red) have a negative impact, resulting in lower BMI predictions. b Illustrates the latent traversals of the top 5 latent factors and their impact on the ECG morphology. ECG morphologies corresponding to high and low AI-ECG BMI predictions are shown in red and blue, respectively. c and d Show correlation heatmaps between ECG parameters and the VAE-derived latent factors for the BIDMC and UK Biobank cohorts, respectively.
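The sign convention described for panel a can be illustrated with a minimal sketch that computes exact Shapley values for a toy model. Everything in it is an assumption made for illustration: `toy_bmi_model` is a hypothetical linear stand-in for the trained XGBoost model, the two inputs stand in for latent factors, and the background samples are invented. It is not the authors' pipeline, which would typically rely on a tree-specific SHAP explainer rather than brute-force enumeration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, background):
    """Exact Shapley values for one sample `x`.

    Features outside a coalition are replaced by values from each
    background sample and the predictions are averaged.
    """
    n = len(x)

    def value(subset):
        # Mean prediction when only the features in `subset` are fixed to x.
        total = 0.0
        for b in background:
            z = [x[i] if i in subset else b[i] for i in range(n)]
            total += predict(z)
        return total / len(background)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contrib = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for s in combinations(others, k):
                contrib += weight * (value(set(s) | {i}) - value(set(s)))
        phi.append(contrib)
    return phi


def toy_bmi_model(z):
    # Hypothetical model: the prediction falls as factor 0 rises,
    # mirroring the behaviour described for latent factor 50.
    return 27.0 - 2.0 * z[0] + 0.5 * z[1]


# Invented background samples (each feature has mean 0).
background = [[-1.0, 0.0], [0.0, 1.0], [1.0, -1.0], [0.0, 0.0]]

low = shapley_values(toy_bmi_model, [-1.5, 0.0], background)
high = shapley_values(toy_bmi_model, [1.5, 0.0], background)

print("low factor value  -> SHAP for factor 0:", low[0])
print("high factor value -> SHAP for factor 0:", high[0])
```

In this toy setting a low value of factor 0 receives a positive Shapley value (it pushes the prediction above the background mean) and a high value receives a negative one, which is the pattern a beeswarm plot encodes as blue dots on the right and red dots on the left.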