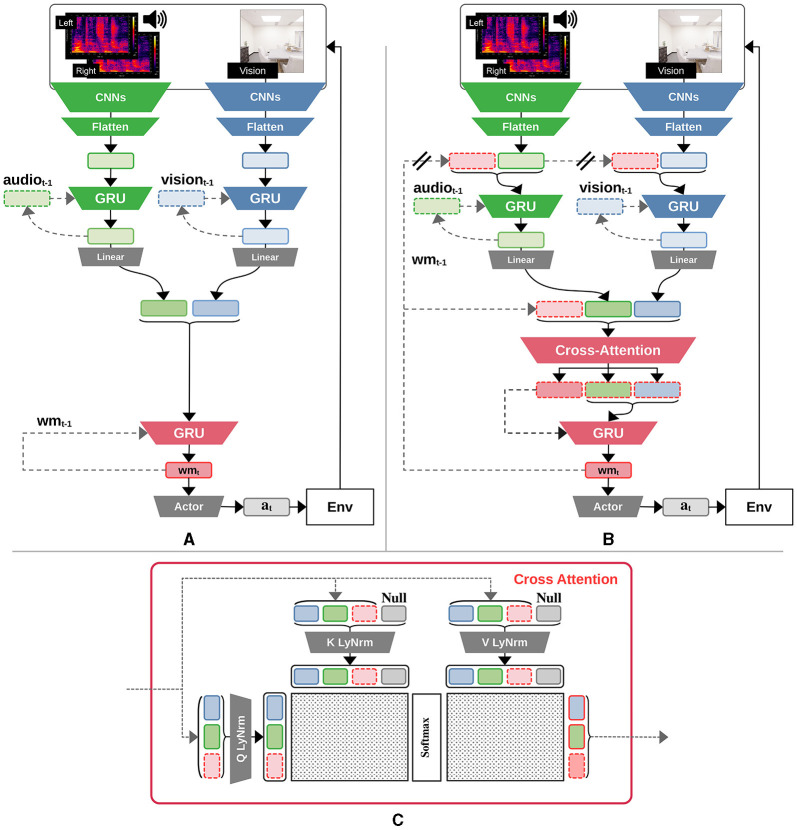

Figure 1.

Diagram of agent architectures. (A) Baseline GRU agent with recurrent encoders. (B) Global-workspace agent satisfying all RPT and GWT indicator properties. In each modality's recurrent encoder, double bars mark the path that carries the previous step's working memory; they denote the gradient-stopping operation applied during training, which was required to stabilize learning for this agent variant. (C) The proposed cross-attention mechanism takes as input the previous step's working memory and the current visual and acoustic features, which serve as query sources. It then computes an attention mask over each of these items together with a null input, so a single operation combines bottom-up, cross, self, and top-down attention. Intuitively, the attention mask determines which information to incorporate from each input.
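The role of the null input in panel (C) can be illustrated with a minimal sketch of scaled dot-product attention over the candidate items. This is not the authors' implementation. It assumes that a single query vector attends over the previous working memory, the visual features, the acoustic features, and an all-zero null item, and it omits learned key, value, and query projections. The function `cross_attend` and its arguments are hypothetical names. Because the null item contributes nothing to the output, any weight assigned to it lets the mechanism incorporate less information from the real inputs.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(
    query: Vector, memory: Vector, visual: Vector, acoustic: Vector
) -> Tuple[List[float], Vector]:
    """Hypothetical sketch: attend over [memory, visual, acoustic, null].

    Assumptions (not from the source): keys and values are the raw item
    vectors (no learned projections), and the null input is an all-zero
    vector, so its score is always 0 and its value adds nothing.
    Returns (attention weights over the four items, attended output).
    """
    dim = len(query)
    null = [0.0] * dim  # null input: attending here means "take nothing"
    items = [memory, visual, acoustic, null]
    scale = 1.0 / math.sqrt(dim)  # standard scaled dot-product factor
    scores = [dot(query, item) * scale for item in items]
    weights = softmax(scores)  # the "attention mask" over the items
    # Weighted sum of the items, computed one output dimension at a time.
    out = [sum(w * item[i] for w, item in zip(weights, items)) for i in range(dim)]
    return weights, out


if __name__ == "__main__":
    w, o = cross_attend(query=[1.0, 0.0], memory=[2.0, 0.0],
                        visual=[0.0, 2.0], acoustic=[-2.0, 0.0])
    print("weights:", [round(x, 3) for x in w])
    print("output:", [round(x, 3) for x in o])
```

In this toy call the memory item aligns with the query and receives the largest weight. The visual item is orthogonal to the query and ties with the null item, and the acoustic item points away from the query and receives the smallest weight. A learned null embedding, rather than a fixed zero vector, would let the model tune how readily it ignores all inputs.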