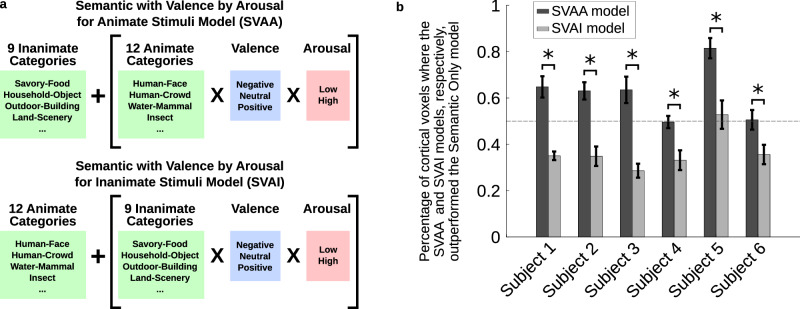

Fig. 3. Cortical tuning to stimulus affective content is greatest for animate stimuli.

a We examined the extent to which including the valence and arousal of either animate or inanimate stimuli improved model fit over and above modeling semantic category information alone. To investigate this, we constructed two additional models. The Semantic with Valence by Arousal for Animate Stimuli (SVAA) model includes features for each semantic category, but only stimuli belonging to animate semantic categories are also labeled for valence and arousal. The Semantic with Valence by Arousal for Inanimate Stimuli (SVAI) model also includes features for each semantic category, but only stimuli belonging to inanimate semantic categories are labeled for valence and arousal.

b Model comparison was performed using the same cortical mask as was used to compare the CSVA model against the Semantic Only and Valence by Arousal models. The plot shows the percentage of voxels in which the SVAA and SVAI models, respectively, outperformed the Semantic Only model (± SEM across bootstrap samples). Data are presented separately for each subject. The number of voxels included in this analysis was as follows: subject 1, n = 6777; subject 2, n = 6450; subject 3, n = 5483; subject 4, n = 6596; subject 5, n = 6580; subject 6, n = 6618 (see Methods and Table S1). For all six subjects, labeling valence and arousal features for animate images improved model fit to a greater extent than labeling them for inanimate images (*significant at p = 0.05, two-tailed confidence interval test with SEM calculated from 1000 bootstrap resamples). Because model performance is assessed on a validation dataset that is separate from the estimation dataset, adding regressors that capture noise rather than genuine signal lowers prediction accuracy. This likely explains the poorer performance of the SVAI model relative to the Semantic Only model.
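To make the difference between the SVAA and SVAI feature spaces concrete, the following builds both design matrices from per-stimulus labels. It is a minimal sketch under stated assumptions, not the study's feature coding: the category names, the animate/inanimate split, the discretization of valence into three levels and arousal into two, and the function `design_matrix` are all hypothetical, and the actual models may code valence by arousal differently and apply further steps before fitting.

```python
import numpy as np

# Hypothetical label inventory (assumption): the study's real categories and
# valence/arousal levels are described in its Methods, not here.
CATEGORIES = ["human", "animal", "object", "scene"]
ANIMATE = {"human", "animal"}
VALENCE_LEVELS = ["negative", "neutral", "positive"]
AROUSAL_LEVELS = ["low", "high"]


def design_matrix(stimuli, labeled_categories):
    """Build a one-hot design matrix from (category, valence, arousal) tuples.

    Every stimulus gets a semantic-category indicator. Only stimuli whose
    category is in `labeled_categories` also get a valence-by-arousal
    indicator; all other stimuli have zeros in those columns.
    """
    va_pairs = [(v, a) for v in VALENCE_LEVELS for a in AROUSAL_LEVELS]
    X = np.zeros((len(stimuli), len(CATEGORIES) + len(va_pairs)))
    for i, (category, valence, arousal) in enumerate(stimuli):
        X[i, CATEGORIES.index(category)] = 1.0
        if category in labeled_categories:
            X[i, len(CATEGORIES) + va_pairs.index((valence, arousal))] = 1.0
    return X


INANIMATE = set(CATEGORIES) - ANIMATE
stimuli = [("human", "positive", "high"), ("object", "negative", "low")]
X_svaa = design_matrix(stimuli, ANIMATE)    # affect labeled for animate only
X_svai = design_matrix(stimuli, INANIMATE)  # affect labeled for inanimate only
```

Both models share the same columns; they differ only in which rows are allowed to carry nonzero valence-by-arousal entries, so any difference in fit reflects where the affective labels are applied.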
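One plausible reading of the panel b statistics can be sketched as follows: for each bootstrap sample, compute the percentage of voxels in which a model's validation accuracy exceeds that of the Semantic Only model; take the standard deviation of that percentage across bootstrap samples as its standard error; and call the SVAA versus SVAI difference significant when mean ± 1.96 × SE excludes zero. The accuracy arrays are synthetic, the resampling that would produce them is not shown, and the helper names are hypothetical; the Methods section, not this sketch, defines the actual procedure.

```python
import numpy as np


def percent_voxels_better(scores_a, scores_b):
    """Percentage of voxels where model A beats model B, per bootstrap sample.

    scores_a, scores_b: arrays of shape (n_boot, n_voxels) holding a validation
    accuracy for each bootstrap sample and voxel.
    """
    return 100.0 * np.mean(scores_a > scores_b, axis=1)


def ci_test(stat_1, stat_2, z=1.96):
    """Two-tailed confidence interval test on paired bootstrap statistics.

    The bootstrap standard error is the standard deviation of the paired
    difference across bootstrap samples. The difference is significant when
    mean +/- z * SE excludes zero (z = 1.96 for a two-tailed 0.05 level).
    """
    diff = stat_1 - stat_2
    mean_diff = diff.mean()
    se = diff.std(ddof=1)
    return mean_diff, se, abs(mean_diff) > z * se


# Synthetic accuracies (hypothetical, for illustration only).
rng = np.random.default_rng(0)
n_boot, n_vox = 200, 2000
semantic = rng.normal(0.20, 0.05, size=(n_boot, n_vox))
svaa = semantic + rng.normal(0.01, 0.02, size=(n_boot, n_vox))
svai = semantic + rng.normal(-0.01, 0.02, size=(n_boot, n_vox))

pct_svaa = percent_voxels_better(svaa, semantic)
pct_svai = percent_voxels_better(svai, semantic)
mean_diff, se, significant = ci_test(pct_svaa, pct_svai)
```

Pairing the two percentages within each bootstrap sample before taking the standard deviation keeps the comparison matched, since both models are scored on the same resampled data.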
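The closing point, that evaluating on held-out data penalizes regressors that fit noise, can be illustrated with a small simulation. This sketch uses ordinary least squares from NumPy on synthetic data as a stand-in; it is not the study's estimator, and the sample sizes and the helper `validation_r2` are illustrative assumptions. Adding 80 regressors unrelated to the response can only improve the least-squares fit to the estimation set, but here it lowers R² on the validation set.

```python
import numpy as np


def validation_r2(X_train, y_train, X_val, y_val):
    """Fit OLS on the estimation set and return R^2 on the validation set."""
    beta, *_ = np.linalg.lstsq(X_train, y_train, rcond=None)
    resid = y_val - X_val @ beta
    return 1.0 - np.sum(resid**2) / np.sum((y_val - y_val.mean()) ** 2)


rng = np.random.default_rng(0)
n_train, n_val, n_signal, n_noise = 120, 1000, 3, 80


def simulate(n):
    """Draw signal regressors, pure-noise regressors, and a response."""
    X_sig = rng.normal(size=(n, n_signal))
    X_noise = rng.normal(size=(n, n_noise))  # unrelated to y by construction
    y = X_sig @ np.ones(n_signal) + rng.normal(size=n)
    return X_sig, X_noise, y


Xs_tr, Xn_tr, y_tr = simulate(n_train)
Xs_va, Xn_va, y_va = simulate(n_val)

r2_signal_only = validation_r2(Xs_tr, y_tr, Xs_va, y_va)
r2_with_noise = validation_r2(
    np.hstack([Xs_tr, Xn_tr]), y_tr, np.hstack([Xs_va, Xn_va]), y_va
)
```

Regularized estimators shrink this penalty without necessarily removing it, so extra features that carry little genuine signal can still leave a larger model behind a smaller one on validation data.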