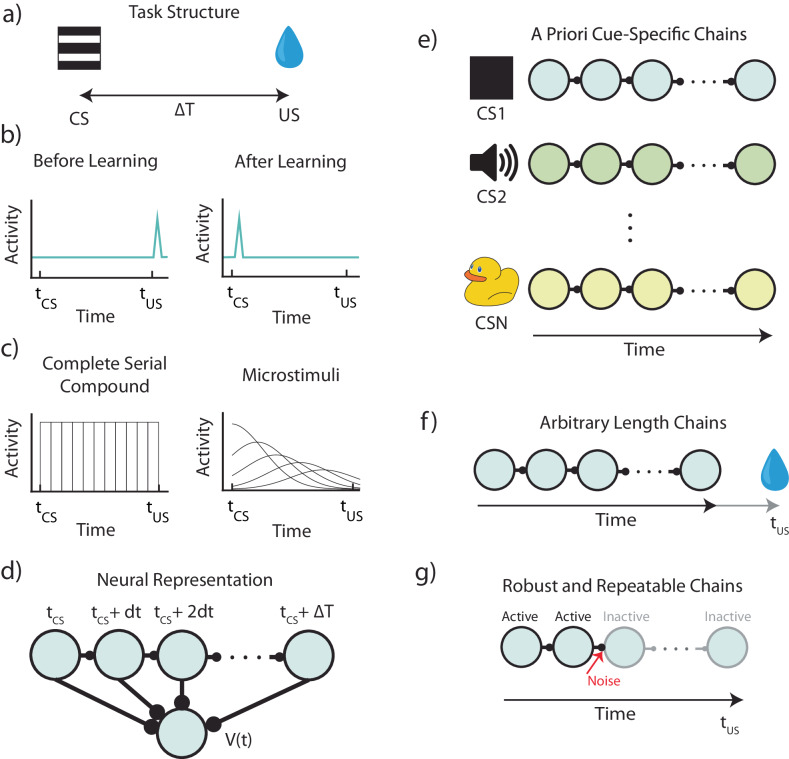

Fig. 1. Structure and assumptions of temporal bases for temporal difference learning.

a Diagram of a simple trace conditioning task. A conditioned stimulus (CS) such as a visual grating is paired, after a delay ΔT, with an unconditioned stimulus (US) such as a water reward. b According to the canonical view, dopaminergic (DA) neurons in the ventral tegmental area (VTA) respond only to the US before training, and only to the CS after training. c To represent the delay period, temporal difference (TD) models generally assume neural “microstates” that span the time between cue and reward. In the simplest case, the complete serial compound (left), the microstates do not overlap, and each one uniquely represents a different interval. In general, though (e.g., microstimuli, right), these microstates can overlap with each other and decay over time. d A weighted sum of these microstates determines the learned value function V(t). e An agent does not know a priori which cue will subsequently be paired with reward. Microstate TD models therefore implicitly assume that each of the N unique cues or experiences in an environment has its own independent chain of microstates before learning. f Rewards delivered after the end of a particular cue-specific chain cannot be paired with the cue in question. The chosen length of the chain therefore determines the temporal window of possible associations. g Microstate chains are assumed to be reliable and robust, but realistic levels of neural noise, drift, and variability can interrupt their propagation, thereby disrupting their ability to associate cue and reward.
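
As a rough illustration of panels c, d, and f, the following Python sketch builds a non-overlapping complete serial compound basis and an overlapping, decaying microstimulus-style basis, computes V(t) as a weighted sum of microstates, and runs a plain TD(0) update on a single cue-reward pairing. The function names, the Gaussian-on-a-decaying-trace form of the overlapping basis, and all parameter values (`decay`, `sigma`, `alpha`, `gamma`, chain length) are assumptions chosen for illustration and are not taken from the figure.

```python
import numpy as np

def csc_basis(n_steps):
    """Complete serial compound: one non-overlapping microstate per time step."""
    return np.eye(n_steps)

def microstimulus_basis(n_steps, n_states, decay=0.985, sigma=0.08):
    """Overlapping, decaying microstates (illustrative form, assumed here).

    Gaussian bumps are placed along an exponentially decaying memory trace
    and scaled by the trace height, so later microstates are weaker.
    Returns an array of shape (n_steps, n_states).
    """
    trace = decay ** np.arange(n_steps)              # equals 1 at cue onset
    centers = np.arange(1, n_states + 1) / n_states  # bump centers in (0, 1]
    diff = trace[:, None] - centers[None, :]
    return trace[:, None] * np.exp(-diff**2 / (2 * sigma**2))

def value(basis, weights):
    """V(t) as a weighted sum of the microstates active at each time step."""
    return basis @ weights

def train_td(basis, reward_step, n_trials=500, alpha=0.2, gamma=0.98):
    """TD(0) on repeated trials with the cue at t = 0 and reward at reward_step.

    Microstates are zero after the chain ends, so a reward that arrives
    later than the chain length has no active feature to be credited to.
    """
    n_chain, n_states = basis.shape
    trial_len = max(n_chain, reward_step + 1)
    x = np.zeros((trial_len + 1, n_states))  # zero features beyond the chain
    x[:n_chain] = basis
    w = np.zeros(n_states)
    for _ in range(n_trials):
        for t in range(trial_len):
            r = 1.0 if t == reward_step else 0.0
            delta = r + gamma * (x[t + 1] @ w) - x[t] @ w  # TD error
            w += alpha * delta * x[t]
    return w

chain = csc_basis(20)
w_inside = train_td(chain, reward_step=15)   # reward falls within the chain
w_outside = train_td(chain, reward_step=30)  # reward falls after the chain ends
v_inside = value(chain, w_inside)
v_outside = value(chain, w_outside)
```

Under these assumptions, `v_inside` rises toward the reward time and is already positive at cue onset, whereas `v_outside` stays at zero because no microstate is active when the reward arrives, which is the limitation panel f describes.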