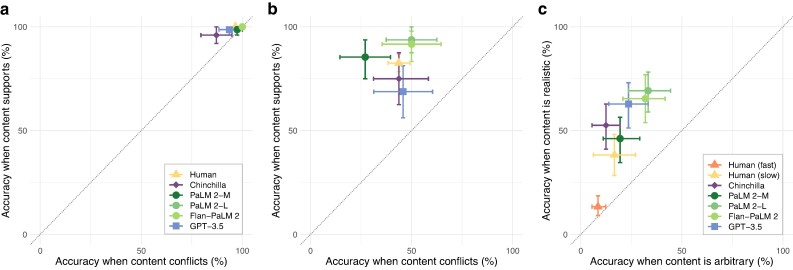

Fig. 2.

Across the three tasks we consider, various language models and humans show similar patterns of overall accuracy and similar directions of content effects on reasoning. The vertical axis shows accuracy when the content of the problems supports the logical inference. The horizontal axis shows accuracy when the content conflicts with it (or, in the Wason task, when the content is arbitrary). Thus, points above the diagonal indicate an advantage when the content supports the logical inference. a) On basic natural language inference (NLI) problems, both humans and LMs achieve high accuracy across all conditions, and thus show relatively little effect of content. b) When judging whether syllogisms are logically valid or invalid, both humans and LMs exhibit moderate accuracy and substantial content effects. c) On the Wason selection task, most humans perform fairly poorly overall. However, the subset of subjects who take the longest to answer show somewhat higher accuracy, primarily on the realistic tasks, i.e., substantial content effects. On this difficult task, LMs generally exceed humans in both accuracy and magnitude of content effects. (Throughout, error bars are bootstrap 95% CIs.)
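The quantities plotted above can be illustrated with a minimal sketch. It assumes each condition is scored as a list of per-item outcomes (1 = correct, 0 = incorrect). It takes the content effect to be the vertical minus the horizontal accuracy, which is positive exactly when a point lies above the diagonal. The caption does not state which bootstrap variant or resampling unit was used, so this sketch assumes a percentile bootstrap over items. The function names are hypothetical and not taken from the authors' code.

```python
import random
from typing import Sequence, Tuple


def accuracy(correct: Sequence[int]) -> float:
    """Fraction of items answered correctly (entries are 1 for correct, 0 for incorrect)."""
    return sum(correct) / len(correct)


def content_effect(supporting: Sequence[int], conflicting: Sequence[int]) -> float:
    """Accuracy when content supports the inference minus accuracy when it conflicts.

    A positive value corresponds to a point above the diagonal in the figure.
    """
    return accuracy(supporting) - accuracy(conflicting)


def bootstrap_ci(correct: Sequence[int], n_boot: int = 10_000,
                 alpha: float = 0.05, seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap CI for accuracy (assumed variant), resampling items with replacement."""
    rng = random.Random(seed)
    n = len(correct)
    # Recompute accuracy on n_boot resamples of the items, then sort the results.
    stats = sorted(
        accuracy([correct[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_boot)
    )
    # Take the alpha/2 and 1 - alpha/2 empirical quantiles as the interval bounds.
    lo = stats[int((alpha / 2) * n_boot)]
    hi = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lo, hi
```

For example, `content_effect([1, 1, 1, 0], [1, 0, 0, 0])` gives 0.5, meaning accuracy is 50 percentage points higher when the content supports the logic. Resampling items is only one reasonable choice. Resampling subjects or model samples would instead capture variability across responders.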