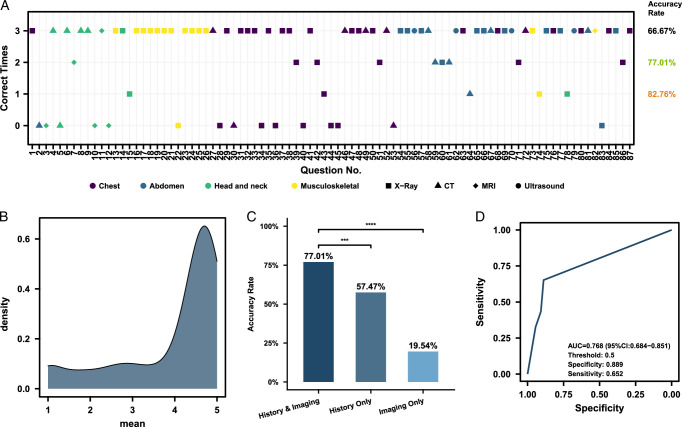

Figure 1.

Evaluating the Performance of the Multimodal ChatGPT-4V in Interpreting Radiological Images for Diagnosis and Formulating Treatment Plans. (A) Performance of multimodal ChatGPT-4V on USMLE-style questions. Shapes represent imaging modalities, and colors represent anatomical regions. The annotations on the right show the accuracy under three criteria, from top to bottom: a question counts as answered correctly only if all 3 attempts are correct; if at least 2 of the 3 attempts are correct; and if at least 1 of the 3 attempts is correct. (B) Difference in accuracy of multimodal ChatGPT-4V on USMLE-style questions presented with both image and history, with history only, and with image only. ***P ≤ 0.001; ****P ≤ 0.0001. (C) Scores of multimodal ChatGPT-4V on USMLE-style questions involving treatment plan formulation. The score for each question is the average of the scores given by three reviewers. (D) ROC curve of multimodal ChatGPT-4V in detecting the presence of abnormalities on chest radiographs from the ChestX-ray8 database. Each attempt is scored 1 if ChatGPT-4V reports an abnormality and 0 if it reports none; the scores from the three attempts are summed to give an overall rating for each image. AUC, area under the receiver operating characteristic curve; ROC, receiver operating characteristic.
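The scoring rules described for panels (A) and (D) can be illustrated with a minimal Python sketch. It is not the authors' analysis code: the function names `accuracy_by_criterion` and `auc_from_scores` and all data values are hypothetical, and the AUC is computed with the standard rank-based (Mann-Whitney) formulation, which may differ from the software used for the figure.

```python
from typing import Sequence, Tuple


def accuracy_by_criterion(attempts: Sequence[Tuple[int, int, int]],
                          min_correct: int) -> float:
    """Fraction of questions with at least `min_correct` correct attempts.

    Each element of `attempts` holds three 0/1 flags, one per attempt.
    """
    hits = sum(1 for a in attempts if sum(a) >= min_correct)
    return hits / len(attempts)


def auc_from_scores(scores: Sequence[int], labels: Sequence[int]) -> float:
    """AUC as the probability that a positive case outscores a negative one.

    Ties contribute 0.5, which matters here because summed scores only
    take the values 0, 1, 2, or 3.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical correctness flags for four questions, three attempts each.
attempts = [(1, 1, 1), (1, 0, 1), (0, 0, 1), (0, 0, 0)]
strict = accuracy_by_criterion(attempts, 3)    # all 3 attempts correct
majority = accuracy_by_criterion(attempts, 2)  # at least 2 of 3 correct
lenient = accuracy_by_criterion(attempts, 1)   # at least 1 of 3 correct

# Hypothetical per-attempt abnormality calls (1 = abnormal, 0 = normal)
# for six radiographs, with made-up ground-truth labels.
votes = [(1, 1, 1), (1, 1, 0), (0, 0, 1), (0, 0, 0), (1, 0, 0), (1, 1, 1)]
labels = [1, 1, 0, 0, 1, 0]
scores = [sum(v) for v in votes]  # summed score per image, range 0 to 3
auc = auc_from_scores(scores, labels)

print(strict, majority, lenient, round(auc, 3))
```

Because the summed score has only four possible values, the resulting ROC curve has at most three interior operating points, one for each threshold between adjacent score values.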