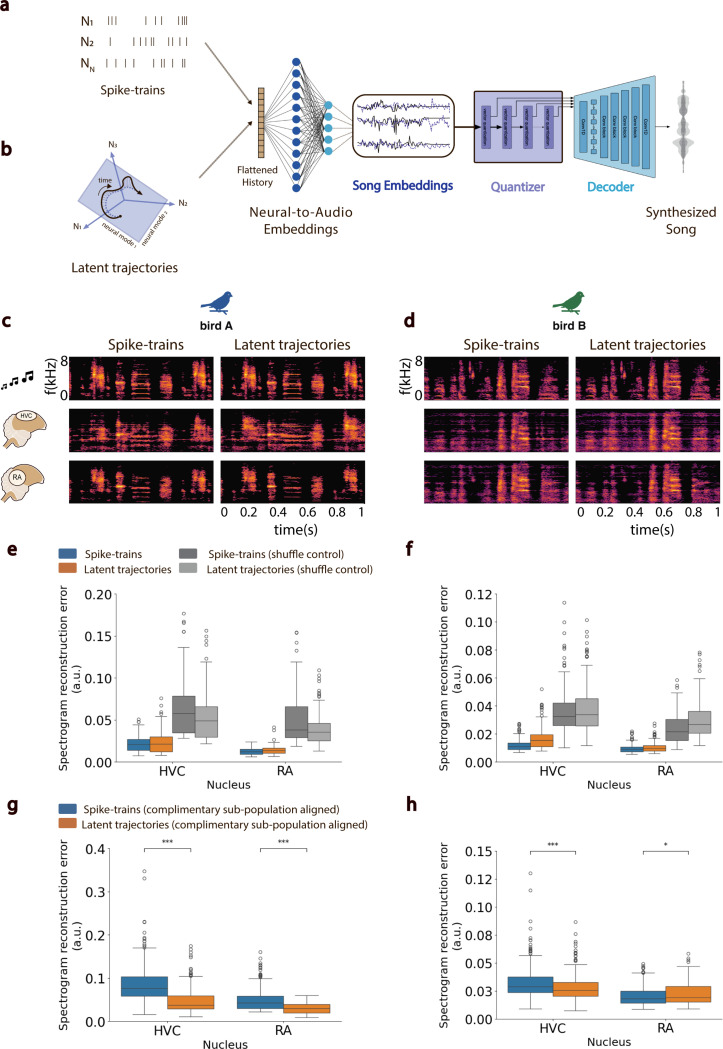

Fig. 7. Brain-to-Song Decoder.

a-b. Architecture of the proposed decoder featuring a Feed-Forward Neural Network that predicts song embeddings from spike-trains (a) or neural latents (b), integrated with Quantizer-Decoder networks from a pre-trained EnCodec model. c-d. Spectrogram of a one-second sample of a bird’s own song (BOS, top row), compared with songs synthesized from HVC spike-trains (middle left), HVC neural latents (middle right), RA spike-trains (lower left), and RA neural latents (lower right) for two distinct birds (c) and (d). e-f. Boxplots depicting the mean squared error in spectrogram reconstruction across synthesized song examples from spike-trains (blue) and neural latents (orange), including shuffle controls for the birds shown in (c) and (d). g-h. Latent stability analysis results. Boxplots show the mean squared error in spectrogram reconstruction of songs synthesized using a model trained on a randomly selected half of a neural population and evaluated on the complementary half, whose latents were aligned with those of the original half using Canonical Correlation Analysis. *** denotes p-value < 0.001; * denotes p-value < 0.05 (Student’s t-test).
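
The split-half logic behind panels g-h can be illustrated with a minimal sketch. It is not the authors' pipeline: the latents are simulated, a linear least-squares decoder stands in for the Feed-Forward Neural Network, no EnCodec model is involved, and the error is measured on the stand-in embeddings rather than on spectrograms. All names and sizes (`fit_cca_map`, `lat_a`, `lat_b`, `T`, `k`, `d`) are hypothetical. The sketch trains a decoder on latents from one half-population, then evaluates it on latents from the complementary half with and without CCA alignment.

```python
import numpy as np

rng = np.random.default_rng(0)
T, k, d = 2000, 3, 8  # time bins, latent dims, embedding dims (illustrative)

def random_rotation(n):
    """Random orthogonal matrix from the QR factorization of a Gaussian matrix."""
    q, _ = np.linalg.qr(rng.standard_normal((n, n)))
    return q

# Simulated shared dynamics, seen in different coordinates by each half-population.
Z = rng.standard_normal((T, k))
lat_a = Z @ random_rotation(k) + 0.1 * rng.standard_normal((T, k))
lat_b = Z @ random_rotation(k) + 0.1 * rng.standard_normal((T, k))
emb = Z @ rng.standard_normal((k, d))  # stand-in for song embeddings

train, test = slice(0, 1500), slice(1500, T)

def fit_cca_map(src, dst):
    """Fit CCA (QR + SVD) and return (src_mean, M, dst_mean) such that
    (x - src_mean) @ M + dst_mean expresses src latents in dst coordinates."""
    mu_s, mu_d = src.mean(0), dst.mean(0)
    q_s, r_s = np.linalg.qr(src - mu_s)
    q_d, r_d = np.linalg.qr(dst - mu_d)
    u, _, vt = np.linalg.svd(q_d.T @ q_s)
    # Canonical weights: W_d = r_d^{-1} u and W_s = r_s^{-1} v; M = W_s @ W_d^{-1}.
    M = np.linalg.solve(r_s, vt.T) @ u.T @ r_d
    return mu_s, M, mu_d

def fit_decoder(lat, target):
    """Linear least-squares decoder with a bias term."""
    X = np.hstack([lat, np.ones((len(lat), 1))])
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return coef

def predict(coef, lat):
    return np.hstack([lat, np.ones((len(lat), 1))]) @ coef

def mse(a, b):
    return float(np.mean((a - b) ** 2))

# Train the decoder on half A only, and fit the alignment on training bins only.
coef = fit_decoder(lat_a[train], emb[train])
mu_b, M, mu_a = fit_cca_map(lat_b[train], lat_a[train])
aligned_b = (lat_b[test] - mu_b) @ M + mu_a

mse_same = mse(predict(coef, lat_a[test]), emb[test])
mse_aligned = mse(predict(coef, aligned_b), emb[test])
mse_unaligned = mse(predict(coef, lat_b[test]), emb[test])
print(f"same half: {mse_same:.3f}  aligned: {mse_aligned:.3f}  unaligned: {mse_unaligned:.3f}")
```

In this toy setting the aligned error should sit close to the same-half error, while the unaligned error is much larger. That contrast is the kind of comparison a latent stability analysis relies on.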