Abstract

BACKGROUND:

To adapt to threats in the environment, animals must predict them and engage in defensive behavior. While the representation of a prediction error signal for reward has been linked to dopamine, a neuromodulatory prediction error for aversive learning has not been identified.

METHODS:

We measured and manipulated norepinephrine release during threat learning using optogenetics and a novel fluorescent norepinephrine sensor.

RESULTS:

We found that norepinephrine response to conditioned stimuli reflects aversive memory strength. When delays between auditory stimuli and footshock are introduced, norepinephrine acts as a prediction error signal. However, temporal difference prediction errors do not fully explain norepinephrine dynamics. To explain noradrenergic signaling, we used an updated reinforcement learning model with uncertainty about time and found that it explained norepinephrine dynamics across learning and variations in temporal and auditory task structure.

CONCLUSIONS:

Norepinephrine thus combines cognitive and affective information into a predictive signal and links time with the anticipation of danger.

Individuals must anticipate and respond to threats that exist along a continuum of intensity and proximity (1,2). Such behavioral flexibility relies on accurate computation of threat across timescales and under uncertainty. This can be accomplished by predictive modeling of the outside world (3,4).

Threat learning involves the neuromodulation of areas such as amygdala, prefrontal cortex, and hippocampus (5–10). Norepinephrine (NE) enhances sensory perception and arousal by modulating neuronal firing threshold, neural gain, and synaptic efficacy (11–14). Moreover, NE is critical for fear learning and reconsolidation (15–19). The frontal cortex is an important target for NE, having the highest density of NE terminals within the cortex (20–22). Regions of the medial prefrontal cortex facilitate emotional threat responses, and these responses themselves are modulated by NE (17,19,23).

Neuromodulators play critical roles in computational models of learning. For example, dopamine neuron firing can approximate a reward prediction error in the temporal difference (TD) model of learning (24,25). However, despite the clear relevance of threat computation to behavior, a neuromodulatory threat prediction error signal has not been identified, and it similarly has not been refined to include complex cognition, leaving a major gap in our understanding of motivated behaviors.

We sought such a signal in NE, reasoning that it has validated roles in both stress and attention (14,26), which nonetheless lack formal computational links. Recent studies used fluorescent G protein–coupled receptor activation–based norepinephrine (GRABNE) sensors to identify aversive and appetitive learning NE signals (27,28). However, in contrast to the mapping of dopamine to reinforcement learning models, a gap exists in the computational understanding of NE within aversive prediction, limiting our knowledge of negative emotional states. To fill this gap, we compared NE release dynamics in frontal cortex during imminent and delayed threat perception to reinforcement learning models.

METHODS AND MATERIALS

Animals

In this study, 79 C57BL/6J mice (47 male, 32 female) along with 8 DBH-Cre+ mice (5 male, 3 female) and 7 DBH-Cre− littermates (4 male, 3 female) were used, of which 16 were excluded. Surgeries were performed at 9 to 11 weeks of age, and behavioral experiments were conducted at 13 to 23 weeks of age. To maintain an internal control for circadian effects, mice were usually run in the same order each day during the light cycle (7 am–7 pm). All mouse procedures were performed in accordance with the protocol approved by the Yale Institutional Animal Care and Use Committee.

Surgeries

For NE measurements, 0.5 μL of AAV9-hSyn-NE2h virus (WZ Biosciences Inc.) (1 × 10¹³ genome copies/mL) was injected into the medial frontal cortex (anteroposterior +1.6–2.1 mm, mediolateral ±0.3 mm, dorsoventral 1.3 mm below dura). For optogenetics, 0.8 μL of AAV5-EF1a-double floxed-hChR2 (H134R)-mCherry virus (Addgene) (1 × 10¹³ genome copies/mL) was injected bilaterally into the locus coeruleus (LC) (anteroposterior −1.0 mm, mediolateral ±1.0 mm from lambda, dorsoventral 3.2–3.4 mm below dura). Fiberoptic implants (0.2-mm core) (Neurophotometrics LLC) were placed at the viral injection coordinates (3.3 mm below dura for LC), then secured with quick adhesive cement (C&B Metabond; Parkell). Mice were given carprofen (5 mg/kg) for 2 days after surgery. We note that the optogenetic strategy used has the potential for ectopic expression in subcoeruleus NE neurons (29), but that the viral strategy used has shown approximately 80% efficacy and specificity for LC NE neurons (30).

Behavior

Mice were handled for 3 minutes per day for 2 days before behavioral assays, except for some mice in the 35-second cue and quieting cue experiments, which were not handled because of logistical constraints. Behavioral assays were conducted in an aversive conditioning chamber (Med Associates Inc.), with video recorded throughout via an infrared-sensitive camera.

For GRABNE recordings, behavior assessment was conducted as follows:

Day 1: 10 auditory cue presentations (5.5 kHz for forward conditioning, 9 kHz for trace conditioning, 80 dBA, 60–120 second intertrial interval between tone onsets)

Days 2 and 3: 10 pairings each of this cue with footshock

Day 4: 10 presentations of the conditioned tone

For optogenetics, assessment was as follows:

Day 1: 3 pairings of cue (9 kHz, 80 dBA, 10 seconds) with footshock 5 seconds after tone offset (1 second, 0.3 mA) simultaneous with light delivery (10 mW, 10 Hz, 20-ms pulses)

Days 2 and 3: 10 cue presentations

Freezing behavior was measured using the VideoFreeze analysis program (Med Associates Inc.).

Fiber Photometry and Optogenetics

Fiber photometry and optogenetics were performed using an FP3002 system (Neurophotometrics) connected to fiberoptic cables (Doric). Event-related GRABNE fluorescence (470 nm) was calculated as a percent change from the precue average for each trial (cue).
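As an illustration of the event-related normalization described above, the following minimal sketch computes percent change from a precue baseline for a single trial. The function name, the baseline window length, and the toy trace are assumptions made for illustration; they are not taken from the analysis code used in this study.

```python
from statistics import mean


def percent_dff(trace, cue_index, baseline_samples):
    """Percent change of each sample from the mean of the precue window.

    trace: raw fluorescence samples for one trial (hypothetical input format).
    cue_index: index of the first sample at cue onset.
    baseline_samples: number of precue samples averaged to form the baseline.
    """
    start = cue_index - baseline_samples
    if baseline_samples <= 0 or start < 0:
        raise ValueError("precue window must lie within the trace")
    f0 = mean(trace[start:cue_index])
    return [100.0 * (f - f0) / f0 for f in trace]


# Toy trial: flat precue baseline of 2.0, then a rise to 2.2 at cue onset.
trial = [2.0, 2.0, 2.0, 2.0, 2.2, 2.2]
dff = percent_dff(trial, cue_index=4, baseline_samples=4)
```

Because the baseline is recomputed for every trial, slow drifts in fluorescence across a session do not carry over into the cue-evoked estimate.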

Histology

After experiments, mice were deeply anesthetized with isoflurane, then intracardially perfused with phosphate-buffered saline followed by 4% paraformaldehyde in phosphate-buffered saline. Brains were post-fixed in paraformaldehyde and dehydrated in 30% sucrose. Brains were sliced to 100-μm thickness on a cryostat (Leica CM3050S; Leica Biosystems GmbH), and frontal cortex or LC containing slices were imaged on a confocal microscope (Olympus FV3000; Evident Scientific, Inc.).

Computational Modeling Overview

Reinforcement learning models were adapted from previous literature (31,32). Briefly, TD models estimate value by incorporating present value V(t) and expected future values (discounted by γ) across temporal states, with error δ(t) the difference between estimated and real value. The uncertainty TD model (31) proposes that the agent experiences uncertainty in believed time, and thus temporal state was modeled as a probability distribution.
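For orientation, the standard TD error takes the form δ(t) = r(t) + γV(t + 1) − V(t). The sketch below implements tabular TD(0) over within-trial time steps (a complete serial compound representation). It is an illustrative simplification, not the adapted models of (31,32). The learning rate, discount factor, step count, and unit outcome magnitude are assumed values, and the precue value is fixed at 0 on the assumption that cue onset is unpredictable.

```python
def simulate_td(n_steps, outcome_step, n_trials, alpha=0.1, gamma=0.95):
    """Tabular TD(0) across the time steps of a conditioning trial.

    Returns the learned values and the prediction errors of the final trial.
    deltas[0] is the error at cue onset (relative to a precue value of 0);
    deltas[t + 1] is the error computed at within-trial step t.
    """
    values = [0.0] * (n_steps + 1)  # extra terminal state, value stays 0
    deltas = []
    for _ in range(n_trials):
        # Cue onset: unpredicted transition from baseline into step 0.
        deltas = [gamma * values[0]]
        for t in range(n_steps):
            outcome = 1.0 if t == outcome_step else 0.0  # assumed unit shock
            delta = outcome + gamma * values[t + 1] - values[t]
            values[t] += alpha * delta
            deltas.append(delta)
    return values[:n_steps], deltas


values, deltas = simulate_td(n_steps=5, outcome_step=4, n_trials=200)
```

In this simplified setting, the error at the outcome shrinks toward zero with training while the error at cue onset grows, which is the qualitative signature that distinguishes a prediction error term from a value term.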

RESULTS

We investigated how NE represents predictable aversive events in time by varying the temporal intervals between auditory cues and aversive stimuli (trace conditioning). To examine the role of NE in threat anticipation, in vivo fiber photometry of the fluorescent GRABNE sensor GRABNE2h was used to measure NE release dynamics in the frontal cortex. By recording these responses over days, we could compare NE release over the course of learning to TD reinforcement learning models. Finally, incorporation of temporal event boundaries (both the onsets and the offsets of relevant events) into reinforcement learning models enabled further investigation of second-to-second NE dynamics during the anticipation of danger. Thus, by combining in vivo NE sensor dynamics with temporally varying danger, we were able to interrogate the ability of NE to represent different timescales of threat prediction.

Frontal NE Represents Strength of Fear Association

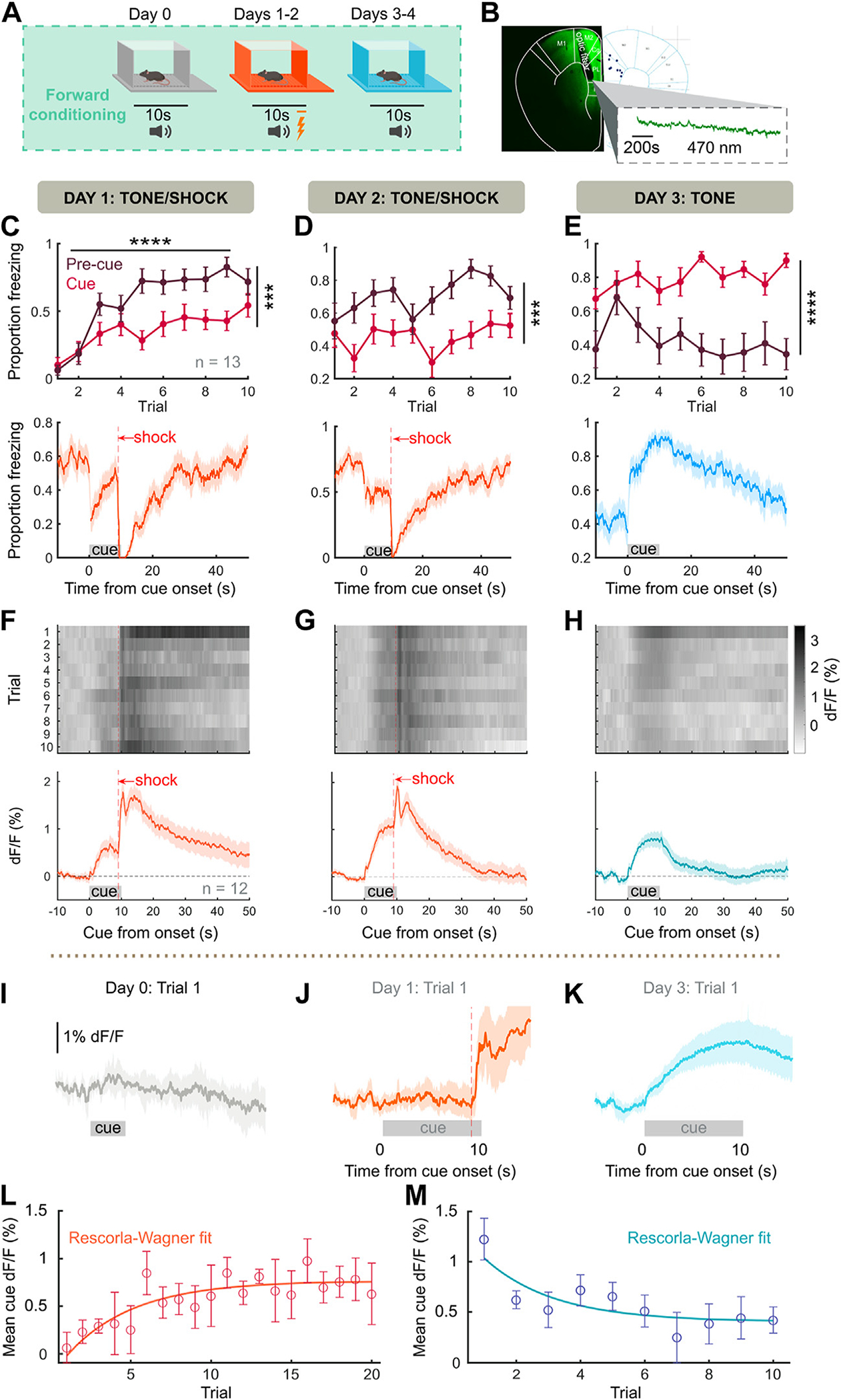

To characterize NE dynamics in relation to fear association, we combined forward fear conditioning with fiber photometry of the fluorescent GRABNE sensor GRABNE2h (Figure 1A, B). We found significant increases in cue-specific (cue) and contextual (precue) freezing, noting that across training, contextual freezing is greater than cue-evoked freezing, which reverses during recall (Figure 1C–E). Freezing was maximal during threat recall, indicating a strong, extinction-resistant threat memory (Figure 1E). During recall, females showed decreased freezing compared with males (Figure S3C). NE was released consistently to footshock, with footshock producing a biphasic response due to either a motion/hemodynamic artifact (Figure S1) or an α2-adrenergic autoinhibition of LC (33). NE responses to auditory stimulus increased rapidly during learning (Figure 1F), were stable throughout learning (Figure 1G), and continued during shock-free recall in a new context (Figure 1H). NE was not released to the tone before shock pairing (Figure 1I), suggesting that cue-evoked NE is not a sensory salience response. No sex differences were found in NE across training (Figure S2A) or recall (Figure S2C).

Figure 1.

Frontal NE represents the strength of fear association. (A) Behavioral schema. Mice (n = 13) were pre-exposed to 10 pure-tone cues on day 0, then 10 tone/shock pairings (tone and shock coterminating) per day for 2 days, then 10 recall tones per day for 2 days. (B) (Left panel) Surgical strategy for fiber photometry of GRABNE sensor in the frontal cortex. (Right panel) Placements of fibers within frontal cortex. (C) During first tone/shock pairings, cue-evoked freezing is significantly less than precue freezing (F1,12 = 31.8, p = .0001). (Bottom panel) Average proportion of mice freezing across trial, mean ± bootstrapped 95% CI. (D) Continued tone/shock pairing maintains greater precue than cue freezing (F1,12 = 97.97, p < .0001). (E) During tone-only presentation, cue freezing is significantly greater than precue freezing (F1,12 = 28.43, p = .0002). (F–H) (Top panels) Trial averages of GRABNE %dF/F across mice. (Bottom panels). Grand average NE trace across trials and mice, mean ± bootstrapped 95% CI. (I) Evoked NE release on the first exposure to unconditioned tone (day 0). (J) Evoked NE during first cue/shock pairing. (K) Evoked NE during first recall cue. All traces, mean ± bootstrapped 95% CI. (L, M) Average cue-evoked NE across acquisition and recall with Rescorla-Wagner model fit. ***p < .001, ****p < .0001. GRABNE, G protein–coupled receptor activation–based NE; NE, norepinephrine.

These results suggested that the evolution of cue-evoked NE might reflect the association strength of a learned cue. Associative learning models, such as Rescorla-Wagner learning (34), model associative strength as a gradual accumulation throughout repetition of pairings between a conditioned stimulus and unconditioned stimulus. Since we observed such a relationship (Figure 1F–H), we compared the cue-evoked NE release when association strength ought to be zero (trial 1 day 1) and when association strength ought to be maximal (trial 1 day 3) and found cue-evoked NE to increase robustly. Moreover, Rescorla-Wagner models predict fear association to increase asymptotically during learning and to decline asymptotically once conditioned stimulus–unconditioned stimulus contingency is abolished. We fit cue-evoked NE to the Rescorla-Wagner model and found that NE release fit this model both during 20 trials of tone/shock pairing (Figure 1L) (adjusted R² = 0.6869) and during 10 extinction trials (Figure 1M) (adjusted R² = 0.6911). In contrast, cue NE did not fully explain behavioral freezing to the same extent (acquisition adjusted R² = 0.0124, extinction adjusted R² = 0.0241). Thus, NE release represents the strength of association between a predictive cue and an aversive event.
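The asymptotic rise and decline predicted by Rescorla-Wagner learning can be sketched with the single-cue update V ← V + α(λ − V). The learning rate and asymptote below are illustrative assumptions, not the parameters fitted to the NE data, and the function name is hypothetical.

```python
def rescorla_wagner(n_trials, alpha, lam, v0=0.0):
    """Associative strength at the start of each trial.

    alpha: learning rate (assumed value, not a fitted parameter).
    lam: asymptote supported by the outcome (1 with shock, 0 without).
    """
    v = v0
    strengths = []
    for _ in range(n_trials):
        strengths.append(v)
        v += alpha * (lam - v)  # update is proportional to the prediction error
    return strengths


# 20 tone/shock pairings followed by 10 tone-alone trials.
acquisition = rescorla_wagner(20, alpha=0.3, lam=1.0)
extinction = rescorla_wagner(10, alpha=0.3, lam=0.0, v0=acquisition[-1])
```

The per-trial change is largest when the prediction error (λ − V) is largest, so strength rises quickly on early pairings and flattens as it approaches the asymptote.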

NE Shows Temporal Features of a Prediction Error Signal During Trace Conditioning

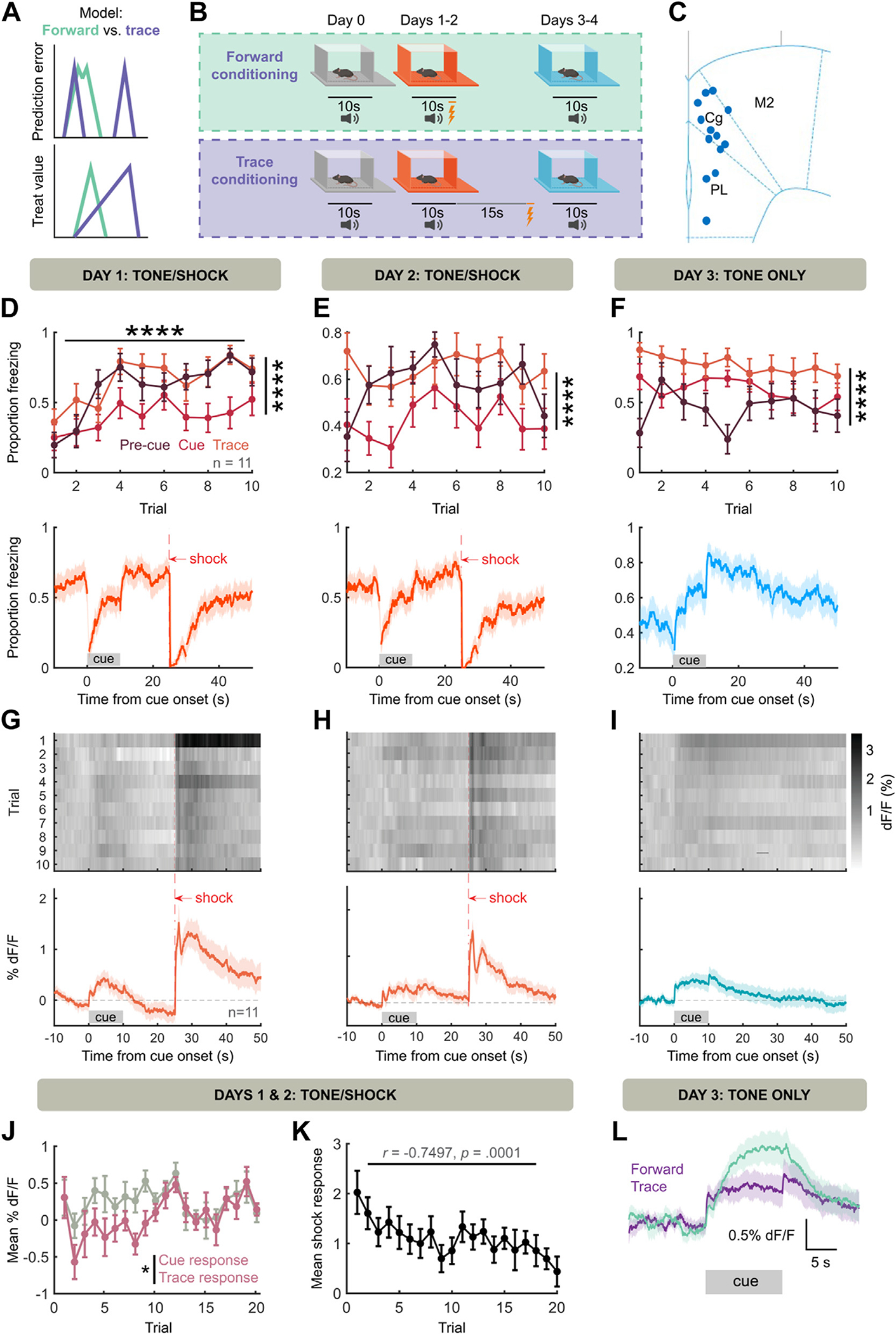

Although cue-evoked NE tracked the strength of fear association, the correspondence between reinforcement learning model components (e.g., prediction error, value, weight) and NE was still ambiguous. In these models, both cue-evoked prediction error and value scale with strength of association, and thus NE release to an aversive cue (Figure 1L, M) is consistent with both interpretations. In appetitive learning, striatal dopamine levels ramp upward between predictive cue and outcome delivery (35), leading to interpretation of dopamine release as representing state value. Thus, we introduced a 15-second delay between the end of cue and footshock (trace conditioning) (Figure 2A, B) to observe whether NE might ramp upward similarly.

Figure 2.

NE represents a threat prediction error. (A) Prediction error and value representations under forward (difficult to separate) and trace (more separable) conditioning. (B) Behavioral schema. Same as Figure 1A, but with a 15-second waiting period between cue offset and shock. (C) Placement of fibers in frontal cortex. (D) During initial tone/shock pairing, both precue freezing and trace freezing are greater than cue freezing (F2,20 = 18.95, p < .0001; trace vs. cue freezing: t20 = 5.785, p < .0001, precue vs. cue freezing: t20 = 4.717, p = .0004). (E) Continued tone/shock pairing maintains freezing pattern (F2,20 = 17.49, p < .0001; trace vs. cue freezing: t20 = 5.720, p < .0001, precue vs. cue freezing: t20 = 4.161, p = .0014). (F) During tone-only presentations, cue and trace freezing are greater than precue freezing (F2,20 = 40.31, p < .0001, precue vs. cue freezing: t20 = 3.028, p = .0198, precue vs. trace freezing: t20 = 8.835, p < .0001, cue vs. trace freezing: t20 = 5.806, p < .0001). (G–I) Same as Figure 1F–H, but for trace conditioning (n = 11 mice). Lack of NE ramping during trace is notable. (J) NE release during trace period is decreased compared with cue period across acquisition (F1,10 = 7.270, p = .0225 for cue vs. trace). (K) Shock-evoked NE release (0 to 2 seconds postshock %dF/F minus 2 to 0 seconds preshock %dF/F) decreases across acquisition trials. (L) Forward cue-evoked NE is on average greater than trace cue-evoked NE. ****p < .0001. NE, norepinephrine.

Consistent with our previous results (Figure 1), in this paradigm we observed increased freezing across learning, with increased precue freezing during training and increased cue freezing during recall. However, freezing during the trace remained high regardless of context (Figure 2D–F). Further, we observed cue-evoked NE release during and after training (Figure 2G–I). No sex differences were found in NE responses across training (Figure S2B) or recall (Figure S2D). Critically, after cue offset, fluorescence returned near the precue baseline rather than ramping to the point of shock (Figure 2G, H). While a small NE release was visible at cue offset in well-trained mice and in the absence of shock (Figure 2H, I), NE release during the trace was decreased relative to the cue (Figure 2J). Further, if NE release indicates prediction error, we would expect the response to the unconditioned stimulus (footshock) to diminish across tone/shock pairings. Accordingly, we observed a trial-by-trial decrease in shock-evoked NE (Figure 2K). Thus, NE levels had multiple features (decrease during waiting period, decreased response to unconditioned stimulus after learning) consistent with a threat prediction error signal rather than a threat value signal. Interestingly, when comparing forward cue-evoked NE with trace cue-evoked NE, we observed that trace cues evoked less NE on average (Figure 2L). This suggested that the ability of NE to represent predictive cues may scale with the temporal imminence of threat during the cue, which we sought to test.

In learning paradigms, on any trial the amount of learning is proportional to the size of prediction error (25). Therefore, we reasoned that if NE is a prediction error, augmenting shock-evoked NE should enhance learning. To test this, we expressed Cre-dependent ChR2 (channelrhodopsin-2) in NE neurons of LC using targeted viral infusion in DBH-Cre mice (see Methods and Materials). This approach has approximately 80% specificity for expression in NE-releasing neurons in prior studies (30), although some non-NE neurons will also express the opsin ectopically (30,36–39). We then optically stimulated the LC in DBH-Cre mice or littermate controls in a weak (3 trials, 0.3-mA shock) trace conditioning paradigm (Figure S4A–C), followed by measurement of threat recall across 20 recall trials. We found that optogenetic stimulation produced a trending effect on cue-evoked (cue minus precue) freezing (Figure S4D), which became significant when considering the latter half of the cue (Figure S4E). Thus, augmenting shock-evoked NE increases subsequent behavioral fear expression, consistent with an aversive prediction error (although a potential contribution of non-NE neurons or subcoeruleus NE neurons to the behavioral effect cannot be ruled out).

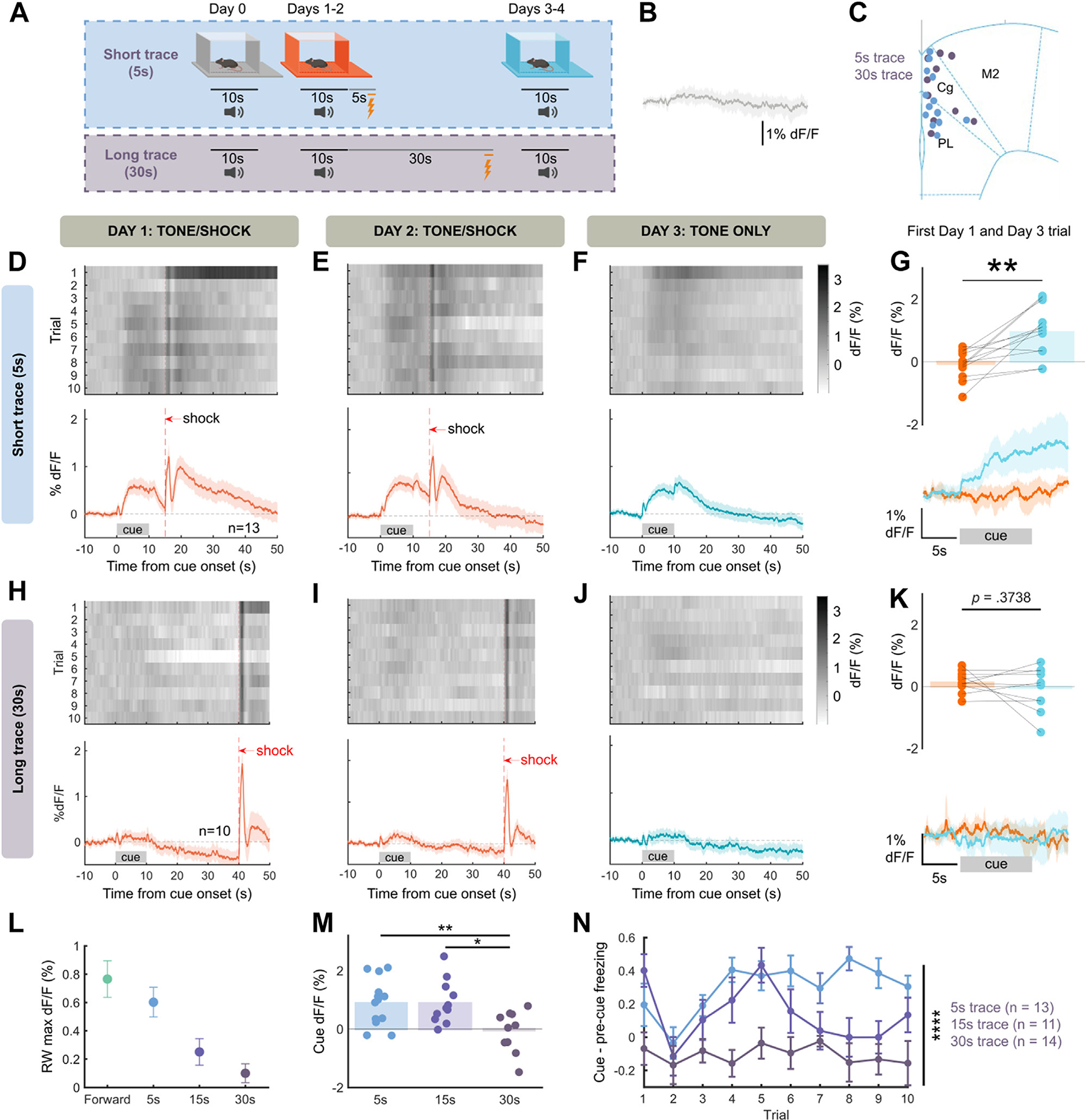

Cue-Evoked NE Scales With Threat Imminence

In TD models of reinforcement learning, a prediction error update to value should shrink with the time until an outcome because the value of future outcomes is temporally discounted. Moreover, there may be limited eligibility of a cue in explaining a distant outcome. Thus, cue-evoked NE prediction errors should diminish with increasing trace periods. We tested this hypothesis by varying the length of the trace, using short trace (5 seconds between cue offset and shock) and long trace (30 seconds between cue offset and shock) conditions (Figure 3A). Before shock pairing, the cue itself evoked little NE response across trace conditions (Figure 3B). In 5-second trace conditioning, we observed a gradual increase of cue-evoked NE and continued cue NE in the absence of shock (Figure 3D–F), and conditioning significantly increased cue-evoked NE (Figure 3G), with no effect of sex (Figure S2E). However, mice trained with a 30-second trace lacked cue-evoked NE across the behavioral paradigm (Figure 3H–J), and conditioning produced no change in cue NE (Figure 3K), again with no effect of sex (Figure S2F). To further describe the effect of threat proximity on cue NE, we modeled NE release for each trace length using a Rescorla-Wagner model, which showed a decrease in maximal modeled cue NE with trace length (Figure 3L). Furthermore, when comparing NE release at maximal association (day 3 trial 1), we found a significant effect of trace (Figure 3M) with no effect of mouse sex (Figure S2H). We found a similar effect of trace on cue-evoked freezing behavior, with a significant effect of trace length on cue-evoked (cue–precue) freezing (Figure 3N). Thus, both cue-evoked NE and behavior are diminished by increasing temporal delay.
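The discounting argument can be made concrete with a short sketch. Under exponential discounting, a fully learned cue-onset prediction error for an outcome arriving D seconds later scales as γ^D. The per-second discount factor below is an assumed value chosen only for illustration, the function name is hypothetical, and limited cue eligibility is not modeled.

```python
def cue_onset_prediction_error(delay_s, gamma_per_s=0.95, outcome=1.0):
    """Fully learned cue-onset TD error for an outcome delay_s seconds away.

    gamma_per_s is an assumed per-second discount factor, not a fitted value.
    """
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return outcome * gamma_per_s ** delay_s


CUE_S = 10  # cue duration in the 5-, 15-, and 30-second trace designs
errors = {
    trace_s: cue_onset_prediction_error(CUE_S + trace_s)
    for trace_s in (5, 15, 30)
}
```

Any discount factor below 1 gives the same ordering, with the 5-second trace producing the largest cue-onset error and the 30-second trace the smallest.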

Figure 3.

Cue-evoked NE scales with threat imminence. (A) Behavioral schema. Trace duration varied between 5 seconds and 30 seconds. (B) Trace cue without shock pairing (day 0) evokes little response, average across all trace groups (5 seconds, 15 seconds, 30 seconds). (C) Placement of fibers during experiment. (D–F) Same as Figure 2G–I for 5-second trace conditioning (n = 13 mice). (G) Cue-evoked NE is significantly increased by 5-second trace conditioning (t11 = 4.385, p = .0011, paired two-sided t test). (H–J) Same as panels (D–F) for 30-second trace conditioning (n = 10 mice). (K) Cue-evoked NE is not changed by 30-second trace conditioning (t9 = 0.9358, p = .3738, paired two-sided t test, p = .1869, paired one-sided t test). (L) Maximal modeled cue-evoked NE using RW model. (M) Cue-evoked NE is significantly decreased by trace length (one-way analysis of variance, F2,31 = 6.188, p = .0055). (N) Cue-evoked freezing (cue–precue freezing) is significantly decreased by trace length (2-way analysis of variance, F2,35 = 28.23 for trace, p < .0001). *p < .05, **p < .01, ****p < .0001. max, maximum; NE, norepinephrine; RW, Rescorla-Wagner.

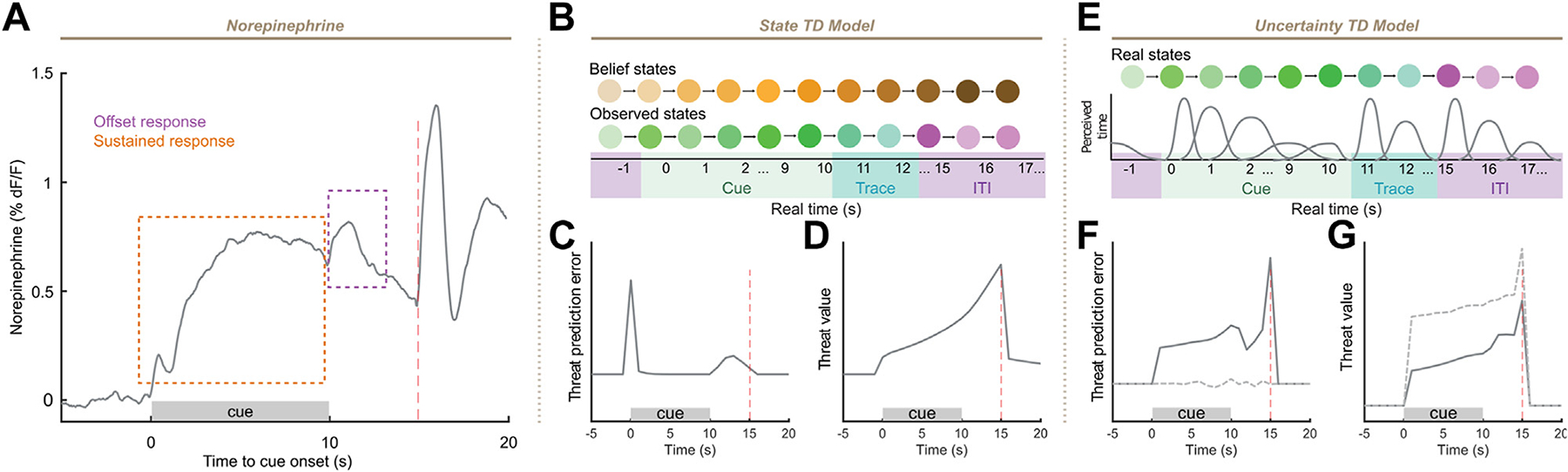

NE Corresponds to Prediction Error Under TD Learning With State Uncertainty

The scaling of NE levels with association strength and decay of NE levels during temporal gaps is consistent with prediction error terms of learning models such as TD. However, NE release during trace cue-shock trials contained some temporal features that could not be immediately explained: a small response at the offset of cue and a sustained cue response (Figure 4A). Reinforcement learning models such as TD require a representation of states over time within a trial, such as a complete serial compound representation. Such models have been used to explain temporal dynamics of dopamine neuronal activity (25). However, this model could not reproduce sustained NE responses during the cue period that we observed (Figure S5).

Figure 4.

Norepinephrine resembles threat prediction error under uncertainty. (A) Mean smoothed norepinephrine on day 2 of 5-second trace conditioning. Sustained and offset responses outlined. (B) Schematic of belief state–based TD model. (C, D) Prediction error (C) and value (D) terms after 20 trials of simulation in this model. (E) Schematic of state-based TD model with temporal uncertainty. Knowledge of present state is corrupted by uncertainty regarding current time. (F, G) Prediction error (F) and value (G) terms after 20 trials of simulation in this model. Dotted lines, without uncertainty correction; solid lines, with uncertainty correction. ITI, intertrial interval; TD, temporal difference.

We thus began by modeling our trace conditioning task using a TD model incorporating uncertainty about state (belief state): this model has been used to explain variations in dopamine signals during a trace appetitive conditioning task (32). This model involves a state representation of the task corresponding to the phases of conditioning trials (Figure 4B). This model could reconcile the offset responses observed during trace conditioning and lack of trace ramping (Figures 2 and 3) with a prediction error interpretation (Figure 4C, D). However, this offset response is transient and disappears with further training, which was not observed, and sustained cue responses could not be generated at the end of training (Figure 4C). A sustained prediction error can be generated if the subject has an imperfect representation of time. A recent reinforcement learning model examined TD learning under conditions of an imprecise internal clock to reconcile the observation of ramping dopamine with the dopamine prediction error hypothesis (31). In this model, value is estimated based on an uncertain perception of time such that states contribute to the value estimate based on their likelihood. When sensory evidence improves the estimation of time (during a sensory cue), value is therefore continually updated, necessitating a persistent prediction error to learn the correct value function. To apply this model to trace fear conditioning, we assumed that temporal uncertainty would increase with the duration of a time interval, such as cue or trace period (Figure 4E), as predicted by scalar expectancy theory (40). This model reproduced both sustained cue and cue offset responses in prediction error (Figure 4F). In contrast to the NE signal, value in this model ramps during the trace period (Figure 4G). Intuitively, sustained prediction error emerges from the continual correction of time by sensory evidence. 
However, the amount of uncertainty resolved decreases throughout the cue until the offset of the cue provides a precise temporal signal, producing the offset response.
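A minimal sketch of the central ingredient of such a model follows: value is estimated as a likelihood-weighted average over candidate time states, with uncertainty that grows with elapsed time. The Gaussian belief, the Weber fraction, the minimum width, and the exponential value function are assumptions made for illustration; this is not the implementation of (31) or the simulation reported here.

```python
import math


def belief_over_time(t_index, n_states, weber=0.2, min_sd=0.5):
    """Normalized Gaussian belief over time states, centered on the true state.

    The standard deviation grows linearly with elapsed time (scalar timing);
    weber and min_sd are hypothetical parameters.
    """
    sd = max(min_sd, weber * t_index)
    weights = [
        math.exp(-0.5 * ((tau - t_index) / sd) ** 2) for tau in range(n_states)
    ]
    total = sum(weights)
    return [w / total for w in weights]


def estimated_value(values, t_index, weber=0.2):
    """Value estimate in which each state contributes by its believed likelihood."""
    belief = belief_over_time(t_index, len(values), weber)
    return sum(p * v for p, v in zip(belief, values))


# Assumed exponentially discounted value function rising toward the outcome.
gamma = 0.9
outcome_step = 30
true_values = [gamma ** (outcome_step - t) for t in range(outcome_step + 1)]
blurred = estimated_value(true_values, t_index=20)
```

In this sketch the belief is sharp early in an interval and broad late in it, so an event that resets the clock, such as cue offset, removes more uncertainty the longer the preceding interval has lasted.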

Time Uncertainty Model Matches Learning Effects on NE

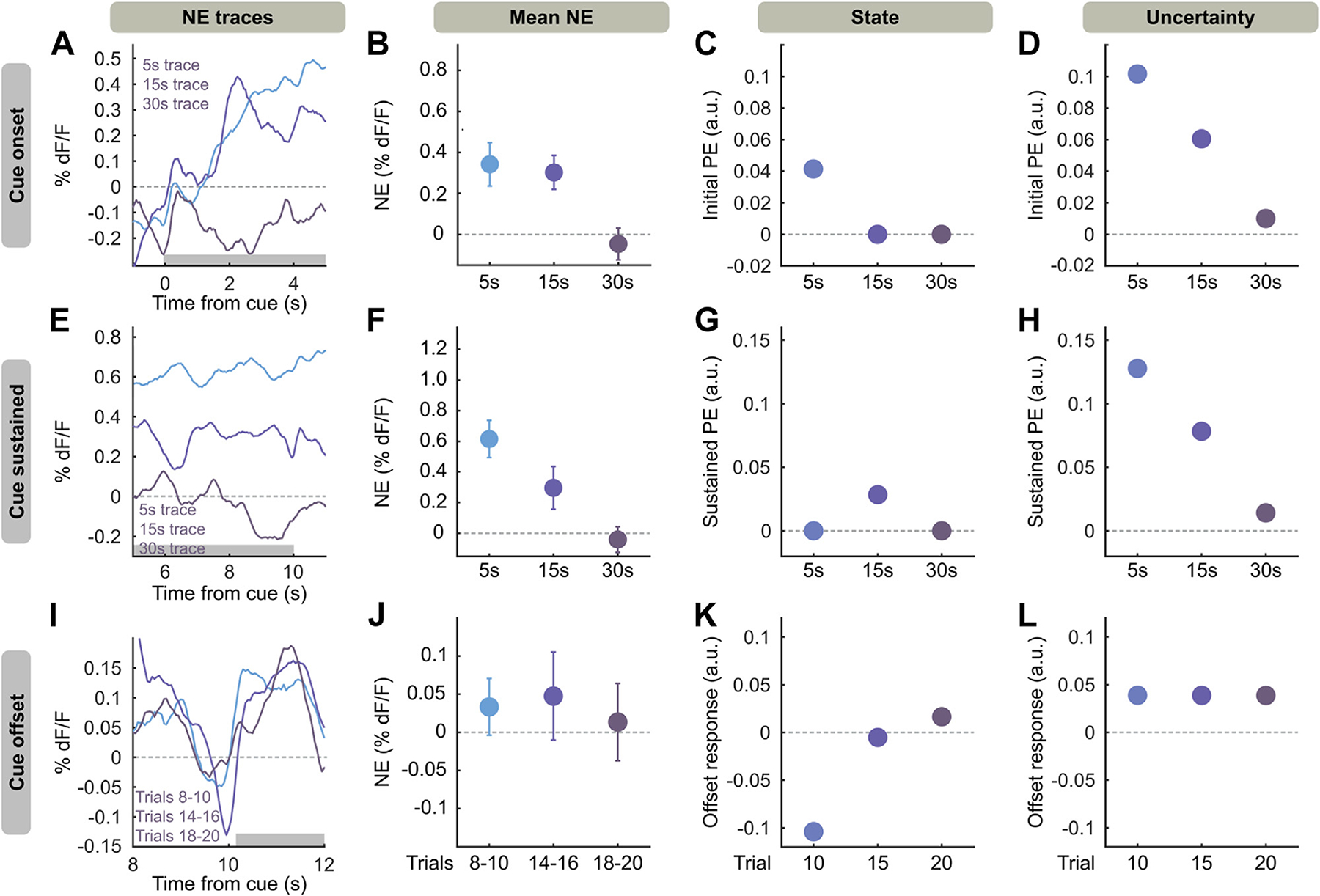

The unique features of NE (offset, sustained response) evolved differently across learning and conditioning paradigms, which we reasoned might define the nature of the reinforcement learning process and differentiate between models. We thus compared key effects of learning conditions on cue response, sustained cue response, and cue offset response to the same effects produced by models. In the last 3 tone/shock trials (where cue/shock contingency has been maximally learned and learning signals should be stable), longer trace periods produced diminished NE release during the first 5 seconds of cue (Figure 5A, B). The state model shows a learning-related temporal shift (41), which leads to rapidly diminished cue-onset prediction errors in the 15- and 30-second conditions (Figure 5C), which does not match our data. However, at the same number of trials, the uncertainty model had consistent responses at the cue onset period that scaled primarily with association strength (Figure 5D), consistent with NE.

Figure 5.

Uncertainty TD model matches learning effects on NE. (A) Averaged NE responses to the first 5 seconds of cue on trials 18–20 of cue shock pairing for 5-, 15-, and 30-second trace conditioning. (B) NE response, averaged along the first 5 seconds of cue [gray box in panel (A)]. Mean ± SEM. (C) Simulated early cue PEs for state TD model in 5-, 15-, and 30-second trace conditioning. (D) Same as panel (C), but for PEs from uncertainty model at 20 training iterations. (E–H) Same as panels (A–D), but referring to the last 5 seconds of the cue [gray box in panel (E)]. (I) Averaged NE responses at the end of cue (10–12 seconds after cue onset), baselined to end of cue (8–10 seconds after cue onset), for trial blocks 8–10, 14–16, 18–20 of 5-second trace conditioning. (J) NE responses in panel (I) averaged along the cue offset [gray box in panel (I)]. Mean ± SEM. (K) Simulated offset responses in the state TD model at trials 10, 15, and 20. (L) Offsets in the uncertainty TD model at 10, 15, and 20 iterations of training with 5-second trace conditioning (chosen due to robust offset responses). Black lines for all panels indicate 0 on the y-axis. a.u., arbitrary unit; NE, norepinephrine; PE, prediction error; TD, temporal difference.

During late training, cue NE was sustained for the length of the auditory stimulus, which does not occur in standard TD reinforcement learning, as after the initial cue onset no new information is provided by the rest of the cue. This effect could not be explained by convolution with a GRABNE2h kernel (Figure S7). We observed that sustained cue responses in late training decrease with longer traces (Figure 5E, F). This is not observed in the state model (Figure 5G), which produces no sustained prediction errors: the sustained response in 15-second trace conditioning is due to the temporally shifting prediction error (Figure S4). However, at 20 trials of simulation, the uncertainty model shows sustained responses decreasing with trace length (Figure 5H), as value, and thus prediction error under uncertainty, will decrease with time from outcome.

We observed NE release at the offset of cue after sufficient training (Figure 5I, J), which we hypothesized is due to the precise temporal signal the offset of cue provides. We compared prediction error terms of both models with NE over trials. The state model produced offset prediction errors only at the end of training (Figure 5K). In contrast, the uncertainty model produced consistent offset responses throughout trials (Figure 5L), again matching the NE data. Moreover, we found that the uncertainty model could reproduce outcome (shock) prediction errors even after training. This is consistent with previous implementations of this model and models involving microstimuli, or internal representations of stimuli with increasing precision. Such models can predict the persistence of outcome responses (31,42) that we observe. Overall, the uncertainty model better explains our NE signals than the state TD model, suggesting that NE representations of threat are modulated by temporal uncertainty.

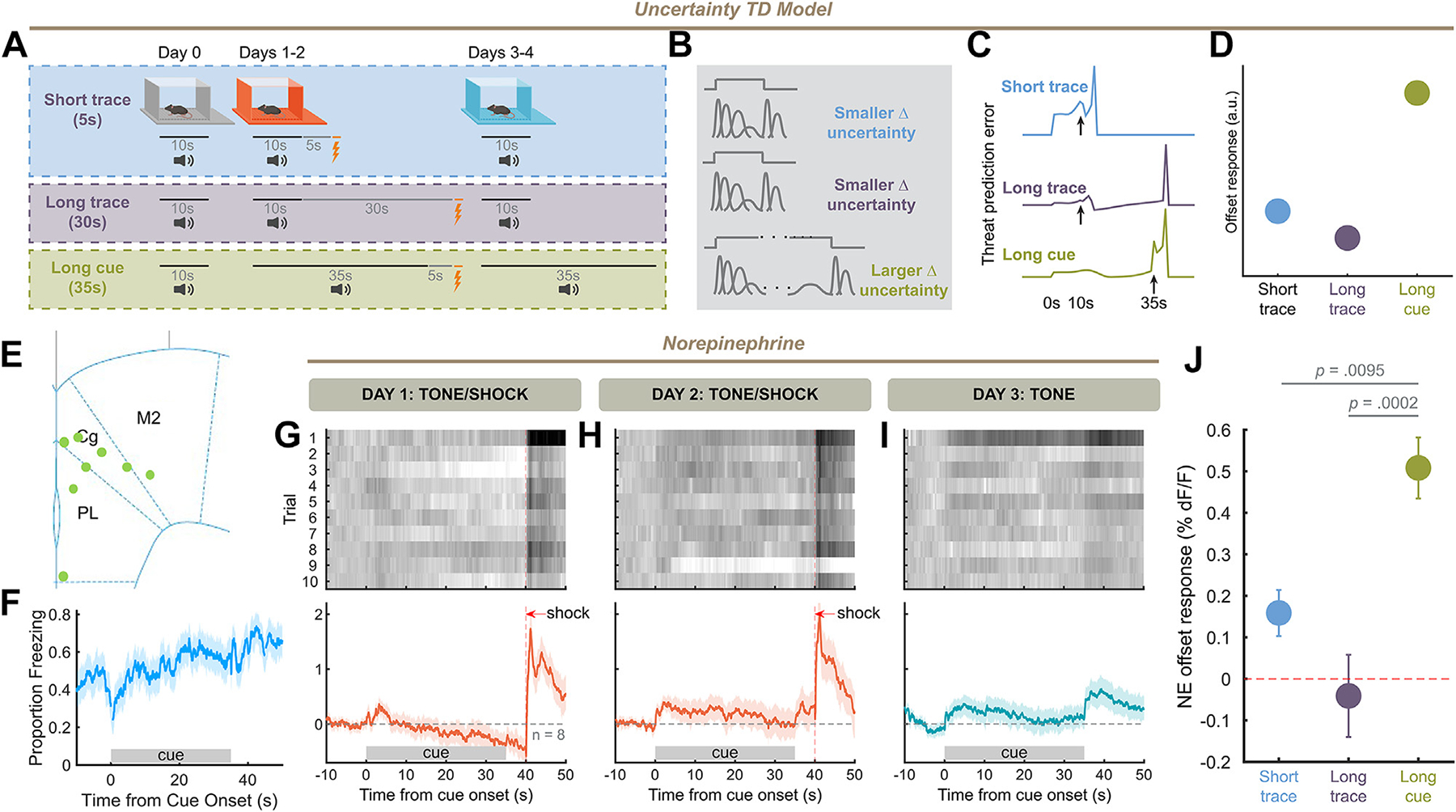

Cue Offset Responses Are Modulated by Temporal Uncertainty in a Long Cue Design

If cue offset NE responses originate from resolution of temporal uncertainty, increasing temporal uncertainty immediately before cue offset should cause the cue offset to resolve an increased amount of uncertainty, increasing the offset response. To test this hypothesis, we designed a variation of 5-second trace conditioning with a long (35-second) cue (Figure 6A, B), reasoning that if temporal uncertainty increases along the length of a cue, temporal uncertainty should be high at the end of a long cue. When simulating this task, we found that the uncertainty TD model could produce large prediction errors at the cue offset for a long cue compared with 10-second cue/5-second trace and 10-second cue/30-second trace tasks (Figure 6C, D). We performed this same task in mice and found that freezing ramped upward throughout this conditioning procedure, maximizing after cue offset (Figure 6F).

Figure 6.

Cue offset responses are modulated by temporal uncertainty. (A) Behavioral schema. Mice were trained using 5-second trace conditioning, but with a cue 35 seconds long. (B) Temporal uncertainty under multiple trace/cue combinations. (C, D) Offset response in prediction error for short trace, long trace, and long cue conditioning simulations with uncertainty temporal difference model. (E) Placement of fiber implants in frontal cortex for long trace experiment. (F) Average behavior during aversive recall using a long cue design. (G–I) Same as Figure 3D–F for long cue task (10 trials × 8 mice). (J) Norepinephrine offset responses for 5-second trace, 30-second trace, and 35-second cue conditioning at day 3 trial 1: 35-second cue conditioning produces significantly higher offsets than either trace task (one-way analysis of variance, F2,28 = 11.42, p = .0002, Tukey’s multiple comparisons test: 5-second trace vs. long cue: q28 = 4.513, p = .0095, 30-second trace vs. long cue: q29 = 6.721, p = .0002). a.u., arbitrary unit; TD, temporal difference.

In this trace conditioning design, we found that the NE cue offset response was large compared with the cue-evoked response (Figure 6G–I) across sexes (Figure S2H). We compared the offset response at the point of maximal cue association (day 3 trial 1) with that produced by the 10-second cue/5-second trace and 10-second cue/30-second trace designs, and found that the 35-second cue/5-second trace design produced larger offsets (Figure 6J). Thus, offset responses scale with temporal uncertainty at the point of cue offset, consistent with the offset reflecting the resolution of temporal uncertainty.

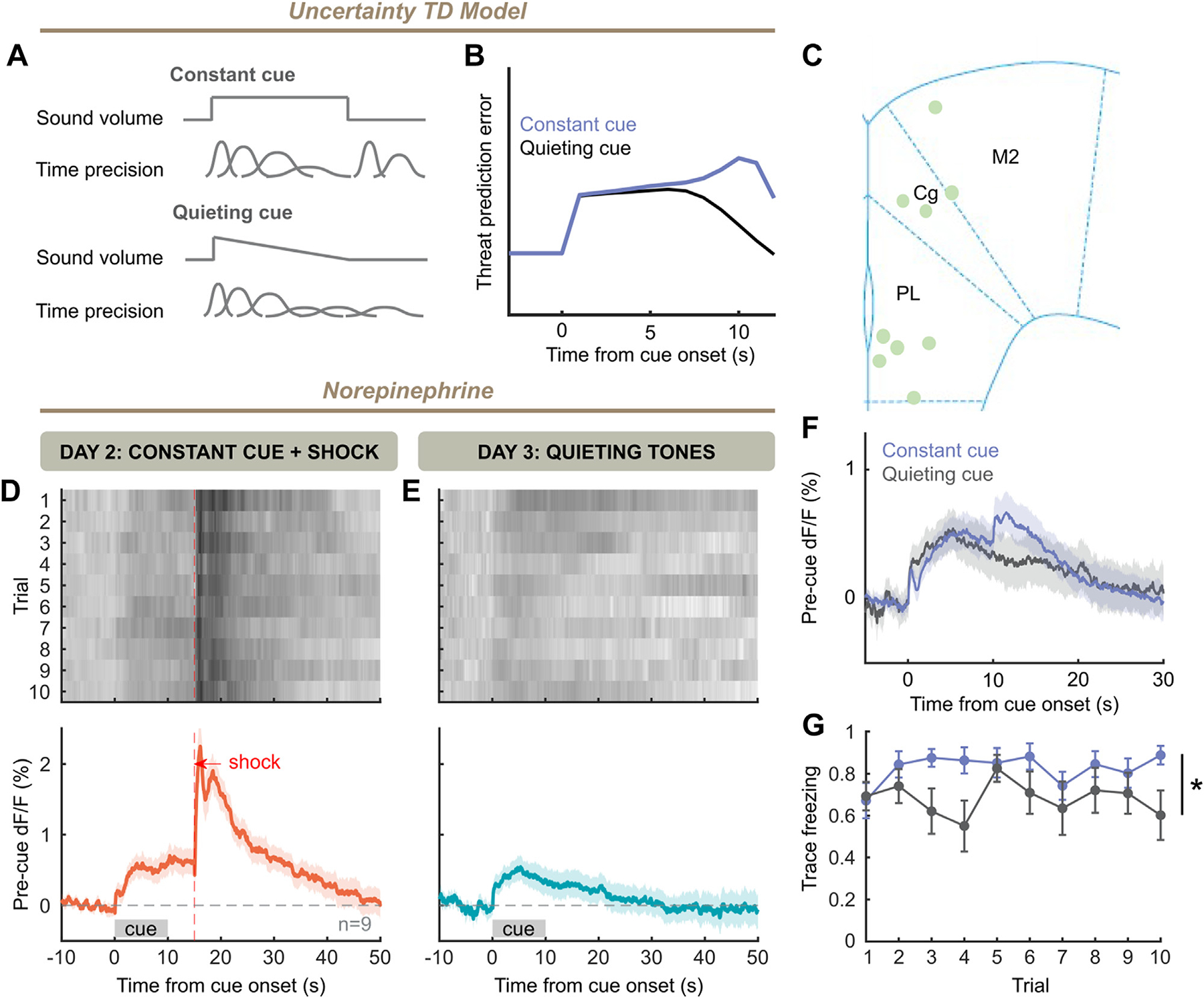

Withholding of Sensory Evidence Eliminates NE Offset Responses

Again reasoning that NE release at cue offset was an update to the mouse’s temporal precision, we sought a manipulation that could diminish this temporal update. In an appetitive visual task, Mikhael et al. (31) found that gradually darkening a visual environment (increasing uncertainty) altered dopamine release in a manner consistent with this model. We hypothesized that gradually decreasing the volume of an auditory cue would eliminate the temporal update that cue offset provides, eliminating offset NE (Figure 7A). The uncertainty TD model predicted that for a fast decrease in sensory feedback with no temporal precision provided by cue offset, prediction error should decrease without offset response (Figure 7B). We conditioned mice with a constant cue/5-second trace paradigm, and found NE release (Figure 7D) comparable to our prior 5-second trace experiment (Figure 3). However, when performing recall with quieting cues, there was no visible offset NE response (Figure 7E) across sexes (Figure S2I), in contrast to previously observed cue recall (Figure 3F and Figure 7F) and thus consistent with offset NE reflecting a temporal update. Interestingly, we found that when comparing freezing between constant cue recall (Figure 3) and quieting cue recall, there was a significant decrease in freezing during the trace period, indicating a possible causal role for this NE offset response in preparatory defensive behavior (Figure 7G).

Figure 7.

Withholding sensory evidence eliminates offset responses. (A) A constant cue will cause a slow decrease in temporal precision, with an update to temporal precision at the end of the cue. A quieting cue will cause faster decay of temporal precision and cause no such update at offset. (B) Model simulation of prediction error with a quickly decaying cue without discrete offset (blue), as well as that for the constant cue as in Figure 4F. (C) Placement of fibers in frontal cortex for experiment. (D) Trial-by-trial and grand averaged norepinephrine response for day 2 of training, with constant tone (10 trials × 9 mice). (E) Same as panel (D), but for day 3 with quieting cues. (F) Norepinephrine responses to constant (Figure 3F) and quieting [as in panel (E)] cues after training. (G) Freezing during trace periods for constant cue group (Figure 3) is higher than that during trace periods after quieting cues (two-way analysis of variance, cue: F1,22 = 7.144, p = .0139). TD, temporal difference.

DISCUSSION

Despite the importance of threat computation to survival, the biological substrates of these computations have not been well elucidated. We showed that NE represents the strength of fear associations (Figure 1), consistent with results that LC firing is synchronized to fear conditioning cues (43,44). Interestingly, NE decreases during anticipation of shock (trace period) and is necessary for threat learning, suggesting a prediction error interpretation (Figures 2 and 3). However, TD prediction errors alone do not fully explain NE dynamics: models incorporating temporal uncertainty are required to explain NE (Figures 4 and 5). Moreover, NE offset responses are bidirectionally modulated by manipulations of temporal uncertainty (Figures 6 and 7). Together, these results demonstrate how NE represents both cognitive uncertainty (about time) and affective prediction errors.
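For readers less familiar with TD learning, the following minimal sketch shows what a TD account without temporal uncertainty would predict in a trace design. It is a textbook TD(0) learner with a complete serial compound (tapped delay line) representation, not the uncertainty-based model used in this study. The step counts, learning rate, discount factor, and unsigned outcome magnitude are all assumptions chosen for illustration.

```python
def simulate_td(n_trials=1000, n_steps=20, cue_on=5, outcome=15,
                alpha=0.2, gamma=0.98):
    """Return a list (one entry per trial) of per-step TD errors.

    Time since cue onset is the only state information available to the
    learner; cue offset is not represented at all in this sketch.
    deltas[t] is the error computed on the transition from step t to t + 1.
    """
    # One weight per time step from cue onset up to (not including) the outcome.
    weights = [0.0] * (outcome - cue_on)
    outcome_signal = [0.0] * n_steps
    outcome_signal[outcome] = 1.0  # unsigned outcome magnitude (assumption)

    def value(t):
        # Predicted value is zero before the cue and from the outcome onward.
        return weights[t - cue_on] if cue_on <= t < outcome else 0.0

    all_deltas = []
    for _ in range(n_trials):
        deltas = []
        for t in range(n_steps - 1):
            delta = outcome_signal[t + 1] + gamma * value(t + 1) - value(t)
            if cue_on <= t < outcome:
                weights[t - cue_on] += alpha * delta
            deltas.append(delta)
        all_deltas.append(deltas)
    return all_deltas


cue_on, cue_off, outcome = 5, 10, 15  # cue_off is used only for readout
deltas = simulate_td(cue_on=cue_on, outcome=outcome)
first, last = deltas[0], deltas[-1]
print("first trial, transition into outcome:", round(first[outcome - 1], 3))
print("last trial, transition into cue onset:", round(last[cue_on - 1], 3))
print("last trial, transition into cue offset:", round(last[cue_off - 1], 3))
print("last trial, transition into outcome:", round(last[outcome - 1], 3))
```

In this sketch the error starts at the outcome and, after training, appears only at cue onset; the error at cue offset and at the fully predicted outcome converges to zero. A learner of this kind therefore has no means of producing an offset response, which is one way to see why an additional ingredient such as temporal uncertainty is needed.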

The hypothesis that NE encodes prediction error, in parallel with dopamine, has existed for at least 2 decades but has lacked empirical confirmation (45). As is characteristic of prediction error, noradrenergic neurons are activated by rewarding and aversive events and respond strongly to infrequent, salient events (44,46). Moreover, NE contributes to the P300 response to low-probability stimuli (47), and pupil diameter and risk prediction errors are correlated (48). Here, we directly assign a computational role to NE as a time uncertainty–modulated error signal. Recently, others have revealed a reward prediction error role for cortical NE (37) as well as a role in broadcasting sensory prediction error (49).

While the role of NE in threat processing is well established (17,19,43), future work should use more temporally sensitive manipulations of NE in light of its encoding of temporal precision. Additionally, while brain regions encoding temporal precision such as the medial prefrontal cortex and hippocampus (50,51) project to LC (52), the mechanism by which temporal precision is incorporated into NE signals is unknown. Another critical question is the extent to which neuromodulatory signals overlap in the encoding of prediction errors. NE neurons respond heterogeneously to expected reward, but synchronously to unexpected punishment (27,53); conversely, ventral tegmental area dopamine neurons respond to appetitive signals, but a subpopulation conveys aversive signals with the incorporation of uncertainty (54,55), consistent with shared but biased roles for different neuromodulators (44,55–59).

We also observed a sustained component to NE release when there was a broader update to the contextual valence: on the very first footshock or first auditory fear cue heard in a context (day 1 shock 1 or day 3 tone 1, respectively) (Figures 1–3). We hypothesize and have modeled (Figure S8) that sustained NE responses indicate contextual information, but the impact of spatiotemporal context (60) on NE signaling in threat computation bears further investigation.

In summary, we show that frontal cortical NE represents a prediction error signal during aversive learning that is modulated by temporal uncertainty. Our work points to a temporally precise role for NE in the production and regulation of adaptive and maladaptive fear memory, as may occur in anxiety disorders and posttraumatic stress disorder.

ACKNOWLEDGMENTS AND DISCLOSURES

This work was supported by the National Institutes of Health (Grant Nos. 2T32NS041228-21 and 5T32NS007224-37 [to AB] and Grant No. 1K08MH122733-01 [to APK]), Yale University Fellowship (Grant No. 5T32NS007224-37 [to AB]), Glenn H. Greenberg Fund for Stress and Resilience (to APK), Brain and Behavior Research Foundation NARSAD Young Investigator Grant (to APK), Connecticut Mental Health Center (to AB, J-HY, AY, SG-K, JAR, and APK), and National Center for PTSD (to AB, AY, JHK, and APK). This work was funded in part by the State of Connecticut, Department of Mental Health and Addiction Services, but this publication does not express the views of the Department of Mental Health and Addiction Services or the State of Connecticut. The views and opinions expressed are those of the authors.

AB and APK were responsible for conceptualization. AB, AY, and APK were responsible for methodology. AB, J-HY, and SG-K were responsible for investigation. JF and YL were responsible for resources. AB and APK were responsible for writing of the original draft. AB, JHK, AY, J-HY, JAR, and APK were responsible for review and editing.

We thank Drs. Alex Kwan, Michael Sinischalchi, and J. Richard Trinko for sharing photometry analysis code used in this work. We thank Dr. Jonathan Cohen for helpful feedback during the writing of the manuscript. We thank Drs. John Mikhael and Sam Gershman for making their implementations of uncertainty-based reinforcement learning models freely available and accessible.

A previous version of this article was published as a preprint on bioRxiv: https://www.biorxiv.org/content/10.1101/2022.10.13.511463v1

All data are available in the main text or supplemental materials. Pre-processed data and analysis scripts are available at https://doi.org/10.6084/m9.figshare.24585147

APK receives or has received research funding from Transcend Therapeutics and Freedom Biosciences. JHK has received consulting fees from Aptinyx, Inc., Atai Life Sciences, AstraZeneca Pharmaceuticals, Biogen Idec, MA, Biomedisyn, Bionomics, Ltd. (Australia), Boehringer Ingelheim International, Cadent Therapeutics, Inc., Clexio Bioscience, Ltd., COMPASS Pathways, Ltd. (United Kingdom), Concert Pharmaceuticals, Inc., Epiodyne, Inc., EpiVario, Inc., Greenwich Biosciences, Inc., Heptares Therapeutics, Ltd. (United Kingdom), Janssen Research & Development, Jazz Pharmaceuticals, Inc., Otsuka America Pharmaceutical, Inc., Perception Neuroscience Holdings, Inc., Spring Care, Inc., Sunovion Pharmaceuticals, Inc., Takeda Industries, and Taisho Pharmaceutical Co., Ltd.; is on the board of directors at Freedom Biosciences, Inc.; is a member of the scientific advisory board at Biohaven Pharmaceuticals, BioXcel Therapeutics, Inc. (Clinical Advisory Board), Cadent Therapeutics, Inc. (Clinical Advisory Board), Cerevel Therapeutics, LLC, Delix Therapeutics, Inc., EpiVario, Inc., Eisai, Inc., Jazz Pharmaceuticals, Inc., Novartis Pharmaceuticals Corporation, PsychoGenics, Inc., RBNC Therapeutics, Inc., Tempero Bio, Inc., and Terran Biosciences, Inc.; owns stock at Biohaven Pharmaceuticals, Sage Pharmaceuticals, and Spring Care, Inc.; and owns stock options at Biohaven Pharmaceuticals Medical Sciences, EpiVario, Inc., Neumora Therapeutics, Inc., Terran Biosciences, Inc., and Tempero Bio, Inc. JHK has also received free drug for research studies from AstraZeneca (saracatinib), Novartis (mavoglurant), and Cerevel (CVL-751). None of the reported conflicts of interest are relevant to the current study. All other authors report no biomedical financial interests or potential conflicts of interest.

Footnotes

Supplementary material cited in this article is available online at https://doi.org/10.1016/j.biopsych.2024.01.025.

Contributor Information

Aakash Basu, Department of Psychiatry, Yale School of Medicine, New Haven, Connecticut; Interdepartmental Neuroscience Program, Yale School of Medicine, New Haven, Connecticut.

Jen-Hau Yang, Department of Psychiatry, Yale School of Medicine, New Haven, Connecticut; Clinical Neuroscience Division, Veterans Administration National Center for PTSD, West Haven, Connecticut.

Abigail Yu, Department of Psychiatry, Yale School of Medicine, New Haven, Connecticut.

Samira Glaeser-Khan, Department of Psychiatry, Yale School of Medicine, New Haven, Connecticut.

Jocelyne A. Rondeau, Department of Psychiatry, Yale School of Medicine, New Haven, Connecticut.

Jiesi Feng, State Key Laboratory of Membrane Biology, Peking University School of Life Sciences, Beijing, China.

John H. Krystal, Department of Psychiatry, Yale School of Medicine, New Haven, Connecticut.

Yulong Li, State Key Laboratory of Membrane Biology, Peking University School of Life Sciences, Beijing, China; Peking University-IDG/McGovern Institute for Brain Research, Beijing, China; Peking-Tsinghua Center for Life Sciences, Academy for Advanced Interdisciplinary Studies, Peking University, Beijing, China; Chinese Institute for Brain Research, Beijing, China.

Alfred P. Kaye, Department of Psychiatry, Yale School of Medicine, New Haven, Connecticut; Clinical Neuroscience Division, Veterans Administration National Center for PTSD, West Haven, Connecticut; Wu Tsai Institute, Yale University, New Haven, Connecticut.

REFERENCES

- 1. Fanselow MS, Lester LS (1988): A functional behavioristic approach to aversively motivated behavior: Predatory imminence as a determinant of the topography of defensive behavior. In: Bolles RC, Beecher MD, editors. Evolution and Learning. Hillsdale, NJ: Lawrence Erlbaum Associates, 185–212.

- 2. Moscarello JM, Penzo MA (2022): The central nucleus of the amygdala and the construction of defensive modes across the threat-imminence continuum. Nat Neurosci 25:999–1008.

- 3. Peters A, McEwen BS, Friston K (2017): Uncertainty and stress: Why it causes diseases and how it is mastered by the brain. Prog Neurobiol 156:164–188.

- 4. Rao RPN, Ballard DH (1999): Predictive coding in the visual cortex: A functional interpretation of some extra-classical receptive-field effects. Nat Neurosci 2:79–87.

- 5. Giustino TF, Maren S (2015): The role of the medial prefrontal cortex in the conditioning and extinction of fear. Front Behav Neurosci 9:298.

- 6. Johansen JP, Cain CK, Ostroff LE, LeDoux JE (2011): Molecular mechanisms of fear learning and memory. Cell 147:509–524.

- 7. LeDoux JE (2000): Emotion circuits in the brain. Annu Rev Neurosci 23:155–184.

- 8. Maren S (2001): Neurobiology of Pavlovian fear conditioning. Annu Rev Neurosci 24:897–931.

- 9. Sanders MJ, Wiltgen BJ, Fanselow MS (2003): The place of the hippocampus in fear conditioning. Eur J Pharmacol 463:217–223.

- 10. Takahashi LK (2001): Role of CRF1 and CRF2 receptors in fear and anxiety. Neurosci Biobehav Rev 25:627–636.

- 11. Aston-Jones G, Cohen JD (2005): Adaptive gain and the role of the locus coeruleus-norepinephrine system in optimal performance. J Comp Neurol 493:99–110.

- 12. Eldar E, Cohen JD, Niv Y (2013): The effects of neural gain on attention and learning. Nat Neurosci 16:1146–1153.

- 13. Mather M, Clewett D, Sakaki M, Harley CW (2016): Norepinephrine ignites local hotspots of neuronal excitation: How arousal amplifies selectivity in perception and memory. Behav Brain Sci 39:e200.

- 14. O’Donnell J, Zeppenfeld D, McConnell E, Pena S, Nedergaard M (2012): Norepinephrine: A neuromodulator that boosts the function of multiple cell types to optimize CNS performance. Neurochem Res 37:2496–2512.

- 15. Bush D, Caparosa E, Gekker A, LeDoux J (2010): Beta-adrenergic receptors in the lateral nucleus of the amygdala contribute to the acquisition but not the consolidation of auditory fear conditioning. Front Behav Neurosci 4:154.

- 16. Debiec J, Ledoux JE (2004): Disruption of reconsolidation but not consolidation of auditory fear conditioning by noradrenergic blockade in the amygdala. Neuroscience 129:267–272.

- 17. Giustino TF, Maren S (2018): Noradrenergic modulation of fear conditioning and extinction. Front Behav Neurosci 12:43.

- 18. Gu Y, Piper WT, Branigan LA, Vazey EM, Aston-Jones G, Lin L, et al. (2020): A brainstem-central amygdala circuit underlies defensive responses to learned threats. Mol Psychiatry 25:640–654.

- 19. Uematsu A, Tan BZ, Ycu EA, Cuevas JS, Koivumaa J, Junyent F, et al. (2017): Modular organization of the brainstem noradrenaline system coordinates opposing learning states. Nat Neurosci 20:1602–1611.

- 20. Agster KL, Mejias-Aponte CA, Clark BD, Waterhouse BD (2013): Evidence for a regional specificity in the density and distribution of noradrenergic varicosities in rat cortex. J Comp Neurol 521:2195–2207.

- 21. Arnsten AF (2011): Catecholamine influences on dorsolateral prefrontal cortical networks. Biol Psychiatry 69:e89–e99.

- 22. Chandler DJ, Waterhouse BD, Gao WJ (2014): New perspectives on catecholaminergic regulation of executive circuits: Evidence for independent modulation of prefrontal functions by midbrain dopaminergic and noradrenergic neurons. Front Neural Circuits 8:53.

- 23. Fan X, Song J, Ma C, Lv Y, Wang F, Ma L, Liu X (2022): Noradrenergic signaling mediates cortical early tagging and storage of remote memory. Nat Commun 13:7623.

- 24. Keiflin R, Janak PH (2015): Dopamine prediction errors in reward learning and addiction: From theory to neural circuitry. Neuron 88:247–263.

- 25. Schultz W, Dayan P, Montague PR (1997): A neural substrate of prediction and reward. Science 275:1593–1599.

- 26. Yu AJ, Dayan P (2005): Uncertainty, neuromodulation, and attention. Neuron 46:681–692.

- 27. Breton-Provencher V, Drummond GT, Feng J, Li Y, Sur M (2022): Spatiotemporal dynamics of noradrenaline during learned behaviour. Nature 606:732–738.

- 28. Feng J, Zhang C, Lischinsky JE, Jing M, Zhou J, Wang H, et al. (2019): A genetically encoded fluorescent sensor for rapid and specific in vivo detection of norepinephrine. Neuron 102:745–761.e8.

- 29. Tillage RP, Sciolino NR, Plummer NW, Lustberg D, Liles LC, Hsiang M, et al. (2020): Elimination of galanin synthesis in noradrenergic neurons reduces galanin in select brain areas and promotes active coping behaviors. Brain Struct Funct 225:785–803.

- 30. Wissing C, Maheu M, Wiegert S, Dieter A (2022): Targeting noradrenergic neurons of the locus coeruleus: A comparison of model systems and strategies. bioRxiv. 10.1101/2022.01.22.477348.

- 31. Mikhael JG, Kim HGR, Uchida N, Gershman SJ (2022): The role of state uncertainty in the dynamics of dopamine. Curr Biol 32:1077–1087.e9.

- 32. Starkweather CK, Babayan BM, Uchida N, Gershman SJ (2017): Dopamine reward prediction errors reflect hidden-state inference across time. Nat Neurosci 20:581–589.

- 33. Andrade R, Aghajanian GK (1984): Intrinsic regulation of locus coeruleus neurons: Electrophysiological evidence indicating a predominant role for autoinhibition. Brain Res 310:401–406.

- 34. Rescorla RA, Wagner AR (1972): A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical Conditioning II: Current Research and Theory. New York: Appleton-Century-Crofts, 64–99.

- 35. Hamid AA, Pettibone JR, Mabrouk OS, Hetrick VL, Schmidt R, Vander Weele CM, et al. (2015): Mesolimbic dopamine signals the value of work. Nat Neurosci 19:117–126.

- 36. McCall JG, Siuda ER, Bhatti DL, Lawson LA, McElligott ZA, Stuber GD, Bruchas MR (2017): Locus coeruleus to basolateral amygdala noradrenergic projections promote anxiety-like behavior. eLife 6:e18247.

- 37. Su Z, Cohen JY (2022): Two types of locus coeruleus norepinephrine neurons drive reinforcement learning. bioRxiv. 10.1101/2022.12.08.519670.

- 38. Tsetsenis T, Badyna JK, Li R, Dani JA (2022): Activation of a locus coeruleus to dorsal hippocampus noradrenergic circuit facilitates associative learning. Front Cell Neurosci 16:887679.

- 39. Seo DO, Zhang ET, Piantadosi SC, Marcus DJ, Motard LE, Kan BK, et al. (2021): A locus coeruleus to dentate gyrus noradrenergic circuit modulates aversive contextual processing. Neuron 109:2116–2130.e6.

- 40. Malapani C, Fairhurst S (2002): Scalar timing in animals and humans. Learn Motiv 33:156–176.

- 41. Amo R, Matias S, Yamanaka A, Tanaka KF, Uchida N, Watabe-Uchida M (2022): A gradual temporal shift of dopamine responses mirrors the progression of temporal difference error in machine learning. Nat Neurosci 25:1082–1092.

- 42. Ludvig EA, Sutton RS, Kehoe EJ (2008): Stimulus representation and the timing of reward-prediction errors in models of the dopamine system. Neural Comput 20:3034–3054.

- 43. Martins AR, Froemke RC (2015): Coordinated forms of noradrenergic plasticity in the locus coeruleus and primary auditory cortex. Nat Neurosci 18:1483–1492.

- 44. Sara SJ, Segal M (1991): Plasticity of sensory responses of locus coeruleus neurons in the behaving rat: Implications for cognition. Prog Brain Res 88:571–585.

- 45. Schultz W, Dickinson A (2000): Neuronal coding of prediction errors. Annu Rev Neurosci 23:473–500.

- 46. Aston-Jones G, Rajkowski J, Kubiak P, Alexinsky T (1994): Locus coeruleus neurons in monkey are selectively activated by attended cues in a vigilance task. J Neurosci 14:4467–4480.

- 47. Nieuwenhuis S, Aston-Jones G, Cohen JD (2005): Decision making, the P3, and the locus coeruleus–norepinephrine system. Psychol Bull 131:510–532.

- 48. Preuschoff K, ’t Hart BM, Einhäuser W (2011): Pupil dilation signals surprise: Evidence for noradrenaline’s role in decision making. Front Neurosci 5:115.

- 49. Jordan R, Keller GB (2023): The locus coeruleus broadcasts prediction errors across the cortex to promote sensorimotor plasticity. eLife 12:RP85111.

- 50. Kim J, Ghim JW, Lee JH, Jung MW (2013): Neural correlates of interval timing in rodent prefrontal cortex. J Neurosci 33:13834–13847.

- 51. Shimbo A, Izawa EI, Fujisawa S (2021): Scalable representation of time in the hippocampus. Sci Adv 7:eabd7013.

- 52. Schwarz LA, Miyamichi K, Gao XJ, Beier KT, Weissbourd B, Deloach KE, et al. (2015): Viral-genetic tracing of the input-output organization of a central noradrenaline circuit. Nature 524:88–92.

- 53. Bouret S, Sara SJ (2004): Reward expectation, orientation of attention and locus coeruleus-medial frontal cortex interplay during learning. Eur J Neurosci 20:791–802.

- 54. Akiti K, Tsutsui-Kimura I, Xie Y, Mathis A, Markowitz JE, Anyoha R, et al. (2022): Striatal dopamine explains novelty-induced behavioral dynamics and individual variability in threat prediction. Neuron 110:3789–3804.e9.

- 55. Matsumoto M, Hikosaka O (2009): Two types of dopamine neuron distinctly convey positive and negative motivational signals. Nature 459:837–841.

- 56. Hangya B, Ranade SP, Lorenc M, Kepecs A (2015): Central cholinergic neurons are rapidly recruited by reinforcement feedback. Cell 162:1155–1168.

- 57. Kutlu MG, Zachry JE, Melugin PR, Cajigas SA, Chevee MF, Kelly SJ, et al. (2021): Dopamine release in the nucleus accumbens core signals perceived saliency. Curr Biol 31:4748–4761.e8.

- 58. Sturgill J, Hegedus P, Li S, Chevy Q, Siebels A, Jing M, et al. (2020): Basal forebrain-derived acetylcholine encodes valence-free reinforcement prediction error. bioRxiv. 10.1101/2020.02.17.953141.

- 59. Vander Weele CM, Siciliano CA, Matthews GA, Namburi P, Izadmehr EM, Espinel IC, et al. (2018): Dopamine enhances signal-to-noise ratio in cortical-brainstem encoding of aversive stimuli. Nature 563:397–401.

- 60. Gershman SJ, Jones CE, Norman KA, Monfils MH, Niv Y (2013): Gradual extinction prevents the return of fear: implications for the discovery of state. Front Behav Neurosci 7:164. [published correction appears in Front Behav Neurosci 2021;15:786900].
