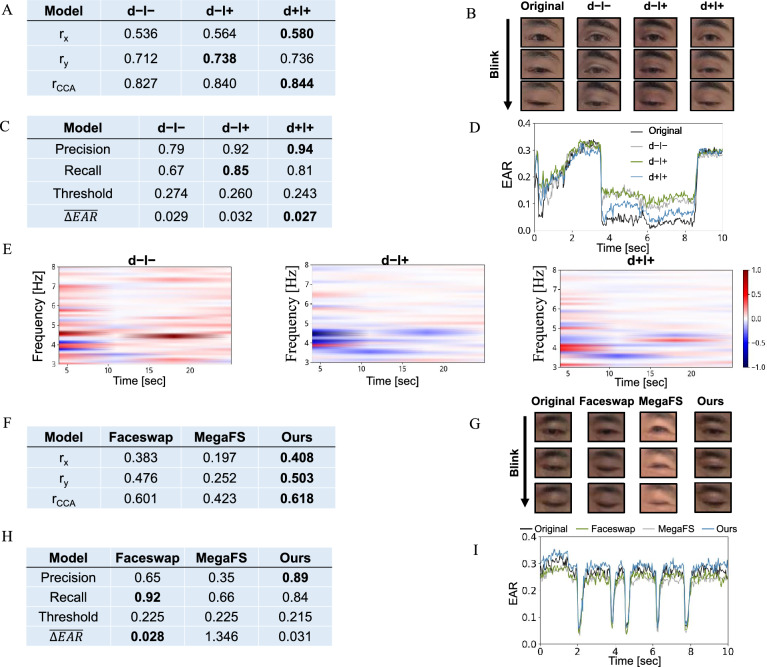

Figure 7.

Model comparison. We compared model performance qualitatively and quantitatively across training settings and against other deepfake models. Original: original data; d−l−: output from the ablated model trained without mEBAL blink data and without the landmark similarity block; d−l+: output from the model trained without mEBAL blink data but with the landmark similarity block; d+l+: output from the FaceMotionPreserve model trained with blink data and the landmark similarity block. (A) Pearson and CCA correlation coefficients of facial dynamics between original/de-identified pairs. Both the augmented training data and the landmark similarity module helped preserve facial dynamics. (B) Video frames of a patient’s blink. The d+l+ model output best matched the original. (C) Comparison of blink behaviors. The d−l+ model improved blink detection accuracy, and the d+l+ model further improved eye-closing completeness, as indicated by a lower eye blink threshold and a smaller EAR deviation. (D) EAR curves of a patient. (E) Comparison of tremor spectrogram deviation for a patient. From left to right: d−l−, d−l+, d+l+, with mean deviations from the original spectrogram of 0.106, , 0.003 in the 4–6 Hz band, respectively. (F–I) Comparison with Faceswap and MegaFS.