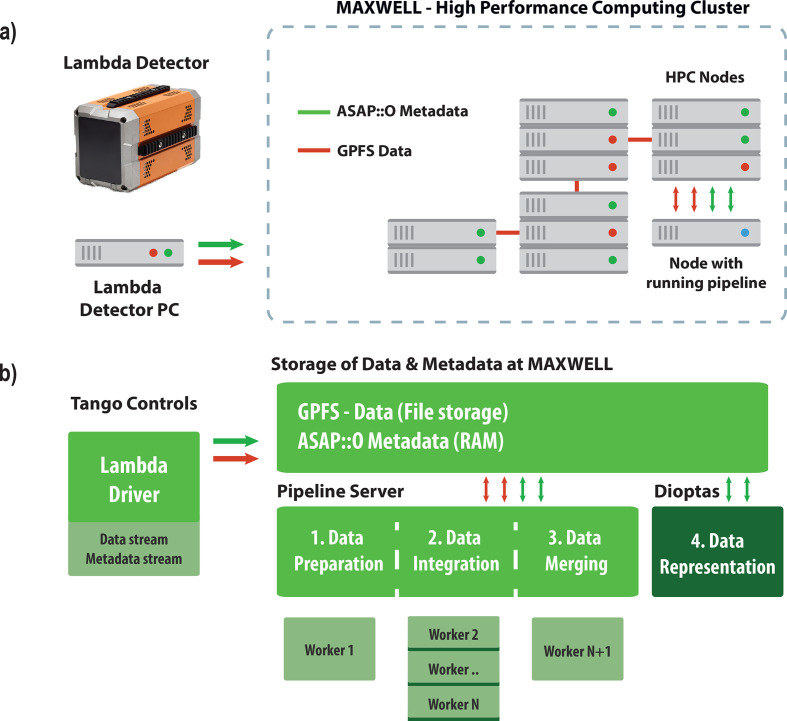

Figure 1.

The operation of the data processing pipeline. (a) Data acquisition with the Lambda 2M detector, during which a metadata stream is created and recorded within the distributed ASAP::O system. The data collected by the detector are stored as NeXus files, which are transferred to the GPFS storage within the HPC cluster. A separate processing node within the cluster is dynamically allocated for a specific beamtime session and hosts the pipeline process. (b) The driver of the Lambda detector writes the data as NeXus files and streams metadata containing their filenames via ASAP::O messages. This information can be accessed at the different stages of the pipeline, numbered from one to three. The pipeline reads a configuration file and allocates several processes, referred to as workers, which use multiple cores of the HPC node. Each worker performs a specific role: (1) data preparation, (2) integration using the pyFAI code (Ashiotis et al., 2015) or (3) data merging. The workers also coordinate with one another via ASAP::O metadata messages. The pipeline is designed to operate efficiently during data acquisition and saves time through automatic data processing. In the fourth and final step, the DIOPTAS software is used to view the processed data or to initiate another round of reintegration on an HPC node once the allocated pipeline node is no longer available.
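
The three worker stages described in the caption can be illustrated with a minimal sketch. It is not the pipeline's actual code: Python threads and in-memory queues stand in for the worker processes and the ASAP::O metadata messages, and the names `worker`, `prepare`, `integrate` and `run_pipeline`, as well as the message layout, are hypothetical. The integration step is a placeholder sum rather than a call to pyFAI.

```python
import queue
import threading

STOP = object()  # sentinel marking the end of the message stream


def worker(task, inbox, outbox):
    """Apply `task` to every message from `inbox` and forward the result."""
    while True:
        msg = inbox.get()
        if msg is STOP:
            outbox.put(STOP)  # propagate shutdown to the next stage
            return
        outbox.put(task(msg))


def prepare(msg):
    # Stage 1 (hypothetical): a real worker would open the NeXus file named
    # in the message; here a fake three-value "frame" is derived from the name.
    n = len(msg["filename"])
    return {"filename": msg["filename"], "frame": [n, n + 1, n + 2]}


def integrate(msg):
    # Stage 2 (placeholder): a real worker would integrate the frame with
    # pyFAI; here the frame values are simply summed.
    return {"filename": msg["filename"], "intensity": sum(msg["frame"])}


def run_pipeline(filenames):
    """Send filename messages through the three stages and return the merged result."""
    q_in, q_prepared, q_integrated = queue.Queue(), queue.Queue(), queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(prepare, q_in, q_prepared)),
        threading.Thread(target=worker, args=(integrate, q_prepared, q_integrated)),
    ]
    for t in threads:
        t.start()

    # The detector driver's role: announce each new file by name only.
    for name in filenames:
        q_in.put({"filename": name})
    q_in.put(STOP)

    # Stage 3: merge the per-file results into a single mapping.
    merged = {}
    while True:
        msg = q_integrated.get()
        if msg is STOP:
            break
        merged[msg["filename"]] = msg["intensity"]

    for t in threads:
        t.join()
    return merged
```

In this sketch only filenames and small result records travel between stages, which mirrors the caption's point that the workers exchange metadata messages while the detector data themselves remain in files on shared storage.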