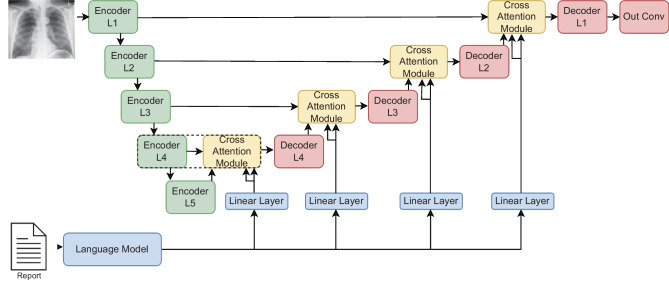

Fig. 1. ConTEXTual Net combines a U-Net and a transformer architecture. The encoder layers of the U-Net extract visual representations, while a pre-trained language model (bottom-left) extracts word-level language representations. The architecture is modular: any language model that produces word-level vector representations can be used. Cross-attention is then performed between the visual and language representations, and the decoder layers of the U-Net (top-right) predict the segmentation masks. The cross-attention module (dotted box) is detailed further in Fig. 2.
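The sketch below shows one plausible form of the fusion step: single-head cross-attention in NumPy, in which every pixel of an encoder feature map attends over the word vectors from the language model. Several assumptions apply, so it should not be read as the module in Fig. 2. Visual features supply the queries and word embeddings supply the keys and values. The projection matrices `w_q`, `w_k`, `w_v` are hypothetical (random here). The residual addition is also an assumption. The actual module may use multiple heads, normalization, or a different projection layout.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(visual, words, w_q, w_k, w_v):
    """Single-head cross-attention in which image pixels attend to word vectors.

    visual: (C, H, W) feature map from a U-Net encoder stage (assumed layout)
    words:  (T, D) word-level embeddings from a pre-trained language model
    w_q: (C, d), w_k: (D, d), w_v: (D, C) are hypothetical projection weights
    """
    c, h, w = visual.shape
    pixels = visual.reshape(c, h * w).T        # (H*W, C): one query per pixel
    q = pixels @ w_q                           # (H*W, d)
    k = words @ w_k                            # (T, d)
    v = words @ w_v                            # (T, C), projected back to C channels
    scores = q @ k.T / np.sqrt(q.shape[-1])    # (H*W, T) scaled dot products
    attn = softmax(scores, axis=-1)            # each pixel's weights over words sum to 1
    fused = pixels + attn @ v                  # residual connection (assumption)
    return fused.T.reshape(c, h, w), attn      # back to (C, H, W) for the decoder


# Usage with random features standing in for encoder and language-model outputs
rng = np.random.default_rng(0)
C, H, W, T, D, d = 8, 4, 4, 5, 16, 8
visual = rng.standard_normal((C, H, W))
words = rng.standard_normal((T, D))
out, attn = cross_attention(
    visual,
    words,
    rng.standard_normal((C, d)),
    rng.standard_normal((D, d)),
    rng.standard_normal((D, C)),
)
print(out.shape, attn.shape)  # (8, 4, 4) (16, 5)
```

The output keeps the encoder's (C, H, W) shape. Under these assumptions, the language-conditioned features can therefore be passed to the U-Net decoder in place of the original encoder features, and the choice of language model only affects `words` and the shape of `w_k` and `w_v`.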