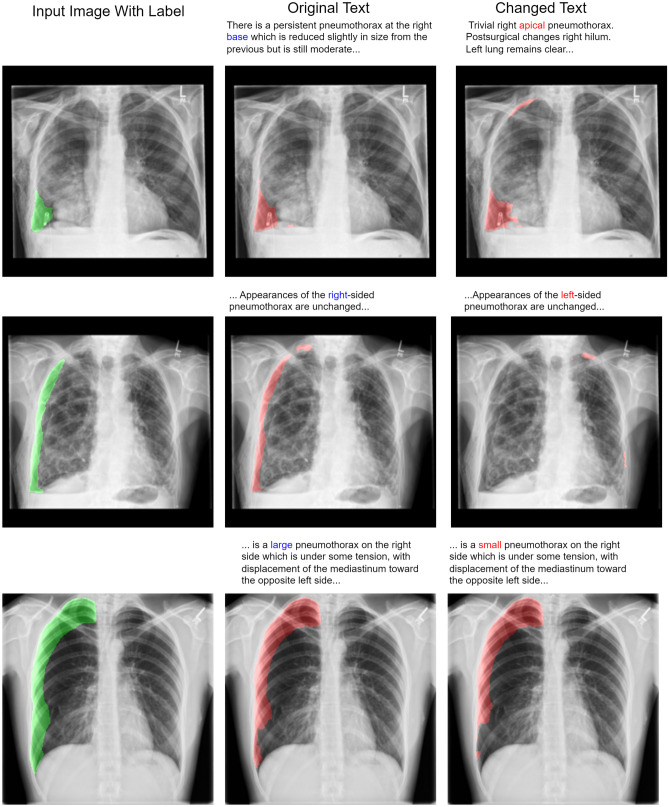

Fig. 4.

The same input image is fed into the multimodal model together with different text. In the top row, an incorrect report describing an apical pneumothorax is supplied with the image, demonstrating that location descriptors such as “apical” and “base” carry information relevant to segmentation. In the middle row, the term “right” in the report is changed to “left”, illustrating the model’s sensitivity to single-word changes. In the bottom row, changing the term “large” to “small” reduced the number of segmented pixels by 10%. Note that “left” and “right” refer to the patient’s left and right.
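The 10% figure in the bottom row is a relative change in the count of segmented pixels between two predictions on the same image. The sketch below illustrates only that arithmetic. It assumes binary masks (1 = segmented, 0 = background) for the original and the word-edited report. The helper names and the toy masks are hypothetical and are not the model's actual outputs.

```python
from typing import Sequence

# Assumed representation: a binary mask as rows of 0/1 values (1 = segmented pixel).
Mask = Sequence[Sequence[int]]


def count_foreground(mask: Mask) -> int:
    """Count the segmented (non-zero) pixels in a binary mask."""
    return sum(1 for row in mask for px in row if px)


def relative_pixel_change(reference: Mask, perturbed: Mask) -> float:
    """Relative change in segmented pixels; negative means the perturbed mask is smaller."""
    n_ref = count_foreground(reference)
    if n_ref == 0:
        raise ValueError("reference mask contains no segmented pixels")
    return (count_foreground(perturbed) - n_ref) / n_ref


# Hypothetical 10x10 toy masks, not taken from the paper:
# the "large" prediction covers 50 pixels, the "small" prediction covers 45.
mask_large = [[1] * 10 for _ in range(5)] + [[0] * 10 for _ in range(5)]
mask_small = [row[:] for row in mask_large]
mask_small[4] = [1] * 5 + [0] * 5  # drop 5 pixels from the last foreground row

print(f"{relative_pixel_change(mask_large, mask_small):+.0%}")  # -10%
```

Expressing the change relative to the original prediction makes the effect of a single-word edit comparable across images with different lesion sizes.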