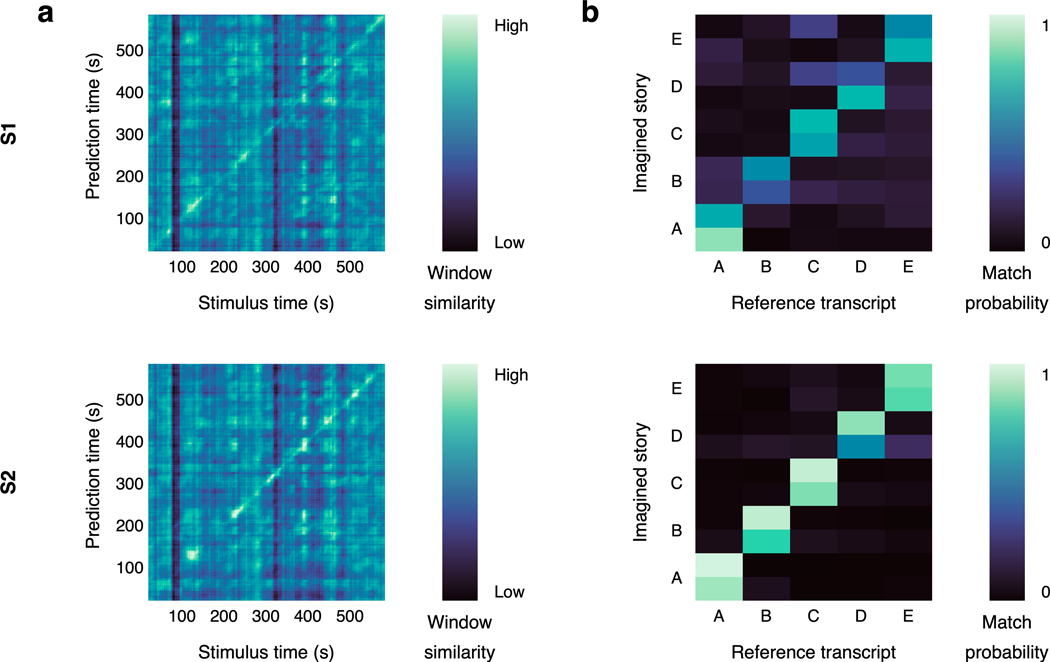

Extended Data Fig. 2. Perceived and imagined speech identification performance.

Language decoders were trained for subjects S1 and S2 on fMRI responses recorded while the subjects listened to narrative stories. (a) The decoders were evaluated on single-trial fMRI responses recorded while the subjects listened to the perceived speech test story. The color at $(i, j)$ reflects the BERTScore similarity between the $i$th second of the decoder prediction and the $j$th second of the actual stimulus. Identification accuracy was significantly higher than expected by chance (one-sided permutation test). Corresponding results for subject S3 are shown in Figure 1f in the main text. (b) The decoders were evaluated on single-trial fMRI responses recorded while the subjects imagined telling five 1-minute test stories twice. Decoder predictions were compared to reference transcripts that were separately recorded from the same subjects. Each row corresponds to a scan, and the colors reflect the similarities between the decoder prediction and all five reference transcripts. For each scan, the decoder prediction was most similar to the reference transcript of the correct story (100% identification accuracy). Corresponding results for subject S3 are shown in Figure 3a in the main text.
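As an illustration of how the identification accuracy in panel (b) can be read off a scan-by-story similarity matrix, the following minimal sketch takes the row-wise maximum and compares it with the correct story index. The function names, the toy similarity values, and the label-shuffling null are assumptions made for illustration only; they are not the authors' code, data, or exact permutation procedure.

```python
import random


def identification_accuracy(similarity, correct):
    """Fraction of scans whose most similar reference is the correct story.

    similarity: list of rows, one per scan; each row holds the similarity
                between that scan's decoder prediction and every reference.
    correct:    list with the index of the correct story for each scan.
    """
    hits = 0
    for row, target in zip(similarity, correct):
        # Index of the reference transcript with the highest similarity.
        predicted = max(range(len(row)), key=row.__getitem__)
        hits += predicted == target
    return hits / len(correct)


def permutation_p_value(similarity, correct, n_perm=1000, seed=0):
    """One-sided permutation p-value obtained by shuffling story labels.

    This generic null is an assumption of the sketch, not the procedure
    described in the source.
    """
    rng = random.Random(seed)
    observed = identification_accuracy(similarity, correct)
    labels = list(correct)
    at_least_as_good = 0
    for _ in range(n_perm):
        rng.shuffle(labels)
        if identification_accuracy(similarity, labels) >= observed:
            at_least_as_good += 1
    # Add-one correction so the estimate is never exactly zero.
    return (at_least_as_good + 1) / (n_perm + 1)


# Toy data (synthetic, not from the paper): five stories, each imagined
# twice, giving ten scans and five reference transcripts.
correct = [0, 1, 2, 3, 4, 0, 1, 2, 3, 4]
similarity = [[0.9 if j == c else 0.1 for j in range(5)] for c in correct]

accuracy = identification_accuracy(similarity, correct)
p_value = permutation_p_value(similarity, correct)
```

With these toy values every row peaks at the correct story, so `accuracy` is 1.0, matching the 100% identification accuracy described for panel (b). A real analysis would fill `similarity` with measured similarity scores rather than the placeholder values used here.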