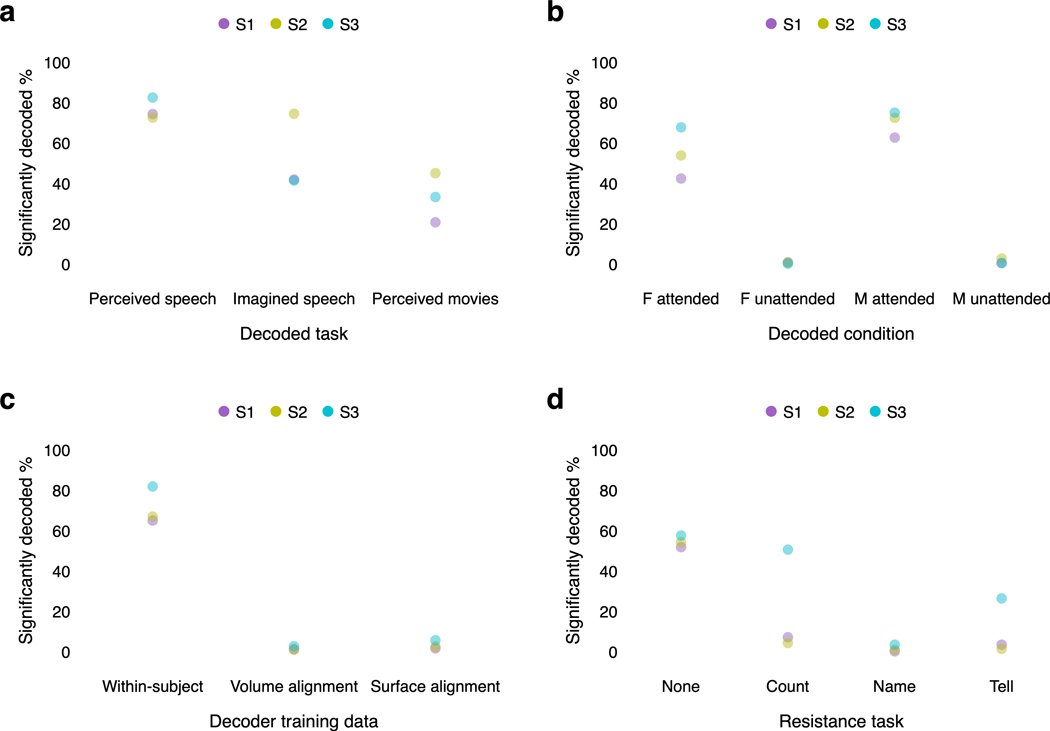

Extended Data Fig. 5. Comparison of decoding performance across experiments.

Decoder predictions from different experiments were compared based on the fraction of significantly decoded time-points under the BERTScore metric. The fraction of significantly decoded time-points was used because it does not depend on the length of the stimuli. (a) The decoder successfully recovered 72–82% of time-points during perceived speech, 41–74% of time-points during imagined speech, and 21–45% of time-points during perceived movies. (b) During a multi-speaker stimulus, the decoder successfully recovered 42–68% of time-points told by the female speaker when subjects attended to the female speaker, 0–1% of time-points told by the female speaker when subjects attended to the male speaker, 63–75% of time-points told by the male speaker when subjects attended to the male speaker, and 0–3% of time-points told by the male speaker when subjects attended to the female speaker. (c) During a perceived story, within-subject decoders successfully recovered 65–82% of time-points, volumetric cross-subject decoders successfully recovered 1–2% of time-points, and surface-based cross-subject decoders successfully recovered 1–5% of time-points. (d) During a perceived story, within-subject decoders successfully recovered 52–57% of time-points when subjects passively listened, 4–50% of time-points when subjects resisted by counting by sevens, 0–3% of time-points when subjects resisted by naming animals, and 1–26% of time-points when subjects resisted by imagining a different story.
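
To illustrate how a "fraction of significantly decoded time-points" could be computed, the sketch below assumes that each time-point has an observed similarity score (for example, a BERTScore value) and a set of scores from null predictions. The caption does not specify the significance procedure, so the empirical one-sided p-values, the Benjamini-Hochberg correction, the threshold `alpha`, and the function name `fraction_significant` are all assumptions made for illustration, not the authors' method.

```python
import numpy as np

def fraction_significant(scores, null_scores, alpha=0.05):
    """Fraction of time-points whose score is significantly above a null.

    scores:      shape (T,), observed per-time-point similarity scores.
    null_scores: shape (N, T), scores of N null predictions per time-point.
    alpha:       assumed false discovery rate level (hypothetical default).
    """
    scores = np.asarray(scores, dtype=float)
    null_scores = np.asarray(null_scores, dtype=float)
    n_null, n_time = null_scores.shape

    # Empirical one-sided p-value per time-point, with a +1 correction
    # so that no p-value is exactly zero.
    p = (1 + (null_scores >= scores).sum(axis=0)) / (1 + n_null)

    # Benjamini-Hochberg (assumed correction): find the largest rank k
    # such that the k-th smallest p-value is <= alpha * k / T.
    ranked = np.sort(p)
    thresholds = alpha * np.arange(1, n_time + 1) / n_time
    passed = np.nonzero(ranked <= thresholds)[0]
    n_sig = passed.max() + 1 if passed.size else 0

    # Dividing by the number of time-points normalizes for stimulus length.
    return n_sig / n_time

# Toy usage: two well-decoded time-points and two at chance level.
toy_null = np.zeros((99, 4))
toy_fraction = fraction_significant([10, 10, 0, 0], toy_null)
```

Because the count of significant time-points is divided by the total number of time-points, the result is a proportion that can be compared between stimuli of different durations, which is the property the caption relies on.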