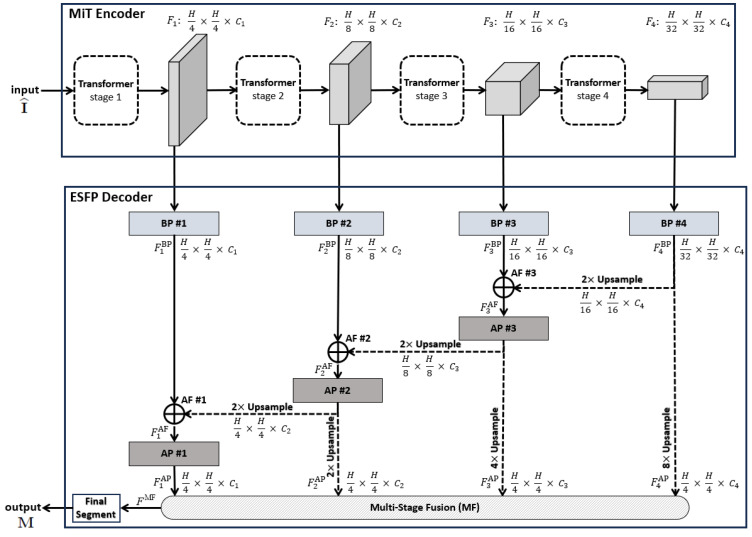

Figure 1.

Top-level ESFPNet architecture. The input is a video frame, while the output is a segmented frame. The MiT encoder of Xie et al. serves as the network backbone [24], while the Efficient Stage-Wise Feature Pyramid (ESFP) serves as the decoder. The four layers constituting the ESFP are (1) the basic prediction (BP) layer, given by blocks BP #1 through BP #4; (2) the aggregating fusion (AF) layer, defined by AF #1 through AF #3; (3) the aggregating prediction (AP) layer, given by AP #1 through AP #3; and (4) the multi-stage fusion (MF) layer. The “Final Segment” block produces the final segmented video frame. The symbols attached to each network block's output denote the feature tensor that block produces, while the quoted quantities beside them specify the feature tensor dimensions.
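To make the data flow through the four ESFP layers concrete, the following shape-only sketch traces (channels, height, width) triples through a decoder of this form. It is an illustration under stated assumptions, not the authors' implementation. The caption confirms only the block counts (four BP, three AF, three AP, one MF). The 352 × 352 input size, the stage strides and channel widths of the encoder, the wiring between blocks, the single-channel output, and all function names are assumptions introduced here. Blocks are indexed by encoder stage, which may not match the figure's numbering.

```python
# Shape-only sketch of an ESFP-style decoder; no tensors are computed.
# ASSUMPTIONS (not stated in the caption): 352x352 input, encoder stage
# strides 4/8/16/32 with channel widths 64/128/320/512, the wiring between
# blocks, and a 1-channel output. All names here are hypothetical.

def upsample(shape, factor):
    """Scale the spatial size of a (C, H, W) shape by an integer factor."""
    c, h, w = shape
    return (c, h * factor, w * factor)

def concat(*shapes):
    """Channel-wise concatenation; spatial sizes must already match."""
    assert len({s[1:] for s in shapes}) == 1, "spatial sizes must match"
    return (sum(s[0] for s in shapes), *shapes[0][1:])

def project(shape, out_channels):
    """Stand-in for a per-pixel linear layer: only the channel count changes."""
    return (out_channels, shape[1], shape[2])

def esfp_shapes(height=352, width=352,
                channels=(64, 128, 320, 512), strides=(4, 8, 16, 32)):
    # Encoder: one feature map per backbone stage.
    stages = [(c, height // s, width // s) for c, s in zip(channels, strides)]

    # BP layer: one prediction block per stage (four in total).
    bp = [project(s, s[0]) for s in stages]

    # AF + AP layers: walk from deep to shallow, fusing each shallower BP
    # output with the upsampled result of the stage below it (three of each).
    ap = {}
    deeper = bp[3]
    for i in (2, 1, 0):
        fused = project(concat(bp[i], upsample(deeper, 2)), channels[i])  # AF
        ap[i] = project(fused, channels[i])                               # AP
        deeper = ap[i]

    # MF layer: bring every branch to the finest resolution and fuse.
    mf_in = concat(ap[0], upsample(ap[1], 2), upsample(ap[2], 4),
                   upsample(bp[3], 8))
    mf = project(mf_in, 1)

    # "Final Segment": assumed here to resize the map to the input size.
    return {"bp": bp, "ap": [ap[0], ap[1], ap[2]], "mf_in": mf_in,
            "mf": mf, "final": upsample(mf, strides[0])}

shapes = esfp_shapes()
print(shapes["mf_in"])  # (1024, 88, 88)
print(shapes["final"])  # (1, 352, 352)
```

Under these assumptions, the deepest feature map reaches the MF layer directly, while the three shallower stages reach it only after fusion with deeper context, so all four branches meet at one quarter of the input resolution before the final resize.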