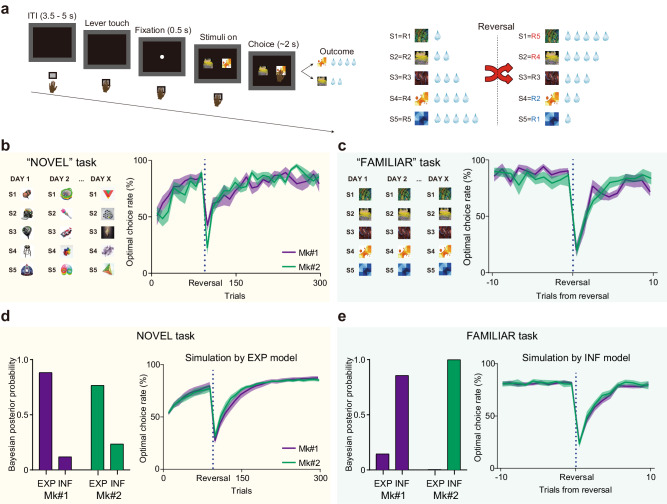

Fig. 1. Experience- and inference-based value updating in multi-reward reversal learning tasks.

a Sequence of a trial (left) and the reversal rule for stimulus-reward associations (right). S1-S5 denote the identity of each stimulus and R1-R5 denote the amount of reward (1 to 5 drops of juice) associated with each stimulus. Examples of stimulus sets and baseline performance for the “NOVEL” (b) and “FAMILIAR” (c) tasks. Average performance is shown for each monkey (N = 8 and 7 sessions for the NOVEL and FAMILIAR tasks, respectively). Data for the FAMILIAR task were truncated to show the trials around the reversals and were averaged across reversals. Bayesian posterior probabilities of the “EXP” and “INF” models given the behavioral data in each task (left), and the behavioral simulation by the model with the higher posterior probability for each monkey (right): the EXP model for the NOVEL task (d) and the INF model for the FAMILIAR task (e). Solid lines and shaded areas represent the mean and s.e.m., respectively.