Abstract

The ethical decision making of researchers has historically been studied from an individualistic perspective. However, researchers rarely work alone, and they typically experience ethical dilemmas in a team context. In this mixed-methods study, 67 scientists and engineers working at a public R1 (very high research activity) university in the United States responded to a survey that asked whether they had experienced or observed an ethical dilemma while working in a research team. Among these, 30 respondents agreed to be interviewed about their experiences using a think-aloud protocol. A total of 40 unique ethical incidents were collected across these interviews. Qualitative data from interview transcripts were then systematically content-analyzed by multiple independent judges to quantify the overall ethicality of team decisions as well as several team characteristics, decision processes, and situational factors. The results demonstrated that team formalistic orientation, ethical championing, and the use of ethical decision strategies were all positively related to the overall ethicality of team decisions. Additionally, the relationship between ethical championing and overall team decision ethicality was moderated by psychological safety and moral intensity. Implications for future research and practice are discussed.

Keywords: Research ethics, Ethical decision making, Teams, Interviews, Content analysis

The integrity of the scientific enterprise depends a great deal on faith: faith that researchers will “play by the rules.” In other words, it is commonly assumed that researchers will largely follow best practices and comply with the ethical principles and standards of their profession, and that the scientific enterprise will self-correct over time as necessary (Watts et al., 2017). When this trust is jeopardized (such as when researchers are caught fabricating or falsifying data, plagiarizing others’ work, failing to disclose conflicts of interest, or engaging in a range of questionable research practices), the trust of the public as well as members of those professions erodes (e.g., Kakuk, 2009; Sox & Rennie, 2006; Stroebe et al., 2012). In short, the integrity of the scientific enterprise depends upon the ethical decision making of researchers.

Historically, the predominant theoretical lens used by scholars to understand the factors that influence ethical decision making is the “bad apple” model (Mulhearn et al., 2017; Treviño & Youngblood, 1990). That is, poor ethical decisions are the result of individual differences, or traits, unique to a person’s psychological makeup (e.g., narcissism, Machiavellianism). Although the empirical ethics literature, broadly speaking, supports the link between several individual traits and ethical decision making (Borkowski & Ugras, 1998; Pan & Sparks, 2012), these relationships tend to be small in statistical terms, suggesting that non-individual factors may play a more important role. More recently, scholars focused on research integrity specifically have expanded their models to incorporate institutional and even cross-cultural perspectives on ethical decision making (e.g., Geller et al., 2010; Valkenburg et al., 2021). However, there remains an important gap in our knowledge at one level of fundamental importance: that of the research group, or team. Thus, here we introduce a teams-based approach to understanding researcher ethical decision making.

For over half a century, research on the psychology of groups and teams has pointed to a consistent conclusion—people think and behave differently when they work as part of a team compared to when they work alone (Kozlowski & Ilgen, 2006). Indeed, it is now well-established that when people operate in a team, performance can be both improved and impaired by a number of factors (Watts et al., 2022). For example, meta-analytic evidence demonstrates that teamwork, on the whole, tends to be associated with more positive worker attitudes and higher levels of work performance (Richter et al., 2011; Schmutz et al., 2019). At the same time, teamwork has also been associated with several unique process losses—such as interpersonal conflict (De Wit et al., 2012), ingroup favoritism bias (Balliet et al., 2014), and discussion bias in favor of shared (vs. unique) information (Lu et al., 2012), to name a few. Despite these well-known effects of facilitating and inhibiting factors in team settings from the social and organizational psychology literatures, the literature on researcher ethical decision making has largely neglected the team-based perspective.

The purpose of this research is to introduce a team-based perspective to the literature on research ethics and offer an initial exploration of the unique factors related to ethical decision making in research teams. This research is organized as follows. First, the emerging literature on team-based ethical decision making is summarized with an eye toward key factors that might facilitate or hinder ethical decision making in research teams. Second, these factors are organized and presented as a theoretical model based on traditional input-process-output frameworks of team processes. Third, we present the results of a mixed-methods study of ethical decision making in research teams that involved surveying and interviewing scientists and engineers about ethical incidents they had observed or experienced in a research team. This study also involved content analyzing interview transcripts to allow for quantitative modeling of the relationships appearing in the theoretical model. This theoretical model, with accompanying empirical findings, provides a roadmap for understanding critical team-based factors associated with ethical decision making in research teams.

Team-Based Ethical Decision Making

Ethical decision making involves a set of complex processes in which individuals or groups make sense of, and respond to, moral dilemmas (Mumford et al., 2008). When ethical decision making occurs in team settings where two or more group members work interdependently to achieve a shared goal, we refer to this as team-based ethical decision making. As previously stated, the team-based perspective on ethical decision making is in its infancy. For example, in MacDougall and colleagues’ comprehensive review of theory progression in the ethical decision-making literature (MacDougall et al., 2014), they were unable to identify any theories that specified ethical decision making as a team-level phenomenon, noting this subject as a “future direction.”

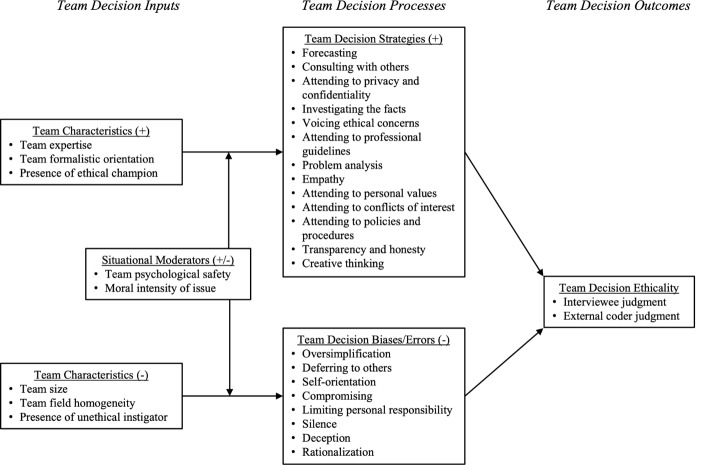

This lack of team-level theory persists despite the publication of a handful of empirical studies on group and team ethical decision making. Findings from this empirical work are briefly summarized next, as these findings provided the material for developing our initial theoretical model of team-based ethical decision making. This empirical literature is organized using an input-process-output framework, where the primary output of interest is overall team decision ethicality. We conceptualize overall team decision ethicality as a complex, multifaceted construct (Beu et al., 2003), where team decisions may be judged as ethical based on the extent to which they comply with relevant standards and regulations, conform to field best practices and norms rooted in professional consensus, and avoid negative consequences such as harming others (Watts et al., 2021). The “inputs” category focuses on team characteristics and situational factors that provide the context for team-based ethical decision making. Finally, the “processes” category includes team decision strategies and biases that arise from team characteristics and situational factors and ultimately influence overall team decision ethicality. Figure 1 presents our theoretical model of team-based ethical decision making.

Fig. 1.

Theoretical model of facilitating (+) and inhibiting (-) factors in team-based ethical decision making

Team Decision Inputs

Facilitating Characteristics

We identified three facilitating team characteristics: team expertise, team formalistic orientation, and the presence (vs. absence) of an ethical champion on the team. Prior research based on business ethics simulations demonstrates that teams composed of older or more experienced members tend to generate more ethical decisions than teams composed of members with less expertise (Hunt & Jennings, 1997; Jennings et al., 1996). Formalistic orientation refers to the degree to which members tend to adopt a deontological (i.e., rule-focused) perspective versus a utilitarian (i.e., consequences-focused) perspective when solving ethical dilemmas, and has been shown to be positively related to team ethical decision making (Pearsall & Ellis, 2011). The presence of an ethical champion on the team has also been found to be positively related to team ethical awareness, communication, and decision making (Chen et al., 2020). An ethical champion is an individual who frequently communicates about the importance of adhering to ethical principles and role models ethical behavior.

Inhibiting Characteristics

The three inhibiting team characteristics investigated here include team size, team field homogeneity, and the presence (vs. absence) of an unethical instigator. As the number of members on a team increases (e.g., more than 5–7 members), personal accountability for any decisions tends to diminish, resulting in poorer ethical communications and decision making when teams are faced with ethical dilemmas (Armstrong et al., 2004). Additionally, when all members come from the same field or professional background, the lack of diversity in perspectives may constrain the team’s ability to detect problematic behavior or decision patterns (King, 2002), especially patterns around questionable research practices that are commonplace in that field. Finally, we predicted that, just as ethical champions facilitate team ethical decision making, the presence of unethical instigators (i.e., members who generate unethical solutions, vocally oppose ethical courses of action, and enable the team to rationalize unethical decisions) is likely to inhibit team ethical decision processes and outputs (Bohns et al., 2014).

Team Decision Processes

Facilitating Processes

Team decision strategies refer to cognitive resources or behavioral capabilities executed by one or more team members that facilitate team ethical decision making (Thiel et al., 2012). For example, teams tend to make more ethical decisions when they communicate frequently about the downstream consequences of their decisions (i.e., forecasting), consult with others (including knowledgeable external stakeholders) about potential courses of action (i.e., consultation and collaboration), and demonstrate concern for others’ well-being (i.e., empathy). There is no established team-specific taxonomy of ethical decision strategies. However, we identified 13 such strategies that might be relevant to team ethical decision making based on the ethical sensemaking literature (Mumford et al., 2008). The complete list of team decision strategies identified is detailed in the method.

Inhibiting Processes

Team decision biases are cognitive errors, or information distortions, enacted by one or more team members that disrupt team ethical decision making (Zeni et al., 2016). For example, when team members shift responsibility to others, compromise on their professional values or standards, or rationalize potential unethical decisions as necessary for “the good of the group”, these are all examples of cognitive biases that might inhibit team ethical decision making. Given the absence of a team-specific taxonomy of decision biases related to ethics, we once again drew on the broader decision bias literature (e.g., Medeiros et al., 2014) to identify 8 potential team decision biases. The complete list is detailed in the method.

Situational Moderators

Team ethical decision making is not merely a function of team characteristics and processes, but also the environments in which these teams are operating. Two situational factors have been examined in prior research on team ethical decision making, including team psychological safety and moral intensity. Team psychological safety refers to a shared belief among members that it is safe for members to take interpersonal risks (Edmondson, 1999), and voicing ethical concerns is one example of an interpersonal risk (Chen & Treviño, 2023). Although team psychological safety is generally held to exhibit a positive influence on team attitudes and performance (Edmondson & Lei, 2014), it has also been shown to moderate the influence of team ethical orientation, such that utilitarian-oriented teams exhibit increased unethical behavior and decisions under conditions of high psychological safety (Pearsall & Ellis, 2011).

A second situational factor, moral intensity, refers to six elements of a situation that can amplify the perceived moral stakes of a dilemma. These elements include the magnitude of potential consequences to victims (or benefits to beneficiaries), the degree of social consensus around the morality of a decision, the probability that a given act will actually result in the harm (or benefits) imagined, the lag in time between an action and its consequences, the physical or psychological closeness between an actor and those acted upon, and the number of potential victims (or beneficiaries) impacted (Jones, 1991). Moral intensity is generally held to facilitate ethical decision making because it stimulates the recognition of ethical issues and motivates ethical reasoning (Valentine & Hollingsworth, 2012). At the same time, situations involving high moral intensity can place heavy psychological demands on teams. For example, ethical champions may be particularly important for buffering team resources under high-stakes conditions (Zheng et al., 2015).

Research Questions

Based on the literature reviewed above, which is summarized in Fig. 1, the following four research questions guided our investigation:

1. How prevalent are ethical incidents in team research settings, compared to individual research settings?

2. How are team characteristics and situational factors related to team decision processes and outputs in research settings?

3. (a) What are the most prevalent decision processes (i.e., strategies and biases) observed in team research settings, and (b) how do these processes relate to team decision outputs?

4. How might situational factors moderate the influence of team characteristics on team decision processes and outputs?

Method

Sample

Research faculty and graduate students in the colleges of science and engineering at an R1 (very high research activity) university were invited via email to participate in a 5-minute online screening survey. The screening survey presented informed consent information, collected demographic data, and invited volunteers to sign up for a virtual interview opportunity in exchange for a $25 electronic Visa gift card. The university’s institutional review board (IRB) reviewed and approved all study procedures.

A total of 67 researchers completed the screening survey. Approximately 42% of respondents were women. The most common racial/ethnic group represented was Asian (63%), followed by White (27%), Black/African American (6%), and Hispanic (3%). While many participants were graduate students (77%), the sample also included three full professors, two assistant professors, two post-doctoral researchers, and two professional researchers in applied roles. Ages ranged from 21 to 72 (mean of 30), and the average participant reported having just over 4 years of research experience. The most common fields represented included computer science (27%), engineering (21%), biology (9%), chemistry (7%), psychology (7%), physics (5%), and mathematics (5%). About half (51%) indicated that they primarily conducted research in a team, and roughly a third (31%) indicated that they had observed or experienced an ethical/unethical situation while working on research (approximately 34% were unsure and 27% reported they had not).

Everyone who completed the survey had the option to sign up for a virtual interview timeslot. A total of 30 researchers completed virtual interviews, and these interviewees described a total of 40 unique ethical dilemmas.

Interview Procedures

Interviews lasted approximately 30 min. Each interview was conducted virtually with one member of our research team via Microsoft Teams where audio responses were recorded and automatically transcribed. For the one participant who was uncomfortable with being audio-recorded, the interviewer took detailed notes instead that served as the transcript. Video was not recorded to help participants feel more comfortable and protect their privacy.

Each interview followed a semi-structured think-aloud protocol. Think-aloud interview protocols are useful for probing cognitive processes and decisions that otherwise might be difficult or impossible to observe (van Someren et al., 1994). Further, think-aloud protocols have been used in prior research to explore the cognitive processes used by individual researchers in ethical decision making (Brock et al., 2008). While our protocol was structured based on a series of prompts, we allowed for some flexibility in terms of the order in which these prompts were asked. This helped to reduce potential redundancies given that participants sometimes provided rich information corresponding to prompts that had not yet been asked.

Following brief introductions, the interviewer defined the concept of an ethical dilemma and asked the participant to describe a situation in which they “faced an ethical dilemma in the context of working in a research team.” Once a participant identified such a situation, the interviewer guided the participant through a series of questions designed to probe for details about the situation/context (e.g., time, setting, place), any actions taken or decisions made by team members, and the consequences or outcomes associated with those actions or decisions. Additionally, participants were asked a series of questions about the general characteristics of their research team at the time of the incident (e.g., experience level and field of members, whether there was a clear leader on the team, etc.). Finally, if time allowed, participants were permitted to describe additional incidents.

Data Coding Procedures

Interview transcripts were subjected to content analysis using a coding database developed by our research team following a review of the literature on ethical decision making in team settings. The coding database contained a unique row for each of the 40 incidents and columns for a range of variables, described in more detail below. Each variable was operationally defined to ensure that coders had a shared understanding of each construct and scale.

The three coders consisted of two assistant professors and one doctoral student in industrial and organizational psychology. The coding team reviewed the coding database and met twice to practice scoring transcripts from three incidents together. Once they determined that adequate consensus had been achieved, each remaining incident was randomly assigned to two of the three coders who proceeded to code each incident independently over a period of 8 weeks.
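The assignment step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the coder labels, the incident numbering (1 to 40, with the first three treated as the practice incidents), and the fixed random seed are hypothetical, and the source does not describe how the random assignment was actually implemented.

```python
import random

# Hypothetical labels; the source reports three coders but does not name them.
CODERS = ["coder_A", "coder_B", "coder_C"]
N_INCIDENTS = 40   # total incidents reported in the study
N_PRACTICE = 3     # incidents scored jointly during practice (assumed to be 1-3)


def assign_coders(incident_ids, coders, k=2, seed=0):
    """Randomly assign k distinct coders to each incident."""
    rng = random.Random(seed)  # seeded only so the sketch is reproducible
    return {i: tuple(sorted(rng.sample(coders, k))) for i in incident_ids}


# Remaining incidents after the practice round (assumed numbering 4..40).
remaining = list(range(N_PRACTICE + 1, N_INCIDENTS + 1))
assignment = assign_coders(remaining, CODERS)
```

Because `random.sample` draws without replacement, each incident always receives two different coders, which is what makes the pairwise agreement estimates possible.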

Because each incident was coded by at least two coders, interrater agreement coefficients could be estimated. For binary variables (e.g., yes vs. no; present vs. absent), a raw percentage of agreement was estimated. This was considered preferable to Cohen’s kappa given that kappa can provide severely biased estimates of agreement depending on base rates (Byrt et al., 1993). For non-binary variables (e.g., count and Likert scales), the rwg interrater agreement coefficient was estimated. Both the raw percentage and rwg can range from 0 to 1 and can be interpreted similarly. Because all variables demonstrated interrater agreement coefficients of 0.70 or above, we determined that a sufficient level of consensus had been achieved to render the variables interpretable (LeBreton & Senter, 2008). The operational definitions and interrater agreement coefficients corresponding to each variable are presented next.
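To make these agreement indices concrete, the following Python sketch computes raw percentage agreement, Cohen’s kappa, and a single-item rwg. Several choices here are assumptions rather than details reported in the source: the example ratings are invented, rwg uses a uniform (rectangular) null distribution with expected variance (A² − 1)/12 for A response options, the observed variance is the sample variance, and negative rwg values are truncated to 0.

```python
from statistics import variance


def percent_agreement(a, b):
    """Proportion of incidents on which two coders gave the same code."""
    if len(a) != len(b) or not a:
        raise ValueError("rating lists must be non-empty and equal in length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Chance-corrected agreement; sensitive to skewed base rates."""
    n = len(a)
    p_o = percent_agreement(a, b)
    p_e = sum((a.count(c) / n) * (b.count(c) / n) for c in set(a) | set(b))
    return (p_o - p_e) / (1 - p_e)


def rwg(ratings, n_options):
    """Single-item rwg against a uniform null (assumed), truncated at 0."""
    expected_var = (n_options ** 2 - 1) / 12
    return max(0.0, 1 - variance(ratings) / expected_var)


# Invented binary codes with a skewed base rate (almost everything coded 1).
coder_1 = [1] * 18 + [0, 1]
coder_2 = [1] * 18 + [1, 0]
agreement = percent_agreement(coder_1, coder_2)
kappa = cohens_kappa(coder_1, coder_2)

# Invented ratings by two coders on a three-point scale.
rwg_identical = rwg([3, 3], n_options=3)
rwg_adjacent = rwg([2, 3], n_options=3)
rwg_opposite = rwg([1, 3], n_options=3)
```

In this invented example the two coders agree on 18 of 20 incidents, yet kappa falls slightly below zero because nearly all codes are 1, which is the base-rate sensitivity that motivates reporting raw agreement for binary variables.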

Variables

Dilemma Background Characteristics

Three dilemma background characteristics were coded to capture general information about the nature and setting of the dilemmas reported by interviewees. First, coders used a “1” (yes) or “0” (no) score to indicate if there was a team component to the dilemma (i.e., the dilemma involved interactions among two or more members of a team; interrater agreement = 0.86). Second, coders tracked whether the dilemma occurred at the interviewee’s current (“1”) or a former (“0”) institution (interrater agreement = 0.86).

Third, the nine professional ethics guidelines provided by the U.S. Office of Research Integrity (ORI) were used to code for the subject area of each ethical dilemma (Steneck, 2007). These guidelines were developed with the intent of universality. That is, the guidelines encompass a broad range of ethical issues faced by researchers across various fields and professions. Coders used a “1” (relevant) or “0” (irrelevant) code for each guideline to indicate the primary subject area for each ethical dilemma. Coders were permitted to score multiple subject areas as relevant when applicable. The interrater agreement coefficients for the nine subject areas ranged from 0.74 to 0.92 (see Table 1).

Table 1.

Prevalence and interrater agreement for dilemma background characteristics

| Variables | Frequency | Percentage | Interrater agreement |
|---|---|---|---|
| Team component to dilemma | 35 | 87.5 | 0.86 |
| Occurred at current (vs. former) institution | 17 | 42.5 | 0.86 |
| Subject Area of Ethical Dilemma | | | |
| Research misconduct (fabrication, falsification, plagiarism) | 30 | 75 | 0.74 |
| Protecting human subjects (IRB, consent, harm, etc.) | 22 | 55 | 0.88 |
| Welfare of lab animals (IACUC, harm, etc.) | 0 | 0 | 0.88 |
| Conflicts of interest | 16 | 40 | 0.92 |
| Data management practices | 13 | 32.5 | 0.76 |
| Mentor and trainee responsibilities | 18 | 45 | 0.79 |
| Collaborative research | 21 | 52.5 | 0.91 |
| Authorship and publication practices | 14 | 35 | 0.88 |
| Peer Review | 5 | 12.5 | 0.88 |

Note n = 40 ethical dilemmas; counts do not add up to 40 and percentages do not add up to 100% because coders were permitted to select multiple subject areas, when applicable, for each dilemma
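As a worked check on Table 1, the short Python sketch below converts the reported frequencies into percentages of the 40 dilemmas. The dictionary keys are shortened labels chosen for this illustration; the counts are taken directly from the table.

```python
N_DILEMMAS = 40

# Frequencies as reported in Table 1 (labels shortened for illustration).
background_counts = {
    "Team component to dilemma": 35,
    "Occurred at current institution": 17,
}
subject_area_counts = {
    "Research misconduct": 30,
    "Protecting human subjects": 22,
    "Welfare of lab animals": 0,
    "Conflicts of interest": 16,
    "Data management practices": 13,
    "Mentor and trainee responsibilities": 18,
    "Collaborative research": 21,
    "Authorship and publication practices": 14,
    "Peer review": 5,
}


def to_percentages(counts, n):
    """Express each count as a percentage of n dilemmas."""
    return {label: 100 * count / n for label, count in counts.items()}


background_pct = to_percentages(background_counts, N_DILEMMAS)
subject_pct = to_percentages(subject_area_counts, N_DILEMMAS)

# Dilemmas at a former institution are the complement of the "current" count.
former_pct = 100 * (N_DILEMMAS - background_counts["Occurred at current institution"]) / N_DILEMMAS

# Multi-label coding: the subject-area counts sum to more than 40.
total_subject_codes = sum(subject_area_counts.values())
codes_per_dilemma = total_subject_codes / N_DILEMMAS
```

The subject-area codes total 139 across 40 dilemmas, or roughly 3.5 subject areas per dilemma, which is why the percentages in the table exceed 100% when summed.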

Team Characteristics

Eight team characteristics were coded, specifically with respect to the team described as being involved in each ethical dilemma. Team size was coded by counting the total number of members on the research team involved in the dilemma. A three-point Likert scale was used to score team expertise (1 = low, 2 = moderate/mixed, 3 = high), field heterogeneity (1 = mostly homogenous, 2 = mixed, 3 = mostly heterogenous), formalistic orientation (1 = utilitarian orientation, 2 = mixed orientation, 3 = formalistic orientation), moral intensity (1 = low, 2 = moderate, 3 = high), and psychological safety (1 = low, 2 = moderate, 3 = high). Finally, whether there was a clear unethical instigator or ethical champion on the team was coded using a “1” (yes) or “0” (no) scale. The interrater agreement coefficients for the eight team characteristics variables ranged from 0.70 to 0.91 (see Table 2).

Table 2.

Definitions, descriptives, and agreement statistics for team decision outcomes and inputs

| Variable | Definition | M | SD | Interrater agreement |
|---|---|---|---|---|
| Overall Team Decision Ethicality | | | | |
| Interviewee judgment | Interviewee judged the final decision made by the team to be ethical | 2.04 | 0.84 | 0.73 |
| Coder judgment | External coder judged the final decision made by the team to be ethical | 1.98 | 0.77 | 0.73 |
| Team Characteristics (+) | | | | |
| Expertise | Team consisted of fully trained professionals (as opposed to students) | 2.09 | 0.32 | 0.87 |
| Formalistic orientation | Team members focused on following established rules, procedures, and principles (as opposed to utilitarian orientation) | 1.64 | 0.82 | 0.70 |
| Champion presence | A team member served as a vocal and active ethical role model | 0.55 | 0.50 | 0.88 |
| Team Characteristics (-) | | | | |
| Size | Number of members on team | 4.80 | 2.40 | 0.91 |
| Field homogeneity | Team consisted of members from the same field (as opposed to diverse fields) | 0.90 | 0.30 | 0.71 |
| Instigator presence | A team member instigated the dilemma or made the dilemma worse by serving as an anti-ethical role model | 0.83 | 0.38 | 0.71 |
| Situational Moderators (+/-) | | | | |
| Psychological safety | Team members felt safe to raise concerns and question the status quo | 1.90 | 0.82 | 0.71 |
| Moral intensity | Extent to which the dilemma involved high-stakes (e.g., magnitude of consequences) | 2.00 | 0.73 | 0.74 |

Note n = 40 ethical dilemmas; variables with means between 0 and 1 were coded as binary and can also be interpreted as a percentage; M = mean; SD = standard deviation
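The note's point that binary means can be read as proportions also implies a simple relationship between each binary mean and its standard deviation. The Python sketch below illustrates that relationship, assuming the reported SDs are sample standard deviations (n − 1 denominator) over the 40 dilemmas; the source does not state which estimator was used or how the two coders' scores were combined, so this is a plausibility check rather than a reconstruction of the authors' calculations.

```python
from math import sqrt

N_DILEMMAS = 40

# (mean, SD) pairs for the binary variables as reported in Table 2.
reported = {
    "Champion presence": (0.55, 0.50),
    "Field homogeneity": (0.90, 0.30),
    "Instigator presence": (0.83, 0.38),
}


def binary_sample_sd(p, n):
    """Sample SD of a 0/1 variable with proportion p over n cases."""
    return sqrt(p * (1 - p) * n / (n - 1))


# Implied SD for each binary variable, rounded as in the table.
implied = {
    name: round(binary_sample_sd(mean, N_DILEMMAS), 2)
    for name, (mean, _sd) in reported.items()
}
```

Under these assumptions the implied values match the reported SDs for all three binary variables, which is consistent with reading each mean as the share of dilemmas in which the characteristic was present.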

Team Decision Strategies

Coders scored whether 13 team decision strategies were used by at least one member of the team during the dilemma (“1” = yes, “0” = no). The list of team decision strategies included: (1) forecasting potential downstream consequences, (2) consulting or collaborating with colleagues, (3) upholding privacy and confidentiality agreements, (4) investigating the facts, (5) voicing ethical concerns, (6) attending to professional guidelines or best practices, (7) problem analysis, (8) empathy, (9) considering personal values, (10) attending to conflicts of interest, (11) attending to institutional policies and procedures, (12) transparency and honesty, and (13) creative thinking. These strategies were based on prior taxonomies of decision strategies that have been found to facilitate the ethical decision making of researchers (e.g., Mumford et al., 2008). Finally, coders were asked to qualitatively capture any additional strategies used by teams that were not represented in the 13 strategies. The interrater agreement coefficients for team decision strategies ranged from 0.71 to 0.90 (see Table 3).

Team Decision Biases

Coders scored 8 team decision biases based on whether they were used by the team as described by interviewees (“1” = yes, “0” = no). These team decision biases included: (1) oversimplification, (2) deferring to others (e.g., authority), (3) self-orientation, (4) compromising, (5) limiting personal responsibility, (6) silence, (7) deception, and (8) rationalization. This list of team decision biases and their operational definitions were also based on published taxonomies and frameworks of ethical biases (e.g., Medeiros et al., 2014). Coders were provided an opportunity to qualitatively record any decision biases used that were not represented in this list. Interrater agreement coefficients ranged from 0.71 to 0.90 (see Table 2).

Overall Team Decision Ethicality

Team decision ethicality was scored from two perspectives using a three-point Likert rating scale (1 = mostly unethical, 2 = moderate/mixed ethicality, 3 = mostly ethical) to indicate the general ethicality of the team’s final decisions and behaviors. First, overall team decision ethicality was scored based on the interviewee’s assessment of how ethical the resulting decisions or solutions were (i.e., interviewee judgment; interrater agreement = 0.73). Second, coders provided their own perspective regarding overall team decision ethicality, based on their understanding of the dilemma and relevant professional guidelines (i.e., coder judgment; interrater agreement = 0.73). In most cases, interviewee and coder judgments were highly consistent with one another (r = .92) (see Table 2).
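The consistency between the two perspectives is summarized with a Pearson correlation. The Python sketch below shows how such a coefficient is computed for two sets of three-point ratings. The ratings are invented purely for illustration and are not the study's data, so the resulting value is not expected to reproduce the reported coefficient.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)


# Hypothetical ethicality ratings (1 = mostly unethical ... 3 = mostly ethical).
interviewee_judgment = [1, 2, 3, 3, 2, 1, 3, 2]
coder_judgment = [1, 2, 3, 3, 2, 1, 2, 2]

r = pearson_r(interviewee_judgment, coder_judgment)
```

In this invented example the two raters disagree on a single incident by one scale point, and the correlation remains high, which illustrates how a coefficient near .90 can arise when judgments differ only occasionally.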

Results

Results were analyzed and will be presented in two steps. First, frequencies and descriptive statistics were calculated to understand the prevalence and intensity of various dilemma characteristics, team characteristics, team decision processes, and team outcomes as observed in the dilemmas reported by interviewees. Second, correlations were estimated to explore potential quantitative relationships between team characteristics, decision processes, and overall team ethicality, based on the theoretical model presented in Fig. 1.

Prevalence Statistics and Descriptives

As shown in Table 1, most (87.5%) of the dilemmas reported by interviewees explicitly involved a team component where two or more members interacted as part of the dilemma, as opposed to dilemmas involving an individual researcher (12.5%). This provides an answer to our first research question and suggests the importance of understanding research ethics from a team-based perspective.

Interviewees were about as likely to report an ethical dilemma that occurred at a former institution (57.5%) as one that occurred at their current institution (42.5%). More importantly, the subject areas of the reported dilemmas represented a diverse range of issues. All 9 of the subject areas coded were present in our sample except for issues dealing with the welfare of laboratory animals (despite representation of two animal researchers in our sample). By far, the most prevalent dilemma subject reported in the sample was research misconduct (i.e., situations involving fabrication, falsification, or plagiarism), which was present in 75% of all the reported dilemmas. This was followed in prevalence by dilemmas involving the protection of human subjects (55%) and dilemmas involving research collaborations (52.5%). In contrast, dilemmas involving issues of peer review were observed in only 12.5% of cases. Prevalence statistics for each dilemma subject area are presented in Table 1. Six example dilemmas from the dataset are summarized in the Appendix.

Table 2 summarizes descriptives, definitions, and interrater agreement coefficients for the team decision outcomes and inputs. Based on the judgments of interviewees and coders, which were strongly correlated (r = .92), overall team decision ethicality was, on average, moderate (pooled M = 2.02). However, there was substantial variability around this mean (pooled SD = 0.80). Thus, later we explore whether the team decision inputs and processes help to explain differences in overall team decision ethicality.

With respect to team decision inputs, Table 2 demonstrates that teams typically ranged between three and seven members, with an average of five. Most teams also consisted of mixed levels of expertise (i.e., included faculty members and graduate students) and 90% of the teams were homogenous with respect to field. On average, the ethical orientation of teams trended utilitarian, while psychological safety and moral intensity were moderate. Finally, an unethical instigator could be identified in 83% of dilemmas, while an ethical champion could be identified in 55% of dilemmas. As an example of an unethical instigator, one researcher noted an incident where a faculty supervisor attempted to pressure research assistants into “naming names” after another research assistant anonymously reported a data confidentiality violation to the department. An ethical championing example can be observed in another incident where a researcher noted how one of their collaborators asked in a recent meeting if “what we are doing is okay or not”, and then suggested a strategy for ensuring that they complied with data privacy standards.

Table 3 presents definitions, descriptives, and agreement statistics for the team decision strategies. Of the 13 decision strategies, the most frequently used were attending to professional guidelines (65%), attending to privacy and confidentiality concerns (60%), problem analysis (60%), transparency and honesty (60%), and consulting with colleagues (50%). In contrast, the least frequently used were forecasting (15%), investigating the facts (20%), and empathy (23%). On average, teams used a total of 5.5 strategies (SD = 2.8).

Table 3.

Definitions, descriptives, and agreement statistics for team decision strategies (+)

| Variable | Definition | M | SD | Interrater agreement |
|---|---|---|---|---|
| Forecasting | Thinking about potential consequences of different courses of action | 0.15 | 0.36 | 0.81 |
| Consulting | Asking relevant stakeholders (e.g., legal, employee relations, manager) or trusted colleagues for their advice, perspective, or assistance | 0.50 | 0.51 | 0.71 |
| Privacy & confidentiality | Demonstrating commitment to the rights of clients and research subjects to privacy, confidentiality, and informed consent | 0.60 | 0.50 | 0.71 |
| Investigating the facts | Gathering additional information (e.g., policies, survey data) to clarify or test assumptions | 0.20 | 0.41 | 0.88 |
| Voicing ethical concerns | Reporting one’s ethical concerns to those in authority (e.g., manager, HR, legal), or discussing concerns directly with those involved | 0.38 | 0.50 | 0.81 |
| Professional guidelines | Considering one’s responsibilities and duties to the profession (e.g., APA Ethics Code) | 0.65 | 0.48 | 0.74 |
| Problem analysis | Analyzing the problem to identify key issues, causes, and situational constraints | 0.60 | 0.49 | 0.71 |
| Empathy | Showing consideration for the feelings and welfare of others | 0.23 | 0.43 | 0.81 |
| Personal values | Evaluating one’s priorities and personal responsibilities | 0.35 | 0.48 | 0.76 |
| Conflicts of interest | Recognizing and managing or otherwise avoiding conflicts of interest that might bias one’s professional decision making | 0.40 | 0.50 | 0.71 |
| Policies and procedures | Evaluating responsibilities to one’s employer and following organizational policies and procedures | 0.40 | 0.49 | 0.71 |
| Transparency & honesty | Being forthright about potential limitations or errors and telling the truth | 0.60 | 0.49 | 0.71 |
| Creative thinking | Generating novel and useful solutions to complex problems | 0.40 | 0.49 | 0.90 |
| Overall use of strategies | Sum of decision strategy variables | 5.46 | 2.75 | n/a |

Note. n = 40 ethical dilemmas; variables with means between 0 and 1 were coded as binary and can also be interpreted as a percentage; M = mean; SD = standard deviation; n/a = not applicable; the Cronbach’s alpha reliability estimate was 0.67 for overall use of strategies.
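To illustrate how binary codes translate into the prevalence figures, sum composites, and reliability estimate reported in the table note, the following is a minimal sketch using the standard formula for Cronbach's alpha. The data (four dilemmas coded on three strategies) and all variable names are hypothetical and far smaller than the actual coding scheme.

```python
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of cases, each a list of k item scores."""
    k = len(rows[0])
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical coding of four dilemmas on three binary strategies (1 = used, 0 = not used).
coded = [
    [1, 1, 1],
    [1, 1, 0],
    [0, 0, 0],
    [1, 0, 0],
]

# The mean of a binary item is the proportion of dilemmas in which the strategy appeared.
prevalence = [mean(row[i] for row in coded) for i in range(3)]
# "Overall use" composites are simple row sums.
composite = [sum(row) for row in coded]
alpha = cronbach_alpha(coded)
```

Read this way, a mean of 0.60 in the table corresponds to a strategy being coded as present in 60% of the 40 dilemmas.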

Table 4 presents definitions, descriptives, and agreement statistics for the team decision biases. Of the 8 decision biases, the most prevalent were self-orientation (73%), rationalization (60%), and deception (48%). The least prevalent team decision bias was oversimplification (18%). On average, teams used a total of 3.3 biases (SD = 1.8).

Table 4.

Descriptives, definitions, and interrater agreement for team decision biases (-)

| Variable | Definition | M | SD | Interrater agreement |
|---|---|---|---|---|
| Oversimplification | Oversimplifying ethical problems, such as rigid (e.g., black-and-white) thinking, wishful thinking, overemphasizing short-term concerns or practical issues, and ignoring competing issues | 0.18 | 0.39 | 0.90 |
| Deferring to others | Outsourcing one’s personal responsibility for ethical decisions to others, including but not limited to authority figures | 0.35 | 0.48 | 0.83 |
| Self-orientation | Prioritizing personal convenience and failing to consider how one’s decisions impact others | 0.73 | 0.45 | 0.76 |
| Compromising | Violating policies or standards to meet two or more conflicting demands | 0.30 | 0.46 | 0.79 |
| Limiting responsibility | Avoiding taking personal responsibility for ethical decisions | 0.30 | 0.46 | 0.74 |
| Silence | Unwillingness to use one’s voice to report or confront an unethical situation | 0.33 | 0.47 | 0.71 |
| Deception | Dishonest behavior which may include hiding, manipulating, or destroying information | 0.48 | 0.51 | 0.71 |
| Rationalization | Attempting to justify unethical decisions via rationalization, such as considering how the ends justify the means or making extreme case comparisons | 0.60 | 0.50 | 0.86 |
| Overall use of biases | Sum of decision bias variables | 3.27 | 1.77 | n/a |

Note. n = 40 ethical dilemmas; variables with means between 0 and 1 were coded as binary and can also be interpreted as a percentage; M = mean; SD = standard deviation; n/a = not applicable; the Cronbach’s alpha reliability estimate was 0.50 for overall use of biases.

Examples of several decision strategies and biases can be observed in an incident where a junior researcher suspected a more senior member of the research team of fabricating or falsifying data. The junior researcher reported using several decision strategies, including attempting to find and replicate the senior researcher’s data (i.e., investigating the facts), considering potential consequences for the lab (i.e., forecasting), and asking another researcher on a different project for advice (i.e., consulting). At the same time, the junior researcher reported using several decision biases as well. Specifically, the junior researcher decided to follow the advice of a more experienced researcher, who advised them not to say anything because of their junior status (i.e., deferring to others, silence).

Quantitative Relationships Corresponding to Theoretical Model

Finally, based on the predictions summarized in Fig. 1, one-tailed Pearson correlations were estimated between team decision inputs, processes, and outcomes. To streamline the large number of team decision processes explored, composite (i.e., sum) variables were used for team decision strategies and biases. Correlations were interpreted as statistically significant if their p-value was less than 0.05. Table 5 presents these correlations.
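The following is a minimal sketch of a directional (one-tailed) test of a Pearson correlation. It assumes the Fisher z approximation to the p-value rather than the exact t test with n − 2 degrees of freedom, so the values are approximate. The function names, the `expect_positive` parameter, and the five-case example data are hypothetical; the two correlations tested at N = 40 are taken from Table 5.

```python
from math import atanh, sqrt
from statistics import NormalDist, mean


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def one_tailed_p(r, n, expect_positive=True):
    """Approximate one-tailed p-value for r using the Fisher z transformation."""
    z = atanh(r) * sqrt(n - 3)
    if not expect_positive:
        z = -z  # test in the predicted (negative) direction
    return 1 - NormalDist().cdf(z)


# Hypothetical composite (sum) scores and ethicality ratings for five dilemmas.
strategies_sum = [2, 5, 7, 4, 9]
ethicality = [1, 2, 3, 2, 3]
r_example = pearson_r(strategies_sum, ethicality)

# Correlations reported in Table 5 (N = 40).
p_strategies = one_tailed_p(0.44, 40)                    # strategies and interviewee judgment
p_size = one_tailed_p(-0.22, 40, expect_positive=False)  # team size and interviewee judgment
```

Under this approximation, the first correlation falls below the .01 threshold and the second does not reach .05, in line with the significance markers in Table 5.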

Table 5.

Correlations corresponding to conceptual model

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Team Characteristics (+) | | | | | | | | | | | | | | |
| 1. Expertise | 2.09 | 0.34 | 1.00 | | | | | | | | | | | |
| 2. Formalistic orientation | 1.64 | 0.82 | 0.17 | 1.00 | | | | | | | | | | |
| 3. Champion presence | 0.55 | 0.50 | −0.13 | 0.21 | 1.00 | | | | | | | | | |
| Team Characteristics (-) | | | | | | | | | | | | | | |
| 4. Size | 4.78 | 2.38 | −0.13 | −0.12 | 0.21 | 1.00 | | | | | | | | |
| 5. Field homogeneity | 0.90 | 0.30 | 0.22 | −0.05 | −0.30* | −0.13 | 1.00 | | | | | | | |
| 6. Instigator presence | 0.83 | 0.38 | −0.18 | 0.08 | 0.38** | 0.09 | −0.15 | 1.00 | | | | | | |
| Situational Moderators (+/-) | | | | | | | | | | | | | | |
| 7. Psychological safety | 1.90 | 0.82 | 0.11 | 0.15 | 0.16 | −0.19 | −0.35* | 0.07 | 1.00 | | | | | |
| 8. Issue moral intensity | 2.00 | 0.73 | 0.08 | −0.17 | −0.11 | 0.18 | 0.02 | 0.04 | −0.47** | 1.00 | | | | |
| Team Decision Processes | | | | | | | | | | | | | | |
| 9. Overall use of strategies (+) | 5.45 | 2.75 | −0.13 | 0.19 | 0.50** | 0.09 | 0.21 | 0.29* | −0.07 | −0.06 | 1.00 | | | |
| 10. Overall use of biases (-) | 3.25 | 1.77 | −0.20 | −0.16 | −0.16 | 0.02 | 0.29* | 0.03 | −0.25 | 0.14 | 0.22 | 1.00 | | |
| Team Decision Outcomes | | | | | | | | | | | | | | |
| 11. Interviewee judgment | 2.04 | 0.85 | 0.04 | 0.56** | 0.50** | −0.22 | −0.05 | −0.10 | 0.36* | −0.27* | 0.44** | −0.04 | 1.00 | |
| 12. Coder judgment | 1.97 | 0.77 | 0.01 | 0.60** | 0.41** | −0.24 | −0.01 | −0.11 | 0.42** | −0.36* | 0.37* | 0.00 | 0.92** | 1.00 |

Note. N = 40. The Cronbach’s alpha reliability estimate was 0.67 for the sum of 13 team decision strategies and 0.50 for the sum of 8 decision biases. *p <.05 (one-tailed); **p <.01 (one-tailed)

As expected, the overall use of decision strategies by the team was positively related to overall decision ethicality (average r =.40). However, the overall use of decision biases was unrelated to overall decision ethicality.

Regarding the team characteristics that were expected to facilitate team ethical decision making, formalistic orientation and champion presence were both strongly related to overall team decision ethicality (average r =.58 and .46, respectively). Champion presence was also strongly related to the overall use of team decision strategies (r =.50). However, team expertise was unrelated to team decision processes or outcomes.

Team size, field homogeneity, and instigator presence were expected to inhibit team ethical decision making. Team size was unrelated to decision processes or outcomes. More homogenous teams, in terms of field, were found to use moderately more decision biases (r =.29). Interestingly, instigator presence was positively related to overall use of decision strategies (r =.29), perhaps because the presence of instigators activates the need for greater usage of ethical decision processes.

While the main effects of situational characteristics were not predicted, their correlations are presented in Table 5 as well. Psychological safety was positively related while moral intensity was negatively related to overall team decision ethicality (average r =.39 and −.32, respectively). Psychological safety and moral intensity were also negatively correlated (r = −.47), suggesting that as the moral stakes of the dilemma increased, teams were less comfortable voicing opinions that went against the status quo.

Finally, a series of linear regressions was conducted to explore whether the two situational characteristics interacted with team characteristics to explain team decision processes or outcomes. The two overall decision ethicality variables were averaged into a composite (α = 0.92) to reduce the number of statistical tests conducted. Predictor variables were mean-centered. Two interactions approached statistical significance (p <.10) and were probed using follow-up simple slopes analyses (+1 vs. −1 SD).
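For reference, moderation tests of this kind are conventionally specified as follows. The notation is ours and assumes a standard moderated regression with mean-centered predictors, where $X_c$ is a team characteristic (e.g., ethical champion presence), $Z_c$ is a situational characteristic (psychological safety or moral intensity), and $Y$ is a team decision process or outcome:

$$
Y = b_0 + b_1 X_c + b_2 Z_c + b_3 (X_c \times Z_c) + e
$$

Under this specification, the simple slope of the team characteristic at one standard deviation above or below the mean of the moderator is

$$
\left.\frac{\partial Y}{\partial X_c}\right|_{Z_c = \pm SD_Z} = b_1 \pm b_3 \, SD_Z
$$

so a nonzero $b_3$ indicates that the relationship between the team characteristic and the outcome depends on the level of the situational characteristic.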

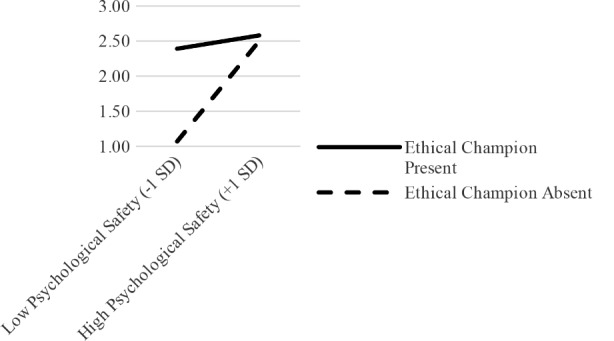

First, the presence of an ethical champion interacted with team psychological safety to predict overall usage of team decision strategies (β = −0.25, t = −1.78, p =.084). Specifically, ethical champion presence was strongly and positively related to the use of team decision strategies when psychological safety was low (β = 0.75, t = 3.80, p <.001), but unrelated to decision strategy usage when psychological safety was high (β = 0.23, t = 1.08, p =.287). As illustrated in Fig. 2, the presence of an ethical champion appears more important for facilitating ethical team decision processes in climates marked by poor psychological safety.

Fig. 2.

Two-way interaction between psychological safety and ethical champion presence (vs. absence) on overall team decision ethicality

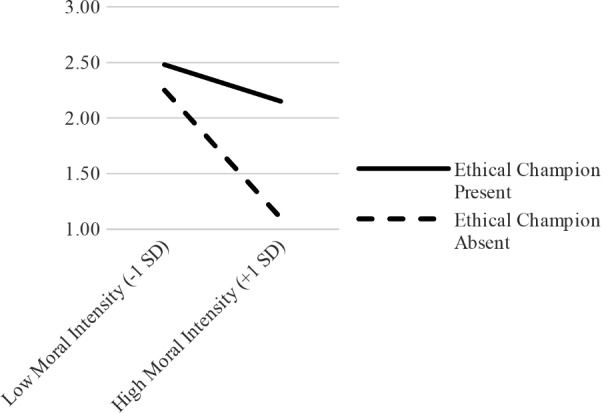

The second interaction that approached statistical significance was between moral intensity and the presence of an ethical champion on overall team decision ethicality (β = 0.28, t = 2.02, p =.051). The presence of an ethical champion was positively related to overall decision ethicality when moral intensity was high (β = 0.69, t = 3.60, p <.001), but not when moral intensity was low (β = 0.13, t = 0.68, p =.502). This suggests that as the moral stakes of the situation increase, ethical champions become more important for facilitating their team’s overall decision ethicality. Figure 3 illustrates this interaction pattern.

Fig. 3.

Two-way interaction between moral intensity and ethical champion presence (vs. absence) on overall team decision ethicality
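As a rough arithmetic check on the two interactions reported above, the simple slopes at −1 SD and +1 SD can be used to recover the implied slope at the moderator mean and the implied interaction weight. This sketch assumes standardized coefficients, so that ±1 SD of the moderator corresponds to values of −1 and +1; that assumption and the function name are ours.

```python
def implied_coefficients(slope_low, slope_high):
    """Given simple slopes at -1 SD and +1 SD of a standardized moderator,
    return the implied slope at the moderator mean and the interaction weight."""
    slope_at_mean = (slope_high + slope_low) / 2
    interaction = (slope_high - slope_low) / 2
    return slope_at_mean, interaction


# Reported simple slopes for ethical champion presence.
safety_mean, safety_int = implied_coefficients(slope_low=0.75, slope_high=0.23)
intensity_mean, intensity_int = implied_coefficients(slope_low=0.13, slope_high=0.69)
```

Under these assumptions, the implied interaction weights (about −0.26 for psychological safety and 0.28 for moral intensity) are close to the reported interaction coefficients (β = −0.25 and 0.28).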

Discussion

The purpose of this research was to introduce a team-based lens to the study of ethical decision making in order to understand the team factors that might facilitate and inhibit a research team’s ethical performance. Through transforming qualitative interview transcripts with scientists and engineers into quantitative variables, we observed several statistically significant relationships between team inputs, processes, and outputs in line with our theoretical model (Fig. 1). Overall, research teams that adopted a formalistic orientation, had a clear ethical champion, and used a greater number of ethical decision strategies tended to make more ethical decisions. We also found that psychological safety and moral intensity moderated the relationship between ethical champion presence and overall team decision ethicality, such that ethical champions were more beneficial in situations characterized by low psychological safety or high moral intensity. Moreover, these relationships were all moderate to large in terms of practical significance, suggesting that even small improvements in these factors may translate into noticeable improvements in team ethical decision making, and potentially, reduced instances of research misconduct.

Implications for Research and Practice

Our findings suggest a number of implications for research and practice. First, given the prevalence of team-based work not only among researchers, but also in the knowledge economy writ large (Guzzo & Dickson, 1996), it is critical for scholars to reevaluate our assumptions and models of ethical decision making to incorporate a team-based perspective. Of course, much additional work is required to further explore and isolate the factors that are most critical to team-based ethical decision making. Of particular interest here is not only understanding the main effects of team-level factors, but also the potential cross-level interactions between team factors and other levels of analysis (e.g., Yang et al., 2007). For example, we suspect that professional and institutional-level factors (e.g., incentive systems, norms, competition) might interact with team factors to influence the ethical decision making of research teams; these are avenues for future research. Additionally, it may be worthwhile to apply methods traditionally used in the study of groups and teams (e.g., experiments, observational studies) to better understand the nuances in how researchers communicate and negotiate around ethical decisions.

With respect to practical implications, the findings point to five critical team factors related to ethical decision making in research teams, including formalistic orientation, ethical championing, ethical decision strategies, psychological safety, and moral intensity. The first three of these factors are team characteristics and processes that might be improved using formal training and development programs. Meta-analytic research on ethics training effectiveness demonstrates that professionals’ ethical knowledge, awareness, and decision making improve when educated in the ethical principles and standards of their profession and when given sufficient opportunities to practice applying decision-making strategies (Watts et al., 2017). What might be especially useful in this regard is adopting a multi-level perspective in future research on ethics training instruction that emphasizes important team-based factors (e.g., McCormack & Garvan, 2014). Along related lines, research teams and lab directors might benefit from receiving instruction on the principles of psychological safety and moral intensity, as well as engaging in interactive scenarios where team members can practice taking turns playing the role of an ethical champion.

Individual researchers might also be interested in applying some of the findings here to improve ethical decision making on their own research teams. For example, researchers might look for opportunities to wear the “ethical champion hat” on their teams by proactively voicing any questions or concerns related to ethics, by suggesting decision strategies in response to active (or potential) problems, or by vocalizing support for other team members who point out ethical considerations. Of course, context and tact can be critical in ethical communication (Watts & Sahatjian, 2024). In teams with low psychological safety or a poor ethical climate, voicing ethical concerns could be perceived as a threat, increasing team member defensiveness instead of ethical learning (Bisel et al., 2011). One strategy for improving reactions to ethical communication is to routinize it, such as by incorporating everyday scripts around ethics into research meetings on a regular basis. Instead of always ending research meetings with the generic question, “Does anyone have any questions or concerns?”, ask the team about potential ethical concerns. This small shift in communication may, over time, help produce an important climate shift in research teams to prioritize ethics on a daily basis.

Limitations and Future Research

There are a few noteworthy limitations that should be borne in mind when interpreting these findings. First, given the difficulty of recruiting scientists and engineers to participate in interviews about morally sensitive, personal situations, our sample size was relatively small. While it is not uncommon for qualitative studies to demonstrate thematic saturation with only 15–20 interviews (e.g., Francis et al., 2010), small sample sizes constrain statistical power in quantitative designs and therefore increase the probability of Type II error (i.e., failing to detect a “true” relationship). For example, as predicted, team size trended towards a small-to-moderate, negative relationship with overall decision ethicality (average r = −.23), but the effect size may simply have failed to reach the threshold for statistical significance due to an insufficient sample size. In other words, the team inputs and processes noted as statistically significant here are based on conservative estimates that are unlikely to represent the full range of team factors that are important to ethical decision making in research teams. Future research that draws on more convenient research methods to recruit larger groups of researchers (e.g., online surveys; Lefkowitz & Watts, 2022) might be better suited to detecting those team factors with weaker, but still practically meaningful, associations with team ethical decision making.
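To make the power constraint concrete, the following sketch estimates the smallest correlation detectable at N = 40 and the approximate power to detect a true correlation of .23 in a one-tailed test at the .05 level. It relies on the Fisher z approximation rather than an exact power routine, and the function names are hypothetical.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist

STD_NORMAL = NormalDist()


def critical_r(n, alpha=0.05):
    """Smallest |r| reaching one-tailed significance at the given alpha (Fisher z approximation)."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)
    return tanh(z_crit / sqrt(n - 3))


def approx_power(true_r, n, alpha=0.05):
    """Approximate power of a one-tailed test to detect a true correlation of size |true_r|."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha)
    return 1 - STD_NORMAL.cdf(z_crit - atanh(abs(true_r)) * sqrt(n - 3))


r_needed = critical_r(40)            # threshold correlation at N = 40
power_small = approx_power(0.23, 40) # power for an effect the size of the team-size correlation
```

By this approximation, a correlation needs to reach roughly .26 in absolute value to be significant with 40 incidents, and the power to detect a true correlation of .23 is only about .41, which is consistent with the concern about Type II error.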

Additionally, our interview data were single-source and retrospective. We did not interview all the team members associated with each ethical dilemma, nor would it have been feasible to do so given privacy concerns. As a result, we cannot verify the veracity of the incidents reported by interviewees or assess the extent to which their reports agreed or conflicted with other members. It is well established that as adults age, there is a tendency to distort memories of autobiographical events in favor of positive information (Mather & Carstensen, 2005). Such distortions may have interfered with the collection of objective information about the ethical incidents we collected. However, our sample of interviewees trended toward graduate students with only a few years of research experience, and interviewees noted that nearly all (92.5%) of the incidents we collected occurred within the last three years. Thus, we are not especially concerned with memory distortion effects in our sample. Nevertheless, future research that uses real-time simulations to model team ethical decision making in response to hypothetical research dilemmas may be especially fruitful. Multi-source perspectives could also be useful for exploring how ethical climate dimensions may be related to team decision processes and outcomes.

Finally, the background characteristics of the teams in our sample were limited. For example, we did not assess team tenure, or the amount of time in which the research team had operated together at the time of the incident. There may be positive and negative aspects to having a history of collaboration on a team. Additionally, all the researchers we interviewed were affiliated with a single research university in the United States, which limits our ability to investigate how multicultural factors could pose unique ethical challenges for research teams. For example, multinational research teams may be forced to navigate conflicting values as well as conflicting codes of conduct (Leong & Lyons, 2010; Khoury & Akoury-Dirani, 2023). Thus, future research might benefit from investigating and coding for alternative background characteristics with respect to ethical decision making on research teams.

Appendix

Example Summaries of Team-Based Ethical Incidents

Sometimes it’s not outright fabrication. A student will do the experiment multiple times and show or present only the best results. OK, so that’s also not ethical. I mean it is not outright lying but it’s not representing truth, as it were. This individual will present results that were extraordinarily good and at other times he’ll be oblivious to the fact that results he is presenting to me make no sense…The individual otherwise was very nice, very polite, and I just let him down slowly. So, he actually went and joined another professor’s group and he got his PhD eventually.

Professor [Chemistry]

We’re doing this one project where we don’t have access directly to the data set. We need to scrape the website and sometimes, in terms of service, they say ‘It’s a violation of our terms and service if you try to scrape anything.’ If we try to do it, is it ethical enough or not? Are we going to be facing any issues later on? Is it legally allowed or not, even though legally no one will know? Everyone would say don’t do it, but… we need to scrape that data from the website. So what we are thinking now is to actually have an e-mail trail with [the company]. So that if we do submit it somewhere and we never hear back from [the company], we can kind of back our claim saying that we made attempts to contact them, but we never got a response.

Graduate Student [Computer Science]

It would fall under questionable research practices as they call them now. At the time, it seemed like it was normal practice. Basically, running the statistics after every batch of data comes in. At the time that just seemed normal because you’re opening up the data set every time some new batch of data comes in. But you know that that has kind of gone out of practice. I think that the consensus among researchers is that that comes about by people running the numbers until they look good and then stopping. I don’t think we did anything about it. I mean, it was partly that seems fishy, but who knows? I mean, there’s always the benefit of the doubt to say, well, maybe there was a legitimate trimming of outliers. But you know, when you trim outliers, you’re supposed to do it on both ends of the distribution. This paper probably got published and is in the scientific record now, telling a story that might not be as true as it looks.

Professor [Psychology]

Our firm has strict policy about using its equipment and software for only office use. But one of my colleagues, or you can say close friend, is using it for his own personal use. So when he used it once or twice I told him not to do that anymore and I warned him that I will tell the boss about this. But he didn’t listen and continued to do so. So after that I reached out to my boss and my boss strictly prohibited all the activities from him, his laptop, and prohibited him for doing that and suspended him for like one week. I do not think that resolved the issue.

Graduate Student [Engineering]

We have several groups of students every summer of whom maybe two would eventually publish their research, and it was nearly always the faculty supervisor who would push the research on to submit it to a journal, deal with the revisions and things like that, because the students by that time have gone on. But I didn’t do that with this one group, and three or four years later, I saw that the group’s research report had been published in a journal without my name on it, but using my suggestions. The program director basically had just taken the report and all of my suggestions and published them with his name on them.

Professor [Mathematics]

I had a power analysis that recommended about 240 participants. I collected about 280 and then I got plagued by bots. And it brought my sample size down by a lot. It was across a couple of studies, so about 180 to 190, which wasn’t sufficient for my power analysis. And so I was just deciding whether or not to keep the bot responses, which ethically, of course, no I shouldn’t. And I decided not to. And I think the main reason I decided not to was because it was for my thesis. I put a lot of time and energy into it, but I think if it had been a bigger project that I was looking to get published and it wasn’t my… I don’t know. Ultimately I decided to not include any of the bots which led to some nonsignificant results in a couple of my hypotheses.

Graduate Student [Psychology]

*Note. Quotes were edited for grammar and succinctness.

Footnotes

The think-aloud interview protocol is available from the authors upon request.


References

- Armstrong, R. W., Williams, R. J., & Barrett, J. D. (2004). The impact of banality, risky shift and escalating commitment on ethical decision making. Journal of Business Ethics, 53, 365–370. [Google Scholar]

- Balliet, D., Wu, J., & De Dreu, C. K. W. (2014). Ingroup favoritism in cooperation: A meta-analysis. Psychological Bulletin, 140(6), 1556–1581. [DOI] [PubMed] [Google Scholar]

- Beu, D. S., Buckley, M. R., & Harvey, M. G. (2003). Ethical decision–making: A multidimensional construct. Business Ethics: A European Review, 12(1), 88–107. [Google Scholar]

- Bisel, R. S., Kelley, K. M., Ploeger, N. A., & Messersmith, J. (2011). Workers’ moral mum effect: On facework and unethical behavior in the workplace. Communication Studies, 62(2), 153–170. [Google Scholar]

- Bohns, V. K., Roghanizad, M. M., & Xu, A. Z. (2014). Underestimating our influence over others’ unethical behavior and decisions. Personality and Social Psychology Bulletin, 40(3), 348–362. [DOI] [PubMed] [Google Scholar]

- Borkowski, S. C., & Ugras, Y. J. (1998). Business students and ethics: A meta-analysis. Journal of Business Ethics, 17, 1117–1127. [Google Scholar]

- Brock, M. E., Vert, A., Kligyte, V., Waples, E. P., Sevier, S. T., & Mumford, M. D. (2008). An evaluation of a sense-making approach to ethics training using a think aloud protocol. Science and Engineering Ethics, 14, 449–472. [DOI] [PubMed] [Google Scholar]

- Byrt, T., Bishop, J., & Carlin, J. B. (1993). Bias, prevalence, and kappa. Journal of Clinical Epidemiology, 46(5), 423–429. [DOI] [PubMed] [Google Scholar]

- Chen, A., & Treviño, L. K. (2023). The consequences of ethical voice inside the organization: An integrative review. Journal of Applied Psychology. [DOI] [PubMed]

- Chen, A., Treviño, L. K., & Humphrey, S. E. (2020). Ethical champions, emotions, framing, and team ethical decision making. Journal of Applied Psychology, 105(3), 245–273. [DOI] [PubMed] [Google Scholar]

- De Wit, F. R. C., Greer, L. L., & Jehn, K. A. (2012). The paradox of intragroup conflict: A meta-analysis. Journal of Applied Psychology, 97(2), 360–390. [DOI] [PubMed] [Google Scholar]

- Edmondson, A. (1999). Psychological safety and learning behavior in work teams. Administrative Science Quarterly, 44(2), 350–383. [Google Scholar]

- Edmondson, A. C., & Lei, Z. (2014). Psychological safety: The history, Renaissance, and future of an interpersonal construct. Annual Review of Organizational Psychology and Organizational Behavior, 1(1), 23–43. [Google Scholar]

- Francis, J. J., Johnston, M., Robertson, C., Glidewell, L., Entwistle, V., Eccles, M. P., & Grimshaw, J. M. (2010). What is an adequate sample size? Operationalising data saturation for theory-based interview studies. Psychology and Health, 25(10), 1229–1245. [DOI] [PubMed] [Google Scholar]

- Geller, G., Boyce, A., Ford, D. E., & Sugarman, J. (2010). Beyond compliance: The role of institutional culture in promoting research integrity. Academic Medicine, 85(8), 1296–1302. [DOI] [PubMed] [Google Scholar]

- Guzzo, R. A., & Dickson, M. W. (1996). Teams in organizations: Recent research on performance and effectiveness. Annual Review of Psychology, 47(1), 307–338. [DOI] [PubMed] [Google Scholar]

- Hunt, T. G., & Jennings, D. F. (1997). Ethics and performance: A simulation analysis of team decision making. Journal of Business Ethics, 16, 195–203. [Google Scholar]

- Jennings, D. F., Hunt, T. G., & Munn, J. R. (1996). Ethical decision making: An extension to the group level. Journal of Managerial Issues, 425–439.

- Jones, T. K. (1991). Ethical decision making by individuals in organizations: An issue-contingent model. Academy of Management Review, 16(2), 366–395. [Google Scholar]

- Kakuk, P. (2009). The legacy of the Hwang case: Research misconduct in biosciences. Science and Engineering Ethics, 15, 545–562. [DOI] [PubMed] [Google Scholar]

- Khoury, B., & Akoury-Dirani, L. (2023). Ethical codes in the arab region: Comparisons and differences. Ethics & Behavior, 33(3), 193–204. [Google Scholar]

- King, G. (2002). Crisis management & team effectiveness: A closer examination. Journal of Business Ethics, 41, 235–249. [Google Scholar]

- Kozlowski, S. W. J., & Ilgen, D. R. (2006). Enhancing the effectiveness of work groups and teams. Psychological Science in the Public Interest, 7(3), 77–124. [DOI] [PubMed] [Google Scholar]

- LeBreton, J. M., & Senter, J. L. (2008). Answers to 20 questions about interrater reliability and interrater agreement. Organizational Research Methods, 11(4), 815–852. [Google Scholar]

- Lefkowitz, J., & Watts, L. L. (2022). Ethical incidents reported by industrial-organizational psychologists: A ten-year follow-up. Journal of Applied Psychology, 107(10), 1781–1803. [DOI] [PubMed] [Google Scholar]

- Leong, F. T., & Lyons, B. (2010). Ethical challenges for cross-cultural research conducted by psychologists from the United States. Ethics & Behavior, 20(3–4), 250–264. [Google Scholar]

- Lu, L., Yuan, Y. C., & McLeod, P. L. (2012). Twenty-five years of hidden profiles in group decision making: A meta-analysis. Personality and Social Psychology Review, 16(1), 54–75. [DOI] [PubMed] [Google Scholar]

- MacDougall, A. E., Martin, A. A., Bagdasarov, Z., & Mumford, M. D. (2014). A review of theory progression in ethical decision making literature. Journal of Organizational Psychology, 14(2), 9–19. [Google Scholar]

- Mather, M., & Carstensen, L. L. (2005). Aging and motivated cognition: The positivity effect in attention and memory. Trends in Cognitive Sciences, 9(10), 496–502. [DOI] [PubMed] [Google Scholar]

- McCormack, W. T., & Garvan, C. W. (2014). Team-based learning instruction for responsible conduct of research positively impacts ethical decision-making. Accountability in Research, 21(1), 34–49. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Medeiros, K. E., Mecca, J. T., Gibson, C., Giorgini, V. D., Mumford, M. D., Devenport, L., & Connelly, S. (2014). Biases in ethical decision making among university faculty. Accountability in Research, 21(4), 218–240. [DOI] [PubMed] [Google Scholar]

- Mulhearn, T. J., Steele, L. M., Watts, L. L., Medeiros, K. E., Connelly, S., & Mumford, M. D. (2017). Review of instructional approaches in ethics education. Science and Engineering Ethics, 23, 883–912. [DOI] [PubMed] [Google Scholar]

- Mumford, M. D., Connelly, S., Brown, R. P., Murphy, S. T., Hill, J. H., Antes, A. L., Waples, E. P., & Devenport, L. D. (2008). A sensemaking approach to ethics training for scientists: Preliminary evidence of training effectiveness. Ethics & Behavior, 18(4), 315–339. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pan, Y., & Sparks, J. R. (2012). Predictors, consequence, and measurement of ethical judgments: Review and meta-analysis. Journal of Business Research, 65(1), 84–91. [Google Scholar]

- Pearsall, M. J., & Ellis, A. P. J. (2011). Thick as thieves: The effects of ethical orientation and psychological safety on unethical team behavior. Journal of Applied Psychology, 96(2), 401–411. [DOI] [PubMed] [Google Scholar]

- Richter, A., Dawson, J. W., & West, M. (2011). The effectiveness of teams in organizations: A meta-analysis. International Journal of Human Resource Management, 22(13), 2749–2769. [Google Scholar]

- Schmutz, J. B., Meier, L. L., & Manser, T. (2019). How effective is teamwork really? The relationship between teamwork and performance in healthcare teams: A systematic review and meta-analysis. British Medical Journal Open, 9(9), e028280.

- Sox, H. C., & Rennie, D. (2006). Research misconduct, retraction, and cleansing the medical literature: Lessons from the Poehlman case. Annals of Internal Medicine, 144(8), 609–613.

- Steneck, N. H. (2007). ORI introduction to the responsible conduct of research (revised edition). US Government Printing Office.

- Stroebe, W., Postmes, T., & Spears, R. (2012). Scientific misconduct and the myth of self-correction in science. Perspectives on Psychological Science, 7(6), 670–688.

- Thiel, C. E., Bagdasarov, Z., Harkrider, L., Johnson, J. F., & Mumford, M. D. (2012). Leader ethical decision-making in organizations: Strategies for sensemaking. Journal of Business Ethics, 107, 49–64.

- Treviño, L. K., & Youngblood, S. A. (1990). Bad apples in bad barrels: A causal analysis of ethical decision-making behavior. Journal of Applied Psychology, 75(4), 378–385.

- Valentine, S., & Hollingworth, D. (2012). Moral intensity, issue importance, and ethical reasoning in operations situations. Journal of Business Ethics, 108, 509–523.

- Valkenburg, G., Dix, G., Tijdink, J., & de Rijcke, S. (2021). Expanding research integrity: A cultural-practice perspective. Science and Engineering Ethics, 27(1), 10–33.

- Van Someren, M., Barnard, Y. F., & Sandberg, J. (1994). The think aloud method: A practical approach to modelling cognitive processes. London: Academic Press, 11, 29–41.

- Watts, L. L., & Sahatjian, Z. (2024). Navigating employee moral defensiveness: Guidelines for managers [Poster]. Society for Industrial and Organizational Psychology Annual Conference, Chicago, IL.

- Watts, L. L., Medeiros, K. E., Mulhearn, T. J., Steele, L. M., Connelly, S., & Mumford, M. D. (2017). Are ethics training programs improving? A meta-analytic review of past and present ethics instruction in the sciences. Ethics & Behavior, 27, 351–384.

- Watts, L. L., Medeiros, K. E., McIntosh, T. J., & Mulhearn, T. J. (2021). Ethics training for managers: Best practices and techniques. Routledge.

- Watts, L. L., Gray, B., & Medeiros, K. E. (2022). Side effects associated with organizational interventions: A perspective. Industrial and Organizational Psychology, 15(1), 76–94.

- Yang, J., Mossholder, K. W., & Peng, T. K. (2007). Procedural justice climate and group power distance: An examination of cross-level interaction effects. Journal of Applied Psychology, 92(3), 681–692.

- Zeni, T. A., Buckley, M. R., Mumford, M. D., & Griffith, J. A. (2016). Making sense of ethical decision making. The Leadership Quarterly, 27(6), 838–855.

- Zheng, D., Witt, L. A., Waite, E., David, E. M., van Driel, M., McDonald, D. P., ... & Crepeau, L. J. (2015). Effects of ethical leadership on emotional exhaustion in high moral intensity situations. The Leadership Quarterly, 26(5), 732–748.