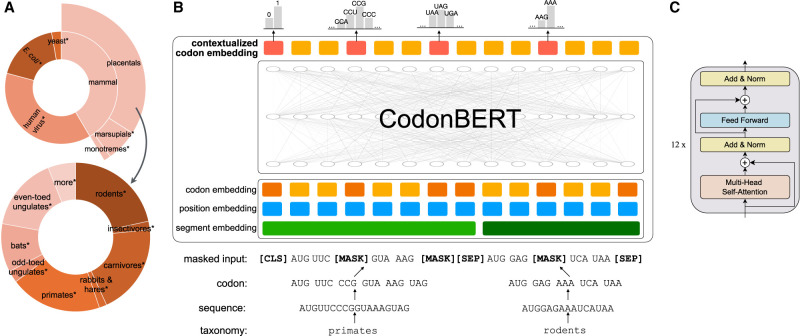

Figure 1.

Pretraining data distribution and CodonBERT model architecture. (A) Hierarchical classification of the mRNA sequences used for pretraining. All 14 leaf-level classes (those annotated with an asterisk) are numbered. The angle of each segment is proportional to the number of sequences in that group. (B) Model architecture and training scheme deployed for the two tasks of CodonBERT. (C) The stack of 12 transformer blocks employed in the CodonBERT model.