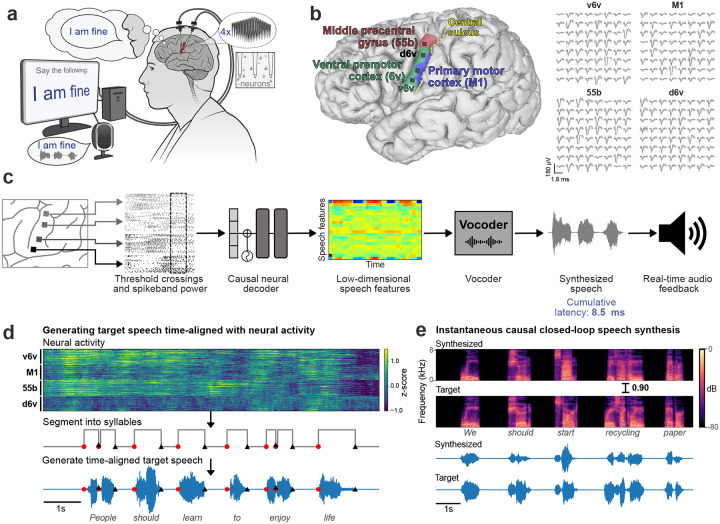

Fig. 1. Closed-loop voice synthesis from intracortical neural activity in a participant with ALS.

a. Schematic of the brain-to-voice neuroprosthesis. Neural features extracted from four chronically implanted microelectrode arrays are decoded in real time and used to directly synthesize the participant’s voice. b. Array locations on the left hemisphere and typical neuronal spikes from each microelectrode recorded over 1 s. Color overlays are estimated from a Human Connectome Project cortical parcellation. c. Closed-loop causal voice synthesis pipeline: voltages are sampled at 30 kHz; threshold crossings and spike-band power features are extracted from 1 ms segments; these features are binned into 10 ms non-overlapping bins, normalized, and smoothed. The Transformer model maps these neural features to a low-dimensional representation of speech comprising Bark-frequency cepstral coefficients, pitch, and voicing, which are used as input to a vocoder. d. Because T15’s ground-truth speech was unavailable, we first generated synthetic speech from the known text cue in the training data using text-to-speech, and then used the neural activity itself to time-align the synthetic speech with the neural time series at the syllable level, yielding a target speech waveform. e. Representative example of speech causally synthesized from neural data, which matches the target speech with high fidelity.
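The feature-extraction stage described in panel c can be illustrated with a minimal single-channel sketch. It is not the authors' implementation: the function names, the negative-threshold crossing convention, the whole-recording z-score, and the exponential smoothing constant `alpha` are all assumptions made for illustration, and the input is assumed to be already band-pass filtered. Only the 30 kHz sampling rate, the 1 ms segments, and the 10 ms non-overlapping bins come from the caption.

```python
from statistics import mean, pstdev

FS_HZ = 30_000          # sampling rate stated in the caption
SEG = FS_HZ // 1000     # samples per 1 ms segment (30)
SEGS_PER_BIN = 10       # 1 ms segments per 10 ms non-overlapping bin


def segment_features(voltage, threshold):
    """Per-1 ms crossing counts and mean squared voltage for one channel."""
    crossings, power = [], []
    prev = voltage[0]
    for start in range(0, len(voltage) - SEG + 1, SEG):
        seg = voltage[start:start + SEG]
        n = 0
        for v in seg:
            # Assumed convention: a downward crossing of a negative threshold.
            if prev > threshold >= v:
                n += 1
            prev = v
        crossings.append(n)
        power.append(mean(x * x for x in seg))  # stand-in for spike-band power
    return crossings, power


def bin_features(crossings, power):
    """Sum crossings and average power over each 10 ms bin."""
    counts, sbp = [], []
    for start in range(0, len(crossings) - SEGS_PER_BIN + 1, SEGS_PER_BIN):
        counts.append(sum(crossings[start:start + SEGS_PER_BIN]))
        sbp.append(mean(power[start:start + SEGS_PER_BIN]))
    return counts, sbp


def zscore(values):
    """Offline z-score (assumption); a real-time system would need running statistics."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma if sigma > 0 else 0.0 for v in values]


def causal_smooth(values, alpha=0.5):
    """Exponential moving average (assumption); uses only past and current bins."""
    out, state = [], values[0]
    for v in values:
        state = alpha * v + (1 - alpha) * state
        out.append(state)
    return out


# Synthetic 30 ms recording with four artificial "spikes".
voltage = [0.0] * 900
for i in (45, 400, 700, 760):
    voltage[i] = -100.0

crossings, power = segment_features(voltage, threshold=-50.0)
counts, sbp = bin_features(crossings, power)
features = causal_smooth(zscore(counts))
```

Here `counts` holds one threshold-crossing count per 10 ms bin and `features` is the normalized, smoothed sequence that a decoder would consume. The smoothing is deliberately causal, since a closed-loop system cannot look ahead at future bins.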