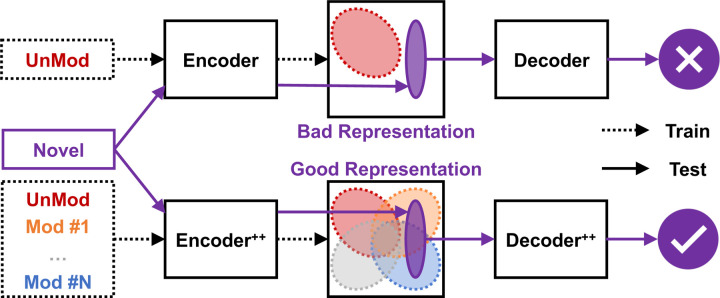

Figure 1. Overview of the basecaller training strategy.

Basecallers generally consist of encoder and decoder neural networks: the encoder first condenses nanopore sequencing readouts into highly informative representations, and the decoder then transforms these representations into nucleotide sequences. Training with diverse modifications will expand the representation space, thus making basecallers generalizable to novel modifications. UnMod and Mod denote the unmodified and modified training data categories, respectively. Novel denotes an out-of-sample modification in the test data. Encoder++ and Decoder++ denote the components of the basecaller trained with diverse modifications, as opposed to the basecaller trained with only the UnMod data.
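The encoder/decoder split described in the caption can be illustrated with a deliberately simplified toy. This is not the basecaller shown in the figure and involves no neural network: the `LEVELS` table, the window size, and the functions `encode`, `decode`, and `basecall` are all hypothetical, chosen only to show a signal being condensed into representations that are then mapped to nucleotides.

```python
from statistics import mean

# Hypothetical reference signal levels (arbitrary units), one per nucleotide.
# Real basecallers learn this mapping; these values are illustrative only.
LEVELS = {"A": 0.0, "C": 1.0, "G": 2.0, "T": 3.0}


def encode(signal, window=4):
    """Toy encoder: condense the raw signal into one mean value per
    non-overlapping window (a stand-in for a learned representation)."""
    return [
        mean(signal[i:i + window])
        for i in range(0, len(signal) - window + 1, window)
    ]


def decode(representations):
    """Toy decoder: map each representation to the nucleotide whose
    reference level is nearest."""
    return "".join(
        min(LEVELS, key=lambda base: abs(LEVELS[base] - r))
        for r in representations
    )


def basecall(signal, window=4):
    """Run the toy encoder followed by the toy decoder."""
    return decode(encode(signal, window))


# Made-up signal: three windows of four samples each.
signal = [0.1, -0.1, 0.0, 0.05, 2.1, 1.9, 2.0, 2.05, 3.0, 2.9, 3.1, 3.0]
print(basecall(signal))
```

In this toy, a signal level far from every entry in `LEVELS` would still be forced onto the nearest known nucleotide, which loosely mirrors why a representation space built only from UnMod data may handle a novel modification poorly.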