Abstract

In this study, machine learning (ML) algorithms were employed to predict the pseudo-1st-order reaction rate constants for the sonochemical degradation of aqueous organic pollutants under various conditions. A total of 618 sets of data, including ultrasonic, solution, and pollutant characteristics, were collected from 89 previous studies. Considering the difference between the electrical power (Pele) and calorimetric power (Pcal), the collected data were divided into two groups: data with Pele and data with Pcal. Eight input variables, including frequency, power density, pH, temperature, initial concentration, solubility, vapor pressure, and octanol–water partition coefficient (Kow), and one target variable of the degradation rate constant, were selected for ML. Statistical analysis was conducted, and outliers were determined separately for the two groups. ML models, including random forest (RF), extreme gradient boosting (XGB), and light gradient boosting machine (LGB), were used to predict the pseudo-1st-order reaction rate constants for the removal of aqueous pollutants. The prediction performance of the ML models was evaluated using different metrics, including the root mean squared error (RMSE), mean absolute error (MAE), and R squared (R2). A significantly higher prediction performance was obtained using data without outliers and augmented data. Consequently, all the applied ML models could be used to predict the sonochemical degradation of aqueous pollutants, and the XGB model showed the highest accuracy in predicting the rate constants. In addition, the power density and frequency were the most influential factors among the eight input variables in prediction with the Shapley additive explanation (SHAP) values method. The degradation rate constants of the two pollutants over a wide frequency range (20–1,000 kHz) were predicted using the trained ML model (XGB) and the prediction results were analyzed.

Keywords: Machine learning, Ultrasound, Cavitation, Sonochemical degradation, Reaction rates

1. Introduction

With rapid advancements in computer processing capabilities and algorithmic methodologies, effective machine learning (ML) and deep learning (DL) algorithms have been developed and applied in numerous domains, such as healthcare, finance, autonomous driving, and sound analysis [1], [2], [3], [4], [5], [6], [7]. Environmental engineering researchers have begun applying these techniques for data analytics. Since the late 2010s, extensive ML research has been conducted, with most studies focusing on air pollution monitoring and water quality management [8], [9], [10], [11], [12].

Over the last two decades, advanced oxidation processes (AOPs) have been widely investigated for the removal of recalcitrant and emerging pollutants using electromagnetic and mechanical wave energies and a variety of oxidizing chemicals and catalysts [13], [14], [15], [16], [17], [18]. Recent advancements in ML have allowed AOP researchers to investigate various process variables from new perspectives and to predict pollutant removal [19], [20], [21], [22], [23], [24], [25], [26], [27], [28], [29]. The ML-based approach is expected to streamline experimental processes by reducing time and obviating extensive chemical- and labor-based experiments [19], [20]. By training a suitable ML algorithm on experimental data collected from previous research, pollutant degradation (removal efficiencies and reaction rate constants) can be predicted. Recently, researchers have reported the application of ML techniques to ultrasound-based AOPs. Zhou et al. predicted pseudo-1st-order reaction rate constants for the removal of pharmaceuticals in sonoelectrochemical processes using various ML models. They selected 15 input variables, including six electro-oxidation features, two ultrasonic features, four pollutant features, and three environmental features [21]. A research group investigated ML models to predict the tetracycline removal rate in sonophotocatalytic processes using various catalysts. They developed ML algorithms with tetracycline concentration, catalyst dose, irradiation time, light power, ultrasound on/off, and additive oxidant concentration as input variables [22], [23], [30]. Glienke et al. obtained experimental data for the degradation of 32 phenol derivatives using an 860 kHz sonoreactor and analyzed the data using various ML models [31]. All of the above-mentioned studies used only the authors' own experimental data for training the ML models and evaluating their performance, and the numbers of datasets were relatively small compared with those in previous AOP research.

This study aims to investigate the applicability of ML to sonochemistry using datasets collected from previous studies. Three well-known ML models, random forest (RF), extreme gradient boosting (XGB), and light gradient boosting machine (LGB), were used to predict the pseudo-1st-order reaction rate constants of sonochemical pollutant degradation. A total of 618 datasets from 89 previous research papers, providing a comprehensive understanding of sonochemical degradation, were used for the training of the ML models. Eight input variables, including frequency, power density, pH, temperature, initial concentration, solubility, vapor pressure, and octanol–water partition coefficient (Kow), and one target variable, the degradation rate constant, were considered. Different metrics, including the root mean squared error (RMSE), mean absolute error (MAE), and R squared (R2), were analyzed and compared to evaluate the performance of the ML models. In addition, the rate constants for the removal of two selected pollutants (naphthol blue black and phenol), which are the most frequently reported pollutants in our collected data, under various frequency conditions (20–1,000 kHz) were predicted using the trained ML model.

2. Experiment and analytical methodology

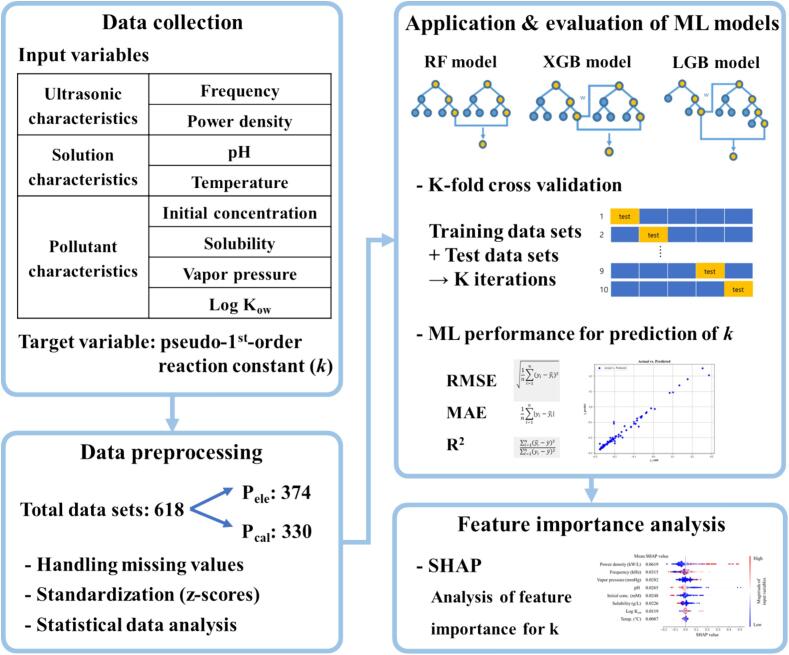

A brief flowchart of the ML workflow using data from previous research on sonochemical processes is shown in Fig. 1. A standard office desktop computer (Intel i7-8700 CPU, 3.20 GHz; 16 GB RAM) was used in this study.

Fig. 1.

Flow chart for machine learning using data from previous research on sonochemical processes.

2.1. Data collection

A literature search was conducted using bibliographic databases such as Google Scholar with five major keywords: “sonochemical,” “degradation,” “ultrasound,” “ultrasonic,” and “oxidation.” This search yielded 618 sets of data from 89 articles published since 2000 on the degradation of aqueous pollutants in sonochemical processes using only ultrasound, without other AOPs. Considering the ultrasonic, solution, and pollutant characteristics, each dataset comprised eight input variables, namely frequency, power density, pH, temperature, initial concentration, solubility, vapor pressure, and octanol–water partition coefficient (Kow), and one target variable, the pseudo-1st-order reaction rate constant for the removal of pollutants. Some rate constants were obtained from the figures of research papers using a high-resolution monitor (QHD 2560 × 1440) and PlotDigitizer software (https://plotdigitizer.com/) [32], [33]. Some data on pollutant characteristics were obtained from well-known websites, such as PubChem (https://pubchem.ncbi.nlm.nih.gov/) and the Toxin and Toxin Target Database (T3DB, https://www.t3db.ca/). The collected data and a reference list are provided in the Supplementary Material. Although the number of datasets was larger than those in previous research, more datasets should be added to enhance the diversity of the data [21], [22], [23], [30], [31]. In addition, it should be noted that geometric effects were not considered in this study because previous researchers rarely reported the details of the geometric conditions in their studies. Recently, it has been reported that small changes in the geometric conditions can result in significant differences in sonochemical activity [34], [35], [36], [37], [38], [39].

2.2. Data preprocessing

In sonochemistry, information on the input power for ultrasound irradiation is provided in terms of electrical power (Pele), which represents the energy consumed by the ultrasound generator, or calorimetric power (Pcal), which represents the heat energy induced by the absorption of ultrasound in a liquid medium. It has been reported that the difference between electrical power and calorimetric power is quite significant and Pcal typically ranges from 45 to 85 % of Pele depending on the applied ultrasonic system [35], [36], [39]. Previous researchers provided either Pcal or Pele in their studies. Thus, the collected datasets were divided into two categories [data with Pele (374 sets) and data with Pcal (330 sets)] and analyzed separately. In addition, considering the size (or volume) difference of the sonoreactors, the power density (Pele or Pcal / liquid volume) was used instead of Pele or Pcal.
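
The power density normalization described above can be illustrated with a minimal Python sketch. The function name, the 60 W input, the 250 mL volume, and the 50 % calorimetric efficiency are hypothetical values chosen for illustration (the efficiency lies within the 45–85 % range cited above); they are not taken from the collected data.

```python
def power_density_kw_per_l(power_w: float, volume_ml: float) -> float:
    """Return the power density in kW/L from a power in W and a liquid volume in mL."""
    if volume_ml <= 0:
        raise ValueError("liquid volume must be positive")
    # W -> kW and mL -> L both divide by 1000, so the two factors cancel.
    return (power_w / 1000.0) / (volume_ml / 1000.0)


# Hypothetical reactor: 60 W electrical input and 250 mL of solution.
p_ele_density = power_density_kw_per_l(60.0, 250.0)

# Assumed calorimetric efficiency of 50 % (Pcal = 0.5 x Pele), for illustration only.
p_cal_density = power_density_kw_per_l(60.0 * 0.5, 250.0)
```

Because the same reactor yields different power densities depending on whether Pele or Pcal is reported, mixing the two in one feature column would not be meaningful, which is consistent with analyzing the two groups separately.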

Some data, including temperature, pH, solubility, vapor pressure, and log Kow, were not available, and missing data were replaced to obtain complete ML datasets. The temperature and pH were assumed to be 25 °C and 6, respectively. Missing values of solubility, vapor pressure, and log Kow were estimated based on related references and the reliable data we collected [40], [41].

The datasets were statistically analyzed, and outliers, defined as values more than three standard deviations above or below the mean of each variable, were identified [42], [43], [44]. The data were then divided into two groups that were analyzed separately using the ML models: data including the outliers and data excluding the outliers. It has been reported that more accurate results can be obtained for predicting target values using data without outliers in ML algorithms [42], [44], [45], [46].
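
A three-standard-deviation filter of this kind might be sketched as follows. This is a minimal illustration, not the authors' code: it assumes that the population standard deviation is used and that a row is dropped if any of its variables is an outlier, neither of which is specified in the text. The toy rows are invented.

```python
import statistics


def three_sigma_mask(values):
    """Return True for each value lying within mean +/- 3 population standard deviations."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        # A constant column has no outliers.
        return [True] * len(values)
    return [abs(v - mean) <= 3 * std for v in values]


def drop_outlier_rows(rows, columns):
    """Keep only the rows that are within 3 sigma in every listed column."""
    keep = [True] * len(rows)
    for col in columns:
        mask = three_sigma_mask([row[col] for row in rows])
        keep = [k and m for k, m in zip(keep, mask)]
    return [row for row, k in zip(rows, keep) if k]


# Toy data (not from the paper): 19 ordinary rows and one extreme power density.
rows = [{"power_density": 0.2 + 0.01 * i, "k": 0.5} for i in range(19)]
rows.append({"power_density": 50.0, "k": 0.5})

cleaned = drop_outlier_rows(rows, ["power_density", "k"])
```

In this toy example only the row with the extreme power density is removed.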

As the final step in data preprocessing for ML training, the data were standardized to have a mean of zero and a standard deviation of one using z-scores, owing to the large differences in the magnitudes of the variables [47], [48], [49], [50]. The preprocessed data were then partitioned into training and validation data using K-fold cross validation (K=10) to prevent overfitting of the ML models [47], [48], [49].
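
The standardization and partitioning steps can be sketched with the Python standard library alone. This is an illustrative sketch under stated assumptions: the helper names and the shuffling seed are hypothetical, and the frequency values are toy numbers. In practice, equivalent utilities from scikit-learn would typically be used.

```python
import random
import statistics


def zscore(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


def kfold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, val_idx) pairs for shuffled K-fold cross validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


# Toy frequencies in kHz (illustrative values only).
frequency = [20, 35, 200, 300, 358, 404, 500, 585, 860, 1140]
frequency_z = zscore(frequency)

# 275 is the size of the Pcal data excluding the outliers reported in this study.
folds = list(kfold_indices(275, k=10, seed=0))
```

With K = 10, each fold holds out roughly 10 % of the data for validation and trains on the remaining 90 %. One design point worth noting is that computing the mean and standard deviation from the training folds only, rather than from the whole dataset, avoids leaking information from the validation fold; the text does not state which variant was used.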

2.3. Model experiment (construction)

ML models, including RF, XGB, and LGB, which are commonly used in various fields, were used in this study [20], [26], [27], [28], [29], [50], [51], [52]. In our preliminary tests, relatively poor prediction accuracies were obtained using linear, ridge, and lasso regression models. All the programs for running the ML models were implemented in Python (ver. 3.10.13) using the scikit-learn (ver. 1.3.0), xgboost (ver. 1.7.3), and LightGBM (ver. 4.0.0) libraries. The hyperparameters of the applied ML models were optimized using the tree-structured Parzen estimator algorithm based on the RMSE and cross-validation results. The optimized hyperparameter values for XGB, LGB, and RF are shown in Table 1S [53], [54].

Table 1.

Model performance evaluation metrics.

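| Evaluation metrics | Mathematical expressions |
|---|---|
| Root Mean Squared Error (RMSE) | $\sqrt{\dfrac{1}{n}\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2}$ |
| Mean Absolute Error (MAE) | $\dfrac{1}{n}\sum_{i=1}^{n}\left\lvert y_i-\hat{y}_i\right\rvert$ |
| R Squared (R2) | $1-\dfrac{\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i-\bar{y}\right)^2}$ |

Here, $y_i$ is the actual value, $\hat{y}_i$ is the predicted value, $\bar{y}$ is the mean of the actual values, and $n$ is the number of data points.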

2.4. Performance evaluation metrics

The accuracies of the ML models were evaluated using MAE, RMSE, and R2, also known as the coefficient of determination (Table 1) [55], [56]. MAE represents the average absolute error between the predicted and actual values, and RMSE is the square root of the mean squared error (MSE), which is the average of the squared errors. MAE and RMSE values closer to 0 indicate higher prediction performance. The R2 value reflects how well the predictions of the generated model explain the variation in the actual values, with a value closer to 1 indicating higher model performance.
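
The three metrics follow their standard definitions and can be written directly in Python. The sketch below is illustrative only; the actual and predicted values are invented numbers rather than results from this study.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Invented rate constants (x10^-3 /sec) for illustration.
y_true = [0.16, 0.39, 0.90, 1.24]
y_pred = [0.20, 0.35, 0.80, 1.30]

scores = {"RMSE": rmse(y_true, y_pred), "MAE": mae(y_true, y_pred), "R2": r2(y_true, y_pred)}
```

Under this definition, R2 equals 0 for a model that always predicts the mean of the actual values and becomes negative when the predictions are worse than that constant predictor.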

Furthermore, the SHAP value method was employed to analyze the contribution of each input variable to the predicted target variable. SHAP assigns a Shapley value to each input variable (or feature), which measures the contribution of that variable to the model output relative to a baseline prediction (the mean prediction over the data). These values play a pivotal role in understanding the impact of specific features on the predictive decisions of a model [57].
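
To make the idea of a Shapley value concrete, the sketch below computes exact Shapley values for a three-feature toy function by enumerating all feature subsets. It is a simplified illustration, not the SHAP library and not the trained models of this study: features absent from a subset are replaced by a single baseline point (an assumption made here for brevity), and `toy_model`, `x`, and `baseline` are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x relative to a single baseline point."""
    n = len(x)

    def value(subset):
        # Features in `subset` keep their real value; the others take the baseline value.
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(features):
    """Hypothetical additive model; NOT the trained XGB model."""
    power_density, frequency_khz, ph = features
    return 2.0 * power_density - 1e-6 * (frequency_khz - 400.0) ** 2 - 0.05 * ph


x = [0.48, 300.0, 3.0]          # sample to explain (toy values)
baseline = [0.24, 35.0, 6.0]    # reference point (toy values)
phi = shapley_values(toy_model, x, baseline)
```

The contributions sum to the difference between the prediction at `x` and the prediction at the baseline, which is the additivity property that allows SHAP values to be read as per-feature shares of a prediction. Exact enumeration scales exponentially with the number of features, so practical tools rely on model-specific or approximate algorithms.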

3. Results and discussion

3.1. Statistical analysis of collected data

Tables 2 and 3 show the results of a simple statistical analysis of the collected data, including the outliers [eight input variables: frequency, power density, pH, temperature, initial concentration, solubility, vapor pressure, and octanol–water partition coefficient (Kow); one target variable: pseudo-1st-order reaction rate constant], with Pele and Pcal, respectively. Tables 2S and 3S present the statistical analysis results without outliers. Figs. 1S–4S show the histogram plots of data with Pele including the outliers (374 sets), data with Pele excluding the outliers (326 sets), data with Pcal including the outliers (330 sets), and data with Pcal excluding the outliers (275 sets). Although many more datasets for the ultrasonic degradation of organic pollutants under a wide range of experimental conditions were collected compared to previous research [22], [31], [58], the data were not distributed evenly. This could lead to low accuracy in the target values predicted by the developed ML algorithms for input variables in untrained or poorly trained ranges.

Table 2.

Descriptive statistics of the collected data with Pele (374 sets) (the outliers were included).

| | Frequency (kHz) | Power density (kW/L) | pH | Temp. (°C) | Initial conc. (mM) | Solubility (g/L) | Log Kow | Vapor pressure (mmHg) | Rate constants (×10⁻³/sec) |
|---|---|---|---|---|---|---|---|---|---|
| mean | 358 | 0.82 | 5.92 | 22.8 | 2.25 | 12.88 | 2.64 | 0.25 | 0.72 |
| std | 283 | 2.92 | 1.87 | 5.59 | 28.34 | 47.9 | 1.80 | 1.31 | 1.23 |
| min | 20 | 8 × 10⁻⁵ | 2.0 | 10 | 3.67 × 10⁻⁵ | 1.30 × 10⁻⁶ | −1.20 | 2.40 × 10⁻¹³ | 7.56 × 10⁻⁵ |
| 25 % | 200 | 0.16 | 5.5 | 20 | 0.01 | 0.04 | 1.46 | 4.74 × 10⁻⁶ | 0.16 |
| 50 % | 300 | 0.24 | 6.0 | 23.5 | 0.03 | 0.2 | 2.9 | 9.01 × 10⁻⁵ | 0.39 |
| 75 % | 497 | 0.48 | 6.5 | 25 | 0.10 | 4.3 | 3.83 | 3.08 × 10⁻³ | 0.90 |
| max | 1,700 | 50 | 13.0 | 80 | 545.9 | 818 | 6.91 | 12.0 | 19.19 |

Table 3.

Descriptive statistics of the collected data with Pcal (330 sets) (the outliers were included).

| | Frequency (kHz) | Power density (kW/L) | pH | Temp. (°C) | Initial conc. (mM) | Solubility (g/L) | Log Kow | Vapor pressure (mmHg) | Rate constants (×10⁻³/sec) |
|---|---|---|---|---|---|---|---|---|---|
| mean | 432 | 0.47 | 5.84 | 24.6 | 1.11 | 15.67 | 2.74 | 16.66 | 1.24 |
| std | 396 | 1.79 | 1.96 | 9.59 | 6.42 | 53.64 | 2.03 | 69.08 | 4.42 |
| min | 20 | 0.02 | 2.0 | 1 | 4.76 × 10⁻⁵ | 1.30 × 10⁻⁶ | −9.7 | 2.57 × 10⁻¹² | 7.56 × 10⁻⁵ |
| 25 % | 35 | 0.08 | 5.5 | 20 | 26.7 × 10⁻³ | 0.02 | 1.94 | 6.68 × 10⁻⁶ | 0.08 |
| 50 % | 404 | 0.15 | 6.0 | 25 | 0.086 | 0.50 | 2.9 | 9.01 × 10⁻⁵ | 0.23 |
| 75 % | 600 | 0.25 | 7.0 | 25 | 0.5 | 10 | 3.83 | 0.087 | 0.78 |
| max | 1,700 | 12.6 | 12.7 | 70 | 100 | 717 | 6.91 | 297 | 44.33 |

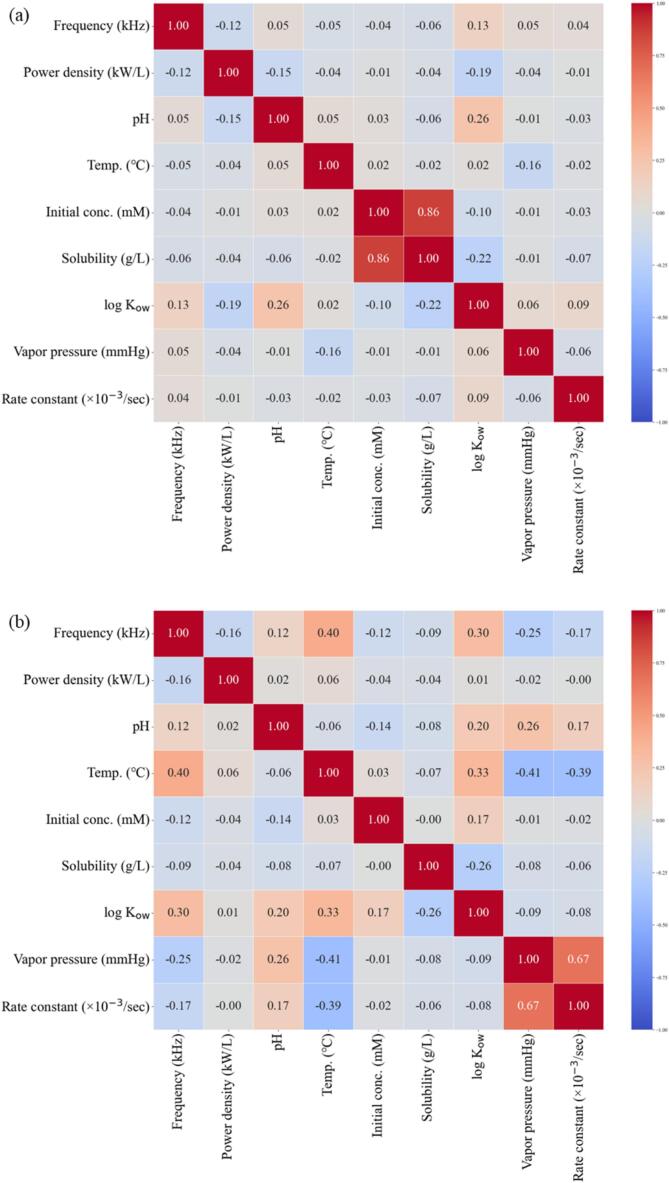

Before the ML analysis, the linear relationship between the variables was investigated using Pearson correlation with the data including the outliers, as shown in Fig. 2. The Pearson correlation coefficient ranges from −1.00 to +1.00 and represents the strength and direction of the linear relationship between two variables [19], [25], [59]. Most variables in the collected data did not demonstrate a linear relationship. For the data with Pcal, a relatively strong linear relationship between vapor pressure and rate constant was obtained, which could be due to the presence of large outliers [the maximum vapor pressure was approximately 10¹⁵ times higher than the minimum vapor pressure (malachite green: 2.4 × 10⁻¹³ mmHg; carbon disulfide: 297 mmHg)]. As shown in Fig. 5S(b), only a weak linear relationship between the vapor pressure and rate constant was obtained using data that excluded outliers.

Fig. 2.

Pearson correlation matrix of eight input variables and one target variable: a) data with Pele including the outliers, b) data with Pcal including the outliers.
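
The sensitivity of the Pearson coefficient to a single extreme point, which is the explanation offered above for the vapor pressure result, can be reproduced with a small sketch. The numbers below are invented for illustration and are not the collected data.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Nine toy points with essentially no linear relationship.
x = [1, 2, 3, 4, 5, 6, 7, 8, 9]
y = [5, 3, 6, 2, 7, 4, 6, 3, 5]
r_without_outlier = pearson_r(x, y)

# Adding one extreme point dominates both the covariance and the variances.
r_with_outlier = pearson_r(x + [300], y + [45])
```

In this toy case the coefficient moves from close to zero to close to one after a single point is added, which is why the correlation matrices with and without outliers are worth comparing.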

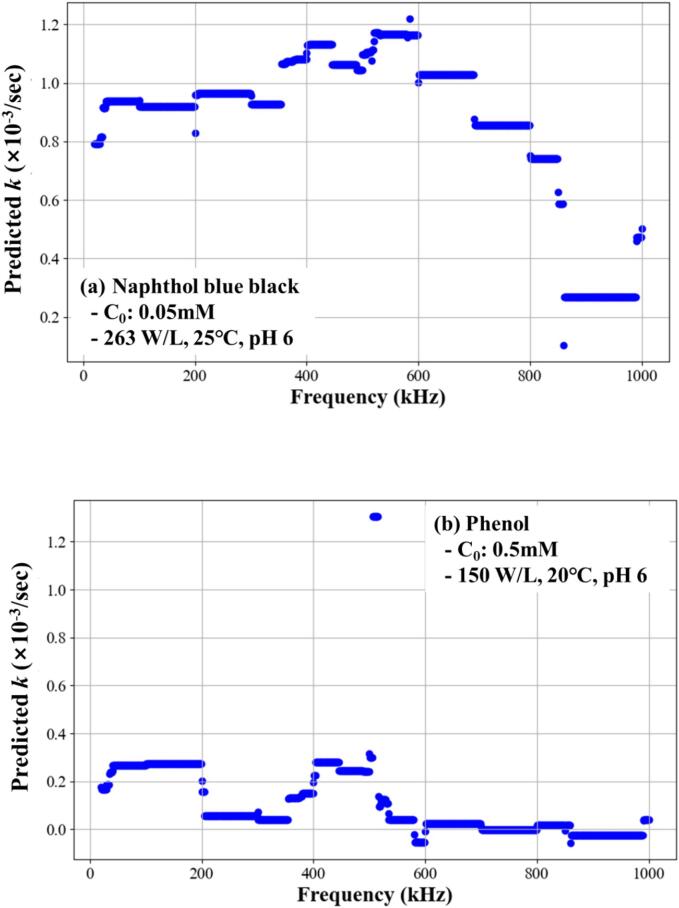

Fig. 5.

Predicted pseudo-1st-order reaction kinetic constants for the sonochemical degradation of naphthol blue black or phenol under various frequency conditions (20 to 1,000 kHz) using the XGB model with the augmented data.

ML algorithms are widely recognized for their ability to handle nonlinear relationships and offer more flexible solutions when the data distribution is unknown or nonnormal. Therefore, given the limitations of simple statistical methods in providing valuable conclusions, we opted for ML methods to analyze our data further [47]. Feature engineering techniques were not used in this study because of the relatively small number of features [60], [61], [62].

3.2. Comparison of generated models

The performance of the trained ML models in predicting the rate constants was evaluated for four types of data using the RMSE, MAE, and R2: 1) data with Pele including the outliers (374 sets), 2) data with Pele excluding the outliers (326 sets), 3) data with Pcal including the outliers (330 sets), and 4) data with Pcal excluding the outliers (275 sets). Table 4 shows the RMSE, MAE, and R2 results averaged over 10 iterations for the “Train” and “Test” cases: “Train” represents the accuracy evaluated on the training data (randomly selected 90 % of the data), and “Test” represents the accuracy evaluated on the data not used for training (remaining 10 % of the data) in K-fold cross validation. MAE and RMSE values closer to 0 and an R2 value closer to 1 indicate higher prediction performance [55], [56].

Table 4.

Evaluation of the performance of ML models for the rate constant prediction using the RMSE, MAE, and R2 [“Train” represents the accuracy evaluated on the training data (randomly selected 90 % of the data) and “Test” represents the accuracy evaluated on the data not used for training (remaining 10 % of the data) in K-fold cross validation].

| ML model | Evaluation metric | Data with Pele including the outliers | Data with Pele excluding the outliers | Data with Pcal including the outliers | Data with Pcal excluding the outliers |
|---|---|---|---|---|---|
| RF | RMSE (Train) | 0.4265 | 0.0368 | 0.2185 | 0.0020 |
| RF | MAE (Train) | 0.1220 | 0.0742 | 0.0988 | 0.0187 |
| RF | R2 (Train) | 0.5937 | 0.9134 | 0.7791 | 0.9334 |
| RF | RMSE (Test) | 0.9679 | 0.2286 | 0.5358 | 0.0160 |
| RF | MAE (Test) | 0.3883 | 0.2740 | 0.2357 | 0.0744 |
| RF | R2 (Test) | −0.2514 | 0.4041 | −0.1461 | 0.4792 |
| XGB | RMSE (Train) | 0.4588 | 0.0505 | 0.0791 | 0.0019 |
| XGB | MAE (Train) | 0.1683 | 0.1109 | 0.0686 | 0.0243 |
| XGB | R2 (Train) | 0.5614 | 0.8811 | 0.9198 | 0.9360 |
| XGB | RMSE (Test) | 0.9586 | 0.2144 | 0.5457 | 0.0160 |
| XGB | MAE (Test) | 0.3487 | 0.2616 | 0.1813 | 0.0678 |
| XGB | R2 (Test) | −0.1954 | 0.4490 | −2.4308 | 0.4709 |
| LGB | RMSE (Train) | 0.4231 | 0.0318 | 0.0020 | 0.0001 |
| LGB | MAE (Train) | 0.1103 | 0.0559 | 0.0197 | 0.0052 |
| LGB | R2 (Train) | 0.5975 | 0.9251 | 0.9979 | 0.9972 |
| LGB | RMSE (Test) | 0.9688 | 0.2380 | 0.3436 | 0.0140 |
| LGB | MAE (Test) | 0.3702 | 0.2892 | 0.1775 | 0.0665 |
| LGB | R2 (Test) | −0.2378 | 0.3414 | 0.2291 | 0.5357 |

Higher accuracy was obtained for the data with Pcal than for those with Pele, which indicated that the Pcal data might be more appropriate for quantitatively analyzing sonochemical activity and applying the ML algorithms. It should be noted that the Pele data included both Pele values measured using a power meter and Pele values displayed on the device (or provided by the manufacturer). Moreover, the results using data that excluded outliers showed higher accuracy because the ML models were trained over narrower variable ranges [42], [43], [44], [45]. However, this could significantly reduce diversity in the collected datasets and narrow the variable scope of the ML application. More datasets should be collected to reduce the outliers, and appropriate outlier handling methods should be developed to ensure diversity in datasets [63], [64].

The evaluation metrics (RMSE, MAE, and R2) indicated higher accuracy for the “Train” cases than for the “Test” cases. This was because, in the “Train” cases, the trained ML model was tested on datasets that it had already experienced. On the other hand, the evaluation metrics for “Test” were obtained from new datasets that the ML model had never experienced [65]. This indicates that ML methods might be somewhat vulnerable to less-trained or unseen data, which is why more related data should be accumulated over wider ranges for the potential use of ML. The results in Table 4 also indicated that the relatively low accuracy for “Test” might reflect the wide ranges of the variables collected in this study [66]. Among the three ML models, the XGB model showed the most reliable results, especially for the data with Pcal excluding the outliers. The difficulty of determining which ML model is more appropriate for handling collected data has previously been reported [19], [25], [30], [67].

3.3. Data augmentation

To improve the accuracy of ML models, data augmentation has been widely utilized to address data scarcity in the image and natural language domains [68], [69], [70]. Data augmentation has also been used to increase the number of chemical structure datasets in chemistry [71], [72], [73], [74], [75], [76]. However, in the chemical and environmental engineering fields, its application in ML/DL has rarely been reported. Numerical data can be augmented using a Gaussian distribution, or by randomly generating new datasets of input variables from the original data [77]. However, data that are randomly generated or significantly different from the original data may result in low accuracy of the ML model, despite the use of more datasets. In this study, we suggested a novel noise injection method to increase the size of the training data. New datasets were generated by adding noise ranging from ±0.01 % to ±10 % of the original values to the original data (data with Pcal excluding the outliers), and these datasets were used for the prediction of the rate constants in the ML models.

As shown in Fig. 3, the augmented data resulted in significantly higher accuracy for all applied models, and the smallest change in the original datasets (adding a value of 0.01 %) induced the most reliable ML performance in this study. This indicates that an increase in the number of datasets with a slight change in the variables could noticeably enhance the ML performance. However, it is not clear whether augmentation methods such as noise injection are statistically reliable because few ML results using data augmentation have been reported in the context of chemical and environmental engineering data.

Fig. 3.

Comparison of performance of ML models using the augmented data.
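
A relative noise injection of the kind described in this section might be sketched as follows. The exact procedure is not detailed in the text, so the sketch rests on several assumptions that should be read as such: each input variable is multiplied by (1 + u) with u drawn uniformly from [−level, +level], the target rate constant `k` is left unchanged, and the function name, the number of copies, and the toy rows are hypothetical.

```python
import random


def augment_with_noise(rows, feature_names, level=0.0001, copies=1, seed=0):
    """Return the original rows plus noisy copies with relative noise up to +/- level."""
    rng = random.Random(seed)
    augmented = [dict(row) for row in rows]
    for row in rows:
        for _ in range(copies):
            noisy = dict(row)
            for name in feature_names:
                # level=0.0001 corresponds to a perturbation of at most 0.01 %.
                noisy[name] = row[name] * (1.0 + rng.uniform(-level, level))
            augmented.append(noisy)
    return augmented


# Toy rows (illustrative values only); "k" is the target and is not perturbed here.
rows = [
    {"frequency": 300.0, "power_density": 0.15, "ph": 6.0, "k": 0.23},
    {"frequency": 600.0, "power_density": 0.25, "ph": 7.0, "k": 0.78},
]
features = ["frequency", "power_density", "ph"]
augmented = augment_with_noise(rows, features, level=0.0001, copies=3)
```

One point to keep in mind when evaluating models on such data is that, if noisy copies of a validation sample are also present in the training folds, the validation scores may be optimistic. The text does not state at which stage of the cross validation the augmentation was applied.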

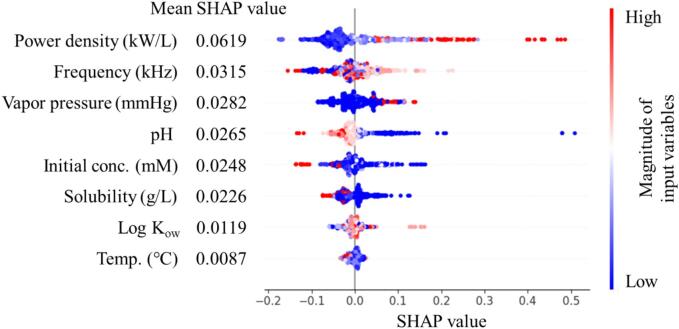

ML models are difficult to understand intuitively and are therefore often called black-box models [57], [78]. To understand the contribution of each input variable to the predicted target values (rate constants in this study), which are the outcome of the trained ML model, the SHAP method was applied to the aforementioned augmented data. In Fig. 4, the input variables are listed from top to bottom in descending order of their influence on the ML-predicted target value, and the most influential variables were the power density (mean SHAP value: 0.0619) and frequency (mean SHAP value: 0.0315) in this study. Vapor pressure, pH, initial concentration, and solubility also made considerable contributions to the ML predictions. In addition, more positive SHAP values of the input variables have a more positive impact on the predicted target values, whereas negative SHAP values have the opposite effect. Thus, the application of a high power density (positive SHAP values/red color and negative SHAP values/blue color), moderate frequency (positive SHAP values/white color and negative SHAP values/red and blue color), and low pH (positive SHAP values/blue color and negative SHAP values/red color) can result in high rate constants for sonochemical degradation. This result appeared somewhat consistent with previous sonochemistry results [79], [80], and further data accumulation is required to better understand the contribution of the input variables in the ML analysis.

Fig. 4.

Input variable contribution analysis on the target value (rate constants) using the SHAP method.

3.4. Application of trained ML model

The applicability of the trained ML models using augmented data was investigated to predict the pseudo-1st-order reaction kinetic constant for sonochemical degradation in the frequency range of 20–1,000 kHz. Fig. 5 shows the predicted results of the ML models for two pollutants, naphthol blue black and phenol, which were the most reported pollutants in our collected data. Other input variables, such as the power density, initial concentration, temperature, and pH, were determined as the median values of the collected datasets of the target pollutant (naphthol blue black or phenol), as shown in Fig. 5.
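
The construction of the prediction inputs described above (a frequency sweep with the remaining operating variables fixed at their medians) can be sketched as follows. The helper name, the 20 kHz step, and the toy phenol-like records are hypothetical; the trained model itself is not reproduced here, so the final prediction step is indicated only as a comment.

```python
import statistics


def build_frequency_sweep(pollutant_rows, fixed_features, frequencies_khz):
    """Build one query per frequency, with each fixed feature set to its median."""
    medians = {
        name: statistics.median(row[name] for row in pollutant_rows)
        for name in fixed_features
    }
    return [{"frequency": f, **medians} for f in frequencies_khz]


# Toy records for one pollutant (illustrative numbers, not the collected data).
phenol_rows = [
    {"frequency": 20, "power_density": 0.10, "ph": 5.5, "temperature": 20, "initial_conc": 0.05},
    {"frequency": 300, "power_density": 0.15, "ph": 6.0, "temperature": 25, "initial_conc": 0.10},
    {"frequency": 500, "power_density": 0.25, "ph": 7.0, "temperature": 25, "initial_conc": 0.50},
]
fixed = ["power_density", "ph", "temperature", "initial_conc"]

# 20 to 1,000 kHz in 20 kHz steps (the step size is an assumption).
frequencies = list(range(20, 1001, 20))
queries = build_frequency_sweep(phenol_rows, fixed, frequencies)

# A trained model would then be applied to each query, for example:
# predictions = [trained_model_predict(q) for q in queries]  # hypothetical callable
```

The pollutant properties (solubility, vapor pressure, and log Kow) are constant for a given pollutant and would be appended to each query unchanged. Queries at frequencies far from those present in the training data fall into untrained ranges, so the corresponding predictions should be interpreted with care.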

Higher rate constants were obtained in the frequency ranges of 100–600 and 400–500 kHz for naphthol blue black and phenol, respectively. As no significant trends in the rate constants were observed for the two pollutants, it was difficult to determine whether the predicted results were reliable. It is notable that the datasets of naphthol blue black (21 sets) were obtained from two papers from a single research group, in which only limited experimental conditions were applied, with frequencies of 585, 860, and 1140 kHz. Thus, the predicted results for the low-frequency range had relatively low accuracy. For phenol, the datasets (23 sets) were obtained from eight papers by various research groups, indicating that the ML models were trained on a variety of experimental conditions and their results. The frequencies of the phenol datasets collected were 20, 30, 35, 200, 300, 500, 520, and 534 kHz. However, despite the more varied frequency conditions, the ML models may still have been insufficiently trained under certain conditions for phenol. Consequently, it is recommended that more data over wider experimental conditions, including ultrasound-based AOPs, from different research groups be accumulated and analyzed to obtain more reliable predicted results using ML models.

4. Conclusion

In this study, ML models, including RF, XGB, and LGB, were used to predict pseudo-1st-order reaction rate constants for the sonochemical degradation of aqueous organic pollutants. A total of 618 sets of data, including ultrasonic, solution, and pollutant characteristics, were used for training the ML models. The ML models were well trained with high accuracy for the prediction of rate constants using data with Pcal excluding the outliers; the XGB model exhibited the highest performance among the applied models. Data augmentation can significantly enhance the accuracy of an ML model by increasing the size of the training data. However, the application of the trained ML model for the two selected pollutants in the frequency range of 20–1,000 kHz resulted in uncertain predictions. Additional data on a wider range of experimental conditions from different research groups are required for the use of ML models in sonochemistry.

CRediT authorship contribution statement

Iseul Na: Writing – review & editing, Writing – original draft, Validation, Methodology, Investigation, Formal analysis, Data curation, Conceptualization. Taeho Kim: Validation, Methodology, Investigation. Pengpeng Qiu: Methodology, Validation, Writing – review & editing. Younggyu Son: Writing – review & editing, Writing – original draft, Validation, Supervision, Methodology, Investigation, Funding acquisition, Formal analysis, Data curation, Conceptualization.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

This work was supported by the National Research Foundation of Korea [RS-2024-00350023] and the Korea Ministry of Environment (MOE) as part of the “Subsurface Environment Management (SEM)” Program [RS-2021-KE001466].

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.ultsonch.2024.107032.


References

- 1.Zhou X., Liang W., Wang K.I.K., Wang H., Yang L.T., Jin Q. Deep-Learning-Enhanced Human Activity Recognition for Internet of Healthcare Things. IEEE Internet Things. 2020;7:6429–6438. [Google Scholar]

- 2.Shamshirband S., Fathi M., Dehzangi A., Chronopoulos A.T., Alinejad-Rokny H. A review on deep learning approaches in healthcare systems: Taxonomies, challenges, and open issues. J. Biomed. Inform. 2021;113 doi: 10.1016/j.jbi.2020.103627. [DOI] [PubMed] [Google Scholar]

- 3.Abdullah A.A., Hassan M.M., Mustafa Y.T. A Review on Bayesian Deep Learning in Healthcare: Applications and Challenges. IEEE Access. 2022;10:36538–36562. [Google Scholar]

- 4.Bianco M.J., Gerstoft P., Traer J., Ozanich E., Roch M.A., Gannot S., Deledalle C.-A. Machine learning in acoustics: Theory and applications. J. Acoust. Soc. Am. 2019;146:3590–3628. doi: 10.1121/1.5133944. [DOI] [PubMed] [Google Scholar]

- 5.Nagarhalli T.P., Vaze V., Rana N.K. Impact of Machine Learning in Natural Language Processing: A Review, in. Third International Conference on Intelligent Communication Technologies and Virtual Mobile Networks (ICICV) 2021;2021:1529–1534. [Google Scholar]

- 6.Bachute M.R., Subhedar J.M. Autonomous Driving Architectures: Insights of Machine Learning and Deep Learning Algorithms. Mach. Learn. Appl. 2021;6 [Google Scholar]

- 7.Hoang D., Wiegratz K. Machine learning methods in finance: Recent applications and prospects. Eur. Financ. Manag. 2023;29:1657–1701. [Google Scholar]

- 8.Chen H., Zhang C., Yu H., Wang Z., Duncan I., Zhou X., Liu X., Wang Y., Yang S. Application of machine learning to evaluating and remediating models for energy and environmental engineering. Appl. Energ. 2022;320 [Google Scholar]

- 9.Sharmila V.G., Shanmugavel S.P., Banu J.R. A review on emerging technologies and machine learning approaches for sustainable production of biofuel from biomass waste. Biomass. Bioenergy. 2024;180 [Google Scholar]

- 10.Konya A., Nematzadeh P. Recent applications of AI to environmental disciplines: A review. Sci. Total Environ. 2024;906 doi: 10.1016/j.scitotenv.2023.167705. [DOI] [PubMed] [Google Scholar]

- 11.Asadollah S.B.H.S., Sharafati A., Motta D., Yaseen Z.M. River water quality index prediction and uncertainty analysis: A comparative study of machine learning models. J. Environ. Chem. Eng. 2021;9 [Google Scholar]

- 12.Zhong S., Zhang K., Bagheri M., Burken J.G., Gu A., Li B., Ma X., Marrone B.L., Ren Z.J., Schrier J., Shi W., Tan H., Wang T., Wang X., Wong B.M., Xiao X., Yu X., Zhu J.-J., Zhang H. Machine Learning: New Ideas and Tools in Environmental Science and Engineering. Environ. Sci. Technol. 2021;55:12741–12754. doi: 10.1021/acs.est.1c01339. [DOI] [PubMed] [Google Scholar]

- 13.Kang J., Choi J., Lee D., Son Y. UV/persulfate processes for the removal of total organic carbon from coagulation-treated industrial wastewaters. Chemosphere. 2024;346 doi: 10.1016/j.chemosphere.2023.140609. [DOI] [PubMed] [Google Scholar]

- 14.Lee D., Kang J., Son Y. Effect of violent mixing on sonochemical oxidation activity under various geometric conditions in 28-kHz sonoreactor. Ultrason. Sonochem. 2023;101 [Google Scholar]

- 15.Son Y. In: Handbook of Ultrasonics and Sonochemistry. Ashokkumar M., editor. Springer Singapore; Singapore: 2016. Advanced Oxidation Processes Using Ultrasound Technology for Water and Wastewater Treatment; pp. 1–22.

- 16.Son Y., Lim M., Khim J., Ashokkumar M. Attenuation of UV Light in Large-Scale Sonophotocatalytic Reactors: The Effects of Ultrasound Irradiation and TiO2 Concentration. Ind. Eng. Chem. Res. 2012;51:232–239.

- 17.Fang Z., Huang R., Chelme-Ayala P., Shi Q., Xu C., Gamal El-Din M. Comparison of UV/Persulfate and UV/H2O2 for the removal of naphthenic acids and acute toxicity towards Vibrio fischeri from petroleum production process water. Sci. Total Environ. 2019;694 doi: 10.1016/j.scitotenv.2019.133686.

- 18.Lee Y., Lee S., Cui M., Kim J., Ma J., Han Z., Khim J. Improving sono-activated persulfate oxidation using mechanical mixing in a 35-kHz ultrasonic reactor: Persulfate activation mechanism and its application. Ultrason. Sonochem. 2021;72 doi: 10.1016/j.ultsonch.2020.105412.

- 19.Kim C.-M., Jaffari Z.H., Abbas A., Chowdhury M.F., Cho K.H. Machine learning analysis to interpret the effect of the photocatalytic reaction rate constant (k) of semiconductor-based photocatalysts on dye removal. J. Hazard. Mater. 2024;465 doi: 10.1016/j.jhazmat.2023.132995.

- 20.Jiang S., Zhou Y., Xu W., Xia Q., Yi M., Cheng X. Machine learning-driven optimization and application of bimetallic catalysts in peroxymonosulfate activation for degradation of fluoroquinolone antibiotics. Chem. Eng. J. 2024;486.

- 21.Zhou Y., Ren Y., Cui M., Guo F., Sun S., Ma J., Han Z., Khim J. Sonoelectrochemical system mechanisms, design, and machine learning for predicting degradation kinetic constants of pharmaceutical pollutants. Chem. Eng. J. 2023;478.

- 22.Esmaeili A., Pourranjabar Hasan Kiadeh S., Ebrahimian Pirbazari A., Esmaeili Khalil Saraei F., Ebrahimian Pirbazari A., Derakhshesh A., Tabatabai-Yazdi F.-S. CdS nanocrystallites sensitized ZnO nanosheets for visible light induced sonophotocatalytic/photocatalytic degradation of tetracycline: From experimental results to a generalized model based on machine learning methods. Chemosphere. 2023;332:138852.

- 23.Khademakbari S., Ebrahimian Pirbazari A., Esmaeili Khalil Saraei F., Esmaeili A., Ebrahimian Pirbazari A., Akbari Kohnehsari A., Derakhshesh A. Designing of plasmonic 2D/1D heterostructures for ultrasound assisted photocatalytic removal of tetracycline: Experimental results and modeling. J. Alloys Compd. 2024;975:172994.

- 24.Xiao Z., Yang B., Feng X., Liao Z., Shi H., Jiang W., Wang C., Ren N. Density Functional Theory and Machine Learning-Based Quantitative Structure-Activity Relationship Models Enabling Prediction of Contaminant Degradation Performance with Heterogeneous Peroxymonosulfate Treatments. Environ. Sci. Technol. 2023;57:3951–3961. doi: 10.1021/acs.est.2c09034.

- 25.Zhang S.-Z., Chen S., Jiang H. A new tool to predict the advanced oxidation process efficiency: Using machine learning methods to predict the degradation of organic pollutants with Fe-carbon catalyst as a sample. Chem. Eng. Sci. 2023;280.

- 26.Taoufik N., Boumya W., Achak M., Chennouk H., Dewil R., Barka N. The state of art on the prediction of efficiency and modeling of the processes of pollutants removal based on machine learning. Sci. Total Environ. 2022;807 doi: 10.1016/j.scitotenv.2021.150554.

- 27.Zhu T., Yu Y., Chen M., Zong Z., Tao C. An innovative method for predicting oxidation reaction rate constants by extracting vital information of organic contaminants (OCs) based on diverse molecular representations. J. Environ. Chem. Eng. 2024;12.

- 28.Sun Y., Zhao Z., Tong H., Sun B., Liu Y., Ren N., You S. Machine Learning Models for Inverse Design of the Electrochemical Oxidation Process for Water Purification. Environ. Sci. Technol. 2023;57:17990–18000. doi: 10.1021/acs.est.2c08771.

- 29.Ren Y., Cui M., Zhou Y., Lee Y., Ma J., Han Z., Khim J. Zero-valent iron based materials selection for permeable reactive barrier using machine learning. J. Hazard. Mater. 2023;453 doi: 10.1016/j.jhazmat.2023.131349.

- 30.Ajami Yazdi A., Ebrahimian Pirbazari A., Esmaeili Khalil Saraei F., Esmaeili A., Ebrahimian Pirbazari A., Akbari Kohnehsari A., Derakhshesh A. Design of 2D/2D β-Ni(OH)2/ZnO heterostructures via photocatalytic deposition of nickel for sonophotocatalytic degradation of tetracycline and modeling with three supervised machine learning algorithms. Chemosphere. 2024;352:141328.

- 31.Glienke J., Schillberg W., Stelter M., Braeutigam P. Prediction of degradability of micropollutants by sonolysis in water with QSPR - a case study on phenol derivates. Ultrason. Sonochem. 2022;82 doi: 10.1016/j.ultsonch.2021.105867.

- 32.Son Y., Choi J. Effects of gas saturation and sparging on sonochemical oxidation activity in open and closed systems, part II: NO2−/NO3− generation and a brief critical review. Ultrason. Sonochem. 2023;92 doi: 10.1016/j.ultsonch.2022.106250.

- 33.Ghosh D., Sarkar A., Basu A.G., Roy S. Effect of plastic pollution on freshwater flora: A meta-analysis approach to elucidate the factors influencing plant growth and biochemical markers. Water Res. 2022;225 doi: 10.1016/j.watres.2022.119114.

- 34.Na I., Son Y. Sonochemical oxidation activity in 20-kHz probe-type sonicator systems: The effects of probe positions and vessel sizes. Ultrason. Sonochem. 2024;108 doi: 10.1016/j.ultsonch.2024.106959.

- 35.Son Y., No Y., Kim J. Geometric and operational optimization of 20-kHz probe-type sonoreactor for enhancing sonochemical activity. Ultrason. Sonochem. 2020;65 doi: 10.1016/j.ultsonch.2020.105065.

- 36.Son Y. Simple design strategy for bath-type high-frequency sonoreactors. Chem. Eng. J. 2017;328:654–664.

- 37.Son Y., Lim M., Ashokkumar M., Khim J. Geometric Optimization of Sonoreactors for the Enhancement of Sonochemical Activity. J. Phys. Chem. C. 2011;115:4096–4103.

- 38.Tran K.V.B., Asakura Y., Koda S. Influence of Liquid Height on Mechanical and Chemical Effects in 20 kHz Sonication. Jpn. J. Appl. Phys. 2013;52:07HE07.

- 39.Asakura Y., Nishida T., Matsuoka T., Koda S. Effects of ultrasonic frequency and liquid height on sonochemical efficiency of large-scale sonochemical reactors. Ultrason. Sonochem. 2008;15:244–250. doi: 10.1016/j.ultsonch.2007.03.012.

- 40.Isnard P., Lambert S. Aqueous solubility and n-octanol/water partition coefficient correlations. Chemosphere. 1989;18:1837–1853.

- 41.Dai J., Jin L., Wang L., Zhang Z. Determination and estimation of water solubilities and octanol/water partition coefficients for derivates of benzanilides. Chemosphere. 1998;37:1419–1426. doi: 10.1016/s0045-6535(98)00132-5.

- 42.Pollet T.V., van der Meij L. To Remove or not to Remove: the Impact of Outlier Handling on Significance Testing in Testosterone Data. Adap. Human Behav. Physiol. 2017;3:43–60.

- 43.Dave D., Varma T.H.R., Méan A.M. A Review of various statestical methods for Outlier Detection. International Journal of Computer Science & Engineering Technology (IJCSET) 2014;5(2):137–140.

- 44.Johansen M.B., Christensen P.A. A simple transformation independent method for outlier definition. Clinical Chemistry and Laboratory Medicine (CCLM) 2018;56:1524–1532. doi: 10.1515/cclm-2018-0025.

- 45.Liu H., Shah S., Jiang W. On-line outlier detection and data cleaning. Comput. Chem. Eng. 2004;28:1635–1647.

- 46.Kwak S.K., Kim J.H. Statistical data preparation: management of missing values and outliers. Korean J Anesthesiol. 2017;70:407–411. doi: 10.4097/kjae.2017.70.4.407.

- 47.Li L., Rong S., Wang R., Yu S. Recent advances in artificial intelligence and machine learning for nonlinear relationship analysis and process control in drinking water treatment: A review. Chem. Eng. J. 2021;405.

- 48.Wong T.T., Yeh P.Y. Reliable Accuracy Estimates from k-Fold Cross Validation. IEEE Trans. Knowl. Data Eng. 2020;32:1586–1594.

- 49.Zhang X., Liu C.-A. Model averaging prediction by K-fold cross-validation. J. Econometrics. 2023;235:280–301.

- 50.Ke G., Meng Q., Finley T., Wang T., Chen W., Ma W., Ye Q., Liu T.-Y. LightGBM: a Highly Efficient Gradient Boosting Decision Tree, in. Curran Associates Inc.; Long Beach, California, USA: 2017. pp. 3149–3157.

- 51.Breiman L. Random Forests. Mach. Learn. 2001;45:5–32.

- 52.Chen T., Guestrin C. Xgboost: A Scalable Tree Boosting System, in. Association for Computing Machinery; San Francisco, California, USA: 2016. pp. 785–794.

- 53.Nguyen H.-P., Liu J., Zio E. A long-term prediction approach based on long short-term memory neural networks with automatic parameter optimization by Tree-structured Parzen Estimator and applied to time-series data of NPP steam generators. Appl. Soft Comput. 2020;89.

- 54.Khoei T.T., Ismail S., Kaabouch N. Boosting-based Models with Tree-structured Parzen Estimator Optimization to Detect Intrusion Attacks on Smart Grid. In: 2021 IEEE 12th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON); 2021. pp. 0165–0170.

- 55.Chicco D., Warrens M.J., Jurman G. The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation. PeerJ Comput. Sci. 2021;7:e623. doi: 10.7717/peerj-cs.623.

- 56.Chai T., Draxler R.R. Root mean square error (RMSE) or mean absolute error (MAE)? – Arguments against avoiding RMSE in the literature. Geosci. Model Dev. 2014;7:1247–1250.

- 57.Štrumbelj E., Kononenko I. Explaining prediction models and individual predictions with feature contributions. Knowl. Inf. Syst. 2014;41:647–665.

- 58.Ren Q., Yin C., Chen Z., Cheng M., Ren Y., Xie X., Li Y., Zhao X., Xu L., Yang H., Li W. Efficient sonoelectrochemical decomposition of chlorpyrifos in aqueous solution. Microchem. J. 2019;145:146–153.

- 59.Deng B., Chen P., Xie P., Wei Z., Zhao S. Iterative machine learning method for screening high-performance catalysts for H2O2 production. Chem. Eng. Sci. 2023;267.

- 60.Bini S.A. Artificial Intelligence, Machine Learning, Deep Learning, and Cognitive Computing: What Do These Terms Mean and How Will They Impact Health Care? J. Arthroplasty. 2018;33:2358–2361. doi: 10.1016/j.arth.2018.02.067.

- 61.Di Mauro M., Galatro G., Fortino G., Liotta A. Supervised feature selection techniques in network intrusion detection: A critical review. Eng. Appl. Artif. Intel. 2021;101.

- 62.Verdonck T., Baesens B., Óskarsdóttir M., vanden Broucke S. Special issue on feature engineering editorial. Mach. Learn. 2024;113:3917–3928.

- 63.Smiti A. A critical overview of outlier detection methods. Comput. Sci. Rev. 2020;38.

- 64.Wang H., Bah M.J., Hammad M. Progress in Outlier Detection Techniques: A Survey. IEEE Access. 2019;7:107964–108000.

- 65.Uçar M.K., Nour M., Sindi H., Polat K. The Effect of Training and Testing Process on Machine Learning in Biomedical Datasets. Math. Probl. Eng. 2020;2020:2836236.

- 66.Ying X. An Overview of Overfitting and its Solutions. J. Phys. Conf. Ser. 2019;1168.

- 67.Mohammadzadeh Kakhki R., Jafarian shahri Y., Mohammadpoor M. KCl mediated Ag/Co@Fe2O3/C3N4 heterojunction as a highly efficient visible photocatalyst for tetracycline degradation: Application of machine learning. J. Mol. Struct. 2024;1299:137139.

- 68.Zur R.M., Jiang Y., Pesce L.L., Drukker K. Noise injection for training artificial neural networks: A comparison with weight decay and early stopping. Med. Phys. 2009;36:4810–4818. doi: 10.1118/1.3213517.

- 69.Khan U., Zahid S., Ali M.A., Ul-Hasan A., Shafait F. In: Document Analysis and Recognition – ICDAR 2021. Lladós J., Lopresti D., Uchida S., editors. Springer International Publishing; Cham: 2021. TabAug: Data Driven Augmentation for Enhanced Table Structure Recognition; pp. 585–601.

- 70.Maharana K., Mondal S., Nemade B. A review: Data pre-processing and data augmentation techniques. Glob. Transit. Proc. 2022;3:91–99.

- 71.Zhang Y., Wang L., Wang X., Zhang C., Ge J., Tang J., Su A., Duan H. Data augmentation and transfer learning strategies for reaction prediction in low chemical data regimes. Org. Chem. Front. 2021;8:1415–1423.

- 72.Magar R., Wang Y., Lorsung C., Liang C., Ramasubramanian H., Li P., Barati Farimani A. AugLiChem: data augmentation library of chemical structures for machine learning. Mach. Learn.: Sci. Technol. 2022;3.

- 73.Ulrich N., Goss K.-U., Ebert A. Exploring the octanol–water partition coefficient dataset using deep learning techniques and data augmentation. Commun. Chem. 2021;4:90. doi: 10.1038/s42004-021-00528-9.

- 74.Zhong S., Hu J., Yu X., Zhang H. Molecular image-convolutional neural network (CNN) assisted QSAR models for predicting contaminant reactivity toward OH radicals: Transfer learning, data augmentation and model interpretation. Chem. Eng. J. 2021;408.

- 75.Wu X., Zhang Y., Yu J., Zhang C., Qiao H., Wu Y., Wang X., Wu Z., Duan H. Virtual data augmentation method for reaction prediction. Sci. Rep. 2022;12:17098. doi: 10.1038/s41598-022-21524-6.

- 76.Fricke F., Brandalero M., Liehr S., Kern S., Meyer K., Kowarik S., Hierzegger R., Westerdick S., Maiwald M., Hübner M. Artificial Intelligence for Mass Spectrometry and Nuclear Magnetic Resonance Spectroscopy Using a Novel Data Augmentation Method. IEEE Trans. Emerg. Top. Comput. 2022;10:87–98.

- 77.Arslan M., Guzel M., Demirci M., Ozdemir S. SMOTE and Gaussian Noise Based Sensor Data Augmentation. In: 2019 4th International Conference on Computer Science and Engineering (UBMK); 2019. pp. 1–5.

- 78.Li Z. Extracting spatial effects from machine learning model using local interpretation method: An example of SHAP and XGBoost. Comput. Environ. Urban Syst. 2022;96.

- 79.Wei Z., Spinney R., Ke R., Yang Z., Xiao R. Effect of pH on the sonochemical degradation of organic pollutants. Environ. Chem. Lett. 2016;14:163–182.

- 80.Wood R.J., Lee J., Bussemaker M.J. A parametric review of sonochemistry: Control and augmentation of sonochemical activity in aqueous solutions. Ultrason. Sonochem. 2017;38:351–370. doi: 10.1016/j.ultsonch.2017.03.030.

Associated Data
