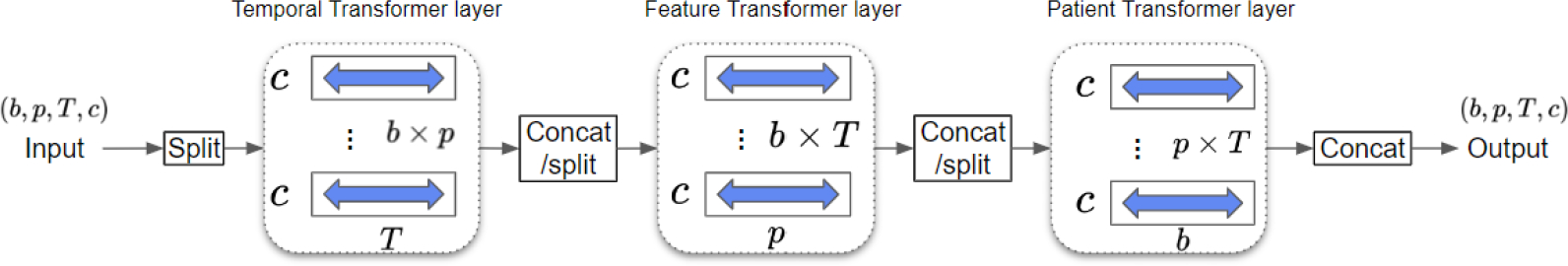

Figure 2: Architecture of the 3D attention mechanism. The input is a tensor from a given group, with dimensions for patients, features, time points, and channels. The temporal transformer layer processes inputs reshaped to learn temporal dependency. The feature transformer layer processes inputs reshaped to learn feature dependency. The patient transformer layer processes inputs reshaped to learn patient dependency.
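
To make the axis-wise idea in this caption concrete, the following is a minimal NumPy sketch and not the authors' implementation. It assumes a tensor laid out as (patients, features, time points, channels), uses a single attention head with identity query/key/value projections, and applies the three layers in sequence; the function name `axis_self_attention`, the sizes, and the ordering are all hypothetical choices made for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def axis_self_attention(x, axis):
    """Single-head self-attention along one axis of a 4-D tensor.

    Assumption: identity query/key/value projections (no learned weights),
    so this only illustrates which axis the attention runs over.
    """
    # Put the attended axis next to the channel axis: (..., L, C).
    seq = np.moveaxis(x, axis, -2)
    # Scaled dot-product scores between all positions on that axis: (..., L, L).
    scores = seq @ np.swapaxes(seq, -1, -2) / np.sqrt(seq.shape[-1])
    out = softmax(scores, axis=-1) @ seq  # (..., L, C)
    # Restore the original axis order.
    return np.moveaxis(out, -2, axis)


# Hypothetical sizes: patients, features, time points, channels.
P, F, T, C = 4, 3, 5, 8
x = np.random.default_rng(0).normal(size=(P, F, T, C))

h = axis_self_attention(x, axis=2)  # temporal: attends over time points
h = axis_self_attention(h, axis=1)  # feature: attends over features
h = axis_self_attention(h, axis=0)  # patient: attends over patients
```

In this sketch each call treats the two non-attended, non-channel axes as batch dimensions, so the temporal step never mixes information across patients or features; only the patient step lets one patient's representation depend on another's.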