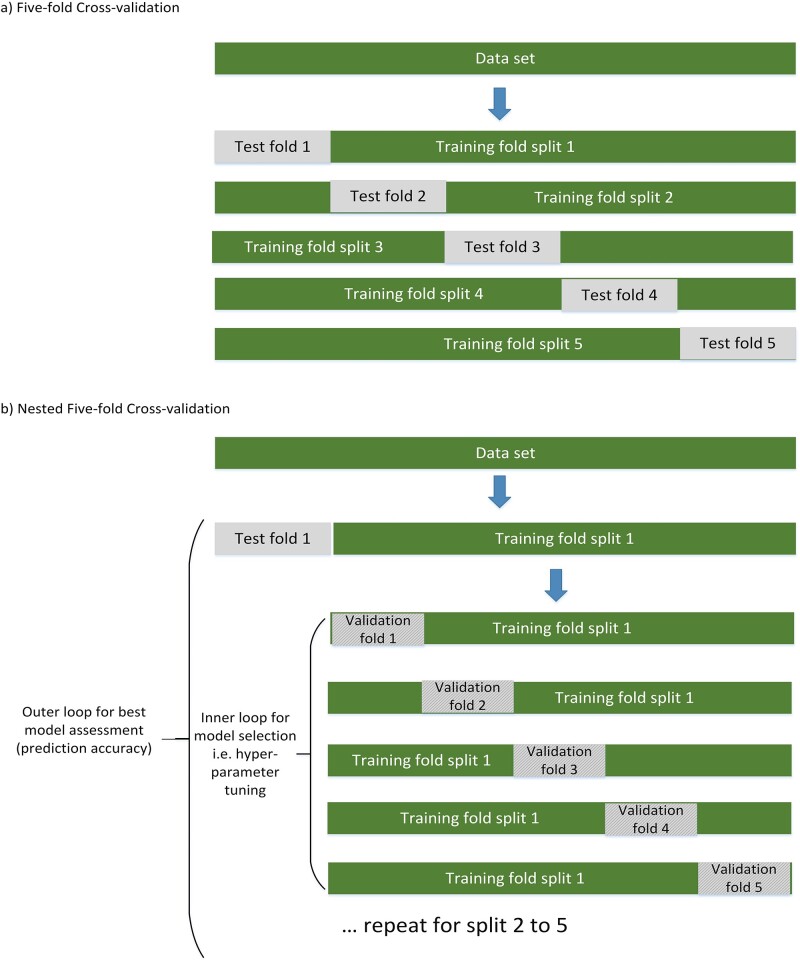

Figure 2.

Model selection and model assessment using nested cross-validation. Model assessment without model selection (i.e. without hyperparameter tuning, as with standard regression models) can be performed using 5- to 10-fold cross-validation. Five-fold cross-validation, as shown in (a), holds out one-fifth of the data as an independent test set for model assessment and uses the remaining four-fifths for training. This process is repeated across five data splits, so that each case is used exactly once as part of a test set. The results of the five test folds are averaged, and the final model is fitted using the entire sample. If model selection, such as hyperparameter tuning, is undertaken, nested cross-validation (b) must be performed. Here, for each split of the data, an additional five-fold splitting of the training data is implemented. The ‘inner loop’ is used for model selection (hyperparameter tuning) and the ‘outer loop’ for model testing; because the outer test fold plays no part in tuning, this arrangement prevents information leakage and gives a more robust estimate of the model’s performance. Additionally, to obtain a more stable estimate of performance, both the standard and nested cross-validation procedures can be repeated multiple times with different partitions of the data, and the results averaged across these repeats.
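The two procedures in the caption can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the data are synthetic, the model is a toy one-feature ridge regression through the origin, and the helper names (`k_fold_indices`, `fit_ridge`, `cv_error`, `nested_cv_error`) and the candidate penalty grid are hypothetical.

```python
import random
from statistics import mean


def k_fold_indices(n, k, rng):
    """Shuffle indices 0..n-1 and split them into k nearly equal folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_ridge(xs, ys, lam):
    """Toy model (assumption): one-feature ridge regression through the origin."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def mse(slope, xs, ys):
    """Mean squared error of the fitted slope on the given cases."""
    return mean((y - slope * x) ** 2 for x, y in zip(xs, ys))


def split(xs, ys, test):
    """Return training and test subsets for one held-out fold."""
    held_out = set(test)
    train = [i for i in range(len(xs)) if i not in held_out]
    return ([xs[i] for i in train], [ys[i] for i in train],
            [xs[i] for i in test], [ys[i] for i in test])


def cv_error(xs, ys, lam, folds):
    """(a) Standard k-fold cross-validation for a fixed hyperparameter."""
    errors = []
    for test in folds:
        x_tr, y_tr, x_te, y_te = split(xs, ys, test)
        errors.append(mse(fit_ridge(x_tr, y_tr, lam), x_te, y_te))
    return mean(errors)


def nested_cv_error(xs, ys, lambdas, k, rng):
    """(b) Nested cross-validation: inner loop selects, outer loop tests."""
    outer_errors = []
    for test in k_fold_indices(len(xs), k, rng):
        x_tr, y_tr, x_te, y_te = split(xs, ys, test)
        # Inner loop: tune lambda using the outer-training data only,
        # with the same inner folds for every candidate value.
        inner_folds = k_fold_indices(len(x_tr), k, rng)
        best_lam = min(lambdas, key=lambda lam: cv_error(x_tr, y_tr, lam, inner_folds))
        # Outer loop: refit on all outer-training data, score on the held-out fold.
        outer_errors.append(mse(fit_ridge(x_tr, y_tr, best_lam), x_te, y_te))
    return mean(outer_errors)


rng = random.Random(0)
# Synthetic sample (assumption): y = 2x + Gaussian noise.
xs = [rng.uniform(-1, 1) for _ in range(100)]
ys = [2 * x + rng.gauss(0, 0.5) for x in xs]
lambdas = [0.0, 0.1, 1.0, 10.0]  # hypothetical candidate penalties

# (a) Assessment only: no tuning, so a single five-fold loop suffices.
plain_error = cv_error(xs, ys, 0.0, k_fold_indices(len(xs), 5, rng))

# (b) Assessment with tuning: five outer folds, five inner folds each.
nested_error = nested_cv_error(xs, ys, lambdas, 5, rng)

# Final model: tune on the entire sample, then fit on the entire sample.
all_folds = k_fold_indices(len(xs), 5, rng)
final_lam = min(lambdas, key=lambda lam: cv_error(xs, ys, lam, all_folds))
final_slope = fit_ridge(xs, ys, final_lam)

print(f"standard CV error: {plain_error:.3f}")
print(f"nested CV error:   {nested_error:.3f}")
print(f"final model: lambda={final_lam}, slope={final_slope:.3f}")
```

The key design point is that the held-out outer fold is never passed to the inner tuning step, which is how the sketch avoids the information leakage described above. To repeat either procedure, as the caption suggests, one could rerun it with differently seeded generators and average the resulting errors. In practice a library would normally be used; scikit-learn, for instance, supports this pattern by passing a `GridSearchCV` estimator to `cross_val_score`.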