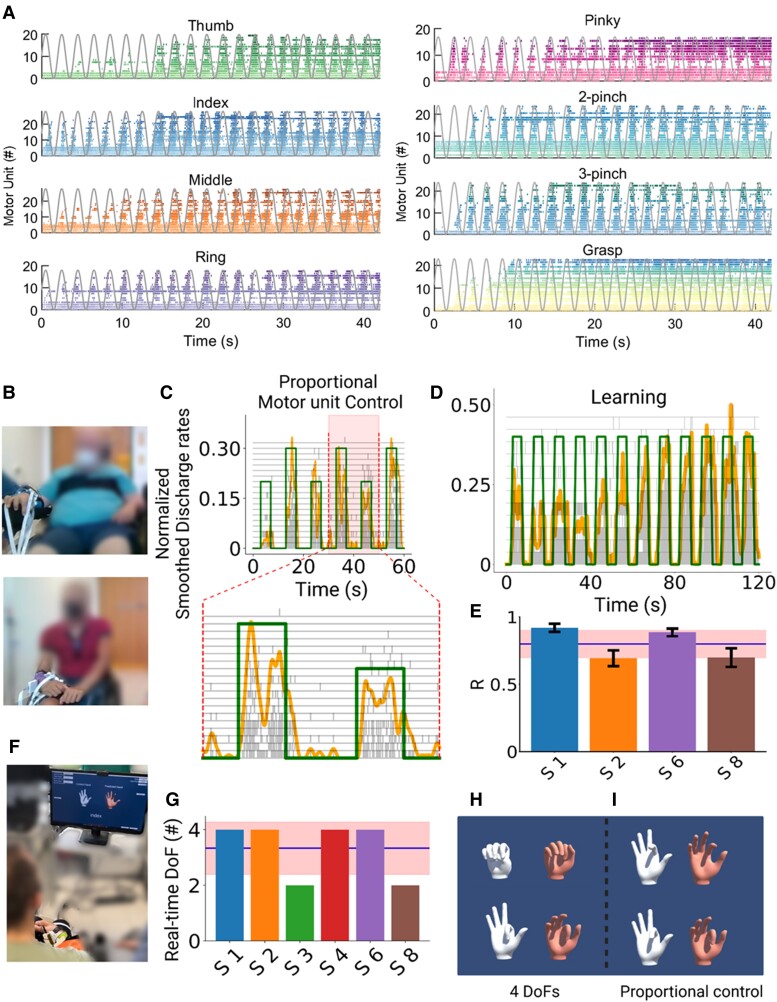

Figure 5.

Real-time control of motor units and virtual hand. (A) Raster plot of all motor units identified for Subject S6 during each task (colour-coded), with the virtual hand movement trajectories (grey line). Note the task-modulated motor unit firing patterns that encoded flexion and extension movements. (B) Real-time tasks for two participants (Subjects S1 and S6). (C) The participants were asked to follow a trajectory on a screen (green line) by attempting a grasp movement. The motor units were decomposed online, and their cumulative smoothed discharge rate (yellow line) was used as biofeedback. After a few seconds of training (D), the subjects could track the trajectories with high accuracy and at different target levels (C). (E) Cross-correlation coefficient (R) between the smoothed discharge rate and the requested trajectories for four subjects. (F) After the online motor unit decomposition, we used a supervised machine learning method to proportionally control the movement of a virtual hand. Four of the six subjects could proportionally open and close the hand (G–I) and proportionally control flexion and extension of the index finger (H and I). These subjects were thus able to control four degrees of freedom (DoFs), corresponding to hand opening, hand closing, index flexion and index extension.
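Panels (C) and (E) rely on two quantities: the cumulative smoothed discharge rate of the decoded motor units and its correlation R with the requested trajectory. The sketch below shows one way to compute both. It rests on assumptions the caption does not state: discharges are 0/1 samples per motor unit, smoothing uses a 400 ms Hann window, and R is taken as the zero-lag Pearson coefficient. The function names and the deterministic `integrate_and_fire` spike generator are hypothetical and only produce toy data. They are not the authors' pipeline.

```python
import numpy as np


def smoothed_cumulative_rate(spike_trains, fs, win_s=0.4):
    """Sum binary discharge trains over motor units and smooth with a Hann window.

    spike_trains: array (n_units, n_samples) of 0/1 discharges sampled at fs Hz.
    Returns the cumulative discharge rate in pulses per second.
    The 400 ms Hann window is an assumption, not taken from the figure.
    """
    cumulative = np.asarray(spike_trains, dtype=float).sum(axis=0)
    n = max(int(round(win_s * fs)), 3)  # at least 3 taps so the window sum is nonzero
    window = np.hanning(n)
    window /= window.sum()  # unit-area kernel: output is discharges per sample
    return np.convolve(cumulative, window, mode="same") * fs  # convert to per second


def correlation_coefficient(x, y):
    """Zero-lag Pearson correlation between two equally long signals (assumed form of R)."""
    return float(np.corrcoef(np.asarray(x, float), np.asarray(y, float))[0, 1])


def integrate_and_fire(rate_hz, fs, phase0=0.0):
    """Hypothetical toy generator: emit a discharge whenever the integrated rate crosses an integer."""
    phase = phase0 + np.cumsum(np.asarray(rate_hz, float) / fs)
    return np.diff(np.floor(phase), prepend=np.floor(phase0))


if __name__ == "__main__":
    fs = 1000
    t = np.arange(0, 10, 1 / fs)
    target = 0.5 * (1 - np.cos(2 * np.pi * t / 5))  # hypothetical 0..1 trajectory
    # Two toy motor units whose rates follow the target (8 to 28 pulses per second).
    units = np.vstack([integrate_and_fire(8 + 20 * target, fs, phase0=p) for p in (0.0, 0.5)])
    rate = smoothed_cumulative_rate(units, fs)
    print(f"R = {correlation_coefficient(rate, target):.3f}")
```

Normalizing the window to unit area keeps the smoothed signal in physical units (pulses per second). A real-time system would use a causal window instead of the centered `mode="same"` convolution shown here.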