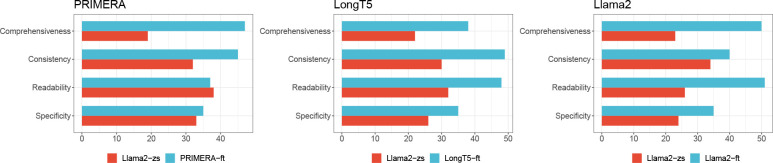

Figure 5: Number of summaries for which zero-shot LLama-2 generated the better summary (left, red) versus those for which the fine-tuned models generated the better summary (right, blue). Compared with zero-shot LLama-2, the fine-tuned models generally produced more comprehensive, readable, consistent, and specific summaries. Despite their much smaller architectures, PRIMERA and LongT5 significantly outperformed zero-shot LLama-2 after fine-tuning. Fine-tuning also improved LLama-2 in all aspects.