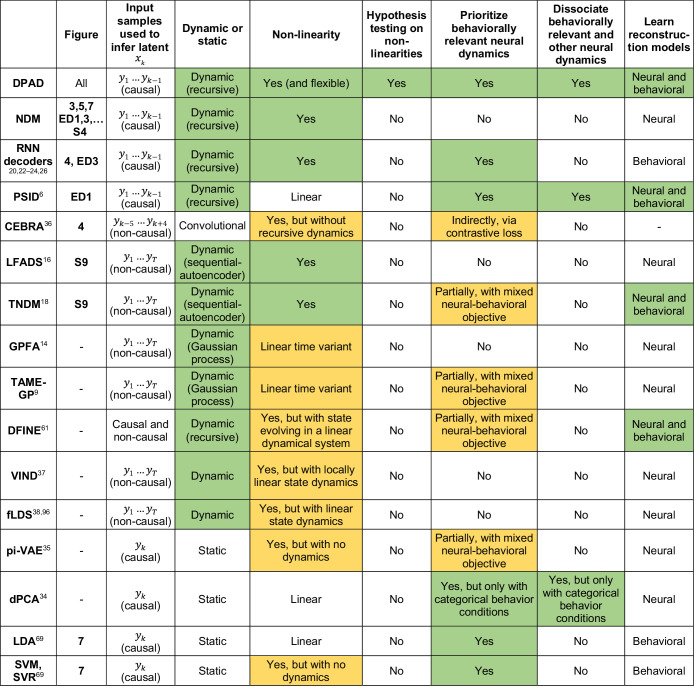

Extended Data Table 1.

Architectural differences between DPAD and various other methods

An extended description for some columns is provided in Supplementary Note 4. Here we provide a summary.

Figure: Numbers of the figures that show results from the named method. ED: Extended Data Figure. S: Supplementary Figure.

Input samples used to infer latent $x_k$: The subset of the input neural time series $\{y_1, y_2, \ldots\}$ that is used to estimate the latent variable $x_k$ associated with time sample $k$.

Dynamic or static: Dynamic models have an explicit description of the temporal structure in the data, which allows them to aggregate information over time. In contrast, static models consider each data sample on its own and thus extract the same encoding regardless of the temporal order or structure of the input sequence. Convolutional models (for example, CEBRA) consider each small data window on its own and cannot aggregate information beyond that window; in this sense they are similar to static models.

Nonlinearity: Nonlinear models can learn nonlinear mappings within some model elements, but unlike DPAD, they have not been flexible in terms of which model elements are made nonlinear and with what structure (note that in this work we also implement NDM with flexible nonlinearity).

Hypothesis testing on nonlinearities: DPAD is the only method that provides fine-grained control over the nonlinearity versus linearity of each model element and thus enables localization of nonlinearities and hypothesis testing regarding them (Fig. 6).

Prioritize behaviorally relevant neural dynamics: Methods that can incorporate the reconstruction of behavior from neural data as part of their learning objective, ideally with priority.

Dissociate behaviorally relevant and other neural dynamics: DPAD is the only dynamical nonlinear method that learns both behaviorally relevant neural dynamics and other neural dynamics, and dissociates the two into separate latent states.

Learned reconstruction models: The reconstruction models that are natively learned by the method when extracting latents, in order to reconstruct neural or behavioral data from these learned latents.
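
The distinction drawn in the "Dynamic or static" and "Input samples used to infer latent" columns can be illustrated with a minimal Python sketch. This is not an implementation of DPAD, CEBRA, or any other method in the table. The function names, the `tanh` encoder, the window average, and the linear recursion are all assumptions chosen only to show which input samples can influence a given latent in each model class.

```python
import numpy as np

def static_latents(Y, W):
    """Static encoder (illustrative): each latent depends only on its own sample."""
    return np.tanh(Y @ W.T)

def windowed_latents(Y, W, width):
    """Window-based encoder (illustrative): each latent depends only on the
    last `width` samples, so nothing older than the window can affect it."""
    T, ny = Y.shape
    padded = np.vstack([np.zeros((width - 1, ny)), Y])  # zero-pad the start
    X = np.empty((T, W.shape[0]))
    for k in range(T):
        window_mean = padded[k:k + width].mean(axis=0)  # samples k-width+1 .. k
        X[k] = np.tanh(W @ window_mean)
    return X

def recursive_latents(Y, A, K):
    """Dynamic model (illustrative linear recursion): the state carries
    information forward, so each latent depends on all samples so far."""
    T = Y.shape[0]
    X = np.zeros((T, A.shape[0]))
    x = np.zeros(A.shape[0])
    for k in range(T):
        x = A @ x + K @ Y[k]  # hypothetical update, not DPAD's equations
        X[k] = x
    return X

# Hypothetical toy data: 20 time samples of a 4-dimensional signal.
rng = np.random.default_rng(0)
T, ny, nx = 20, 4, 2
Y = rng.standard_normal((T, ny))
W = rng.standard_normal((nx, ny))
A = 0.9 * np.eye(nx)          # stable state transition
K = 0.5 * np.ones((nx, ny))   # input gain

# Perturb only the first sample and check whether the last latent changes.
Y_perturbed = Y.copy()
Y_perturbed[0] += 1.0

static_changed = not np.allclose(
    static_latents(Y, W)[-1], static_latents(Y_perturbed, W)[-1])
windowed_changed = not np.allclose(
    windowed_latents(Y, W, 3)[-1], windowed_latents(Y_perturbed, W, 3)[-1])
recursive_changed = not np.allclose(
    recursive_latents(Y, A, K)[-1], recursive_latents(Y_perturbed, A, K)[-1])

print(static_changed, windowed_changed, recursive_changed)
```

In this toy setting, perturbing the first sample leaves the final latent of the static and windowed encoders unchanged, while the final latent of the recursive model changes. That is the sense in which only the dynamic model aggregates information beyond a fixed window.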