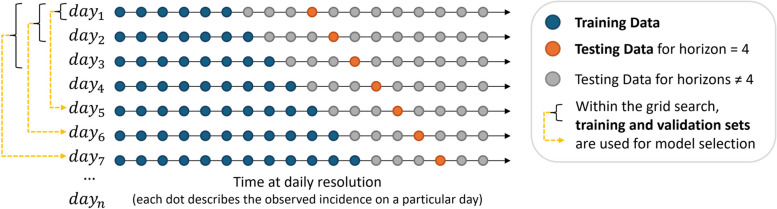

Fig. 1.

One-step walk-forward validation strategy. The scheme depicts one-step walk-forward validation, with one day as one step. For a given day in the data, for example day 7, the data is split into training and testing sets so that the training set ends with this day as the last observed value (blue dots). These training samples are used to train a model that computes a forecast. The testing set (grey and orange dots) is used to evaluate the forecast. In this example, the orange dots mark the data points used to evaluate the forecast for day 4 of the forecasting horizon; the same strategy is applied to every other day of the horizon. This training/testing split applies when the default parameter settings are used without hyperparameter tuning. For model selection, when the hyperparameters are tuned, training/testing splits created at earlier steps of the walk-forward validation are reused as training/validation splits, as indicated by the yellow dashed arrows. On day 7, for example, three training/validation splits can be used (those of day 1, day 2, and day 3). Each parameter setting in the grid search is validated on these training/validation splits. Because multiple training/validation splits are usually available, the error is averaged across them, and the parameter setting with the lowest average error is selected (model selection). This best parameter setting is then used to train a model on the training data available for day 7, and the forecast of this model is evaluated using the testing set. Model selection is skipped for the first steps of the walk-forward validation (days 1 to 4 in the figure), for which no training/validation splits are available; in this case, the default model provides the forecast. Creating training/testing and training/validation splits in this way within the walk-forward validation makes the most of the available data.
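
The reuse of earlier splits for model selection can be illustrated with a minimal Python sketch. It is not the authors' implementation. The function names, the `error_fn` callback, and the forecasting horizon of 4 days are assumptions made for illustration; the horizon is inferred from the caption's example, in which the splits of days 1 to 3 are usable on day 7. The sketch treats an earlier split as usable once its whole testing window has been observed.

```python
def validation_origins(day, horizon):
    """Earlier split days whose testing window (d+1 .. d+horizon) is
    fully observed by `day`. Hypothetical helper, not from the paper."""
    return [d for d in range(1, day + 1) if d + horizon <= day]


def select_setting(day, horizon, settings, error_fn):
    """Pick the setting with the lowest mean error over the reusable splits.

    `error_fn(setting, origin)` is a hypothetical callback: it is assumed to
    train on days 1..origin with `setting` and return the forecast error on
    days origin+1..origin+horizon. Returns None when no split is available,
    which signals that the default model should be used.
    """
    origins = validation_origins(day, horizon)
    if not origins:
        return None  # first steps: skip model selection

    def mean_error(setting):
        return sum(error_fn(setting, d) for d in origins) / len(origins)

    return min(settings, key=mean_error)


if __name__ == "__main__":
    HORIZON = 4  # assumed horizon, consistent with the caption's example
    print(validation_origins(7, HORIZON))  # [1, 2, 3]

    # Toy error surface standing in for a real train-and-evaluate routine.
    toy_error = lambda s, d: abs(s - 0.5) + 0.01 * d
    print(select_setting(7, HORIZON, [0.1, 0.5, 0.9], toy_error))  # 0.5
    print(select_setting(3, HORIZON, [0.1, 0.5, 0.9], toy_error))  # None
```

With these assumptions, days 1 to 4 have no usable splits and fall back to the default model, and day 7 averages the error over the splits of days 1, 2, and 3, as described in the caption.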