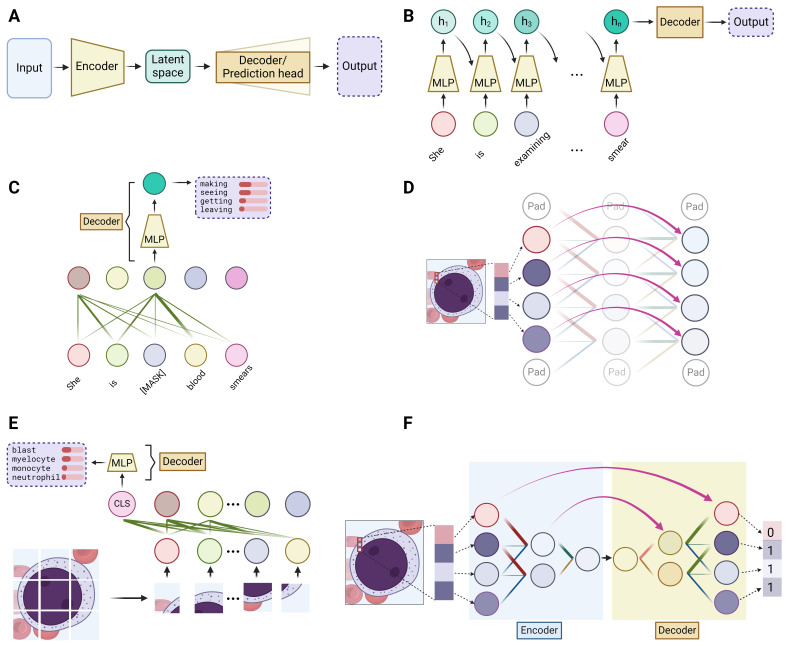

Figure 3. Deep learning models.

(A) At a high level, deep learning models consist of an encoder, which transforms or condenses the input data into a more informative intermediate representation (the latent space), and a decoder or prediction head, which generates the desired output from this latent representation. (B) Recurrent neural networks (RNNs) process data sequentially, updating a hidden state (h) at each step by combining information from the current input and the previous hidden state. For example, when processing the sentence “she is examining a blood smear,” the first hidden state (h1) is computed from the word “she.” The second hidden state (h2) is then computed from the second word, “is,” and h1, so it captures information from both the current and the previous word. This process continues for each word in the sequence, so the final hidden state (hn) incorporates information from all the preceding words. (C) Bidirectional Encoder Representations from Transformers (BERT) uses the encoder component of the Transformer model, which consists of stacked self-attention layers and multi-layer perceptrons (MLPs). During pretraining, one objective (masked language modeling) is to predict randomly masked words in a sentence. Because self-attention lets each position attend to the words on both its left and its right, BERT learns the contextual relationships between words and can infer the meaning of a masked word from its surrounding context. (D) Residual connections in convolutional neural networks (CNNs) let information flow directly past one or more layers by adding a block's input to its output, which makes it practical to train much deeper networks. (E) The Vision Transformer (ViT) adapts the Transformer architecture to image recognition tasks. In ViT, an input image is divided into small, fixed-size patches; each patch is embedded as a token, and self-attention is performed across all patch tokens. A special classification token, denoted as “CLS” in the figure, is prepended to the sequence of patch embeddings and participates in the self-attention process, allowing it to gather information from all patches. The output representation corresponding to the “CLS” token is then used for image classification or other downstream tasks. (F) The U-Net is a specialized CNN architecture that has become popular for medical image segmentation because it performs pixel-level classification. The U-Net consists of an encoder path, which uses convolutional and pooling layers to encode the input image into a compact latent representation, followed by a symmetric decoder path that uses transposed convolutions to gradually restore the latent features to the original image resolution. Skip connections between corresponding encoder and decoder layers concatenate encoder feature maps with the matching decoder feature maps, directly transferring localized spatial information. In the example, the U-Net segments the blast cell from the background by assigning the value 1 to pixels within the blast and 0 to background pixels.
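The hidden-state update in panel (B) can be sketched as a simple Elman-style recurrence, h_t = tanh(W_x x_t + W_h h_{t-1} + b). This is a minimal illustration under stated assumptions: the 2-d word vectors and the weights `W_x`, `W_h`, `b` are made-up toy values, not a trained model, and `rnn_step`/`rnn_encode` are hypothetical helper names. The point is only that each step mixes the current word with the previous state, so the final state depends on every word.

```python
import math


def rnn_step(x, h_prev, W_x, W_h, b):
    """One Elman-style RNN step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(W_x[i], x))
            + sum(u * hj for u, hj in zip(W_h[i], h_prev))
            + b[i]
        )
        for i in range(len(b))
    ]


def rnn_encode(inputs, W_x, W_h, b):
    """Run the recurrence over a sequence and return the hidden states h_1 ... h_n."""
    h = [0.0] * len(b)  # h_0: an all-zero initial state
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)  # combine current input with previous state
        states.append(h)
    return states


# Hypothetical 2-d word vectors and weights (toy values, assumptions only).
sentence = ["she", "is", "examining", "a", "blood", "smear"]
toy_embed = {w: [0.1 * (i + 1), -0.05 * i] for i, w in enumerate(sentence)}
W_x = [[0.5, -0.3], [0.2, 0.4]]  # input-to-hidden weights
W_h = [[0.1, 0.0], [0.0, 0.1]]  # hidden-to-hidden weights
b = [0.0, 0.0]

states = rnn_encode([toy_embed[w] for w in sentence], W_x, W_h, b)
h_n = states[-1]  # depends on every word in the sentence
```

Because the hidden-to-hidden weights are small here, the influence of early words fades quickly, which is a toy view of why plain RNNs struggle with long sequences.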
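The bidirectional context that panel (C) attributes to BERT comes from self-attention without a causal mask. The sketch below is a deliberately reduced, single-head version under stated assumptions: toy 2-d embeddings (including a hypothetical `[MASK]` vector), identity projection matrices, and no position embeddings, MLPs, or multiple layers, so it is not BERT itself. It shows that the output at the masked position is a weighted mix of every token, including words to its right.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention with no causal mask,
    so every position attends to tokens on both its left and its right."""
    Q = [matvec(W_q, x) for x in X]
    K = [matvec(W_k, x) for x in X]
    V = [matvec(W_v, x) for x in X]
    scale = math.sqrt(len(K[0]))
    outputs, weights = [], []
    for q in Q:
        attn = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in K])
        weights.append(attn)
        outputs.append([sum(a * v[j] for a, v in zip(attn, V)) for j in range(len(V[0]))])
    return outputs, weights


# Toy 2-d embeddings (assumptions); "[MASK]" replaces the word "examining".
emb = {"she": [1.0, 0.0], "is": [0.0, 1.0], "[MASK]": [0.1, 0.1],
       "a": [0.5, 0.5], "blood": [1.0, 1.0], "smear": [0.2, 0.9]}
I2 = [[1.0, 0.0], [0.0, 1.0]]  # identity projections keep the toy readable
tokens = ["she", "is", "[MASK]", "a", "blood", "smear"]
outputs, weights = self_attention([emb[t] for t in tokens], I2, I2, I2)
mask_vec = outputs[tokens.index("[MASK]")]  # context vector used to predict the hidden word
```

In a trained model, a prediction head would map `mask_vec` to a distribution over the vocabulary; changing a word after the mask (for example "smear") changes `mask_vec`, which a left-to-right RNN state at that position could not reflect.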
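The residual connection in panel (D) can be sketched as a ResNet-style basic block, y = relu(F(x) + x). To keep it dependency-free, this sketch makes simplifying assumptions: a single-channel 1-D convolution stands in for the 2-D convolutions of a real CNN, batch normalization is omitted, and the kernels are hand-set rather than learned.

```python
def conv1d_same(x, kernel):
    """1-D convolution (cross-correlation, as CNN libraries compute it) with
    zero padding so the output has the same length as the input (odd-length kernel)."""
    r = len(kernel) // 2
    padded = [0.0] * r + list(x) + [0.0] * r
    return [sum(k * padded[i + j] for j, k in enumerate(kernel)) for i in range(len(x))]


def relu(v):
    return [max(0.0, t) for t in v]


def residual_block(x, k1, k2):
    """ResNet-style basic block: y = relu(F(x) + x), where F is conv -> relu -> conv.
    The identity shortcut carries x past both convolutions unchanged."""
    f = conv1d_same(relu(conv1d_same(x, k1)), k2)
    return relu([fi + xi for fi, xi in zip(f, x)])


signal = [1.0, 2.0, 3.0]
identity = [0.0, 1.0, 0.0]
zeros = [0.0, 0.0, 0.0]

y = residual_block(signal, identity, identity)  # F(x) = x here, so y = 2x

# With F switched off (all-zero kernels), each block reduces to the identity
# for non-negative inputs, so stacking many blocks does not degrade the signal.
deep = signal
for _ in range(50):
    deep = residual_block(deep, zeros, zeros)
```

The 50-block loop illustrates the usual argument for residual connections: a block only has to learn a correction F(x) on top of the identity, so adding depth does not force the signal through many transformations it cannot undo.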
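The ViT input pipeline in panel (E) (split into patches, embed each patch, add a CLS token, let it attend to all patches) can be sketched as follows. Assumptions: a single-channel 4 × 4 toy image, 2 × 2 patches, a hypothetical hand-set projection `W_e`, a fixed vector standing in for the learned CLS embedding, no position embeddings, and a single attention step with identity projections instead of a full Transformer encoder.

```python
import math


def patchify(image, p):
    """Split an H x W single-channel image (list of rows) into non-overlapping
    p x p patches, each flattened row-major, in raster order."""
    H, W = len(image), len(image[0])
    assert H % p == 0 and W % p == 0, "image size must be divisible by the patch size"
    return [
        [image[top + i][left + j] for i in range(p) for j in range(p)]
        for top in range(0, H, p)
        for left in range(0, W, p)
    ]


def embed(patch, W_e):
    """Linear patch embedding (position embeddings omitted for brevity)."""
    return [sum(w * v for w, v in zip(row, patch)) for row in W_e]


def cls_readout(tokens):
    """One attention step with the CLS token (index 0) as the query and every
    token, CLS included, as keys and values; identity projections for brevity."""
    q = tokens[0]
    scale = math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) / scale for k in tokens]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [sum(e / total * t[j] for e, t in zip(exps, tokens)) for j in range(len(q))]


image = [[4 * r + c for c in range(4)] for r in range(4)]  # toy 4 x 4 "image"
patches = patchify(image, 2)                                # 4 patches of 4 pixels each
W_e = [[0.25, 0.25, 0.25, 0.25], [0.5, -0.5, 0.5, -0.5]]   # hypothetical 4 -> 2 projection
cls_token = [0.1, 0.1]                                      # stands in for a learned vector
tokens = [cls_token] + [embed(pt, W_e) for pt in patches]  # CLS prepended, as in ViT
cls_out = cls_readout(tokens)                               # would feed a classification head
```

Changing any pixel changes the corresponding patch token and therefore `cls_out`, which is the sense in which the CLS token "gathers information from all patches".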
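Why the encoder-to-decoder connections in panel (F) matter for pixel-level output can be shown with a one-level toy U-Net. In the U-Net, these connections concatenate encoder feature maps with decoder feature maps. This is a minimal sketch under heavy assumptions: one channel, one pooling level, nearest-neighbour upsampling in place of a learned transposed convolution, hand-set weights (`w_skip`, `w_up`, `bias` are hypothetical), and a 1 × 1 "convolution" plus sigmoid thresholded at 0.5 to produce the 0/1 blast mask.

```python
import math


def max_pool2(img):
    """2 x 2 max pooling with stride 2 (encoder downsampling)."""
    return [
        [max(img[2 * r][2 * c], img[2 * r][2 * c + 1], img[2 * r + 1][2 * c], img[2 * r + 1][2 * c + 1])
         for c in range(len(img[0]) // 2)]
        for r in range(len(img) // 2)
    ]


def upsample2(img):
    """Nearest-neighbour 2x upsampling, a simple stand-in for a transposed convolution."""
    return [[img[r // 2][c // 2] for c in range(2 * len(img[0]))] for r in range(2 * len(img))]


def toy_unet(image, w_skip, w_up, bias):
    """One-level toy U-Net on a single-channel image with hand-set weights.

    Encoder: max pooling -> coarse latent map. Decoder: upsample back to full size.
    Skip connection: concatenate the full-resolution encoder features with the
    upsampled decoder features at each pixel, then apply a 1 x 1 'convolution'
    and a sigmoid, and threshold the probability at 0.5 to get a 0/1 mask.
    """
    latent = max_pool2(image)
    up = upsample2(latent)
    mask = []
    for r in range(len(image)):
        row = []
        for c in range(len(image[0])):
            features = (image[r][c], up[r][c])  # channel-wise concatenation
            z = w_skip * features[0] + w_up * features[1] + bias
            prob = 1.0 / (1.0 + math.exp(-z))
            row.append(1 if prob >= 0.5 else 0)
        mask.append(row)
    return mask


# Toy 4 x 4 field of view: 1 marks bright "blast" pixels, 0 marks background.
cell = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0]]

with_skip = toy_unet(cell, w_skip=10.0, w_up=1.0, bias=-5.0)
without_skip = toy_unet(cell, w_skip=0.0, w_up=1.0, bias=-5.0)
```

Here pooling spreads the blast into every coarse cell, so the decoder path alone cannot locate the boundary (`without_skip` marks nothing), while the skip connection restores the pixel-level detail and `with_skip` recovers the blast mask exactly.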