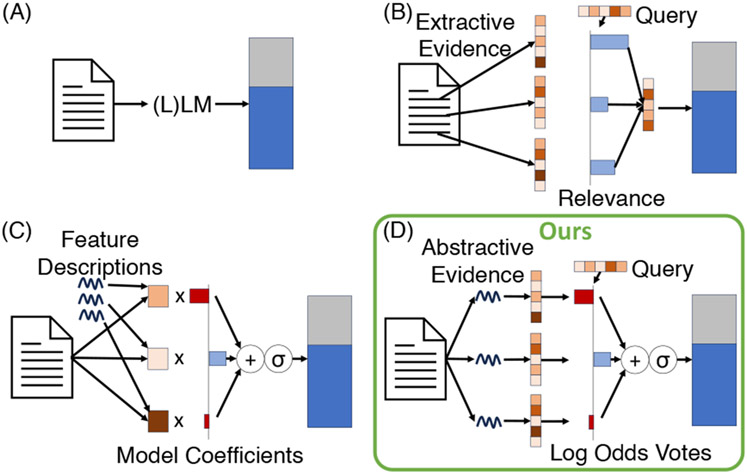

Figure 1: Inherently “interpretable” approaches to prediction.

Typically, “interpretable” models trade off the expressiveness of their intermediate representations against how faithfully the resulting explanations reflect the model’s true mechanisms. Our approach (D) uses highly expressive intermediate representations, in the form of abstractive natural language evidence, while keeping the aggregation of this evidence fully transparent. See Table 1 for more details.