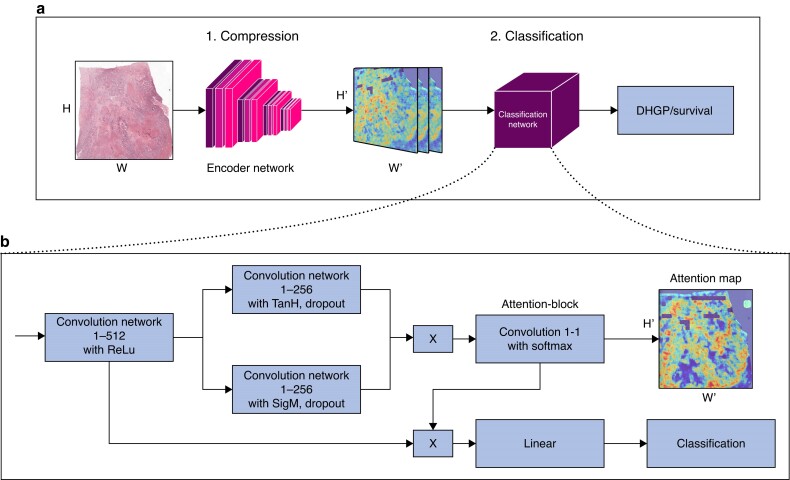

Fig. 1.

(A) Neural image compression pipeline with a supervised multitask learning encoder framework and a convolutional neural network classifier. (B) Neural image compression with attention pipeline. First the slide is compressed, then it is classified. The classification architecture consists of four 1 × 1 convolutional layers followed by a final linear layer; the first 1 × 1 convolution reduces the input channels from 2048 to 512 (conv1-512). H and W denote the height and width of the image, respectively. H' and W' denote the height and width of the compressed image, respectively, with H' ≪ H and W' ≪ W.
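The following NumPy sketch shows the compress-then-classify flow and the tensor shapes involved. It is illustrative and is not the authors' implementation. Only a few details come from the caption: the 2048-channel compressed image, the four 1 × 1 convolutional layers, the first layer reducing 2048 to 512 channels, and the final linear layer. Everything else is a hypothetical placeholder. That includes the patch size, the random-projection stand-in for the trained encoder, the channel widths after 512, the ReLU activations, the global average pooling before the linear layer, and the number of classes. A 1 × 1 convolution is written as a per-pixel matrix multiply over channels, which is what such a layer computes.

```python
import numpy as np

def compress(slide, encoder, patch=4):
    """Tile an (H, W, 3) image into non-overlapping patch x patch tiles and encode each
    tile to a vector, giving a (C, H', W') image with H' = H // patch, W' = W // patch.
    Rows/columns that do not fill a whole tile are dropped."""
    H, W, C = slide.shape
    hp, wp = H // patch, W // patch
    tiles = (slide[:hp * patch, :wp * patch]
             .reshape(hp, patch, wp, patch, C)
             .transpose(0, 2, 1, 3, 4)
             .reshape(hp, wp, patch * patch * C))
    codes = encoder(tiles)            # (H', W', 2048)
    return codes.transpose(2, 0, 1)   # channels first: (2048, H', W')

def conv1x1(x, weight, bias):
    """1 x 1 convolution = linear map over channels at every pixel.
    x: (C_in, H', W'), weight: (C_out, C_in), bias: (C_out,) -> (C_out, H', W')."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

def init_classifier(channels=(2048, 512, 128, 128, 128), n_classes=2, seed=0):
    """Four 1 x 1 conv layers (2048 -> 512 first, per the caption) plus a linear layer.
    Widths after 512 and n_classes are hypothetical placeholders."""
    rng = np.random.default_rng(seed)
    convs = [(rng.standard_normal((c_out, c_in)) / np.sqrt(c_in), np.zeros(c_out))
             for c_in, c_out in zip(channels[:-1], channels[1:])]
    fc_w = rng.standard_normal((n_classes, channels[-1])) / np.sqrt(channels[-1])
    return convs, (fc_w, np.zeros(n_classes))

def classify(compressed, params):
    convs, (fc_w, fc_b) = params
    x = compressed
    for w, b in convs:                # the four 1 x 1 convolutional layers
        x = relu(conv1x1(x, w, b))    # ReLU is an assumption
    pooled = x.mean(axis=(1, 2))      # global average pooling: an assumption
    return fc_w @ pooled + fc_b       # final linear layer -> class logits

# Example usage with a random stand-in encoder (hypothetical, not a trained model)
rng = np.random.default_rng(0)
proj = rng.standard_normal((4 * 4 * 3, 2048)) / np.sqrt(48)
slide = rng.random((32, 24, 3))                            # H = 32, W = 24
compressed = compress(slide, lambda t: t @ proj, patch=4)  # (2048, 8, 6)
logits = classify(compressed, init_classifier())           # (2,)
```

Because every layer before the linear one is 1 × 1, the classifier never mixes information across spatial positions until the pooling step. The spatial context therefore has to come from the compression encoder's per-tile embeddings.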