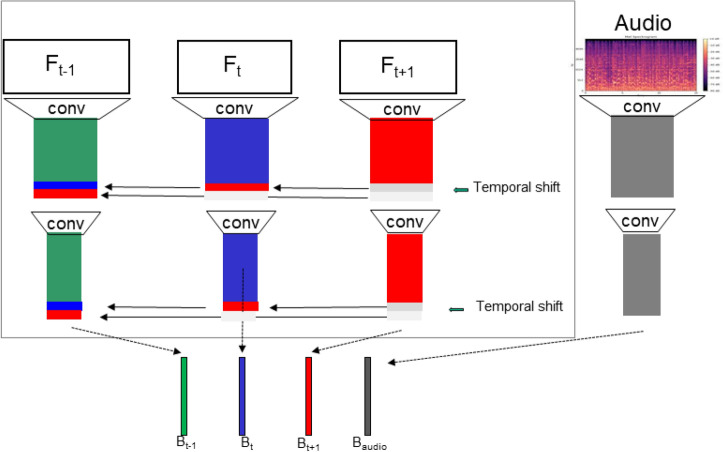

Fig. 3.

TSAM model: the multi-modal CNN architecture takes as input a predefined number of video frames (video segments) and audio converted into mel-spectrograms (audio segments). A ResNet50 backbone extracts features from both the video and the audio segments. Features from the video segments are shifted between segments at different blocks of ResNet50, whereas the mel-spectrogram audio input is processed by the same backbone without shifting. The extracted features are fused by averaging and mapped to the output classes by a fully connected layer.
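The shift-and-fuse steps described in the caption can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names, the `fold_div` parameter (the fraction of channels that is shifted), the zero padding at the first and last segment, and the unweighted mean over video and audio features are all assumptions made for illustration, and the ResNet50 backbone itself is omitted.

```python
def temporal_shift(segments, fold_div=8):
    """Shift a fraction of channels between neighbouring video segments.

    segments: list of T feature vectors, each a list of C floats.
    fold_div: hypothetical parameter; C // fold_div channels are shifted
              in each temporal direction, the rest stay in place.
    """
    num_segments = len(segments)
    num_channels = len(segments[0])
    fold = num_channels // fold_div
    shifted = [[0.0] * num_channels for _ in range(num_segments)]
    for t in range(num_segments):
        for c in range(num_channels):
            if c < fold:
                src = t + 1      # first group: taken from the next segment
            elif c < 2 * fold:
                src = t - 1      # second group: taken from the previous segment
            else:
                src = t          # remaining channels: unchanged
            if 0 <= src < num_segments:
                shifted[t][c] = segments[src][c]
            # otherwise the value stays 0.0 (assumed zero padding)
    return shifted


def fuse_by_averaging(video_features, audio_feature):
    """Average the per-segment video features together with the audio feature."""
    all_features = video_features + [audio_feature]
    count = len(all_features)
    return [sum(column) / count for column in zip(*all_features)]


def fully_connected(features, weights, bias):
    """Map the fused feature vector to class scores (one weight row per class)."""
    return [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]
```

With three segments of four channels and `fold_div=4`, `temporal_shift` moves one channel from the next segment and one from the previous segment into each position, leaving the other two untouched. Only the video features pass through `temporal_shift`; in this sketch the audio feature goes straight to `fuse_by_averaging`, mirroring the caption's statement that audio is processed without shifting.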