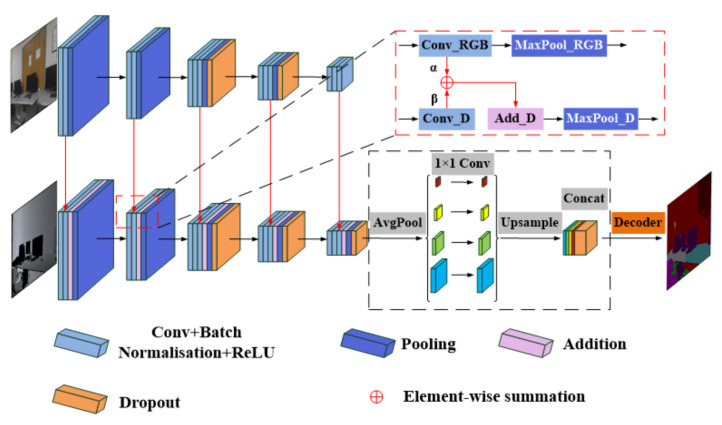

Figure 3.

An illustration of the proposed DDNet. Two CNN branches extract RGB and depth features, respectively. The depth branch serves as the main encoder and the RGB branch as an auxiliary encoder, because the depth image carries more direct geometric and appearance information, which benefits segmentation. The fusion details in the red box show that the RGB feature map is merged with the depth feature map through element-wise summation weighted by two fusion weights. In addition, the pyramid pooling module is applied before the symmetric decoder to strengthen contextual relationships.
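
The weighted element-wise summation in the fusion step can be sketched as follows. This is only a minimal illustration, not the DDNet implementation: the function name `fuse_features`, the parameter names `w_depth` and `w_rgb`, and the use of two scalar weights on nested lists indexed `[channel][row][col]` are all assumptions made for this example. The caption does not say whether the fusion weights are scalars or whether they are learned, and in practice a tensor library would replace the explicit loops.

```python
def fuse_features(depth_feat, rgb_feat, w_depth, w_rgb):
    """Weighted element-wise sum of two same-shaped feature maps.

    Feature maps are nested lists indexed [channel][row][col].
    w_depth and w_rgb are hypothetical scalar fusion weights.
    """
    if len(depth_feat) != len(rgb_feat):
        raise ValueError("feature maps must have the same number of channels")
    fused = []
    for d_ch, r_ch in zip(depth_feat, rgb_feat):
        if len(d_ch) != len(r_ch):
            raise ValueError("feature maps must have the same height")
        fused_ch = []
        for d_row, r_row in zip(d_ch, r_ch):
            if len(d_row) != len(r_row):
                raise ValueError("feature maps must have the same width")
            # Each output element is w_depth * depth + w_rgb * rgb.
            fused_ch.append([w_depth * d + w_rgb * r
                             for d, r in zip(d_row, r_row)])
        fused.append(fused_ch)
    return fused


# Toy example: one channel, 2x2 spatial size.
depth = [[[1.0, 2.0], [3.0, 4.0]]]
rgb = [[[10.0, 20.0], [30.0, 40.0]]]
fused = fuse_features(depth, rgb, w_depth=1.0, w_rgb=0.5)
print(fused)
```

Giving the depth map the larger weight in the toy call mirrors the caption's description of the depth branch as the main encoder; the specific values 1.0 and 0.5 are arbitrary.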