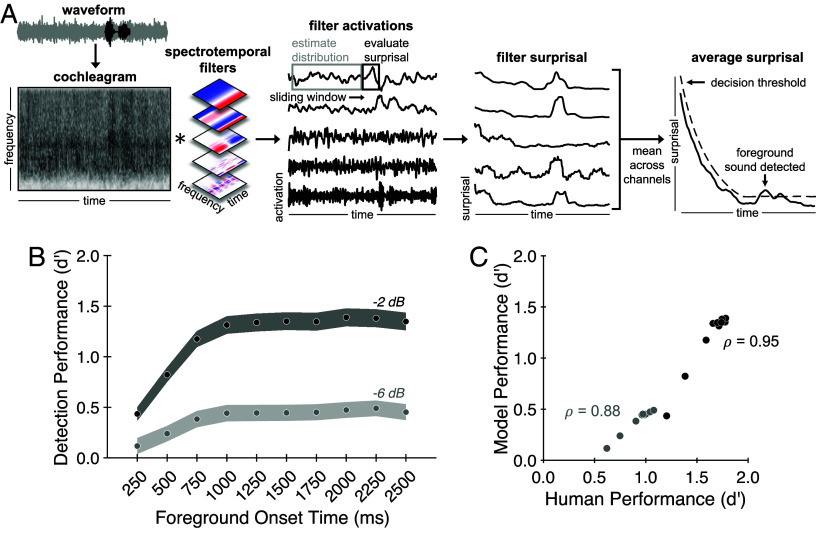

Fig. 3.

An observer model based on background noise estimation replicates human results. (A) Model schematic. First, an input sound waveform is passed through a standard model of auditory processing. This model consists of two stages: a peripheral stage modeled after the cochlea (yielding a cochleagram, first panel), followed by a set of spectrotemporal filters inspired by the auditory cortex that operate on the cochleagram, yielding time-varying activations of different spectrotemporal features (second panel). A sliding window is used to evaluate the negative log-likelihood (surprisal) within each filter channel over time (third panel). Finally, the resulting filter surprisal curves are averaged across channels and compared to a time-varying decision threshold to decide whether a foreground sound is present (fourth panel; the y-axis is scaled differently in the third and fourth panels to accommodate the surprisal plots for multiple individual filters). (B) Model results. Model foreground detection performance (quantified as d′) is plotted as a function of SNR and foreground onset time. Shaded regions show the SD of performance obtained by bootstrapping over stimuli. (C) Human-model comparison. Model performance is highly correlated with human performance on the foreground detection task (Experiment 1) for both the −2 dB (black circles) and −6 dB (gray circles) SNR conditions.
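The last two stages in panel A (sliding-window surprisal per filter channel, then averaging across channels and thresholding) can be illustrated with a minimal sketch. It is not the authors' implementation. The caption does not specify the form of the background distribution, so the sketch assumes a Gaussian fitted to the preceding window of each channel's activations. The function names, the `window` and `var_floor` parameters, and the toy activations are all hypothetical, and the cochleagram and spectrotemporal filtering stages are omitted.

```python
import math
from statistics import fmean, pvariance


def gaussian_surprisal(x, mean, var):
    """Negative log-likelihood of x under a Gaussian N(mean, var)."""
    return 0.5 * math.log(2 * math.pi * var) + (x - mean) ** 2 / (2 * var)


def channel_surprisal(activations, window, var_floor=1e-6):
    """Surprisal of each sample given the preceding `window` samples.

    Assumption: the background in one filter channel is summarized by the
    mean and variance of the preceding window (Gaussian model).
    """
    out = []
    for t in range(window, len(activations)):
        past = activations[t - window:t]
        mean = fmean(past)
        # Floor the variance so a constant window does not divide by zero.
        var = max(pvariance(past, mean), var_floor)
        out.append(gaussian_surprisal(activations[t], mean, var))
    return out


def detect_foreground(channels, window, thresholds):
    """Average surprisal across channels, then compare to a threshold
    that may differ at each time step."""
    per_channel = [channel_surprisal(ch, window) for ch in channels]
    mean_surprisal = [fmean(vals) for vals in zip(*per_channel)]
    decisions = [s > th for s, th in zip(mean_surprisal, thresholds)]
    return mean_surprisal, decisions


# Toy activations for two hypothetical filter channels: a stationary
# alternating background followed by one large deviation at the end.
channels = [
    [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 10.0],
    [1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 10.0],
]
mean_surprisal, decisions = detect_foreground(
    channels, window=4, thresholds=[5.0, 5.0, 5.0]
)
print(decisions)  # [False, False, True]
```

In this toy case, samples consistent with the estimated background yield low surprisal, while the final sample deviates strongly from it and crosses the threshold. The threshold is passed as a list so that it can vary over time, as in the caption. How the model sets that threshold is not stated here, so the constant value of 5.0 is purely illustrative.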