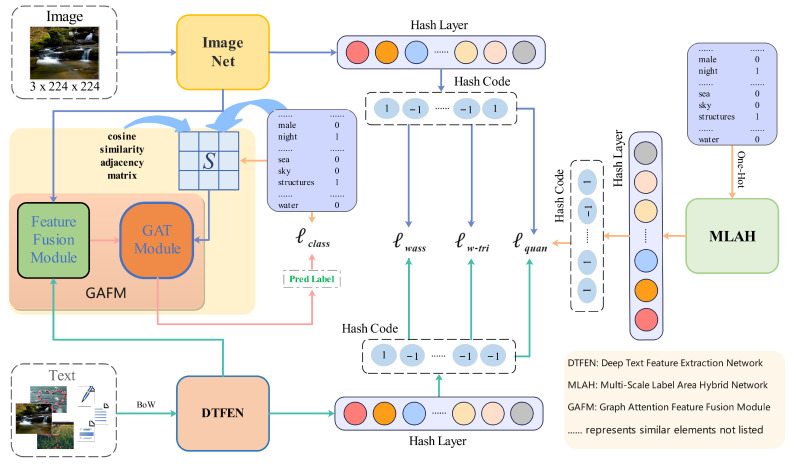

Figure 1.

The overall framework of TEGAH consists of five parts:

1. Image-Net: uses the Swin Transformer-Small (SwinT-S) model to extract semantic features from images and map them into the feature space.
2. Graph Attention Feature Fusion Module (GAFM): a feature fusion and alignment network that weights and merges image and text features to reduce the semantic discrepancy between modalities.
3. Multiscale Label Area Hybrid Network (MLAH): combines multiscale features from four layers with multiscale attention to compensate for insufficient textual information.
4. Deep Text Feature Extraction Network (DTFEN): improves on traditional text feature extraction methods to capture higher-quality textual features.
5. Hash Learning Module: converts the features into hash codes through nonlinear transformations. Training is guided by a combination of cosine-weighted triplet loss, label distillation loss, Wasserstein loss, and quantization loss.

Each component is designed to improve the extraction, fusion, or representation of multimodal features, with the goal of raising both the accuracy and the efficiency of cross-modal hash retrieval.
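The hash learning step can be illustrated with a minimal sketch. It assumes a single linear projection followed by `tanh` as the nonlinear transformation, `sign` binarization, and the common squared-error form of the quantization loss; the weights, feature dimensions, and function names are hypothetical. The cosine-weighted triplet, label distillation, and Wasserstein losses are not modeled, because their exact forms are not given here.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Compute W @ x for a weight matrix stored as a list of rows."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relaxed_hash(w: Matrix, x: Vector) -> Vector:
    """Continuous codes in [-1, 1]: linear projection followed by tanh.

    Assumption: one linear layer + tanh stands in for the module's
    (unspecified) nonlinear transformation.
    """
    return [math.tanh(v) for v in matvec(w, x)]


def binarize(h: Vector) -> List[int]:
    """Binary hash code in {-1, +1}; an exact zero is mapped to +1."""
    return [1 if v >= 0 else -1 for v in h]


def quantization_loss(h: Vector) -> float:
    """Mean squared gap between relaxed codes and their binarized version."""
    b = binarize(h)
    return sum((bi - hi) ** 2 for bi, hi in zip(b, h)) / len(h)


def hamming(b1: List[int], b2: List[int]) -> int:
    """Hamming distance between two {-1, +1} codes (number of differing bits)."""
    return sum(1 for x, y in zip(b1, b2) if x != y)


if __name__ == "__main__":
    random.seed(0)
    n_bits, dim = 16, 8
    # Hypothetical random weights stand in for a trained hash layer.
    w = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_bits)]
    image_feat = [random.gauss(0, 1) for _ in range(dim)]
    # Hypothetical "paired" text feature: the image feature plus small noise.
    text_feat = [f + random.gauss(0, 0.1) for f in image_feat]
    h_img, h_txt = relaxed_hash(w, image_feat), relaxed_hash(w, text_feat)
    b_img, b_txt = binarize(h_img), binarize(h_txt)
    print("quantization loss:", round(quantization_loss(h_img), 4))
    print("Hamming distance:", hamming(b_img, b_txt), "of", n_bits, "bits")
```

Minimizing the quantization loss pushes the relaxed `tanh` outputs toward ±1, so that little information is lost when they are binarized. Retrieval then ranks candidates by Hamming distance between binary codes, which is what makes hashing efficient.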