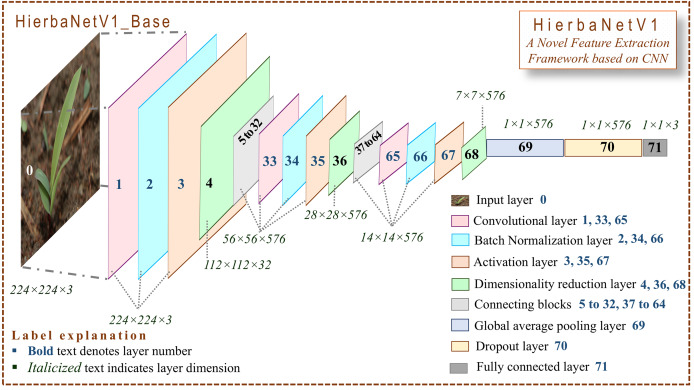

Figure 2. Architecture of HierbaNetV1.

First, our model resizes each input image to 224 × 224 × 3, then convolves, batch-normalizes, and activates it with LeakyReLU, followed by downsampling. Second, it runs a two-block feature extraction technique with two modules: Module I extracts high-level features using four diversified filters of multiple levels of complexity, and Module II extracts low-level features. The feature set F is then formed by integrating the high-level and low-level features, and the model is trained on F. Third, the generated features are flattened and 20% of the neurons are dropped, followed by a three-way softmax activation. Lastly, the trained model predicts the class of the input sample.
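The final classification stage described above (flatten, 20% dropout, three-way softmax) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the shape of the feature set F, the dense projection to three logits, and the names `classification_head`, `weights`, and `bias` are assumptions made only for illustration, since the text does not specify them.

```python
import numpy as np


def softmax(logits):
    # Subtract the row-wise maximum for numerical stability.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def dropout(x, rate, rng, training=True):
    # Inverted dropout: zero a fraction `rate` of units, rescale the rest.
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


def classification_head(features, weights, bias, rng, rate=0.2, training=True):
    # Flatten (batch, H, W, C) features to (batch, H*W*C).
    flat = features.reshape(features.shape[0], -1)
    # Drop 20% of the flattened units during training.
    dropped = dropout(flat, rate, rng, training)
    # Assumed dense projection to three class logits, then softmax.
    logits = dropped @ weights + bias
    return softmax(logits)


rng = np.random.default_rng(0)
# Hypothetical sizes: the text does not give the shape of F.
features = rng.standard_normal((4, 7, 7, 8))
weights = rng.standard_normal((7 * 7 * 8, 3)) * 0.01
bias = np.zeros(3)

probs = classification_head(features, weights, bias, rng, training=False)
predicted = probs.argmax(axis=1)  # predicted class index per sample
```

Dropout is disabled at inference time (`training=False`), so the prediction for a given input is deterministic. During training, the surviving units are rescaled by 1 / (1 - 0.2) so that the expected activation stays the same.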