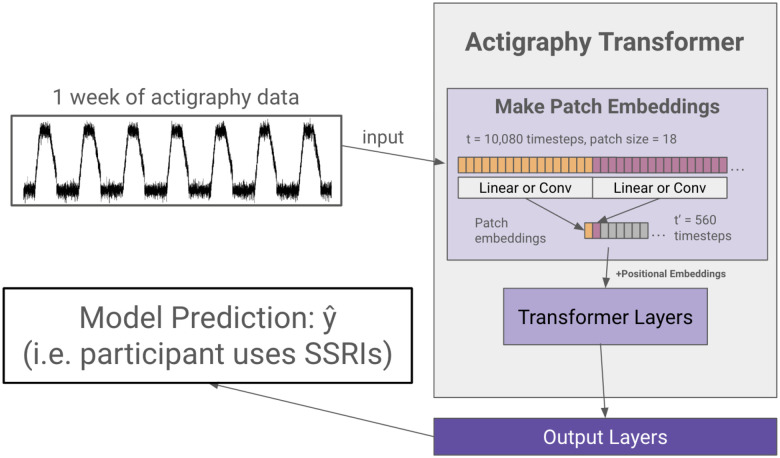

Figure 2. Actigraphy Transformer (Not Pretrained).

The figure presents an example of using an Actigraphy Transformer (AT) for classification. Actigraphy data is fed into the AT and split into patches. With a patch size of 18, the number of time steps fed into our transformer is reduced from 10,080 to 560 (10,080 / 18 = 560). We add positional embeddings to our patch embeddings before feeding them to the transformer layers. An output head is attached to the AT to perform the classification task.
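The patching arithmetic in the caption can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation. It assumes non-overlapping patches, a plain linear projection for the patch embedding, and randomly initialized positional embeddings. The names `patchify`, `embed_patches`, and `EMBED_DIM` (along with its value of 8) are hypothetical and chosen only for illustration.

```python
import random

def patchify(series, patch_size):
    """Split a 1-D sequence into consecutive, non-overlapping patches."""
    if len(series) % patch_size != 0:
        raise ValueError("series length must be divisible by patch_size")
    return [series[i:i + patch_size] for i in range(0, len(series), patch_size)]

def embed_patches(patches, weights, pos_emb):
    """Linearly project each patch, then add that patch's positional embedding."""
    embedded = []
    for patch, pos in zip(patches, pos_emb):
        # One output value per weight column (dot product of patch and column).
        projected = [sum(x * w for x, w in zip(patch, col)) for col in weights]
        embedded.append([p + e for p, e in zip(projected, pos)])
    return embedded

rng = random.Random(0)

SEQ_LEN = 10_080   # number of time steps, from the caption
PATCH_SIZE = 18    # patch size, from the caption
EMBED_DIM = 8      # hypothetical embedding width, not stated in the source

# Synthetic stand-in for one actigraphy recording (assumption: scalar samples).
series = [rng.random() for _ in range(SEQ_LEN)]

patches = patchify(series, PATCH_SIZE)
num_patches = len(patches)

# Randomly initialized parameters; in a real model these would be learned.
weights = [[rng.gauss(0, 0.02) for _ in range(PATCH_SIZE)] for _ in range(EMBED_DIM)]
pos_emb = [[rng.gauss(0, 0.02) for _ in range(EMBED_DIM)] for _ in range(num_patches)]

tokens = embed_patches(patches, weights, pos_emb)
print(num_patches, len(tokens[0]))
```

The resulting `tokens` list holds one embedding per patch, which is the sequence that the transformer layers would consume. Because self-attention cost grows with the square of the sequence length, shortening the input by a factor of 18 reduces the number of pairwise attention scores by a factor of 18² = 324.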