Abstract

Background

Long terminal repeats (LTRs) represent important parts of LTR retrotransposons and retroviruses found in high copy numbers in a majority of eukaryotic genomes. LTRs contain regulatory sequences essential for the life cycle of the retrotransposon. Previous experimental and sequence studies have provided only limited information about LTR structure and composition, mostly from model systems. To enhance our understanding of these key sequence modules, we focused on the contrasts between LTRs of various retrotransposon families and other genomic regions. Furthermore, this approach can be utilized for the classification and prediction of LTRs.

Results

We used machine learning methods suitable for DNA sequence classification and applied them to a large dataset of plant LTR retrotransposon sequences. We trained three machine learning models using (i) traditional model ensembles (Gradient Boosting), (ii) hybrid convolutional/long and short memory network models, and (iii) a DNA pre-trained transformer-based model using k-mer sequence representation. All three approaches were successful in classifying and isolating LTRs in this data, as well as providing valuable insights into LTR sequence composition. The best classification (expressed as F1 score) achieved for LTR detection was 0.85 using the hybrid network model. The most accurate classification task was superfamily classification (F1=0.89) while the least accurate was family classification (F1=0.74). The trained models were subjected to explainability analysis. Positional analysis identified a mixture of interesting features, many of which had a preferred absolute position within the LTR and/or were biologically relevant, such as a centrally positioned TATA-box regulatory sequence, and TG..CA nucleotide patterns around both LTR edges.

Conclusions

Our results show that the models used here recognized biologically relevant motifs, such as core promoter elements in the LTR detection task, and a development and stress-related subclass of transcription factor binding sites in the family classification task. Explainability analysis also highlighted the importance of 5’- and 3’- edges in LTR identity and revealed need to analyze more than just dinucleotides at these ends. Our work shows the applicability of machine learning models to regulatory sequence analysis and classification, and demonstrates the important role of the identified motifs in LTR detection.

Supplementary Information

The online version contains supplementary material available at 10.1186/s13040-024-00410-z.

Keywords: Eukaryote, Repeat, Transposable elements, Deep learning, CNN-LSTM, DNABERT, Sequence analysis, Regulatory mechanisms, Transcription factor binding sites, TFBS, SHAP score

Background

Long terminal repeats (LTRs) are essential regulatory sequences of retrotransposons and retroviruses, often found in high copy numbers in many eukaryotic genomes [1, 2]. LTR retrotransposons are the main repeat type in most plant genomes [3, 4]. While retrotransposons propagate through transcription and subsequent insertion, experimental methods for studying transposable elements are limited due to the inactivation of a majority of the genomic copies in most of the life cycle except for reproductive cells and in response to stress [5–7]. In addition, experiments are typically only carried out on a small number of model sequences and organisms.

Genomic sequence analysis can thus provide important additional information about the composition, classification, and function of LTRs in LTR retrotransposons and even in their evolutionarily contrasting element subtypes (superfamilies and families, see [8]). This approach has shown some success when applied to full-length LTR retrotransposon sequences in plants [9], including machine learning approaches [10], but has not been applied specifically to LTRs whose structure is more loosely defined than the structure of internal coding regions of the retrotransposons.

There are several advantages of studying LTRs in isolation. Firstly, the coding sequences add potentially a whole new set of signals for the learning algorithms, where codons of the resulting protein are present and reflect the limitations of protein structure and function that have been imposed on the DNA sequence in their evolution. These signals are expected to be orthogonal to the regulatory code of the LTRs and as such should not add much to the understanding of the LTRs. This allows us to study a simpler system with easier interpretation of the results. Secondly, as a result, the sequences we work with are shorter and thus better adapted to the fixed-length oriented ML procedures. Finally the approach could accommodate so-called solo-LTRs that are present in high numbers in some genomes, although we have decided against using them here, to maintain higher reliability of the dataset.

In a way, LTRs are “the closest cousins” of regulatory sequences such as promoters and enhancers. First, LTRs themselves function as promoters in transcription of their own LTR retrotransposon copy [11], not unlike what happens in human LTR retroviruses, such as HIV [12]. They can drive the transcription of neighboring genes [13]. Second, there is ample evolutionary evidence that LTR-TEs contribute to the makeup of older regulatory sequences either by inserting into them, nearby, or providing the initial building material for subsequent regulation [14]. Both LTRs and gene regulatory sequences (promoters, enhancers), have an increased ability to bind transcription factors [15].

In LTR retrotransposons, it is relatively easy to delineate the LTRs since they occur in two copies, one at each end of the transposable element (TE), and in the case of bona-fide insertions also carry tandem site duplications (TSDs) at their outer boundaries [16]. However, their internal composition is often difficult to unravel. Functional LTRs must always contain three regions important for the life cycle of the entire TE. These are known as U3, R and U5, and can be determined experimentally [17]. U3 is known to bind regulatory proteins important for transcription and its components are capable of serving both as enhancers and promoters. U5 may contain additional regulatory signals and it borders on or partially overlaps the primer binding site [18]. The R region is delineated by the transcription start and termination sites. Region identification by in-silico sequence analysis is problematic. Sequences of plant LTRs are variable not only in sequence composition but also in their length, ranging from around a hundred bps to several thousands [19]. We are looking for ways in which sequence analysis can shed light on to the internal structure of LTRs and identify regulatory regions, such as transcription factor binding sites (TFBS) and their type and absence/presence in different TE families.

Deep learning [20] and transformer-based models [21] have the potential to address these challenges, having been successfully applied in recent genomic data analyses [22, 23], including the classification of full length LTR retrotransposons [24]. While this approach demonstrated high classification accuracy, the learning process reflecting the biological features of LTR retrotransposon sequences has not yet been fully examined. Here we have employed these models for LTR sequence identification and classification, focusing on model interpretability as a tool to extract both existing and new biological knowledge about these regulatory sequences.

Due to the highly variable length and sequence composition of LTR sequences, LTR identification using common bioinformatics solutions poses a complicated problem. Machine learning (ML) methods can provide insight into complex relationships within the data with minimal prior assumptions due to the process of learning on input features. This allows us to uncover previously unrecognized properties, from the successful interpretation of the learned internal structure of such models. Here, we will focus on the state-of-the-art ML and deep learning (DL) methodologies that have already proven useful in similar scenarios.

The Gradient Boosting classifier (GBC) is an ensemble-based method which iteratively trains multiple weaker learners on the pseudo-residuals of learners from previous iterations, with the goal of improving upon their prediction errors. The accuracy of the model is dependent on its hyperparameters, such as the number of sub-estimators used, as well as the specific hyperparameters of the sub-estimators. This can be improved by techniques that identify an optimal combination of these parameters for a given dataset. In general, the ensemble model tends to be relatively robust to overfitting and achieves good results in fairly complex biological tasks [25, 26].

The combination of convolutional neural networks (CNN) and LSTM nodes has proven efficient both in natural language processing tasks and in the biological domain [27, 28]. The effectiveness of this combination stems from the ability of convolutional filters to capture local patterns, including, but not limited to, those of TFBS and the ability of the LSTM to recognize remote dependencies and the co-existence of these patterns. LSTM nodes are able to selectively filter out information about the input sequence through the use of a gating mechanism. This enables the LSTM network to retain relevant information, discard irrelevant details, and carry over crucial context from previous elements in the sequence [29].

The BERT family of models [30] is a relatively recent tool that has seen many successful applications, mainly in natural language processing but also recently in the challenge of transposable element classification [24]. The BERT model is a transformer-based neural network model that utilizes the mechanism of attention to recognize the context of words and embed sentences into a fixed-size vector. Such embeddings have a number of key properties, which make them useful for further downstream tasks. One example is that semantically similar sentences tend to have embeddings whose cosine distance is small. An important feature of popular BERT-based models is their pre-trained nature, meaning that fine-tuning to custom data requires much smaller datasets, making it also much faster than training from scratch. One such model pre-trained on DNA sequences is DNABERT. Analogical to natural language, the function of DNA is also based on its internal structure and the order of its sub-features, making the DNABERT model an attractive candidate when dealing with variable length sequences with unknown structure.

Machine learning and deep learning have seen many advancements in the area of model interpretability techniques, promoting better comprehension beyond the black-box approach that particularly deep learning models have been known for. Appreciation of how a model makes decisions will give us a better understanding of our data and the ability to detect class-specific features. Such applications provide a way to pinpoint key structural properties of data such as DNA sequences, where the order of elementary features defines a certain biological function.

The techniques used in this work include the direct analysis of first layer convolutional filters to infer sequential motif position weight matrices in the three hybrid neural network models. These have then been mapped to a database of known sequential motifs to identify their biological significance and function as suggested in related works [31].

Another method used in this work is SHAP [32], a framework for interpreting model predictions. The framework presents a way of approximating feature significance among multiple model architectures and presents a unified approach to model interpretation that often outperforms other known techniques such as LIME [33] and DeepLIFT [34] in both efficiency and consistency with human interpretation.

As machine learning and deep learning have already been successfully used to delineate promoters and TF binding sites [35] and lncRNAs [36] in genomic sequences, we set out to investigate here their ability to improve our understanding of LTR structure, modularity and genomic sequence composition. We also wanted to know whether models based on different algorithmic principles would show any shortcomings or advantages in regulatory sequence analysis.

Methods

Sequence data

LTR input data

Annotated full-length transposable elements were obtained from [37]. Available annotations were searched for LTR pairs (a pair of 5’- and 3’-LTRs belonging to the same full-length TE). Element insertion time was estimated based on LTR pair divergence as previously described [3]. A corresponding FASTA file containing all the sequences further used here is provided as Supplementary File 1.

A separate set of LTR-negative sequences was prepared for model training (Supplementary File 2). In supervised learning (used here) a set of DNA sequences that do not contain LTRs is necessary to allow the models to identify classification features that are typical of one set, or the other. Selecting a reasonable negative set was an important and considered step. Apart from generating random sequences, we also strove to include naturally occurring sequences, and sequences with more intricate internal structure. For this, sequences were extracted from the same species as those used for LTR extraction, however this time the annotations were used to avoid regions marked as LTRs. To further the complexity of the training dataset and reduce the influence of easily distinguishable features, a set of sequences generated using Markov chains trained on clusters of LTR sequences was added. First, LTR sequences were clustered using the program CD-HIT [38] with a relatively low similarity threshold of 70 % in order to create larger clusters of less similar sequences. On each cluster, a Markov chain model of order 2 was trained and used to generate artificial sequences [39]. These contained 3-mers often found in LTRs, but lacked the spatial and organizational properties of LTR sequences. Counts of these different types of non-LTR sequences are given in Supplementary File 3.

Although training on sequences with more complex differences than those between LTRs and random sequences is a more challenging task, the resulting data provides a more informative trained model, avoiding fitting on trivial or non-biological features, such as sequence composition or features that are typical of any plant genomic sequence.

Cleaning and filtering of input data

Due to annotations and their corresponding reference genomes not always being reliably identified from the published data, only annotations that produced a single-mode distribution of LTR insertion ages were used. We required an LTR sequence alignment identity of 0.7–1.0 which provided 513663 LTR pairs from 79 plant species.

In order to filter the database of LTR sequences and remove redundant, highly identical training examples, a clustering technique based on sequence similarity was applied using the program CD-HIT [38]. This approach was tested for sequence similarity >95 % and >85 % (these numbers were chosen to provide sequence sets of two sizes, while even the smaller set would still contain multiple members of individual TE families, which must have less than 80 % divergence along more than 80 % length by definition [8]). The identity percentage represents the lower boundary of similarity, above which, sequences have been clustered together. One representative was selected from each cluster to be used for training. Applying the 85 % boundary results in a relatively smaller, more strict training database, which should contribute to training more robust models. The resulting LTR database consisted of 176917 sequences and the LTR-negative database of 543310 sequences from 75 species. Supplementary File 4 provides a list of species and the respective LTR counts.

As the original annotated set contained only full-length transposable elements and their corresponding LTR sequences, we wanted to verify that no solo-LTRs from the annotated organisms’ DNA had ended up in the negative training set during the extraction process. Solo-LTR sequences are LTR sequences orphaned through a process of unequal homologous recombination between LTRs of the same full-length element [40]. The negative training database was therefore aligned to our LTR database in order to filter out any potential solo-LTR candidates.

LTR sequence comparison - classical approaches

Using a custom python script the Jaccard similarity index was counted for k-mers (k = 6) of all the LTRs and/or non-LTRs sequences of the corresponding plant species in order to compare (i) k-mers between Ty1/Copia and Ty3/Gypsy (Supplementary Figure 1a,b); and (ii) LTR and non-LTR sequences originated from the same plan genome (Supplementary Figure 1c). Dendrograms and barplots were generated in R (version 4.3.3) using the default ’hclust’ function with ’ape’ package and ggplot2 package, respectively.

Input sequence data preprocessing

Three sequence preprocessing strategies have been implemented, each corresponding to a particular model type and the type of input features that it can handle. These include the identification of transcription factor binding sites, one-hot encodings and k-mer tokenization.

TF binding site identification

To transform the sequences into a fixed-dimension feature space that can be utilized with most conventional models, the sequences were parsed with position weight matrices (PWM) of common plant transcription factor binding sites obtained from the JASPAR database [41] (Supplementary File 5). Sequences were searched for TFBS motifs using the Motifs module provided by the biopython package [42] and a feature vector of size 656 containing the number of occurrences of each motif was obtained for every input sequence. The vector was then transformed using the term frequency-inverse document frequency (TF-IDF) measure [43] to reduce the impact of less specific and common TF binding sites during classification. The advantage of using the TFBS identification approach for training models is the fixed size of the input vector, the easy interpretability and ability to use with most conventional machine learning models. The downside is that we make preemptive assumptions about the data and may omit other important properties, which are hidden within the sequences.

One-hot encoding

In order to maintain the structural information of input sequences, two further approaches to transforming the sequences were undertaken. The first method was transforming the sequences to one-hot encoded vectors. In contrast to other popular methods for encoding DNA sequences, such as k-mer frequency, one-hot encoding allows the CNN filters to fit on specific sequence patterns, maintains sequence structure, and improves the interpretability of the network where each filter can also be viewed as a PWM [31].

k-mer tokenization

The method used in combination with DNABERT-based fine-tuning tasks was k-mer tokenization. In this case, three different values of k (4, 5, 6) were tested for each classification task. The input sequence was split into k-mers and encoded into numerical vectors using the assigned tokenizer of the pre-trained DNABERT [22] for further training in the transformer model.

Models

Gradient boosting classifier

We implemented and executed a Gradient Boosting classifier (GBC) pipeline that consisted of three steps. First, we identified TFBS occurrences in input sequences using the src/utils/run_jaspar_parser.py script (see GIT repository in the Code availability section). We then used these TFBS occurrences as input for the pipeline containing a TF-IDF transformer and the GBC model, both from the scikit-learn version 1.3.0 [44]. The corresponding pipelines containing trained models are provided in Supplementary File 6.

CNN-LSTM

We trained the CNN-LSTM model by first preprocessing the sequences using one-hot encoding. We removed unknown bases from the input sequences (represented by “N”) and then encoded each of the 4 bases (A, C, G, T) using a one-hot vector where 1 represents the presence of the particular base in the specified position and all other positions are set to 0. The preprocessing utils are located in the src/utils/CNN_utils.py. We trained the model for input sequences of size 4000bp, using the pad_sequences function from the keras.utils module version 2.14.0 [45] to pad sequences shorter than 4000bp with a 0-vector and truncate sequences that are longer. We set up the CNN-LSTM model with optimal parameters detected during the hyperparameter sweep (described in Model training). The trained models are are provided in Supplementary File 6. The overall topology of the model is shown in Supplementary Figure 2.

DNABERT

For the pre-trained DNABERT model fine-tuning process, we loaded the model along with the assigned tokenizer at zhihan1996/DNA_bert_6 from the Hugging Face hub (huggingface.co) using the transformers module [46] with the AutoTokenizer and AutoModelForMaskedLM functions provided. The fine-tuned models for the specific classification tasks to draw predictions from pooled embeddings are available in Supplementary File 6 and work out-of the box for sequences below 512bps. For sequences above this length, the pooling strategy (described in Model training) needs to be implemented. The functionality for this is provided by the src/utils/seq_to_embedding.py script. The overall topology of the model is shown in Supplementary Figure 3.

Model training

Training and predictions on CNN-LSTM and DNABERT models were run using a NVIDIA A100 80 GB PCIe GPU. The datasets were divided into training, validation, and testing with the ratio of 70 %, 10 %, and 20 %, respectively.

The TFBS models were subject to a 5-fold cross-validation grid search in order to detect the optimal model and its hyper-parameters (Supplementary Table 1). During this grid search, Random forest classifier, Gradient Boosting classifier and Multilayer Perceptron classifiers were tested for different combinations of parameters (Supplementary File 7). The LTR and superfamily classification models were trained maximizing the binary cross entropy function. The family classifiers were trained maximizing the categorical cross entropy function weighted by the proportion of representatives per class (Supplementary Table 2).

The CNN-LSTM contains an input layer of size 4000, followed by a 1D convolutional layer, a max pooling layer followed by an LSTM layer into the output node. A zero-vector padding technique with masking was used for sequences shorter than 4000bp. All classifiers were trained using the Adam optimizer for 15 epochs, with batches of size 64, using the early stopping criterion with a 3 epoch patience on the validation set to prevent overfitting. The LTR and Superfamily classifiers were trained to optimize the binary cross entropy loss function, whereas the family classifier was trained optimizing the categorical cross entropy function. The models were connected to the Weights and Biases interface (https://wandb.ai) to monitor training progress and a hyperparameter sweep was run to detect the best network hyperparameters (Supplementary File 8).

For the training process of the DNABERT model, it was also connected to the W&B interface, and 3 k-mer sizes were tested for the various classification tasks - 4, 5, 6. All models were trained using the AdamW optimizer for 5 epochs, utilizing the early stopping criterion with a 2 epoch patience on the validation set, to prevent overfitting. The LTR and superfamily classifiers were trained optimizing the binary cross entropy loss function, whereas the family classifier was trained optimizing the BCE with logits loss function [47]. These models were trained on sequences under 510 bps in length, 512 being the standard maximum input sequence length of the BERT transformer model. For sequences larger than 510 bps, a window pooling approach was taken, where a window of size 510 corresponding to the input size of the trained model was moved along the input sequence with a stride size of 170 (one third of the of the model’s input length). The classification head of the model was removed, and the produced embedding vector of size 768 was average-pooled along the sequence, generating a final vector of size 768 containing averaged embeddings along the sequence. A convolutional network model was then trained to classify sequences based on the pooled embedding vector. This network uses 32 filters of size 3 pooled into a dense layer of size 32 and a logistic sigmoid at the activation function in the output layer for the LTR and superfamily classification tasks and a softmax layer of size 15 for the family classification task.

Model testing

The models were tested on the full reported testing subset. We further employed a 6-fold stratified cross-validation method ensuring that the distribution of classes remains the same across all folds [48]. A model was trained on 5 folds and the F1 score was calculated on the remaining validation fold. In order to verify that the results reported on the testing set are not a result of overfitting [49], we compared the obtained scores of the 6 models on the validation fold to the reported score on the test dataset using the Wilcoxon signed-rank test from the scipy.stats package [50], as the folds were not independent from each other.

To further support the claim that a model achieves better scores than the remaining two, we used a 10-fold cross-validation technique and compared the results using the the Friedman repeated sample test with the null hypothesis that the scores of the different models follow the same distribution [51]. In cases where the null hypothesis was rejected, we conducted a post-hoc analysis using the Nemenyi test [52] using the posthoc_nemeyi_friedman function from the scikit-posthocs package [53].

Trained model interpretation

SHAP

The SHAP (SHapley Additive exPlanations) is a model-agnostic approach used for estimating the impact that input features have on the output of the model. Shapley values exhibit desirable properties such as efficiency, symmetry, and additivity, making them an ideal foundation for understanding the contribution of each feature to a given prediction [54]. Due to the additive nature of Shapley values, they may be used for local, instance-wise explanation, as well as global understanding of input features across multiple instances when aggregated.

The algorithm works by training a model with feature i present during the training and a model with feature i not present. The outputs of these models are then compared and the SHAP value for feature i is computed as the difference of outputs of the models and scaled by the weighted average of all possible differences.

To produce the SHAP values used in this study, the python module SHAP (version 0.44.1) was used. The gradient boosting classifier feature importance was interpreted using the TreeExplainer [55] class provided by the package on the full testing dataset of LTRs and LTR-negatives in the case of LTR classification, and the full set of only LTRs in the case of superfamily classification. In order to validate the findings and evaluate the effect of the top 20 TFBS identified as significant, we conducted a perturbation of the specific TFBS, setting their value to a randomized one within the range where represents the vector of values for feature i in all of the tested sequences. We repeated this in 5 random iterations and observed the change of score of the model.

For interpreting the CNN-LSTM hybrid network model, the LTR test set was subsampled down to 2000 instances, and was parsed using the DeepExplainer module of the SHAP package to explain feature importance of input sequence positions.

For interpreting the k-mer based fine-tuned DNABERT model, the Explainer module with automatic selection of estimator was used to interpret the importance of k-mers in sequences within 512 length, for the selected set of 2000 LTR sequences. In order to obtain the importance of particular k-mers, we aggregated the SHAP values for each k-mer across the selected subsample. Their corresponding values were then scaled using Min-Max scaling separately for k-mers with largest negative and largest positive contributions.

Additionally, we analyzed the importance of specific regions of LTRs (sequence start, TATA box, TSS, and sequence end). First, we predicted the positions of TATA and TSS sites using TSSPlant [56]. Next, we assessed the importance of each sequence position across 2000 subsampled LTR sequences by either calculating the sum of squares of SHAP values in each position (CNN-LSTM model) or calculating the mean SHAP value of all k-mers containing a given position (BERT). Median position importance, centered around specific regions, was then visualized using the plotHeatmap function from deepTools [57].

CNN filter analysis

To analyze the learned CNN filters, they were first extracted from the trained network, then normalized in the following way: Ŝ where Ŝ represents the normalized filter, S the original filter and is a scaling factor whose value was set to 3 as suggested in [31]. These normalized filters were then converted to the MEME format using the jaspar2meme tool from the meme suite version 5.5.5. [58] and compared to the JASPAR CORE 2022 [41] plant database using the Tomtom motif comparison tool [58] with a cutoff E-value of 0.1.

Results

As mentioned above, our aim was to specifically analyze plant LTR sequences that are more variable and dynamic, and therefore more difficult to study than coding regions of LTR retrotransposons. Due to their inherent modularity as promoters and higher variability in overall length, comparing and clustering them via sequence alignment is more complicated. An alternative approach appears to be motif identification. Dedicated software, such as MEME [59] has often been successful in extracting motifs from promoters. However, the absence of expression data makes the identification of common motifs much more difficult. A preliminary analysis has also shown that LTRs from different superfamilies and families cannot be reliably clustered based on unique k-mer content (Supplementary File 9).

In response to these shortcomings of the above-mentioned classical approaches, we decided to employ forms of machine learning that allow flexibility both in terms of the motifs being sought (we may not necessarily know them in advance) and their relative or absolute positions within the LTR.

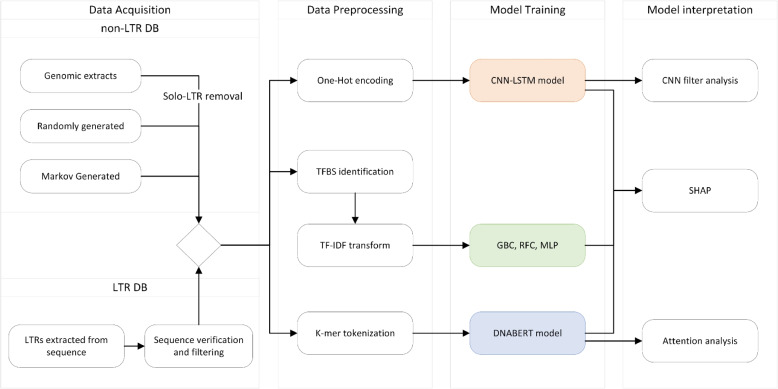

The main focus of our work was to discover features of plant LTRs that contribute the most to the ability of a machine learning model to detect or classify LTR nucleotide sequence of plant LTR retrotransposons. Figure 1 shows the overall data flow and tools used here, which consisted of the input data collection and filtering, its preparation and encoding for subsequent use in ML models, model construction and learning, the final interpretation of the results, and identification of the most influential features.

Fig. 1.

Data processing and computational workflow diagram. Input DNA sequences (positive and negative LTR sets) were pre-processed for the three alternative modeling approaches (to obtain TF binding site presence, one-hot encoding, and k-mers). The last two columns show the software tools used in individual branches of the analysis

Three main tasks of this study were: (i) LTR detection - learning to distinguish LTR and LTR-negative sequences; (ii) Superfamily classification - learning to distinguish Ty3/Gypsy and Ty1/Copia LTRs; and (iii) Family classification - classification into any of the 15 families selected for the input data.

Three types of machine learning models were employed for the above DNA sequence classification tasks (see Section 3.3 of Methods for more details). First, a conventional ML model that uses TFBS counts as input, specifically a Gradient Boost classifier (GBC). Second, a hybrid CNN-LSTM network trained on one-hot encoded sequences and finally, a pre-trained, transformer-based DNABERT model [22] further trained on k-mer-tokenized sequences.

Recently several large-scale studies of plant LTR retrotransposons have been carried out. To provide us with a sufficiently high number of complete annotations we chose a study by [37] that produced a comprehensive annotation dataset of intact LTR retrotransposons of 300 plant genomes. Altogether, 2,593,685 LTR retrotransposons are available in this dataset, however after applying additional criteria for quality and redundancy, we ended up with 176,917 LTR retrotransposons (and their corresponding LTR pairs) from 75 plant species (see Sequence data in Methods). To facilitate machine learning, an LTR-negative sequence dataset was prepared as described in Sequence data in Methods.

Model training

The three types of models were trained on the input data as described in the Model training section in Methods. The accuracy of the trained models as evidenced by computed F1 characteristics was in the range 0.68–0.89 (Table 1). Binary classifications of LTRs and their superfamily membership were easier to learn, while the hybrid CNN-LSTM model was the best overall. It is evident that learning just on JASPAR TFBS (in GBC) leads to lower accuracy, especially in LTR detection (F1=0.73 v. 0.85; difference of 0.12), while the differences in family and superfamily classification tasks are much lower (difference of 0.06–0.07). The lower differences in accuracy between models at the family level could reflect the biological fact that all LTR retrotransposons share the common elements important for their life cycle (and also for their detection and classification at higher levels), while less information is available from features recruited by individual families. More detailed results of the learning step are available in Supplementary Figures 4–7. The post-hoc testing conducted on pairs of models (see Model testing in Methods) has failed to detect significant differences between the DNABERT and CNN-LSTM models, however the scores achieved by the GBC model were identified to be significantly lower than those of the other two models across all of the tasks. Results of the testing are available in Supplementary Table 3.

Table 1.

Precision, recall and F1 measure of three types of models in the three tasks

| Task | Model | Precision | Recall | F1 measure | p-value |

|---|---|---|---|---|---|

| LTR detection | GBC | 0.74 | 0.74 | 0.73 | 0.09 |

| CNN-LSTM | 0.85 | 0.85 | 0.85 | 0.16 | |

| DNABERT | 0.83 | 0.83 | 0.83 | 0.11 | |

| Superfamily classification | GBC | 0.82 | 0.82 | 0.82 | 0.31 |

| CNN-LSTM | 0.89 | 0.89 | 0.89 | 0.84 | |

| DNABERT | 0.85 | 0.85 | 0.85 | 0.81 | |

| Family classification | GBC | 0.71 | 0.69 | 0.68 | 0.68 |

| CNN-LSTM | 0.77 | 0.73 | 0.74 | 0.84 | |

| DNABERT | 0.73 | 0.73 | 0.73 | 0.22 |

GBC Gradient Boosting classifier, CNN-LSTM A hybrid network model, DNABERT pre-trained BERT. The best F1 values for a given task are shown in bold. P-values correspond to statistical testing on cross-validated sets by the Wilcoxon signed-rank test with the null hypothesis that the reported accuracy is not significantly diffferent (see Model testingMethods)

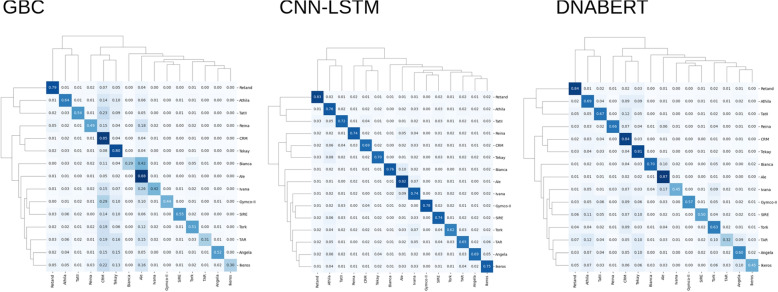

The superiority of the CNN+LSTM hybrid network model can be clearly seen in the family classification task (Fig. 2). Despite having generally lower recall in the three most numerous families (Ale, CRM, Tekay), this network had however maintained higher precision than the other models (see also Supplementary Figures 8 and 9). When compared in the cross-validation analysis, the accuracy of GBC models is significantly lower than that of the other two on all three classification tasks (Supplementary Figure 10).

Fig. 2.

Cross-accuracy of the three model types in the family classification task. GBS - Gradient Boosting classifier; CNN-LSTM - a hybrid network model; DNABERT - pre-trained BERT

Model interpretation

While the models trained to detect and classify LTRs can be useful in themselves, they largely represent black box models that provide little understanding of how these classifications actually materialized. This is a well-known and universal problem of machine-learning, and particularly deep-learning methods. Current deep learning approaches try to address this problem by specialized post-training analysis of the model and its inputs and outputs. We adapted two such approaches to the LTR classification problem presented here, some of which can only be used on specific model types. Derivation of Shapley additive explanations (SHAP) is by principle a model-agnostic method and can be applied to all models. Convolutional filter analysis was used for neural network models. The methods for interpretable machine learning used here are described in more detail in the Trained model interpretation section in Methods.

LTR

SHAP explanations were used on the GBC model to gauge the effect of TFBS in the analyzed DNA sequences and to calculate SHAP values along the analyzed LTR sequences in CNN and DNABERT models (see Trained model interpretation in Methods). Filter analysis was used for CNN model interpretation (see Trained model interpretation section in Methods).

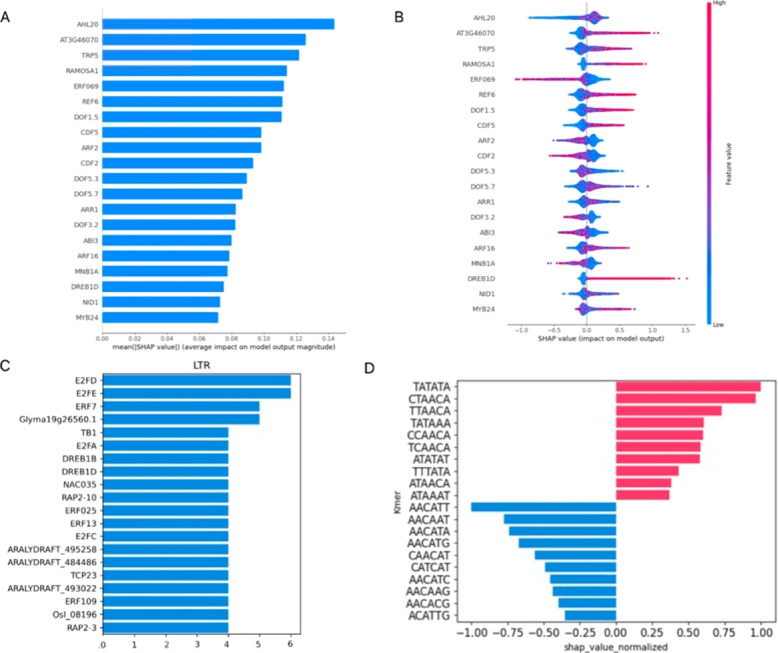

SHAP TreeExplainer (GBC)

First we analyzed models trained to recognize LTRs (as opposed to other random, or genomic sequences) (Figs. 3 and 4). SHAP analysis of the GBC model shows the top 20 most impactful transcription factor binding sites (TFBS) present in LTR sequences and contributing most towards their classification (Fig. 3a) as identified by the SHAP algorithm. The effects of perturbations of these significant features (see Trained model interpretation in Methods) on classification accuracy display varying degrees of effect, ranging from a 1–13% decrease in the LTR classification task and 0–8% in the superfamily detection task (Supplementary Figure 11).

Fig. 3.

Main results of explainability analysis carried out on trained LTR detection models. A Top twenty transcription factor binding sites (TFBS) with the highest mean SHAP value contribution (as input features) to the GBC model performance in LTR classification. Accross all LTRs the presence or absence of these TFBS was most important for classification of sequences as LTRs by the model (compared to other TFBS). B Beeswarm plot showing the extent to which input features influenced model output. Color codes for the TF-IDF transformed occurrences of that particular TFBS as described in Models in Methods, horizontal axis shows SHAP values, indicating whether the effect of a TFBS presence in the analyzed sequence was positive, or negative. C TomTom hits of first-layer CNN filters on JASPAR Core 2022 database. The X axis represents the number of CNN filters that were mapped to the specific TFBS as described in the Trained model interpretation section in Methods. D Top contributions of individual k-mers to DNABERT classification as determined by SHAP analysis. Positively valued k-mers influence the classification towards the LTR class, while negative values influence the classification towards the non-LTR class

Fig. 4.

SHAP analysis of trained LTR detection models. k-mer based SHAP values were calculated along individual LTR sequences. To visualize their alignment between different sequences, sequences were aligned (from left to right) by their first base (start), predicted TATA box (TATA), predicted transcription start site (TSS), and their last base (end). Averaged SHAP values are shown as a line graph above, individual sequence values are color-coded. A CNN model. B DNABERT model

To get an indication about which TFBS, or what kind of regulation might be specific to LTR retrotransposons in plants, we ran this set through gProfiler GOSt functional analysis [60]. Apart from many hits to general TF-related terms (such as nucleus, DNA-binding, transcriptional regulation, etc.), the biological process results have also shown interesting subsets, namely: (i) response to stimulus, (ii) anatomical and multicellular development, and (iii) reproductive process (Supplementary Figures 12–14).

Beeswarm plots of the same analysis (Fig. 3b) differentiate between the positive and negative contribution of the individual TFBS to classification. Specifically, ERF069 and DREB1D show a particularly sharp boundary of high/low SHAP values and positive/negative classification, although in opposite directions. ERF069 is an ethylene responsive element mostly absent from LTRs, while DREB1D on the other hand, is a dehydration responsive element that is associated with LTRs. A complete list of evaluated TFBS and their performance in the LTR classification task using the GBC model is provided as Supplementary File 10.

Filter analysis (CNN)

Another opportunity to look at TFBS as instrumental in LTR classification was via filter analysis of the CNN model. Figure 3c shows the top 20 results from the comparison of first-layer convolutional filters to JASPAR database TFBS, sorted based on the number of motif hits. gProfiler GOSt functional analysis shows results similar to the above paragraph, however in this case, only response to stimulus was present as a subset of TF-specific terms, embodied by the only common hit with the above analysis in DREBD1B. Filter analysis brought up several E2F and ERF family members’ binding sites. While the former are cell-cycle progression regulators, the latter are ethylene responsive factors that may have been used by the model as a negative indicator of LTR sequences (analogically to SHAP results in GBC above). A complete list of evaluated filters/TFBS and their presence as filters in the CNN model is provided as Supplementary File 11.

DeepExplainer (CNN)

The DeepExplainer module was implemented to visualize the location of sequence positions with the highest SHAP values in the CNN-LSTM model (Fig. 4a). To visualize the alignment of possible signals between different sequences, the sequences were aligned by their first base (start), predicted TATA box, predicted transcription start site (TSS), and their last base (end) and shown as line graphs and heatmaps. In both the averaged line graph values and the heatmaps, three signals pop up as locations instrumental in LTR classification. They are the first few and the last few bases of the LTRs, as well as the TATA box predicted with TSSPlant [56]. It is also apparent in the CNN model, that the 5’ ends of the LTRs have a higher density of informative k-mers than their 3’ ends, possibly reflecting a typical TFBS position upstream of the TSS.

Explainer (k-mers, DNABERT)

Similarly to the SHAP analysis on TFBS and sequence positions, the analysis can be carried out on kmer-based models to identify k-mers present in LTR sequences that, in a given instance contribute more significantly to the classification of the sequence as an LTR or a non-LTR. The top 20 k-mers for the LTR classification task via the DNABERT model are shown in Fig. 3d. We have identified overlaps among the k-mers that indicated their origin from a wider sequence motif (Supplementary File 12) and found the following putative consensus motifs to be present: (i) TATA[AT]A (positive SHAP values, a likely TATA box), (ii) [CT][CT]AACA (positive SHAP value, likely 3’ end of LTR), and (iii) CAACAT[GT]G (negative SHAP value, unknown origin). A complete list of evaluated k-mers in the DNABERT model and their SHAP values is provided as Supplementary File 13. Visualization of signal alignment was conducted in the same way as previously described in LTRs to show the sequence regions containing significant k-mers (Fig. 4b).

Superfamily

Models trained to recognize superfamilies were analyzed analogically to those detecting LTRs and the respective visualizations can be seen in Supplementary Figures 15,16. Interestingly, gProfiler analysis of top 20 TFBS instrumental in superfamily classification by the GBC model did not show any biological process outside general transcriptional regulation as overrepresented in the set. The top 20 list shared 6 TFBS with a similar list from the LTR classification task, namely AHL20, DOF5.3, DOF5.7, ARF 16, ERF069, AT3G46070. Unlike the LTR classification task above, DeepExplainer visualization has not demonstrated the importance of TATA box sequences for superfamily classification, although a few sequences did show some higher SHAP values in the vicinity of the TATA box, some of which could be other core promoter elements preceding TATA. The extreme ends of the LTR sequences remained informative (Supplementary Figure 16), suggesting that these sequences may exist in superfamily variants, or at least are more conserved in one superfamily compared to the other (further analyzed in the following subsection and Table 2).

Table 2.

The most represented k-mers at the 5’ and 3’ ends of LTRs

| Copia | Gypsy | ||||

|---|---|---|---|---|---|

| 5’..3’ ends | count | assigned | 5’..3’ ends | count | assigned |

| TGTT..AACA | 768 | TGTT..AACA total: 1870 (84%) | TGTT..AACA | 248 | TGTT..AACA total: 248 (24%) |

| GTTA..AACA | 558 | TGAT..ATCA | 292 | TGAT..ATCA total: 652 (63%) | |

| GTTG..AACA | 544 | GATG..ATCA | 202 | ||

| TGTA..AACA | 183 | TGTA..AACA total: 364 (16%) | GATA..ATCA | 158 | |

| GTAA..AACA | 181 | TGTC..GACA | 139 | TGTC..GACA total: 139 (13%) | |

Left Copia superfamily, the most frequent tetramers are 5’-TGTT..AACA-3’; Right Gypsy superfamily, the most frequent tetramers are 5’-TGAT..ATCA-3’

LTR 5’ and 3’ edge analysis

The extremes of LTR sequences (at both the 5’ and 3’ ends) repeatedly surfaced in our explainability analysis results as informative. To get a more detailed picture of sequences present at these locations in various LTR subsets, we also counted the 5’ and 3’ k-mers in the input data. Table 2 shows the assignment of the top five tetramer pairs of Gypsy and Copia superfamily LTR ends to one of the two most frequent pairs, 5’-TGTT..AACA-3’ and 5’-TGAT..ATCA-3’. The assignments were based on the observation that in some LTR annotations, tetramers were apparently shifted by 1 base, presumably because of annotation imprecisions in the sourced files (based on this logic, as an example, GTTA..AACA was assumed to be shifted by 1 base at the 5’-end and therefore assigned to TGTT..AACA). The assumption of a shift in Table 2 was made in all cases where shifting the tetramer by 1bp improved the complementarity of the 5’ and 3’ tetramers and led to the presence of the canonical TG..CA pair. Interestingly, 16 % of Copia edges had only 3bp complementarity, compared to the rest of the five most occuring tetramer pairs in each superfamily shown in the table.

Family

Models trained to recognize families were analyzed analogically to those detecting LTRs and the respective visualizations can be viewed in Supplementary Figure 17. Significant signals typical for specific positions within the LTR all but disappeared in visualization of SHAP values from CNN and DNABERT models for individual families (Supplementary File 14). Top TFBS from GBC SHAP analysis had no overlap with the corresponding LTR and superfamily sets. A small number of families (namely Ale and Bianca) show a weak signal at their TATA box in the DNABERT model visualization grid. The same data show most families present a weak signal at the LTR’s 5’ end but only a few at their 3’ end (Ale, Bianca, Ivana, TAR and Tork). The CNN-LSTM visualization grid shows weak signals at both 5’ and 3’ ends, however no apparent learning around the TATA box. Clearly, the lower accuracy of family-level classification comes with very weak signal for any specific TF or k-mer that would have a specific location within the LTRs. However, when subjected to overrepresentation analysis with gProfiler (Supplementary Figure 14), six of the top 20 TF involved belonged to a functional group responding to plant hormones, specifically auxin and abscisic acid. Related overrepresented biological functions in eight TFBS included cell communication, meristem localization and phyllotaxis (PHY3, AIL6, AIL7, WRKY62, FUS3, ABF2, ABF3, BHLH112 ).

Discussion

We used machine learning methods to predict long terminal repeats (LTR) of plant LTR retrotransposons and to classify LTR sequences into retrotransposon families. Our results show that the models used here recognized biologically relevant motifs, such as core promoter elements (TATA box), as well as development- and stress-related subclasses of TF binding sites. Our analysis also reinforced the importance of 5’- and 3’- edges in LTR identity.

While our work is not the first to apply machine learning methods to LTR retrotransposon analysis, none of the previous studies analyzed LTRs in isolation as we did here. One of the earliest ML approaches, based on full-length TE sequences, was reported by [61] who used Random Forest-based models to detect and classify LTR retrotransposons into superfamilies, achieving average F1 values of 0.56. [62] trained a multi-layer perceptron model based on k-mers to classify full-length LTR TEs. The F1 scores on their data reached 0.95. This is a better performance than our deep learning models here at or above the superfamily level (0.73–0.85; Table 1), however it is also expected, since the internal parts of LTR TEs contain protein coding sequences that are more amenable to sequence alignment, as such form the basis of TE classification systems, and are generally easier to detect and cluster. Other previous works aimed at classifying LTR TEs as a class among other repeat classes used neural networks and hierarchical repeat sequence clustering [63, 64] to achieve precision of LTR-TE classification 0.88 and 0.94. These variances can be ascribed to different motivation. While we focused on model explainability and the associated detection of biological motifs in the analyzed LTR sequences, the other studies were mostly motivated by increasing the speed and/or precision of the classification tasks compared to possibly simpler but time-consuming procedures, such as sequence alignment. The use of isolated LTRs allowed us to focus on specific sequences that typically make up regulatory DNA, not only in LTRs but also in promoters and enhancers. Unlike the above approaches, we also carried out classification at the family level. While these models were the most difficult to analyze for explainability, and the least informative compared to the two higher levels, they still achieved a respectable F1 of 0.68–0.75.

Looking at previous attempts in this area, clearly models with a CNN component tend to be the most popular and give the best results [65, 66]; see also Table 1).

We tested three different techniques to achieve model explainability and identify features that the models learned, which then contributed most to model accuracy (Fig. 5). Interpretation of DNABERT attention heads (not shown) was problematic. Among other things, we did not find an effective way to correlate the data with the other methods (CNN filter analysis and SHAP) and therefore decided not to pursue this avenue of investigation. CNN filter analysis has shown that many of the filters learned in the neural network had resemblance to known JASPAR TFBS motifs and served to pinpoint the most prominent TFBS recognized by the models. Their biological underpinnings are discussed below. It turned out that SHAP was the most effective method to analyze the trained models, which allowed us to identify specific sequence motifs used by the models, such as the TATA-box motif and 5’- and 3’- ends of LTRs, that contributed most to LTR identification and classification and are identical to motifs described in plant TEs before [67]. Also, being a model-agnostic method, the use of SHAP allowed us to compare influential features across models using the same metric.

Fig. 5.

A hierarchy of promoter-like regulatory elements (including LTRs). black - subsets of regulatory sequences that ML models are trained on; red - sequence motifs specific for respective subsets that the models can use to classify the group correctly. Block diagram shows the structure of a typical LTR with sequence motifs assembled from k-mers discovered by the DNABERT model trained here

The dependence of models on TFBSs in LTRs is consistent with the concept that LTRs are regulatory regions capable of controlling the transcription of elements in a spatially and temporally specific manner [8]. By searching biological roles of the most prominent TFBSs, we found them to be associated particularly with (i) transcriptional activation of genes in stress conditions (DREB1, REF6, ERF7, ARR1), (ii) binding sites for transcription factors (TFs) acting during flowering and germline development (RAMOSA1, CDF5, DOF5.3, E2FA,C,D,E, MYB24, NID1,TB1, AT3G46070), (iii) binding sites for tissue-specific transcriptional repressors (AHL20, CDF2, ARF2), and (iv) binding sites for chromatin remodelers involved in DNA demethylation (REF6). This observation gained using LTRs from 75 plant species here should be interpreted with caution because TFs form large gene families of neo-functionalized and sub-functionalized genes sharing identical or similar TFBSs. TFs typically account for 5–10 % of genes in a species genome [68], for example, Arabidopsis thaliana has approximately 2300 TFs, which corresponds to 8.3 % of its total genes [69]. Some TFs also either require the binding of homodimers to two TFBSs at some distance apart or interaction with other TFs bound to a given locus to initiate transcription or other processes [70, 71]. Moreover, the roles of individual TFs have only been studied in a few model species to date, and it is unclear to what extent their functions are conserved in plants.

However, some of the prominent TFBSs recognized by the models have already been found and functionally validated in TEs. Therefore, we assume that our models have used TFBSs preferred in LTRs and related to general rules for transcriptional regulation of LTR retrotransposons. Of particular importance are TFBSs for binding stress-response TFs. Activation of TEs by abiotic and biotic stress is supported by a wealth of experimental data in A. thaliana (e.g. [72, 73], rice [74], sunflower [75] and other species [76]. Although earlier studies linked the activation of TEs by stress to epigenetic changes (euchromatinization of TEs), a number of TEs are now known to contain TFBSs identical to those of stress-responsive genes. A textbook example is ONSEN, a heat-induced (high temperature induced) LTR retrotransposon containing a heat shock element (HSE) for heat shock factor binding [77, 78]. In maize most TEs (gypsy, copia, LINEs) activated by stress contain motifs for stress-responsive DREB/CBF transcription factors [79], which have been recognized by our ML models. DREB/CBF and REF6 TFBSs have been detected in Gypsy and Copia TEs activated by heat stress in Arabidopsis [80]. REF6 is a plant-unique H3K27 demethylase that targets DNA motifs via its zinc-finger (ZnF) domain [81]. Its presence in TEs suggests that TEs are able to actively resist the host methylation machinery and/or control their epigenetic state in response to stress conditions. In addition, there is increasing evidence that TEs, by transferring stress-response TFBSs to the vicinity of genes, rewire new transcriptional networks that enable the host adaptation to stress [80, 82, 83] and changing environmental conditions [84].

Another frequent group of TFBSs is bound by TFs expressed in floral meristems and reproductive organs. TB1 (identified here by ML models) has been previously confirmed in the Hopscotch retrotransposon in maize, where it is expressed in developing glumes [85]. E2F TFBSs were found in several families of TEs in Brassica species, and E2Fa binding to TEs has been functionally validated in vivo [82]. E2F TFs regulate various processes mostly in developing pistils and anthers, and frequently TE-harbored MYB24, NID1, CDF5, and AT3G46070 TFs also show localized expression in stamens (based on https://bar.utoronto.ca/efp/cgi-bin/efpWeb.cgi, Klepikova Atlas). These findings suggest that TEs prefer certain short-term and localized windows in their host’s life cycle for transcription and transposition. Transposition in floral meristems or in reproductive cells allows TEs to minimize their spatiotemporal activity, thereby lowering the risk of reducing host fitness by deleterious insertions in somatic cells, while increasing the probability of transmitting new TE copies to the next generation. Clues to this behavior can be seen in dioecious plants with heteromorphic sex chromosomes. For example, in Silene latifolia and Rumex acetosa, the accumulation/absence of most LTR retrotransposons on Y chromosomes can be explained by transposition in either the male or female reproductive organs [86–91]. A very similar situation emerges in animals as well. For example, many TEs harbor DNA binding sites for pluripotency factors and are transiently expressed during the embryonic genome activation of primates [92].

Taken together, the ML tools opted for TFBSs, many of which have been independently described by other methods. We consider them to be indicative of the biology of TEs and the TE/host interaction. We can speculate that, in general, LTRs contain more often binding sites of TFs that ensure reproductive cell-specific activity or activity triggered by biotic and abiotic stresses. This is advantageous for both TEs and the host because (i) host viability is not threatened by deleterious TEs in somatic cells, (ii) transgenerational reproduction of TEs is ensured, and (iii) the evolutionary plasticity of the host genome is increased by new regulatory networks [93]. On the other hand, no single TFBS defines a specific taxonomic group of TEs. This is consistent with the notion that TEs can co-opt new TFs from other TEs and genes, and adapt their strategy to changing conditions in the host genome. As for family-level classification, we speculate that the combination of a low number of examples and a weak mostly distributed signal for each family may be partly responsible for the lower accuracy and lack of specific signals to observe in the results. This signal may have been just strong enough to bring up a small group of TFs in a gProfiler over-representation analysis, rather than showing a specific TF. To better classify LTRs at the family level, a larger training dataset may be necessary.

Our machine learning approach could be advantageous not only for a better LTR retrotransposon and solo LTR identification and annotation but could be useful also for the prediction of potential TF binding sites within LTRs. This way, our tool can also contribute to revealing the involvement of these mobile genetic elements in cellular regulatory networks.

Conclusion

In this work we tested the ability of deep learning techniques to learn features specific for certain sets of plant LTR sequences, and when combined with explainability analysis, to pinpoint regions of LTRs responsible for their accuracy. We found three features used by the trained models: i) 5’- and 3’- edges, ii) TATA-box region, and iii) TFBS motifs and discussed their biological relevance. Our work shows the applicability of the used models and the associated explainability analysis to the study of regulatory sequences and their classification.

Supplementary information

Supplementary Figures Supplementary Figure 1 - LTR sequences comparison - classical approaches. Supplementary Figure 2 - CNN-LSTM model topology Supplementary Figure 3 - DNABERT model topology Supplementary Figure 4 - LTR detection contingency tables Supplementary Figure 5 - LTR detection accuracy Supplementary Figure 6 - Superfamily classification contingency tables Supplementary Figure 7 - Superfamily classification accuracy Supplementary Figure 8 - Family classification contingency tables Supplementary Figure 9 - Family classification accuracy Supplementary Figure 10 - Cross-validation - differences between three models Supplementary Figure 11 - Perturbation analysis Supplementary Figure 12 - gProfiler GOSt analysis of the top 20 GBS model TFBS (LTR task) Supplementary Figure 13 - gProfiler GOSt analysis of the top 20 CNN model TFBS (LTR task) Supplementary Figure 14 - gProfiler GOSt analysis of the top 20 CNN model TFBS (sf task) Supplementary Figure 15 - Main results of explainability analysis (sf classification) Supplementary Figure 16 - DeepExplainer analysis of trained superfamily detection models Supplementary Figure 17 - Results of explainability analysis (family classification) Supplementary Figures Supplementary Table 1 - Tested hyperparameters Supplementary Table 2 - Loss functions Supplementary Table 3 - Cross-validation between models Supplementary Table 4 - Final hyperparameter values Supplementary Files File1_LTR_sequences.fa.gz File2_LTR-negative_sequences.fa.gz File3_non-LTR_counts.tab File4_LTR_species_counts.tab File5_JASPAR_matrices.tab File6_Trained_models.zip File7_Gridsearch_results.json File8_Hyperparameter_sweep.zip File9_Clustering_with_kmers.pdf File10_GBC_SHAP_values.tab File11_CNN_filters.tab File12_kmer_motifs.pdf File13_DNABERT_kmer_SHAP_values.tab File14_CNN_BERT_Grid.pdf

Acknowledgements

We thank Christopher Johnson for critical reading of this manuscript.

Code availability

GIT repository https://github.com/jakubhorvath/LTR_classification.

Authors’ contributions

JH, PJ and ML participated in data collection and preparation, JH carried out model implementation and training; JH, PJ, MK and ML generated final results and visualizations; all authors participated in data interpretation and writing the manuscript.

Funding

Financial support for this work was provided by a grant from the the Czech Science Foundation number 21-00580S to EK and ML, 24-11400S to EK, and 22-00364S to ZK. The work was supported from the project TowArds Next GENeration Crops, reg. no. CZ.02.01.01/00/22_008/0004581 of the ERDF Programme Johannes Amos Comenius.

Data availability

Supplementary figures, tables, files, frozen source code and data available via Zenodo archive 10.5281/zenodo.13958598.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Footnotes

The original version of this article was revised: The funding statement has been updated.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Change history

12/30/2024

A Correction to this paper has been published: 10.1186/s13040-024-00417-6

Contributor Information

Jakub Horvath, Email: jakubhorvath119@gmail.com.

Matej Lexa, Email: lexa@fi.muni.cz.

References

- 1.Baucom RS, Estill JC, Chaparro C, Upshaw N, Jogi A, Deragon JM, et al. Exceptional, non-random distribution, and rapid evolution of retroelements in the B73 maize genome. PLoS Genet. 2009;5(11):e1000732. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Klaver B, Berkhout B. Comparison of 5’ and 3’ long terminal repeat promoter function in human immunodeficiency virus. J Virol. 1994;68(6):3830–40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Jedlicka P, Lexa M, Kejnovsky E. What Can Long Terminal Repeats Tell Us About the Age of LTR Retrotransposons, Gene Conversion and Ectopic Recombination? Front Plant Sci. 2020;11. [DOI] [PMC free article] [PubMed]

- 4.Luo X, Chen S, Zhang Y. PlantRep: a database of plant repetitive elements. Plant Cell Rep. 2022;41:1163–6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Bennetzen J, Wang H. The contributions of transposable elements to the structure, function, and evolution of plant genomes. Annu Rev Plant Biol. 2014;65:505–30. [DOI] [PubMed] [Google Scholar]

- 6.Grandbastien MA, Audeon C, Bonnivard E, Casacuberta JM, Chalhoub B, Costa APP, et al. Stress activation and genomic impact of Tnt1 retrotransposons in Solanaceae. Cytogenet Genome Res. 2005;110(1–4):229–41. [DOI] [PubMed] [Google Scholar]

- 7.Sigman MJ, Slotkin RK. The First Rule of Plant Transposable Element Silencing: Location, Location, Location. Plant Cell. 2016;28(2):304–13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Wicker T, Sabot F, Hua-Van A, Bennetzen JL, Capy P, Chalhoub B, et al. A unified classification system for eukaryotic transposable elements. Nat Rev Genet. 2007;8:973–82. [DOI] [PubMed] [Google Scholar]

- 9.Arango-López J, Orozco-Arias S, Salazar JA, Guyot R. Application of Data Mining Algorithms to Classify Biological Data: The Coffea canephora Genome Case. In: Solano A, Ordoñez H, editors. Advances in Computing. Cham: Springer International Publishing; 2017. pp. 156–70.

- 10.Orozco-Arias S, Candamil-Cortes MS, Jaimes PA, Valencia-Castrillon E, Tabares-Soto R, Isaza G, et al. Automatic curation of LTR retrotransposon libraries from plant genomes through machine learning. J Integr Bioinform. 2022;19(3):20210036. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Casacuberta JM, Santiago N. Plant LTR-retrotransposons and MITEs: control of transposition and impact on the evolution of plant genes and genomes. Gene. 2003;311:1–11. [DOI] [PubMed] [Google Scholar]

- 12.Dutilleul A, Rodari A, van Lint C. Depicting HIV-1 transcriptional mechanisms: a summary of what we know. Viruses. 2020;12(12):1385. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Cui X, Cao X. Epigenetic regulation and functional exaptation of transposable elements in higher plants. Curr Opin Plant Biol. 2014;21:83–8. [DOI] [PubMed] [Google Scholar]

- 14.Thompson PJ, Macfarlan TS, Lorincz MC. Long Terminal Repeats: From Parasitic Elements to Building Blocks of the Transcriptional Regulatory Repertoire. Mol Cell. 2016;62:766–76. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Hermant C, Torres-Padilla ME. TFs for TEs: the transcription factor repertoire of mammalian transposable elements. Genes Dev. 2021;35(1–2):22–39. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Turcotte K, Srinivasan S, Bureau T. Survey of transposable elements from rice genomic sequences. Plant J. 2001;25:169–79. [DOI] [PubMed] [Google Scholar]

- 17.Arkhipova IR, Mazo AM, Cherkasova VA, Gorelova TV, Schuppe NG, Ilyin YV. The steps of reverse transcription of drosophila mobile dispersed genetic elements and U3-R-U5 structure of their LTRs. Cell. 1986;44(4):555–63. [DOI] [PubMed] [Google Scholar]

- 18.Zhang L, Yan L, Jiang J, Wang Y, Jiang Y, Yan T, et al. The structure and retrotransposition mechanism of LTR-retrotransposons in the asexual yeast Candida albicans. Virulence. 2014;5(6):655–64. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Du J, Tian Z, Hans CS, Laten HM, Cannon SB, Jackson SA, et al. Evolutionary conservation, diversity and specificity of LTR-retrotransposons in flowering plants: insights from genome-wide analysis and multi-specific comparison. Plant J. 2010;63(4):584–98. [DOI] [PubMed] [Google Scholar]

- 20.Sapoval N, Aghazadeh A, Nute MG, Antunes DA, Balaji A, Baraniuk R, et al. Current progress and open challenges for applying deep learning across the biosciences. Nat Commun. 2022;13(1):1728. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, et al. Attention Is All You Need. In: Guyon I, Von Luxburg U, Bengio S, Wallach H, Fergus R, Vishwanathan S, et al., editors. Advances in Neural Information Processing Systems. vol. 30. Red Hook, NY: Curran Associates, Inc.; 2017. p. 6000–10.

- 22.Ji Y, Zhou Z, Liu H, Davuluri RV. DNABERT: pre-trained Bidirectional Encoder Representations from Transformers model for DNA-language in genome. Bioinformatics. 2021;37(15):2112–20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Jumper J, Evans R, Pritzel A, et al. Highly accurate protein structure prediction with AlphaFold. Nature. 2021;596(7873):583–9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Chen Y, Qi Y, Wu Y, Zhang F, Liao X, Shang X. BERTE: High-precision hierarchical classification of transposable elements by a transfer learning method with BERT pre-trained model and convolutional neural network. bioRxiv. 2024. 10.1101/2024.01.28.577612.

- 25.Kotov A, Zinovyev A, Monsoro-Burq A. scEvoNet: a gradient boosting-based method for prediction of cell state evolution. BMC Bioinformatics. 2023;24(1):83. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Messad F, Louveau I, Koffi B, Gilbert H, Gondret F. Investigation of muscle transcriptomes using gradient boosting machine learning identifies molecular predictors of feed efficiency in growing pigs. BMC Genomics. 2019;20(1):659. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Gunasekaran H, Ramalakshmi K, Rex Macedo Arokiaraj A, Deepa Kanmani S, Venkatesan C, Suresh Gnana Dhas C. Analysis of DNA Sequence Classification Using CNN and Hybrid Models. Comput Math Methods Med. 2021;1835056. 10.1155/2021/1835056. [DOI] [PMC free article] [PubMed]

- 28.Liang S, Zhu B, Zhang Y, Cheng S, Jin J. A Double Channel CNN-LSTM Model for Text Classification. In: IEEE 22nd International Conference on High Performance Computing and Communications; IEEE 18th International Conference on Smart City; IEEE 6th International Conference on Data Science and Systems (HPCC/SmartCity/DSS). Washington: IEEE Computer Society; 2020. pp. 1316–21.

- 29.Hochreiter S, Schmidhuber J. Long Short-Term Memory. Neural Comput. 1997;9(8):1735–80. [DOI] [PubMed] [Google Scholar]

- 30.Devlin J, Chang MW, Lee K, Toutanova K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv. 2018. arXiv:1810.04805. 10.48550/arXiv.1810.04805.

- 31.Koo PK, Eddy SR. Representation learning of genomic sequence motifs with convolutional neural networks. PLoS Comput Biol. 2019;15(12):e1007560. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Lundberg S, Lee SI. A Unified Approach to Interpreting Model Predictions. Adv Neural Inf Process Syst. 2017;30:4765–74. [Google Scholar]

- 33.Ribeiro MT, Singh S, Guestrin C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. New York, NY,: Association for Computing Machinery; 2016. pp. 1135–44.

- 34.Shrikumar A, Greenside P, Kundaje A. Learning important features through propagating activation differences. In: Proceedings of the 34th International Conference on Machine Learning. vol. 70. Sydney: ML Research Press; 2017. pp. 3145–53.

- 35.An W, Guo Y, Bian Y, Ma H, Yang J, Li C, et al. MoDNA: motif-oriented pre-training for DNA language model. In: Proceedings of the 13th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics. BCB ’22. New York: Association for Computing Machinery; 2022.

- 36.Danilevicz MF, Gill M, Fernandez CGT, Petereit J, Upadhyaya SR, Batley J, et al. DNABERT-based explainable lncRNA identification in plant genome assemblies. Comput Struct Biotechnol J. 2023;21:5676–85. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Zhou SS, Yan XM, Zhang KF, Liu H, Xu J, Nie S, et al. A comprehensive annotation dataset of intact LTR retrotransposons of 300 plant genomes. Sci Data. 2021;2021(8):174. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Li W, Godzik A. CD-hit: a fast program for clustering and comparing large sets of protein or nucleotide sequences. Bioinformatics. 2006;22(13):1658–9. [DOI] [PubMed] [Google Scholar]

- 39.Youens-Clark K. Mastering python for bioinformatics: How to write flexible, documented, tested python code for research computing. Sebastopol, CA: O’Reilly Media; 2021.

- 40.Vitte C, Panaud O. Formation of Solo-LTRs Through Unequal Homologous Recombination Counterbalances Amplifications of LTR Retrotransposons in Rice (Oryza sativa L.). Mol Biol Evol. 2003;20(4):528–40. [DOI] [PubMed] [Google Scholar]

- 41.Castro-Mondragon JA, Riudavets-Puig R, Rauluseviciute I, Berhanu LR, Turchi L, Blanc-Mathieu R, et al. JASPAR 2022: the 9th release of the open-access database of transcription factor binding profiles. Nucleic Acids Res. 2022;50(D1):D165–73. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Cock PJA, Antao T, Chang JT, Chapman BA, Cox CJ, Dalke A, et al. Biopython: freely available Python tools for computational molecular biology and bioinformatics. Bioinformatics. 2009;25(11):1422–3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Manning CD, Raghavan P, Schütze H. In: Introduction to Information Retrieval. Cambridge: Cambridge University Press; 2008. pp. 118–20.

- 44.Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: Machine learning in Python. J Mach Learn Res. 2011;12(Oct):2825–30. [Google Scholar]

- 45.Chollet F, et al. Keras. https://github.com/fchollet/keras. Accessed 15 Feb 2024.

- 46.Wolf T, Debut L, Sanh V, Chaumond J, Delangue C, Moi A, et al. HuggingFace’s Transformers: State-of-the-art Natural Language Processing. arXiv. 2020;1910.03771. 10.48550/arXiv.1910.03771.

- 47.Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In: Advances in Neural Information Processing Systems 32. Red Hook, NY: Curran Associates, Inc.; 2019. pp. 8024–35.

- 48.Santafe G, Inza I, Lozano JA. Dealing with the evaluation of supervised classification algorithms. Artif Intell Rev. 2015;44(4):467–508. [Google Scholar]

- 49.Rainio O, Teuho J, Klén R. Evaluation metrics and statistical tests for machine learning. Sci Rep. 2024;14(1):6086. [DOI] [PMC free article] [PubMed]

- 50.Virtanen P, Gommers R, Oliphant TE, Haberland M, Reddy T, Cournapeau D, et al. SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat Methods. 2020;17(3):261–72. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Pizarro J, Guerrero E, Galindo PL. Multiple comparison procedures applied to model selection. Neurocomputing. 2002;48(1–4):155–73. [Google Scholar]

- 52.Janez Demšar. Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res. 2006;7:1–30. [Google Scholar]

- 53.scikit-posthocs - PyPI. Python Software Foundation. https://pypi.org/project/scikit-posthocs/. Accessed 15 Feb 2024.

- 54.Covert I, Lundberg SM, Lee SI. Understanding Global Feature Contributions With Additive Importance Measures. Adv Neural Inf Process Syst. 2020;33:17212–23. [Google Scholar]

- 55.Lundberg S, Erion G, Chen H, DeGrave A, Prutkin J, Nair B, et al. From local explanations to global understanding with explainable AI for trees. Nat Mach Intell. 2020;2(1):2522–5839. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Shahmuradov IA, Umarov R, Solovyev V. TSSPlant: a new tool for prediction of plant Pol II promoters. Nucleic Acids Res. 2017;45(8):e65. [DOI] [PMC free article] [PubMed]

- 57.Ramírez F, Ryan DP, Grüning B, Bhardwaj V, Kilpert F, Richter AS, et al. deepTools2: a next generation web server for deep-sequencing data analysis. Nucleic Acids Res. 2016;44(W1):W160–5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Bailey TL, Johnson J, Grant CE, Noble WS. The MEME Suite. Nucleic Acids Res. 2015;43(W1):W39–49. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Bailey TL, Elkan C. Fitting a mixture model by expectation maximization to discover motifs in biopolymers. In: Proceedings of the Second International Conference on Intelligent Systems for Molecular Biology. Washington: AAAI Press; 1994. pp. 28–36. [PubMed]

- 60.Kolberg L, Raudvere U, Kuzmin I, Adler P, Vilo J, Peterson H. g:Profiler-interoperable web service for functional enrichment analysis and gene identifier mapping (2023 update). Nucleic Acids Res. 2023;51(W1):W207–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Schietgat L, Vens C, Cerri R, Fischer CN, Costa E, Ramon J, et al. A machine learning based framework to identify and classify long terminal repeat retrotransposons. PLoS Comput Biol. 2018;14(4):e1006097. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Orozco-Arias S, Candamil-Cortés MS, Jaimes PA, Piña JS, Tabares-Soto R, Guyot R, et al. K-mer-based machine learning method to classify LTR-retrotransposons in plant genomes. PeerJ. 2021;9:e11456. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Abrusán G, Grundmann N, Makałowski W. TEclass - a tool for automated classification of unknown eukaryotic transposable elements. Bioinformatics. 2009;25(10):1329–30. [DOI] [PubMed] [Google Scholar]

- 64.Nakano FK, Pinto WJ, Pappa GL, Cerri R. Top-down strategies for hierarchical classification of transposable elements with neural networks. In: International Joint Conference on Neural Networks (RIJCNN). Washington: IEEE; 2017. pp. 2539–46.

- 65.da Cruz MHP, Domingues DS, Saito PTM, Paschoal AR, Bugatti PH. TERL: classification of transposable elements by convolutional neural networks. Brief Bioinform. 2021;22(3):bbaa185. [DOI] [PubMed]

- 66.Yan H, Bombarely A, Li S. DeepTE: a computational method for de novo classification of transposons with convolutional neural network. Bioinformatics. 2020;36(15):4269–75. [DOI] [PubMed] [Google Scholar]

- 67.Rocheta M, Carvalho L, Viegas W, Morais-Cecilio L. Corky, a Gypsy-like retrotransposon is differentially transcribed in Quercus suber tissues. BMC Res Notes. 2012;5(1):432. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Yuan HY, Kagale S, Ferrie AMR. Multifaceted roles of transcription factors during plant embryogenesis. Front Plant Sci. 2024;14. 10.3389/fpls.2023.1322728. [DOI] [PMC free article] [PubMed]

- 69.Hong JC. General Aspects of Plant Transcription Factor Families. In: Gonzalez DH, editor. Plant Transcription Factors. Boston: Academic Press; 2016. p. 35–56. [Google Scholar]

- 70.Boer DR, Freire-Rios A, van den Berg WM, Saaki T, Manfield I, Kepinski S, et al. Structural Basis for DNA Binding Specificity by the Auxin-Dependent ARF Transcription Factors. Cell. 2014;156(3):577–89. [DOI] [PubMed] [Google Scholar]

- 71.Strader L, Weijers D, Wagner D. Plant transcription factors - being in the right place with the right company. Curr Opin Plant Biol. 2022;65:102136. [DOI] [PMC free article] [PubMed] [Google Scholar]