Abstract

The X-ray flux from X-ray free-electron lasers and storage rings enables new spatiotemporal opportunities for studying in-situ and operando dynamics, even with single pulses. X-ray multi-projection imaging is a technique that provides volumetric information using single pulses while avoiding the centrifugal forces induced by conventional time-resolved 3D methods like time-resolved tomography, and can acquire 3D movies (4D) at least three orders of magnitude faster than existing techniques. However, reconstructing 4D information from highly sparse projections remains a challenge for current algorithms. Here we present 4D-ONIX, a deep-learning-based approach that reconstructs 3D movies from an extremely limited number of projections. It combines the computational physical model of X-ray interaction with matter and state-of-the-art deep learning methods. We demonstrate its ability to reconstruct high-quality 4D by generalizing over multiple experiments with only two to three projections per timestamp on simulations of water droplet collisions and experimental data of additive manufacturing. Our results demonstrate 4D-ONIX as an enabling tool for 4D analysis, offering high-quality image reconstruction for fast dynamics three orders of magnitude faster than tomography.

Subject terms: Imaging techniques, Imaging and sensing, Computational methods, Fluids

Yuhe Zhang and colleagues create a deep-learning-based algorithm for reconstructing 4D X-ray images from sparse projections. They aim to enable time-resolved 3D CT at X-ray beamlines without the need to rapidly rotate the object.

Introduction

X-ray tomography is a non-destructive 3D imaging technique that enables the study of the internal structure and composition of opaque materials1–3. It has been widely applied in various research areas, such as materials science4,5, medical imaging6, biology7, geology8, fluid dynamics9,10, and industrial diagnostics11,12. By using X-rays to scan a sample from multiple angles, tomography generates a comprehensive 3D image of the object. Recent advances at modern Synchrotron Radiation (SR) facilities and X-ray Free-Electron Lasers (XFELs) have opened the way for time-resolved tomography experiments, which make it possible to explore dynamics in 4D, i.e., in real time and in 3D space13. Time-resolved tomography experiments have demonstrated the capability to capture dynamics with sub-millisecond temporal resolution and micrometer spatial resolution14,15. However, the imaging process of tomography introduces centrifugal forces to the sample during acquisition that can potentially alter or even damage the sample and the dynamics studied. Achieving 1 ms temporal resolution would necessitate rotating the sample 500 times per second, generating a centrifugal acceleration hundreds of times larger than gravitational acceleration. This rapid rotation also presents a challenge in developing sample environments that can withstand such speeds. Consequently, the rotation needed to collect a full set of projections often restricts the types of samples that can be used and limits the temporal resolution of X-ray tomography experiments. Additionally, tomography is not readily adaptable to single-shot imaging approaches. Various techniques have been developed to overcome this limitation, aiming to achieve higher temporal resolution while preserving high-quality 3D images of the sample. X-ray Multi-Projection Imaging (XMPI)16–20 is one such time-resolved imaging technique. 
Unlike conventional scanning-based techniques, XMPI records volumetric information without scanning by generating different beamlets that illuminate the sample simultaneously from different angles. By combining the concept of XMPI with the unique capabilities of the fourth-generation SR sources21,22 and XFELs23, one can record 3D information of dynamical processes from kHz24,25 up to MHz rate26, exploiting the possibility of imaging 3D using single pulses of XFELs. This opens up possibilities for studying the high-speed dynamics of various materials. However, unlike tomography which records hundreds of projections of a sample, XMPI records no more than eight projections due to practical constraints16,18,25, and a reconstruction algorithm is required to recover 4D (3D + time) information from the extremely sparse data collected from XMPI.
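The rotation-rate argument above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming a point at a hypothetical distance from the rotation axis (the radii are illustrative and not taken from the experiments) and using the standard relation a = ω²r.

```python
import math

def centripetal_g(rotations_per_second: float, radius_m: float) -> float:
    """Centripetal acceleration a = omega^2 * r, expressed in units of g."""
    omega = 2.0 * math.pi * rotations_per_second  # angular speed in rad/s
    return omega ** 2 * radius_m / 9.80665

# One tomogram spans 180 degrees, so 1 ms per tomogram means half a turn per
# millisecond, i.e. 500 full rotations per second.
rotations_per_second = 0.5 / 1e-3

# Hypothetical distances from the rotation axis (assumptions for illustration).
for radius_mm in (0.1, 0.5, 1.0):
    g = centripetal_g(rotations_per_second, radius_mm * 1e-3)
    print(f"r = {radius_mm} mm -> about {g:.0f} g")
```

For these assumed radii the result ranges from roughly one hundred to roughly one thousand times g, which is consistent with the order of magnitude quoted in the text.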

Traditional methods are unlikely to solve this problem because it is highly ill-posed27. Classic approaches typically rely on matching low-level primitives or features from different projections28–31. However, these methods require easily identifiable features or prior knowledge and assumptions about the sample, which limits their applicability. In addition, their performance degrades when applied to complex objects. Recent advancements in Deep Learning (DL) approaches provide a potential solution to this problem. DL algorithms, such as Convolutional Neural Networks (CNNs)32 and Generative Adversarial Networks (GANs)33, can be optimized to learn the underlying structure of the sample, generalize over similar samples, and produce high-quality reconstructions from sparse inputs34–36. Specifically, approaches based on Neural Radiance Fields37 have recently shown promise in optical and X-ray imaging for reconstructing high-resolution 3D/4D structures from sparse views38–44. Instead of relying on voxels, these methods learn the shape of an object as an implicit function of the 3D spatial coordinates, offering a potential solution to the longstanding memory issues associated with 3D reconstructions. The recently developed ONIX algorithm demonstrated 3D reconstruction from eight views for both experimental and simulated tomographic data41. However, there is a need for a 4D reconstruction algorithm to investigate ultrafast dynamical processes using highly sparse X-ray projections acquired through XMPI.

Here, we report 4D-ONIX, a self-supervised DL model that learns to reconstruct high-quality temporal and spatial information of the sample from the sparse projections collected with XMPI. It does not require 3D ground truth or prior dynamic description of the sample at any stage—neither during the training nor during its deployment. Once trained, it can reconstruct a 3D movie showing the refractive index of the sample as a function of time from only the recorded projections. The capability of 4D-ONIX is achieved by (i) incorporating the physics of X-ray propagation and interaction into the model, (ii) having a continuous representation of the sample that describes the refractive index as a function of position and time, (iii) learning the latent features of the sample by generalizing over all timestamps, and (iv) applying adversarial learning to enforce consistency between measured and predicted projections. We demonstrate our approach to the dynamical processes of binary water droplet collisions45–47 and additive manufacturing48. First, we validate the performance of our approach using simulated droplet collision datasets modeled using the Navier–Stokes Cahn–Hilliard equations49,50, retrieving volumetric information from two projections of simulated XMPI experiments. We then validate the approach on experimental data of additive manufacturing, reconstructing the melt pool dynamics of magnetite-modified alumina from three projections. This approach has also been applied to experimental XMPI data collected at the European Synchrotron Radiation Facility (ESRF) and European X-Ray Free-Electron Laser Facility (European XFEL) with kHz up to MHz acquisition rates, with results reported in other studies25,26. We envision that 4D-ONIX will be pivotal for the implementation and applications of XMPI, and it will enable new spatiotemporal resolutions for time-resolved 3D X-ray imaging through acquisition approaches based on sparse projections. 
The 4D reconstructions offered by 4D-ONIX will provide valuable observations for in-situ and operando testing for a plethora of systems, e.g., the characteristics and dynamic studies in fluid dynamics and material science, which are important for various applications such as the study of atmospheric aerosols51,52, advancements in fuel cell technologies53 and improvements in additive manufacturing54–57. It is worth mentioning that our approach can also be extended to single-shot phase-contrast imaging58–60 and coherent diffraction imaging61,62 experiments where the propagation model is explicitly known. Furthermore, the availability of 4D reconstruction from 4D-ONIX opens up the possibility of directly constraining the dynamics in the reconstruction process, e.g., through the use of physics-informed neural networks63.

Results

Concept of XMPI and overview of the approach

The concept of XMPI is depicted in Fig. 1a. Unlike tomography measurements, which rotate the sample through 180° or 360° and typically record hundreds to thousands of projections along the way, XMPI measurements do not require rotating the sample. XMPI relies on high-brilliance X-ray sources and a group of beam splitters to generate multiple beams that illuminate the sample simultaneously from different angles, and on a set of kHz/MHz detectors to record the different sample projections. In this way, one can record volumetric information on the fast dynamics of the sample, ultimately limited by the speed of the detector and the flux of the X-ray source. The inset marked by the blue box of Fig. 1a shows the goal of XMPI, which is to obtain a continuous 3D movie of the sample from the recorded projections, exploiting the excellent temporal characteristics provided by XFELs or fourth-generation SR sources. This opens up possibilities for observing sample dynamics in 3D at kHz up to MHz rates. As shown in Fig. 1a, the data used here comes from applying XMPI to water droplet collisions using two split beamlets.

Fig. 1. Demonstration of X-ray Multi-Projection Imaging (XMPI) experiment20,26 and the reconstruction approach.

a Conceptual illustration of the XMPI setup. The dashed blue box on the right shows the goal of the reconstruction approach. b Overview of the 4D-ONIX approach. The recorded projections first pass through a convolutional neural network encoder (orange), which converts the 2D images into stacks of downscaled feature maps (blue). These extracted features are then fed into the Index of Refraction (IoR) generator, which reconstructs the 3D representation at any spatial-temporal point. A physics-based forward propagation model is used to predict projections from different angles in the plane of the incoming X-rays. The predicted projections are optimized by the discriminator, which minimizes the differences between the real and generated projections.

We designed a self-supervised DL algorithm, 4D-ONIX, to reconstruct temporal and spatial information from XMPI. It combines neural implicit representation37 and generative adversarial mechanism33 with the physics of X-ray interaction with matter, resulting in a mapping between the spatial-temporal coordinates and the distribution of the refractive index of the sample. By enforcing consistency between the recorded projections and the estimated projections generated by the model, the model learns by itself the 3D volumetric information of the sample at each measured time point from only the given projections without needing real 3D information about the sample. Once trained, it provides a 3D movie showing the structure and dynamics of the sample.

Self-supervised 4D reconstruction approach

The architecture of the self-supervised 4D reconstruction model (4D-ONIX) is depicted in Fig. 1b. The goal of the model is to learn the Index of Refraction (IoR) of the sample at any spatial-temporal point. The 4D-ONIX model is based on three neural networks: an encoder, a 4D IoR generator, and a discriminator. The encoder and the discriminator are built on CNNs, whereas the IoR generator consists of fully connected Multilayer Perceptrons (MLPs). The IoR generator learns the local features of each object, while the encoder and the discriminator capture both the local features and global features across all timestamps and experiments.

The recorded projections are first passed through the encoder, which converts the 2D images into stacks of downscaled feature maps. For each spatial-temporal point, we apply an affine coordinate transformation to transfer from the global coordinate system to the local coordinate system of each camera. This transformation allows us to retrieve the corresponding features extracted by the encoder.
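The coordinate transformation described above can be sketched as follows. This is an illustrative example only, assuming parallel views that lie in the x–y plane (so the rotation is about z) and normalized coordinates in [-1, 1]; the function names `world_to_camera` and `camera_to_pixel` are hypothetical and are not taken from the 4D-ONIX code.

```python
import numpy as np

def world_to_camera(points, phi_deg):
    """Rotate global (x, y, z) points into the frame of a view at angle phi.

    Assumes the views lie in the x-y plane, so the rotation is about z,
    and the beam travels along the local y axis.
    """
    phi = np.deg2rad(phi_deg)
    c, s = np.cos(phi), np.sin(phi)
    # Rows are the local axes expressed in global coordinates.
    rot = np.array([[c, s, 0.0],
                    [-s, c, 0.0],
                    [0.0, 0.0, 1.0]])
    return points @ rot.T

def camera_to_pixel(local_points, n_cols, n_rows):
    """Map local (x, z) in [-1, 1] to fractional (column, row) pixel indices."""
    u = (local_points[:, 0] + 1.0) * 0.5 * (n_cols - 1)
    v = (local_points[:, 2] + 1.0) * 0.5 * (n_rows - 1)
    return np.stack([u, v], axis=1)

# Two example query points in the global frame (arbitrary values).
points = np.array([[0.5, 0.0, 0.0], [0.0, 0.5, 0.25]])
for phi in (0.0, 23.8):
    local = world_to_camera(points, phi)
    print(phi, camera_to_pixel(local, n_cols=128, n_rows=128))
```

The fractional pixel indices would then be used to look up (for example by interpolation) the encoder features that correspond to each query point in each view.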

The IoR generator receives extracted feature maps from the encoder and predicts the value of the IoR at each spatial point. In this way, we can predict the value of the IoR of the sample at any spatial-temporal point. Please note that the IoR generator relies on neural implicit representation, which learns a continuous function of the refractive index. It differs from many common 3D reconstruction approaches that learn the value of each isolated voxel. We apply a physics-based forward propagation model to predict projections from different angles in the plane of incoming X-rays. The forward model is based on the projection approximation (weak scattering)64, where secondary scattering caused by the X-ray photons is ignored. Note that this can also be extended to multiple scattering conditions by using multi-slice methods64. The discriminator constrains the predictions by differentiating them from the real ones and minimizing the differences between them. The networks are trained by an adversarial loss function. We use the same encoder, generator, and discriminator for the training of the sequence of all 3D movies. Therefore, the networks are trained by all of the recorded projections so that these networks, especially the convolutional layers in the encoder and the discriminator, learn generalized features across the whole sequence. Further details of the network implementation and the loss function are included in the 4D-ONIX algorithm and Network and training details sections.
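To make the projection approximation concrete, the sketch below integrates the absorption part of the refractive index along straight rays and converts the result to a transmitted intensity, I = exp(-2k∫β ds). It is a simplified stand-in, not the 4D-ONIX forward model: it samples a voxel grid with nearest-neighbour lookup rather than querying a continuous neural representation, it ignores the phase term, and the wavelength, voxel size, and β values are hypothetical.

```python
import numpy as np

def project(volume, phi_deg):
    """Line integrals of volume[x, y, z] along the beam for a view at phi.

    The volume must be square in x and y. The beam direction lies in the
    x-y plane. Rays are sampled with nearest-neighbour lookup, which is
    crude but keeps the sketch short. Returns an image indexed
    [detector column, z] in voxel units.
    """
    n = volume.shape[0]
    phi = np.deg2rad(phi_deg)
    centre = (n - 1) / 2.0
    u = np.arange(n) - centre          # detector column coordinate
    s = np.arange(n) - centre          # position along the beam
    uu, ss = np.meshgrid(u, s, indexing="ij")
    # Global coordinates of every sample point on every ray.
    x = uu * np.cos(phi) - ss * np.sin(phi) + centre
    y = uu * np.sin(phi) + ss * np.cos(phi) + centre
    xi, yi = np.rint(x).astype(int), np.rint(y).astype(int)
    inside = (xi >= 0) & (xi < n) & (yi >= 0) & (yi < n)
    samples = volume[np.clip(xi, 0, n - 1), np.clip(yi, 0, n - 1), :]
    samples = samples * inside[..., None]   # zero out samples outside the grid
    return samples.sum(axis=1)              # integrate along the beam

def intensity(beta, phi_deg, wavelength, voxel_size):
    """Projection approximation for absorption: I = exp(-2 k * integral(beta))."""
    k = 2.0 * np.pi / wavelength
    return np.exp(-2.0 * k * voxel_size * project(beta, phi_deg))

# Hypothetical numbers chosen only to exercise the functions.
n = 32
grid = np.indices((n, n, n)) - (n - 1) / 2.0
beta = 1e-9 * (np.sqrt((grid ** 2).sum(axis=0)) < 10)   # absorbing sphere
for phi in (0.0, 23.8):
    image = intensity(beta, phi, wavelength=1e-10, voxel_size=1e-6)
    print(phi, image.shape, float(image.min()))
```

In a trainable setting the same integration would be written in a differentiable framework so that the mismatch between predicted and recorded projections can be backpropagated to the generator.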

4D-ONIX demonstration on simulated water droplet collisions

We assess the performance of 4D-ONIX using simulated datasets of droplet collisions modeled with the Navier–Stokes Cahn–Hilliard equations. We refer the readers to the Simulation of water droplet collision section for details about the droplet collision simulation. The simulations provided multiple sequences of 3D objects, each sequence illustrating the collision process between two water droplets. Two projections were generated from each 3D object to mimic the experimental conditions of the XMPI experiment performed at the European XFEL26. For brevity, we refer to each collision sequence as an XMPI experiment or simply an experiment. The geometry of the projection generation in the simulated XMPI experiment is illustrated in Fig. 2a. We evaluate 4D-ONIX using the simulated datasets under two scenarios, as visualized in Fig. 2b.

Reproducible processes: this scenario involves multiple identical experiments of the same dynamical process. As shown in Fig. 2b, the samples may exhibit a variety of orientations across the experiments. This can arise from various factors such as random sample orientations, samples arriving from different directions, or manual rotation of the sample stage. For instance, assuming we set the projection from detector 1 of the first experiment as 0° and denote the relative angle between the two projections as Δφ, in the first experiment the dynamical process is measured at φ1 = 0° and φ2 = Δφ. In the second experiment, the sample orientation is shifted by ϕ = 30°, and the two projections are measured at φ1 = 30° and φ2 = 30° + Δφ. The third experiment can be measured at yet another pair of angles separated by Δφ, and so on. This allows for obtaining volumetric information on the collision process from different angles of the sample without rotation.

Quasi-reproducible processes: in many cases, measuring perfectly reproducible processes is challenging or impractical. It is more realistic to measure several experiments capturing similar dynamical processes within experimental tolerances, with each process being measured only once, i.e., resulting in only one 4D sequence available for each process. As illustrated in Fig. 2b, similar to the first scenario, the dynamical processes are measured from different orientations. However, the dynamical processes themselves are not identical. For example, in the first experiment, both droplets have the same size, whereas in the second experiment, one droplet is larger than the other. In the third experiment, the droplets may be moving faster than in the first experiment, and so forth.

Fig. 2. Demonstration of the simulated X-ray Multi-Projection Imaging (XMPI) data.

a Geometry of the simulated XMPI projections. Projection pairs are generated on the x–y plane with a fixed angle between them. b Comparison of the two training scenarios. In the reproducible processes scenario, the processes are identical, while in the quasi-reproducible processes scenario, dynamical processes can differ. For example, the size of droplets may vary among experiments, as shown here.

Two training datasets were generated for the two scenarios. The first dataset was based on a single simulation, mimicking 16 experiments of a reproducible process. Each experiment contains a pair of projections with random φ angles and fixed Δφ for each timestamp. The second dataset was simulated for quasi-reproducible processes. It was based on 16 simulations, where a single projection pair with random orientation of the sample was selected from each simulation to form a training dataset. All 16 simulations modeled the collision of binary droplets, with a 10% variance in collision velocities and a 10% variance in droplet size based on the tolerance of the droplet collision experiment conducted at the European XFEL26. For both datasets, the relative angle between the projection pairs was set to 23.8° to match the experimental conditions of the XMPI setup at the European XFEL26. Each simulation contained 75 timestamps, so each dataset contained 1200 timestamps in total.
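The bookkeeping of the training datasets can be illustrated with a short sketch. The values of Δφ, the number of experiments, and the number of timestamps come from the text; the uniform distribution of sample orientations and the random seed are assumptions made only for this example.

```python
import numpy as np

DELTA_PHI = 23.8      # relative angle between the two beamlets, from the text
N_EXPERIMENTS = 16
N_TIMESTAMPS = 75

rng = np.random.default_rng(seed=0)   # seed chosen only for repeatability
# One random sample orientation per experiment (assumed uniform over 360 degrees).
orientations = rng.uniform(0.0, 360.0, size=N_EXPERIMENTS)
# Each experiment sees the sample at phi and phi + delta_phi for every timestamp.
angle_pairs = np.stack([orientations, orientations + DELTA_PHI], axis=1) % 360.0

total_timestamps = N_EXPERIMENTS * N_TIMESTAMPS
total_projections = 2 * total_timestamps
print(angle_pairs[:3])
print(total_timestamps, total_projections)
```

With two projections per timestamp, the 1200 timestamps of each dataset correspond to 2400 projection images.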

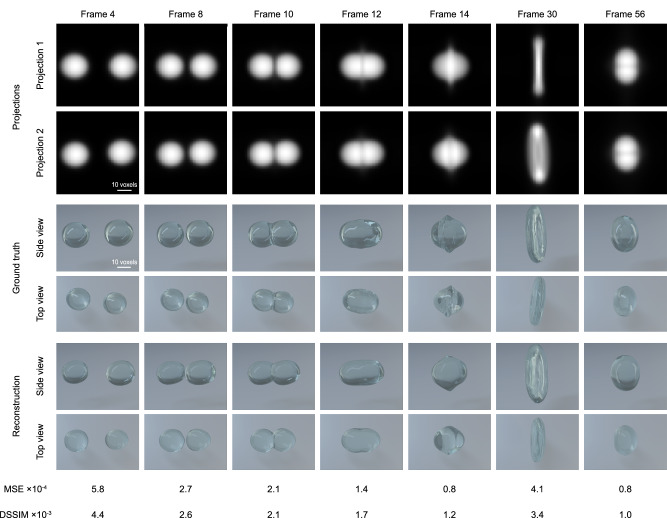

We trained 4D-ONIX with different numbers of experiments (1, 2, 4, 8, and 16) for both scenarios. The reconstruction results and their quality improvement as a function of the number of experiments are presented in Supplementary Note 1. Here, we only present the best results, obtained by training with 16 experiments. First, we present the results of training with reproducible processes. Figure 3 shows examples of the 4D-ONIX performance trained on reproducible processes. It shows the two projections, the 3D ground truth, and the reconstruction rendered from the side and top views. The side view corresponds to the x-axis, while the top view aligns with the z-axis, which is perpendicular to the acquisition plane. Seven timestamps are shown, demonstrating the crucial stages in the collision process for one of the collision experiments. In this collision experiment, two identical water droplets were accelerated and collided center-to-center at the same speed. Complete reconstruction movies are available in Supplementary Movie 1 and Supplementary Movie 2. Please note that the 3D ground truth shown here was only used for comparison and was never shown to the network at any stage. The reconstructions were quantified by Mean Squared Error (MSE) and Dissimilarity Structure Similarity Index Metric (DSSIM)65, and their values for selected timestamps are shown in Fig. 3. Both metrics measure the difference between the 4D-ONIX reconstructions and the ground truth. Therefore, a value closer to zero indicates a better reconstruction. Furthermore, we analyzed the evolution of errors throughout the collision process for each 3D timestamp, with the results displayed in Supplementary Fig. 1. In addition to 3D metrics, we also computed metrics in the 4D domain. We calculated the 4D MSE and 4D DSSIM for all 75 timestamps of the demonstrating experiment, resulting in MSE = 2.6 × 10−4 and DSSIM = 2.3 × 10−3. 
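As an illustration of the two error metrics, the sketch below computes the MSE and a simplified DSSIM on a 4D array. It assumes the common definition DSSIM = (1 − SSIM)/2 and evaluates SSIM from whole-array statistics instead of local windows, so it will not reproduce the reported values; the random test data are placeholders.

```python
import numpy as np

def mse(recon, truth):
    """Mean squared error over all elements."""
    return float(np.mean((recon - truth) ** 2))

def dssim_global(recon, truth, data_range=1.0):
    """DSSIM = (1 - SSIM) / 2 with SSIM from whole-array statistics."""
    c1 = (0.01 * data_range) ** 2   # usual SSIM stabilising constants
    c2 = (0.03 * data_range) ** 2
    mu_r, mu_t = recon.mean(), truth.mean()
    var_r, var_t = recon.var(), truth.var()
    cov = np.mean((recon - mu_r) * (truth - mu_t))
    ssim = ((2 * mu_r * mu_t + c1) * (2 * cov + c2)) / (
        (mu_r ** 2 + mu_t ** 2 + c1) * (var_r + var_t + c2))
    return float((1.0 - ssim) / 2.0)

rng = np.random.default_rng(0)
truth = rng.random((5, 16, 16, 16))            # stand-in 4D data: (t, x, y, z)
recon = truth + 0.05 * rng.standard_normal(truth.shape)
print(mse(recon, truth), dssim_global(recon, truth))
```

Both functions return zero for a perfect reconstruction, which matches the statement that values closer to zero indicate better agreement.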
Apart from evaluating data correlation in the object space, we also evaluated the performance of the reconstructions in the frequency space using Fourier Shell Correlation (FSC) and Fourier Ring Correlation (FRC)66,67. FSC and FRC calculate the normalized cross-correlation between the reconstructions and the ground truth in frequency space over shells (3D) and rings (2D), respectively. The resolution of the reconstructions can be retrieved from the correlation curves. Here, we used the half-bit threshold criterion for resolution determination. The retrieved 3D spatial resolution was 4 ± 1 voxels, with the voxel side size being equivalent to the pixel size of the input projections. The 2D resolution was separately calculated for the training views and unseen views. For the unseen views, resolution was 6.0 ± 1.6 pixels, while for the training views, it was 4.7 ± 1.6 pixels. Please refer to Supplementary Fig. 2 for the plots of FSC and FRC for example timestamps.
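The Fourier Shell Correlation described above can be sketched in a few lines. This is a minimal illustration assuming equally sized volumes and integer-radius shells; it does not implement the half-bit threshold curve, and the random volumes are placeholders rather than droplet data.

```python
import numpy as np

def fourier_shell_correlation(vol_a, vol_b):
    """FSC between two equally sized volumes, one value per integer shell."""
    fa, fb = np.fft.fftn(vol_a), np.fft.fftn(vol_b)
    freqs = [np.fft.fftfreq(n) * n for n in vol_a.shape]   # integer frequencies
    grid = np.meshgrid(*freqs, indexing="ij")
    radius = np.rint(np.sqrt(sum(g ** 2 for g in grid))).astype(int)
    n_shells = min(vol_a.shape) // 2
    # Sum the cross-spectrum and the two power spectra over each shell.
    cross = np.bincount(radius.ravel(), weights=(fa * np.conj(fb)).real.ravel())
    power_a = np.bincount(radius.ravel(), weights=(np.abs(fa) ** 2).ravel())
    power_b = np.bincount(radius.ravel(), weights=(np.abs(fb) ** 2).ravel())
    fsc = cross / np.sqrt(power_a * power_b)
    return fsc[:n_shells]

rng = np.random.default_rng(0)
truth = rng.random((16, 16, 16))
recon = truth + 0.5 * rng.standard_normal(truth.shape)
print(fourier_shell_correlation(recon, truth))
```

The resolution estimate would then be read off as the spatial frequency at which this curve first drops below the chosen threshold curve. Applying the same function to 2D images gives the ring-based variant (FRC).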

Fig. 3. Demonstration of the 4D reconstruction for a reproducible process.

Seven timestamps are shown, illustrating different stages of the droplet collision process. The two generated projections are shown in rows 1–2, corresponding to 0° and 23.8°, respectively. The top view and side view of the 3D ground truth are shown in rows 3–4. The top and side views of the 4D-ONIX reconstructions are shown in rows 5–6. The bottom two rows show the Mean Squared Error (MSE) and Dissimilarity Structure Similarity Index Metric (DSSIM) between the 4D-ONIX reconstruction and the ground truth for each corresponding timestamp.

Second, we present the results obtained from training with the dataset of quasi-reproducible processes, comprising multiple simulations featuring similar but not identical samples. Figure 4 demonstrates the performance of 4D-ONIX trained on quasi-reproducible processes. Analogously to the reproducibility test, the figure presents the two projections, the 3D representation of the ground truth, and the reconstruction rendered from side and top views, providing a representation of the collision process between two water droplets. Complete reconstruction movies are available in Supplementary Movie 3 and Supplementary Movie 4. In this specific experiment, one of the water droplets (the one on the right) was 7% larger than the other, and the larger droplet also moved 7% faster than its counterpart. The 4D metrics for the reconstructions were calculated, resulting in MSE = 4.3 × 10−4 and DSSIM = 3.2 × 10−3. The distribution of errors throughout the collision process for each timestamp is visualized in Supplementary Fig. 1. We determined the 3D spatial resolution using FSC, resulting in a resolution of 6 ± 1 voxels. The 2D resolution was found to be 7 ± 2 pixels for the training views and 10 ± 4 pixels for the unseen views. Please refer to Supplementary Fig. 4 for additional figures of FSC and FRC.

Fig. 4. Demonstration of the 4D reconstruction for a quasi-reproducible process.

In this demonstrating experiment, the droplet on the right is bigger and moves faster than the one on the left. The seven timestamps shown here depict different stages of the droplet collision process. The two projections are shown in rows 1–2, corresponding to 0° and 23.8°, respectively. The top view and side view of the 3D ground truth are shown in rows 3–4. The top and side views of the 4D-ONIX reconstructions are shown in rows 5–6. The bottom two rows show the Mean Squared Error (MSE) and Dissimilarity Structure Similarity Index Metric (DSSIM) between the 4D-ONIX reconstruction and the ground truth for each corresponding timestamp.

4D-ONIX demonstration on experimental additive manufacturing data

We also validated the performance of our reconstruction method using experimental data. As current XMPI experiments have only been applied to unknown dynamical processes and achieve spatiotemporal resolutions beyond what existing methods can offer, ground truth data for evaluation is unavailable. Instead, we validated our approach using experimental data from time-resolved X-ray tomography, or tomoscopy15. The tomoscopy experiments were conducted at the TOMCAT beamline of the Swiss Light Source48, capturing the melt pool dynamics of magnetite-modified alumina. The projections were recorded every 0.9° during continuous sample rotation at 50 Hz, and in total 200 projections were recorded within 180° for each tomogram68.

We simulated a reproducible XMPI experiment for the remelting of magnetite-modified alumina using 60 tomograms that resulted in 60 time points. For each experiment and time point, we selected three projections spaced 27° apart. A total of 32 XMPI experiments were simulated with three projections each. For example, the projection angles for the first experiment were 0°, 27°, and 54°, while for another experiment they were 3.6°, 30.6°, and 57.6°, and so on. Please note that these experiments were treated as independent in the context of 4D-ONIX. As a result, 4D-ONIX only sees the three projections spaced 27° apart and does not have access to the relative angles between experiments as the latter is, in general, not experimentally possible. Each projection had an effective pixel size of 2.75 μm and a field of view of 912 × 180. We selected a 960 × 64 region where the remelting dynamics occur and resized this area to 128 × 64 for faster computation. For more details on the experimental geometry and data preparation, please refer to Supplementary Note 3.
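The selection of projection triplets from a tomogram can be sketched as follows. The angular step, number of projections, and 27° spacing come from the text; how the starting angles of the 32 experiments were chosen is not stated, so the two starting angles below are simply the examples given above, and `triplet_indices` is a hypothetical helper.

```python
import numpy as np

ANGLE_STEP = 0.9        # degrees between consecutive tomographic projections
N_PROJECTIONS = 200     # projections per 180-degree tomogram
SPACING = 27.0          # angular spacing of the simulated XMPI views

def triplet_indices(start_angle_deg):
    """Indices of the three projections starting at start_angle_deg."""
    angles = start_angle_deg + SPACING * np.arange(3)
    indices = np.rint(angles / ANGLE_STEP).astype(int)
    if indices.max() >= N_PROJECTIONS:
        raise ValueError("triplet extends beyond the 180-degree scan")
    return indices

print(triplet_indices(0.0))   # views at 0, 27, and 54 degrees
print(triplet_indices(3.6))   # views at 3.6, 30.6, and 57.6 degrees
```

Since 27° corresponds to 30 angular steps of 0.9°, each triplet picks every 30th projection of the tomogram starting from the chosen index.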

Figure 5 demonstrates the performance of 4D-ONIX on the experimental additive manufacturing data. It presents the projection triplets from an example experiment, along with the 3D representation of the ground truth and the reconstruction, rendered from both side and top views, and the performance metrics for the selected timestamps. As before, the top view was perpendicular to the projection plane and was never shown to the networks. Four time points for the remelting process of magnetite-modified alumina are shown. The dynamic remelting regions are highlighted with blue boxes in both the top and side views, while red circles indicate areas that pose challenges for the algorithm. A full 3D movie of the reconstruction and the ground truth can be found in Supplementary Movie 5. The 4D metrics for the reconstructions were calculated, resulting in MSE = 5.2 × 10−3 and DSSIM = 6.9 × 10−2. For the distribution of 3D MSE and DSSIM over time, as well as the performance of 4D-ONIX trained with varying numbers of experiments, please refer to Supplementary Table 3 and Supplementary Fig. 7. We also calculated the 3D spatial resolution using FSC, yielding a resolution of 2 voxels in the resized 128 × 64 spaces over all time points.

Fig. 5. Demonstration of 4D-ONIX on experimental data. Four timestamps are shown, illustrating different stages of the additive manufacturing process.

The three projections are shown in rows 1–3, corresponding to 0°, 27°, and 54°, respectively. Note that the scales of the horizontal and vertical directions differ, as we resized the image for faster computation and improved visualization. The top view and side view of the 3D ground truth are shown in rows 4–5. The top and side views of the 4D-ONIX reconstructions are shown in rows 6–7. The blue boxes mark the remelting regions in both the top and side views, while the red circles indicate an example area that poses challenges for the reconstruction algorithm. The bottom two rows show the Mean Squared Error (MSE) and Dissimilarity Structure Similarity Index Metric (DSSIM) between the 4D-ONIX reconstruction and the ground truth for each corresponding timestamp.

We also conducted additional evaluations by comparing 4D-ONIX to two well-established sparse-view tomographic reconstruction methods: SART69 (Simultaneous Algebraic Reconstruction Technique), a classic method for sparse-view tomographic reconstruction, and Noise2inverse70, a state-of-the-art DL-based approach. Since these methods lack the capacity to generalize across different experiments, we reconstruct using a hypothetical scenario where more projections are available for a single experiment. The results of 4D-ONIX trained with more projections of a single experiment are also compared, as denoted by 4D-ONIX*. The results are presented in Supplementary Figs. 8 and 9.

Discussion

We have demonstrated 4D-ONIX, a self-supervised DL model for 4D reconstruction, on simulated XMPI datasets of water droplet collisions and on experimental datasets of additive manufacturing.

For the simulated water droplet collision data, we evaluated the performance of 4D-ONIX under two scenarios: with reproducible processes and with quasi-reproducible processes. As depicted in Figs. 3 and 4, we reconstructed 3D movies of water droplet collision using 16 experiments. Each experiment contained only two 2D projections over 75 different timestamps. The reconstructions accurately captured the dynamics of the water droplet collision process. Even the top view, which was perpendicular to the projection plane, was effectively reconstructed. This view was experimentally unobservable and never shown to the networks. We evaluated the MSE, DSSIM, and the resolution of the reconstructions. The MSE was 2.6 × 10−4 for the training with reproducible processes and 4.3 × 10−4 for the training with quasi-reproducible processes. The DSSIM was 2.3 × 10−3 and 3.2 × 10−3 for the training with reproducible and quasi-reproducible processes, respectively. This suggests that our approach effectively reconstructs the collision processes of the water droplets. The ability to reconstruct 3D from two projections is largely attributed to 4D-ONIX’s capacity to generalize across all timestamps of various experiments using an encoder. Furthermore, 4D-ONIX learns not only the self-consistency of the two projections in 3D but also the shared features of the samples through the discriminator. Regarding the resolution of the 3D reconstructions, 4D-ONIX achieved a resolution of ~4 voxels for training with reproducible processes and 6 voxels for training with quasi-reproducible processes. Further investigation found that the reconstructions had a superior resolution for the trained views over unseen views for both scenarios. This is mainly due to the fact that the projections were only recorded in the plane formed by the two beamlets, providing very limited information from the top. 
As shown in Figs. 3, 4, and S1, the error distribution with time suggested that the timestamps prior to the collision process appear to be more difficult to reconstruct. This difficulty may come from the distortion of the droplets induced by the acceleration process within the simulation and the inadequacy of training data available for this particular stage. Comparing the training results between reproducible and quasi-reproducible processes, it is observed that the training shows better performance for reproducible processes than quasi-reproducible processes. Learning the features of a single experiment is a less complex task than generalizing across multiple experiments with different parameters within the experimental tolerance. Introducing multiple experiments may introduce additional variability and complexity, making it more challenging for the networks to capture and reconstruct essential features. Nevertheless, obtaining reproducible samples or processes is not always guaranteed, making quasi-reproducible processes a more common scenario and closer to experimental conditions. We also conducted comparisons by training 4D-ONIX with different numbers of experiments, and the corresponding metrics for the reconstructions are detailed in Tables S1 and S2. The results indicated that the performance was less favorable with 1–2 experiments, but improved greatly when more than 4 experiments were available. Reconstructions with fewer than four experiments may lead to a range of diverse and incorrect solutions for the sparse-view reconstruction problem. This variability, particularly noticeable in the top view (the unseen view), resulted in reconstructions that deviated from the ground truth, as illustrated in Figs. S3 and S5. Our results with water droplet collision demonstrated that 16 experiments were adequate for training an accurate model.

For the experimental additive manufacturing remelting data, we evaluated the MSE, DSSIM, and the resolution of the reconstructions determined by FSC. The MSE was 5.2 × 10⁻³, the DSSIM was 6.9 × 10⁻², and the spatial resolution was 2 voxels, indicating that our approach effectively captures the remelting processes. The dynamic remelting regions (marked by blue boxes in Fig. 5) were well reconstructed, as shown in the upper part of the side views. However, some static features were not fully reconstructed. A notable example is the bottom-right tip of the material, marked by red circles. Other imperfections include the lack of flatness in some areas of the reconstruction and an incidental shape generated at the top of the top view. These issues may arise due to the complexity and noise of the projections. The differences are particularly noticeable in the top view, which is a perspective never seen by the algorithm. Figure S8 presents a comparison of 4D-ONIX trained with different numbers of experiments, as well as results from SART, Noise2inverse, and 4D-ONIX* trained with different numbers of projections of a single experiment. This comparison demonstrates that 4D-ONIX, when trained with 32 experiments, achieves performance levels comparable to 4D-ONIX*, SART, and Noise2inverse when they are trained on single experiments with 24 projections. However, given that the current XMPI setup does not support acquiring 24 projections simultaneously, the ability of 4D-ONIX to generalize across multiple experiments with fewer projections is crucial. This generalization capability makes 4D-ONIX particularly suitable for practical experimental conditions, where obtaining a high number of projections may not be feasible. Additionally, the reconstruction quality differs across methods due to the noisy nature of the experimental projections. The SART and Noise2inverse reconstructions are particularly affected by strong artifacts, which can obscure finer details and reduce the accuracy of the reconstruction. By contrast, 4D-ONIX and 4D-ONIX* employ self-consistency constraints across spatial and temporal dimensions, effectively reducing artifacts and enhancing the clarity of reconstructed features. This enables 4D-ONIX and 4D-ONIX* to better mitigate noise, generating reconstructions that are more robust and accurate under challenging experimental conditions. As a result, both models show potential for handling complex, noisy datasets in 4D more effectively than SART and Noise2inverse, offering a promising approach for 4D reconstructions in high-noise environments.
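The FSC-based resolution estimate mentioned above relies on correlating two volumes shell by shell in Fourier space. The following NumPy sketch computes such an FSC curve; the function name and the integer-radius shell binning are illustrative assumptions, and the threshold criterion used to convert the curve into a resolution value (ref. 67) is deliberately left out.

```python
import numpy as np

def fourier_shell_correlation(vol_a, vol_b, n_shells=None):
    """Return the FSC curve of two equally shaped real-valued 3D volumes."""
    fa = np.fft.fftshift(np.fft.fftn(vol_a))
    fb = np.fft.fftshift(np.fft.fftn(vol_b))
    # Distance of every Fourier voxel from the zero-frequency voxel.
    axes = [np.arange(n) - n // 2 for n in vol_a.shape]
    grids = np.meshgrid(*axes, indexing="ij")
    radius = np.sqrt(sum(g.astype(float) ** 2 for g in grids))
    shell = np.rint(radius).astype(int)
    if n_shells is None:
        n_shells = min(vol_a.shape) // 2
    valid = shell < n_shells
    idx = shell[valid]
    # Shell-wise sums of the cross term and of the two power spectra.
    cross = np.bincount(idx, weights=(fa * np.conj(fb)).real[valid], minlength=n_shells)
    pow_a = np.bincount(idx, weights=(np.abs(fa) ** 2)[valid], minlength=n_shells)
    pow_b = np.bincount(idx, weights=(np.abs(fb) ** 2)[valid], minlength=n_shells)
    denom = np.sqrt(pow_a * pow_b)
    return np.divide(cross, denom, out=np.zeros(n_shells), where=denom > 0)
```

The resolution is then read off where the curve falls below a chosen threshold; which threshold is appropriate depends on the criterion adopted.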

4D-ONIX has also been applied to experimental data collected at European XFEL, where collisions of binary droplets were recorded at 10 keV at a 1.128 MHz frame rate using XMPI with two split beamlets26. 4D-ONIX can reconstruct a complete 3D movie capturing the collision of water droplets from the experimental XMPI dataset, using just two projections for each dynamical process. The temporal resolution of the retrieved 3D movie was 0.89 μs, which is three orders of magnitude faster than state-of-the-art time-resolved tomography15. Unfortunately, the retrieved reconstructions may suffer from imperfections because (i) only two sequences of the droplet collision were captured, with a minimal shift in sample orientation (23.8°), and (ii) the recorded projections suffered from noise and imaging artifacts.
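As a quick check of the quoted temporal resolution, and assuming that it is simply the inverse of the frame rate f, the arithmetic is:

```latex
\Delta t = \frac{1}{f} = \frac{1}{1.128 \times 10^{6}\,\mathrm{Hz}} \approx 0.887\,\mu\mathrm{s} \approx 0.89\,\mu\mathrm{s}
```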

4D-ONIX is a data-driven approach, and its performance is determined by the quality and quantity of the training dataset. In this work, 4D-ONIX has been trained using just two to three projections, which is an ill-posed problem that can limit the accuracy of the reconstruction. Setups of XMPI have also been demonstrated on SR sources at Super Photon Ring–8 GeV (SPring-8) in Japan and ESRF in France, which generate more projections and can capture dynamical processes at sub-millisecond timescales with ~10 μm spatial resolution24,25. These extra projections, as well as increasing the angular separation between projections (Δφ), can improve the performance of 4D-ONIX and reduce the number of experiments required to satisfactorily reconstruct 4D information. Thus, such advancements in XMPI will improve 4D-ONIX’s performance. The number of experiments is a crucial factor that affects the accuracy of the reconstruction. We have evaluated its effect for this specific scientific case, as illustrated in Figs. S1, S3, S5 and S7. One can observe that the model’s efficacy may be compromised when trained with only one or two experiments. Increasing the number of experiments for identical or similar samples is key to improving both convergence and the accuracy of the reconstructions. This way, the neural networks receive more information about the sample, contributing to improved model performance.

The 4D nature and the inclusion of X-ray physics in 4D-ONIX offer opportunities for further development. First, the temporal information available from 4D-ONIX’s reconstructions provides opportunities for improvement by introducing additional constraints. For instance, if the sample dynamics adhere to a partial differential equation (PDE), incorporating an extra PDE loss term into the loss function using physics-informed neural networks63 can not only better align the model with the laws of physics but also facilitate the interpolation between the dynamical processes. This will enable the generation of a continuous 3D movie with temporal resolution surpassing the recording rate of the XMPI experiment. Furthermore, the inclusion of a frame variation regularizer can further improve the reconstruction quality40. The 4D-ONIX code provides the option to include a frame variation regularizer in the time domain, minimizing the variance of adjacent timestamps. This can be particularly beneficial when dealing with high temporal resolutions or rapidly evolving samples. Second, 4D-ONIX offers adaptability and flexibility to be applied to other types of time-resolved imaging experiments, such as coherent diffraction imaging61,62 and phase-contrast experiments58–60. The propagation and interaction model implemented in 4D-ONIX can be readily adapted to suit different experiments’ specific imaging formation processes, e.g., to directly perform 4D phase reconstructions.
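A frame variation regularizer of the kind described above can be sketched as a penalty on the difference between reconstructions at adjacent timestamps. The version below uses the mean squared difference of neighbouring frames; this is an illustrative assumption, and the regularizer shipped with the 4D-ONIX code may be defined differently.

```python
import numpy as np

def frame_variation(frames):
    """Mean squared difference between adjacent frames.

    `frames` has shape (T, ...) with time as the first axis.
    """
    frames = np.asarray(frames, dtype=float)
    diffs = frames[1:] - frames[:-1]  # change from each timestamp to the next
    return float(np.mean(diffs ** 2))
```

In training, such a term would typically be added to the main loss with a small weight, so that it discourages flicker between timestamps without suppressing genuine dynamics.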

The proposed approach also faces several challenges. First, our algorithm requires multiple experiments with similar dynamics to achieve high-quality 4D reconstructions. As mentioned in our discussion, the performance of the model improves remarkably when trained on data from several experiments compared to a single experiment. The optimal number of experiments is influenced by several factors, including the number of projections and the angle between them, the total number of timestamps, and the complexity of the process being studied. As a result, the required number of experiments must be determined on a case-by-case basis. Second, our approach assumes varying sample orientations across different experiments to maximize coverage of the Fourier space, as demonstrated in Table S1. The effectiveness of the method may decline if altering the sample orientation between experiments is not feasible, which could limit the reconstruction quality. Third, our model is computationally heavy due to the integration of multiple CNNs, making it challenging for real-time or online reconstructions. While this complexity is necessary for achieving the desired resolution and accuracy, it does present challenges in scenarios where rapid processing is required.

In conclusion, we have presented 4D-ONIX, a physics-based self-supervised DL approach capable of reconstructing high-quality temporal and spatial information of the sample from as few as two to three projections per timestamp. 4D-ONIX provides an innovative solution for single-shot time-resolved X-ray imaging techniques like XMPI, which record a small number of sample projections simultaneously to avoid the scanning process used in X-ray tomography. By applying 4D-ONIX to simulated XMPI data of water droplet collision and experimental data of additive manufacturing, we have demonstrated the capacity of our approach to reconstruct high-resolution 3D movies from only two to three projections per timestamp, preserving the critical dynamics of the observed phenomenon. We envision that 4D-ONIX will open up possibilities for more sophisticated time-resolved X-ray imaging experiments and push the limits of time-resolved imaging when combined with XMPI and advanced high-brilliance X-ray sources such as fourth-generation SR sources and XFELs. The reconstructions provided by 4D-ONIX will allow for an in-depth exploration of rapid physical phenomena in fluid dynamics and materials science, potentially impacting fields like atmospheric aerosol research and additive manufacturing. Additionally, the self-supervised learning approach demonstrated by 4D-ONIX has the potential to provide new spatiotemporal resolutions through novel acquisition approaches that only acquire a small number of projections. Finally, the possibility to directly reconstruct 3D processes provides a framework to implement physics-based methods for dynamic studies.

Methods

Simulation of water droplet collision

Consider the domain Ω and time t ∈ (0, T], and denote Ω_T = Ω × (0, T]. In this paper, we use ψ ∈ [−1, 1] as the phase variable, with ψ = 1 labeling phase 1 (i.e., water), ψ = −1 labeling phase 2 (i.e., air), and ψ ∈ (−1, 1) representing the interface.

Consider the non-dimensional Reynolds and Weber numbers defined as

$$\mathrm{Re} = \frac{\rho_r U L}{\mu_r}, \qquad \mathrm{We} = \frac{\rho_r U^{2} L}{\sigma} \tag{1}$$

with U the characteristic velocity, L the characteristic length scale, σ the surface tension, and ρr = ρ1 and μr = μ1 as the reference density and viscosity for non-dimensionalization.

Following refs. 49 and 50, and using volume-averaged densities and viscosities

| 2 |

the fluid flow is described by the incompressible Navier–Stokes equations in non-dimensionalized form with potential surface tension η ∇ ψ

| 3 |

| 4 |

with an appropriate combination of boundary conditions for velocity u and pressure p.

The movement and shape of the interface are described by the Cahn–Hilliard equations, given a double-well potential W(ψ) with ∂ψW(ψ) = (ψ² − 1)ψ:

| 5 |

| 6 |

where ψ is the phase variable, η the chemical potential, ω a mobility parameter, and ε an artificial parameter describing the interface thickness. The equations are, in our case, equipped with natural boundary conditions.
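For orientation, one commonly used non-dimensional form of the Navier–Stokes–Cahn–Hilliard system is sketched below. It is a generic textbook-style form written under several assumptions, namely linear interpolation of the material properties in ψ, a surface-tension prefactor c_σ (a hypothetical symbol, typically proportional to 1/We), an ε² scaling of the gradient term, and the quartic double-well W(ψ) = (ψ² − 1)²/4, which is consistent with the stated derivative. The scalings in the authors' Eqs. (2)–(6) may differ.

```latex
\begin{aligned}
\rho(\psi) &= \tfrac{1+\psi}{2} + \tfrac{\rho_2}{\rho_1}\,\tfrac{1-\psi}{2}, \qquad
\mu(\psi) = \tfrac{1+\psi}{2} + \tfrac{\mu_2}{\mu_1}\,\tfrac{1-\psi}{2}, \\
\rho(\psi)\left(\partial_t \mathbf{u} + (\mathbf{u}\cdot\nabla)\mathbf{u}\right)
  - \tfrac{1}{\mathrm{Re}}\,\nabla\cdot\left(\mu(\psi)\left(\nabla\mathbf{u} + \nabla\mathbf{u}^{\top}\right)\right)
  + \nabla p &= c_\sigma\,\eta\,\nabla\psi, \\
\nabla\cdot\mathbf{u} &= 0, \\
\partial_t \psi + \mathbf{u}\cdot\nabla\psi &= \nabla\cdot\left(\omega\,\nabla\eta\right), \\
\eta &= \partial_\psi W(\psi) - \varepsilon^{2}\,\Delta\psi, \qquad
W(\psi) = \tfrac{1}{4}\left(\psi^{2}-1\right)^{2}.
\end{aligned}
```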

Similar to ref. 50, low-order Finite Element techniques are used to discretize the Navier–Stokes–Cahn–Hilliard equations. For the Navier–Stokes Eqs. (3) and (4), a pressure-correction projection method with the second-order backward differencing formula (BDF2) in time, described in ref. 71, and a Taylor–Hood conforming Finite Element pair for (u, p) in space are used. For the Cahn–Hilliard Eqs. (5) and (6), a conforming Finite Element pair for (ψ, η) and the Crank–Nicolson scheme in time with mass lumping are used. The equations are decoupled by solving them sequentially: first the interface motion, using the velocity from the previous time step, followed by a Navier–Stokes solve using the updated phase variable. This works well for reasonably small time steps. The resulting linear systems are solved with a (S)SOR-preconditioned GMRES or CG method from the DUNE-ISTL module72 with a tolerance of 10⁻⁸. To reduce the computational effort, mesh adaptation based on the newest vertex bisection is applied73,74. The refinement indicator is based on the phase variable ψ and defined as θ(ψ) = 2(ψ + 1)(1 − ψ). A mesh element is refined when θ(ψ) > 0.15 and coarsened whenever θ(ψ) < 0.0525. The implementation is done in the open-source framework DUNE and, in particular, DUNE-FEM75,76 using the Python-based user interfaces, which allow the description of weak forms using UFL77.
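The refinement rule stated above can be written as a small marking function. This is a minimal sketch, assuming a single representative value of ψ per mesh element; how ψ is sampled on an element, and the function names, are assumptions and not taken from the DUNE implementation.

```python
def refinement_indicator(psi):
    """theta(psi) = 2 (psi + 1) (1 - psi): largest at the interface, zero in bulk phases."""
    return 2.0 * (psi + 1.0) * (1.0 - psi)

def mark_element(psi, refine_above=0.15, coarsen_below=0.0525):
    """Return 'refine', 'coarsen', or 'keep' for one element value of psi."""
    theta = refinement_indicator(psi)
    if theta > refine_above:
        return "refine"
    if theta < coarsen_below:
        return "coarsen"
    return "keep"
```

The gap between the two thresholds acts as a hysteresis band, so elements near the edge of the interface region are not refined and coarsened in alternating steps.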

For the simulations carried out in this work, parameters resembling the experiment were used. In particular, , We = 6.94, U = 2.5 m/s, L = 8 × 10⁻⁵ m, ρ1 = 1000 kg/m³, ρ2 = 1 kg/m³, μ1 = 10⁻³ N s/m² and μ2 = 10⁻⁵ N s/m². For the Cahn–Hilliard equation, we used ε = 4h with h being the initial grid width. Figure 6 shows the droplet simulation at different stages. 144 cores were used for one simulation, and the average run time per simulation was 3 h. In total, 16 simulations were carried out with volume ratios of the two droplets varying between 0%, , , and 10%. The same initial data variation was applied to the initial droplet velocities.
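The listed parameters can be cross-checked against the dimensionless numbers. The sketch below assumes the standard definitions Re = ρr U L / μr and We = ρr U² L / σ with ρr = ρ1 and μr = μ1; the surface tension σ is not quoted in the text, so it is back-computed here from We as an illustration.

```python
# Parameters quoted in the text (SI units).
rho1 = 1000.0   # reference density, kg/m^3
mu1 = 1e-3      # reference viscosity, N s/m^2
U = 2.5         # characteristic velocity, m/s
L = 8e-5        # characteristic length, m
We = 6.94       # Weber number

# Assumed standard definitions of the Reynolds and Weber numbers.
Re = rho1 * U * L / mu1
sigma = rho1 * U ** 2 * L / We  # surface tension implied by We, N/m

print(f"Re = {Re:.1f}, implied sigma = {sigma:.4f} N/m")
```

Under these assumptions the implied surface tension is close to the textbook value for a water-air interface, which suggests the parameter set is self-consistent.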

Fig. 6. Equal-sized centered collision of two droplets viewed from different angles.

Dynamic grid adaptation is used, which reduces the computational effort by a factor of 40.

4D-ONIX algorithm

As shown in Fig. 1b, 4D-ONIX comprises three networks. The first network is an encoder (E), implemented using CNNs. The encoder sees all of the input projection images and extracts latent features of the sample from the projections. By transferring knowledge across different timestamps, it learns the general features of the sample, which is crucial for accurate 3D reconstruction from sparse projections. The second network is an IoR generator (G), formed by an MLP. It learns the mapping from 4D spatial-temporal coordinates (x, t) to the refractive index of the sample n (δ, β), with the assistance of the encoder. Here, δ and β are real and positive numbers that describe the real and imaginary parts of the complex refractive index, respectively. The generator takes as input the extracted latent features from the encoder and the 4D coordinates at each spatial-temporal point. It is trained to output the value of the refractive index at each 4D point. Predicted projections can be calculated by integrating the output refractive index along the line of propagation, following the law of X-ray propagation and interaction with matter64.

Figure 7 illustrates the process of unseen view prediction. The third network is a CNN discriminator (D). The discriminator learns to minimize the difference between the image patches from the real projections and the predictions generated by 4D-ONIX. It sees both the image patches from the real projections and the 4D-ONIX predictions, and it learns to distinguish the fake ones from the real images. The encoder and the generator are optimized based on the feedback from the discriminator. They are trained to fool the discriminator by generating indistinguishable images, leading to high-quality 3D reconstruction of the sample.

Fig. 7. Unseen view prediction from 4D-ONIX.

The Index of Refraction (IoR) Generator calculates the refractive index at each 4D point (x, t). For each time point, multiple query points are taken along a specific ray direction to obtain the refractive index n (δ, β) along that ray. The refractive index is integrated along the ray using the physical principles of X-ray interaction with matter. This integration provides the value of each pixel in the predicted image. By utilizing more rays, we can generate a projection image.
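The ray integration described above can be sketched with the standard projection approximation, in which the transmitted intensity is exp(−2k∫β ds) and the phase shift is −k∫δ ds, with k = 2π/λ. In this sketch the trained generator is replaced by a toy analytic field (a static sphere); the function names, the trapezoidal sampling, and the neglect of free-space propagation are assumptions and not the 4D-ONIX implementation.

```python
import numpy as np

def make_sphere_field(radius, delta, beta):
    """Toy stand-in for the IoR generator: a static homogeneous sphere at the origin."""
    def field(points, t):
        inside = np.linalg.norm(points, axis=1) <= radius
        return delta * inside, beta * inside
    return field

def project_ray(ior_field, origin, direction, t, length, wavelength, n_samples=256):
    """Integrate (delta, beta) along one ray and return (intensity, phase)."""
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    direction = direction / np.linalg.norm(direction)
    s = np.linspace(0.0, length, n_samples)        # query positions along the ray
    points = origin + s[:, None] * direction       # (n_samples, 3) coordinates
    delta, beta = ior_field(points, t)
    ds = s[1] - s[0]
    int_delta = np.sum(0.5 * (delta[1:] + delta[:-1])) * ds  # trapezoidal rule
    int_beta = np.sum(0.5 * (beta[1:] + beta[:-1])) * ds
    k = 2.0 * np.pi / wavelength
    return float(np.exp(-2.0 * k * int_beta)), float(-k * int_delta)
```

Repeating this for one ray per detector pixel yields a predicted projection for a given view angle and timestamp.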

4D-ONIX is trained by optimizing a loss function based on adversarial learning, as expressed in Eq. (7).

| 7 |

where c_v and its predicted counterpart refer to image patches from the real and predicted projections, respectively. The simulation dataset included both absorption and phase contrast, resulting in two-channel representations of both patches. Since the phase contrast in our simulations was directly proportional to the absorption contrast, only the absorption images were shown in the present work. In the case of the experimental data, only absorption-contrast images were obtained. Therefore, both patches are representations of absorption contrast. The discriminator is trained to minimize the difference between these two patches. The data distribution, denoted as p_D, represents the distribution over the collected projections in our experiments, which are considered real projections by the discriminator. On the other hand, p_ν represents the distribution over all generated predictions, which are considered fake projections by the discriminator. The expectation operator in Eq. (7) denotes the expectation of a function over the indicated distribution. By minimizing the difference between the real and predicted projections, the discriminator provides feedback to the generator and the encoder networks, enabling them to generate more accurate and realistic 3D reconstructions.
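For readers unfamiliar with adversarial training, the textbook GAN objective of ref. 33 has the following form. It is shown only as an illustration of the structure described above: the hat notation for the predicted patch is an assumption, and the loss actually used in Eq. (7) may differ, for example through a non-saturating variant or additional regularization terms.

```latex
\min_{E,\,G}\;\max_{D}\;
\mathbb{E}_{c_v \sim p_D}\left[\log D(c_v)\right]
+ \mathbb{E}_{\hat{c}_\nu \sim p_\nu}\left[\log\left(1 - D(\hat{c}_\nu)\right)\right]
```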

Network and training details

For the implementation, we used ResNet34 (ref. 78) as encoders and a PatchGAN discriminator35 as the discriminator. The generator was built from five layers of ResBlocks78, where the first three layers contain multiple parallel weight-sharing ResBlocks, with the number of parallel blocks equal to the number of constraints used. An average pooling operation was applied after the first three layers to average over the parallel blocks, and the last two layers introduced new learnable parameters to the networks. We used the same sampling method as in ref. 38 to extract image patches, where each image patch was formed by sampling a 32 × 32 square grid with a flexible scale, position, and stride.
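The patch sampling described above can be illustrated as follows. This sketch draws a 32 × 32 grid of pixel indices with a random integer stride and a random position; the method of ref. 38 samples continuous scales and coordinates, so the integer stride and the function name used here are simplifying assumptions.

```python
import numpy as np

def sample_patch_indices(height, width, patch=32, rng=None):
    """Return row and column indices of a patch x patch grid with random stride and offset."""
    rng = np.random.default_rng() if rng is None else rng
    max_stride = min((height - 1) // (patch - 1), (width - 1) // (patch - 1))
    if max_stride < 1:
        raise ValueError("image is smaller than the requested patch grid")
    stride = int(rng.integers(1, max_stride + 1))  # sets the scale of the patch
    extent = (patch - 1) * stride                  # span covered by the grid in pixels
    top = int(rng.integers(0, height - extent))    # random position of the grid
    left = int(rng.integers(0, width - extent))
    rows = top + stride * np.arange(patch)
    cols = left + stride * np.arange(patch)
    return rows, cols
```

A patch is then extracted with `image[np.ix_(rows, cols)]`; large strides give coarse patches that cover most of the projection, while a stride of one gives a dense local crop.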

GANs are known to suffer from local equilibria and mode collapse79,80. Therefore, in the first five epochs of the training, we forced 4D-ONIX to learn only the self-consistency over the two recorded projections. This consistency is enforced by optimizing an MSE loss function between the recorded projections and the network predictions, as expressed in Eq. (8).

| 8 |

where c_v and its predicted counterpart denote image patches from the real and predicted projections, while v and ν stand for the view angles of the recorded projections and the predictions, respectively. The adversarial loss was applied starting from the sixth epoch, once convergence was obtained from the consistency of the constraining projections. The ADAM optimizer81 with a mini-batch size of 8 was used throughout the training. For the training on the water droplet datasets, we set the learning rate to 0.0001 for the networks and decayed it by 0.1 every 100 epochs. The results presented in the Self-supervised 4D reconstruction approach section were the results of 200 epochs, which took around 70 h of training on an NVIDIA V100 GPU with 32 GB of RAM.
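The training schedule for the water droplet data can be summarized in two small helper functions. They are a sketch under two assumptions: epochs are counted from 1, and "decayed by 0.1" means the learning rate is multiplied by 0.1 every 100 epochs. The function names are hypothetical.

```python
def use_adversarial_loss(epoch, warmup_epochs=5):
    """True once the MSE-only warm-up (first five epochs) is over."""
    return epoch > warmup_epochs

def learning_rate(epoch, base_lr=1e-4, decay=0.1, step=100):
    """Step decay: multiply the base rate by `decay` after every `step` epochs."""
    return base_lr * decay ** ((epoch - 1) // step)
```

With these assumptions, a 200-epoch run uses a rate of 1e-4 for epochs 1 to 100 and 1e-5 for epochs 101 to 200.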

For the experimental additive manufacturing data, we employed a random dice mode for training, where half of the iterations used GAN loss and the other half used MSE loss. The learning rate was set to 0.0001, and no decay was applied. The results presented in the Self-supervised 4D reconstruction approach section were based on 400 epochs of training, which took 35 h on an NVIDIA A100 GPU with 80 GB of RAM.
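The random dice mode can be pictured as a per-iteration coin flip between the two losses. The sketch below assumes an independent draw with probability 0.5 at every iteration; the exact selection mechanism in the 4D-ONIX code is not specified in the text, and the function name is hypothetical.

```python
import random

def pick_loss(rng, p_gan=0.5):
    """Choose the loss for one training iteration: 'gan' with probability p_gan, else 'mse'."""
    return "gan" if rng.random() < p_gan else "mse"

# Example: over many iterations roughly half use each loss.
rng = random.Random(0)
choices = [pick_loss(rng) for _ in range(10000)]
gan_fraction = choices.count("gan") / len(choices)
```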

Acknowledgements

We are grateful to Z. Matej for his support and access to the GPU-computing cluster at MAX IV. We are also grateful to Malgorzata Makowska et al. for sharing the additive manufacturing data to demonstrate the capabilities of 4D-XMPI. These data were acquired within the framework of the project “Operando tomography of Selective Laser Additive Manufacturing” funded by the SNF Spark grant CRSK-2_196085. This work has received funding from ERC-2020-STG 3DX-FLASH 948426 and the HorizonEIC-2021-PathfinderOpen-01-01, MHz-TOMOSCOPY 101046448.

Author contributions

Y.Z. contributed to computational experiments, data analysis, code development, and manuscript writing. Z.Y. contributed to computational experiments and data analysis. R.K. contributed to the simulation of water droplet collisions and manuscript writing. T.R. contributed to project planning, data analysis, and manuscript writing. P.V.-P. supervised the project and contributed to project planning, data analysis, and manuscript writing. All authors reviewed and provided feedback on the manuscript.

Peer review

Peer review information

Communications Engineering thanks the anonymous reviewers for their contribution to the peer review of this work. Primary Handling Editors: Ros Daw, Anastasiia Vasylchenkova, Miranda Vinay.

Funding

Open access funding provided by Lund University.

Data availability

The water droplet collision data that support the findings of this study are available in figshare with the doi: 10.6084/m9.figshare.28533098 (ref. 82). The additive manufacturing data that support the findings of this study are available in the PSI Public Data Repository with the doi: 10.16907/d64d2e8c-b593-47b8-ab90-4ddbd19bedb5 (ref. 68).

Code availability

The 4D-ONIX code is available at ref. 83.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

The online version contains supplementary material available at 10.1038/s44172-025-00390-w.

References

- 1. Stock, S. X-ray microtomography of materials. Int. Mater. Rev. 44, 141–164 (1999).

- 2. Maire, E. & Withers, P. J. Quantitative X-ray tomography. Int. Mater. Rev. 59, 1–43 (2014).

- 3. Withers, P. J. et al. X-ray computed tomography. Nat. Rev. Methods Prim. 1, 18 (2021).

- 4. Baruchel, J., Buffiere, J.-Y. & Maire, E. X-ray Tomography in Material Science (Hermes Science Publications, 2000).

- 5. Ebner, M., Marone, F., Stampanoni, M. & Wood, V. Visualization and quantification of electrochemical and mechanical degradation in Li ion batteries. Science 342, 716–720 (2013).

- 6. Hendee, W. R. Physics and applications of medical imaging. Rev. Mod. Phys. 71, S444 (1999).

- 7. Mizutani, R. & Suzuki, Y. X-ray microtomography in biology. Micron 43, 104–115 (2012).

- 8. Mees, F., Swennen, R., Geet, M. V. & Jacobs, P. Applications of X-ray Computed Tomography in the Geosciences Vol. 215, 1–6 (Geological Society, 2003).

- 9. Bieberle, M. & Barthel, F. Combined phase distribution and particle velocity measurement in spout fluidized beds by ultrafast X-ray computed tomography. Chem. Eng. J. 285, 218–227 (2016).

- 10. Schug, S. & Arlt, W. Imaging of fluid dynamics in a structured packing using X-ray computed tomography. Chem. Eng. Technol. 39, 1561–1569 (2016).

- 11. Östman, E. & Persson, S. Application of X-ray tomography in non-destructive testing of fibre reinforced plastics. Mater. Des. 9, 142–147 (1988).

- 12. Hussain, A. & Akhtar, S. Review of non-destructive tests for evaluation of historic masonry and concrete structures. Arab. J. Sci. Eng. 42, 925–940 (2017).

- 13. Villanova, J. et al. Fast in situ 3D nanoimaging: a new tool for dynamic characterization in materials science. Mater. Today 20, 354–359 (2017).

- 14. Shahani, A. J., Xiao, X., Lauridsen, E. M. & Voorhees, P. W. Characterization of metals in four dimensions. Mater. Res. Lett. 8, 462–476 (2020).

- 15. García-Moreno, F. et al. Tomoscopy: time-resolved tomography for dynamic processes in materials. Adv. Mater. 33, 2104659 (2021).

- 16. Hoshino, M. et al. Development of an X-ray real-time stereo imaging technique using synchrotron radiation. J. Synchrotron Radiat. 18, 569–574 (2011).

- 17. Hoshino, M., Sera, T., Uesugi, K. & Yagi, N. Development of X-ray triscopic imaging system towards three-dimensional measurements of dynamical samples. J. Instrum. 8, C05002 (2013).

- 18. Villanueva-Perez, P. et al. Hard X-ray multi-projection imaging for single-shot approaches. Optica 5, 1521–1524 (2018).

- 19. Voegeli, W. et al. Multibeam X-ray optical system for high-speed tomography. Optica 7, 514–517 (2020).

- 20. Vagovic, P., Bellucci, V., Villanueva-Perez, P. & Yashiro, W. Multi beam splitting and redirecting apparatus for a tomoscopic inspection, tomoscopic inspection apparatus and method for creating a three-dimensional tomoscopic image of a sample. Global patent index EP4160623A1 (2023).

- 21. Bilderback, D. H., Elleaume, P. & Weckert, E. Review of third and next generation synchrotron light sources. J. Phys. B At. Mol. Optical Phys. 38, S773 (2005).

- 22. Shin, S. New era of synchrotron radiation: fourth-generation storage ring. AAPPS Bull. 31, 21 (2021).

- 23. McNeil, B. W. & Thompson, N. R. X-ray free-electron lasers. Nat. Photonics 4, 814–821 (2010).

- 24. Liang, X. et al. Sub-millisecond 4D X-ray tomography achieved with a multibeam X-ray imaging system. Appl. Phys. Express 16, 072001 (2023).

- 25. Asimakopoulou, E. M. et al. Development towards high-resolution kHz-speed rotation-free volumetric imaging. Opt. Express 32, 4413–4426 (2024).

- 26. Villanueva-Perez, P. et al. Megahertz X-ray multi-projection imaging. Preprint at https://arxiv.org/abs/2305.11920 (2023).

- 27. Jacobsen, C. X-ray Microscopy (Cambridge University Press, 2019).

- 28. Bellucci, V. et al. Hard X-ray stereographic microscopy for single-shot differential phase imaging. Opt. Express 31, 18399–18406 (2023).

- 29. Duarte, J. et al. Computed stereo lensless X-ray imaging. Nat. Photonics 13, 449–453 (2019).

- 30. Fainozzi, D. et al. Three-dimensional coherent diffraction snapshot imaging using extreme-ultraviolet radiation from a free electron laser. Optica 10, 1053–1058 (2023).

- 31. Ippoliti, M., Billè, F., Karydas, A. G., Gianoncelli, A. & Kourousias, G. Reconstruction of 3D topographic landscape in soft X-ray fluorescence microscopy through an inverse X-ray-tracing approach based on multiple detectors. Sci. Rep. 12, 20145 (2022).

- 32. LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

- 33. Goodfellow, I. et al. Generative adversarial networks. Commun. ACM 63, 139–144 (2020).

- 34. Ulyanov, D., Vedaldi, A. & Lempitsky, V. Deep image prior. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 9446–9454 (IEEE, 2018).

- 35. Ledig, C. et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 4681–4690 (IEEE, 2017).

- 36. Ahishakiye, E., Bastiaan Van Gijzen, M., Tumwiine, J., Wario, R. & Obungoloch, J. A survey on deep learning in medical image reconstruction. Intell. Med. 1, 118–127 (2021).

- 37. Mildenhall, B. et al. NeRF: representing scenes as neural radiance fields for view synthesis. Commun. ACM 65, 99–106 (2021).

- 38. Schwarz, K., Liao, Y., Niemeyer, M. & Geiger, A. Graf: generative radiance fields for 3D-aware image synthesis. Adv. Neural Inf. Process. Syst. 33, 20154–20166 (2020).

- 39. Yu, A., Ye, V., Tancik, M. & Kanazawa, A. pixelNeRF: neural radiance fields from one or few images. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 4578–4587 (IEEE, 2021).

- 40. Pumarola, A., Corona, E., Pons-Moll, G. & Moreno-Noguer, F. D-NeRF: neural radiance fields for dynamic scenes. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 10318–10327 (IEEE, 2021).

- 41. Zhang, Y., Yao, Z., Ritschel, T. & Villanueva-Perez, P. Onix: an X-ray deep-learning tool for 3D reconstructions from sparse views. Appl. Res. 2, e202300016 (2023).

- 42. Maas, K. W., Pezzotti, N., Vermeer, A. J., Ruijters, D. & Vilanova, A. NeRF for 3D reconstruction from X-ray angiography: possibilities and limitations. In Proc. VCBM 2023: Eurographics Workshop on Visual Computing for Biology and Medicine 29–40 (Eurographics Association, 2023).

- 43. Corona-Figueroa, A. et al. Mednerf: medical neural radiance fields for reconstructing 3D-aware CT-projections from a single X-ray. In Proc. 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC) 3843–3848 (IEEE, 2022).

- 44. Zheng, Y. & Hatzell, K. B. Ultrasparse view X-ray computed tomography for 4D imaging. ACS Appl. Mater. Interfaces 15, 35024–35033 (2023).

- 45. Adam, J., Lindblad, N. & Hendricks, C. The collision, coalescence, and disruption of water droplets. J. Appl. Phys. 39, 5173–5180 (1968).

- 46. Planchette, C., Lorenceau, E. & Brenn, G. The onset of fragmentation in binary liquid drop collisions. J. Fluid Mech. 702, 5–25 (2012).

- 47. Grzybowski, H. & Mosdorf, R. Modelling of two-phase flow in a minichannel using level-set method. J. Phys. Conf. Ser. 530, 012049 (2014).

- 48. Makowska, M. G. et al. Operando tomographic microscopy during laser-based powder bed fusion of alumina. Commun. Mater. 4, 73 (2023).

- 49. Hosseini, B. S., Turek, S., Möller, M. & Palmes, C. Isogeometric analysis of the Navier-Stokes-Cahn-Hilliard equations with application to incompressible two-phase flows. J. Comput. Phys. 348, 171–194 (2017).

- 50. Lovrić, A., Dettmer, W. G. & Perić, D. Low order finite element methods for the Navier-Stokes-Cahn-Hilliard equations. Preprint at https://arxiv.org/abs/1911.06718 (2019).

- 51. Wang, Y. et al. Ultrafast X-ray study of dense-liquid-jet flow dynamics using structure-tracking velocimetry. Nat. Phys. 4, 305–309 (2008).

- 52. Loh, N. et al. Fractal morphology, imaging and mass spectrometry of single aerosol particles in flight. Nature 486, 513–517 (2012).

- 53. Bührer, M. et al. Unveiling water dynamics in fuel cells from time-resolved tomographic microscopy data. Sci. Rep. 10, 16388 (2020).

- 54. Martin, A. A. et al. Dynamics of pore formation during laser powder bed fusion additive manufacturing. Nat. Commun. 10, 1987 (2019).

- 55. Martin, A. A. et al. Ultrafast dynamics of laser-metal interactions in additive manufacturing alloys captured by in situ X-ray imaging. Mater. Today Adv. 1, 100002 (2019).

- 56. Kumar, S. et al. Machine learning techniques in additive manufacturing: a state of the art review on design, processes and production control. J. Intell. Manuf. 34, 21–55 (2023).

- 57. Jin, Z., Zhang, Z., Demir, K. & Gu, G. X. Machine learning for advanced additive manufacturing. Matter 3, 1541–1556 (2020).

- 58. Olbinado, M. P. et al. MHz frame rate hard X-ray phase-contrast imaging using synchrotron radiation. Opt. Express 25, 13857–13871 (2017).

- 59. Hagemann, J. et al. Single-pulse phase-contrast imaging at free-electron lasers in the hard X-ray regime. J. Synchrotron Radiat. 28, 52–63 (2021).

- 60. Zhang, Y. et al. PhaseGAN: a deep-learning phase-retrieval approach for unpaired datasets. Opt. Express 29, 19593–19604 (2021).

- 61.Rodriguez, J. A. et al. Three-dimensional coherent X-ray diffractive imaging of whole frozen-hydrated cells. IUCrJ2, 575–583 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Fan, J., Zhang, J. & Liu, Z. Coherent diffraction imaging of cells at advanced X-ray light sources. TrAC Trends Anal. Chem.171, 117492 (2023).

- 63.Raissi, M., Perdikaris, P. & Karniadakis, G. Physics-informed neural networks: a deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys.378, 686–707 (2019). [Google Scholar]

- 64.Paganin, D. Coherent X-Ray Optics 1st edn, 71–77 (Oxford University Press, 2006).

- 65.Wang, Z., Bovik, A. C., Sheikh, H. R. & Simoncelli, E. P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process.13, 600–612 (2004). [DOI] [PubMed] [Google Scholar]

- 66.Saxton, W. O. & Baumeister, W. The correlation averaging of a regularly arranged bacterial cell envelope protein. J. Microsc.127, 127–138 (1982). [DOI] [PubMed] [Google Scholar]

- 67. Van Heel, M. & Schatz, M. Fourier shell correlation threshold criteria. J. Struct. Biol. 151, 250–262 (2005).

- 68. Makowska, M. High-resolution time-resolved tomography of Selective Laser Melting of alumina. PSI Public Data Repository 10.16907/d64d2e8c-b593-47b8-ab90-4ddbd19bedb5 (2023).

- 69. Andersen, A. H. & Kak, A. C. Simultaneous algebraic reconstruction technique (SART): a superior implementation of the ART algorithm. Ultrason. Imaging 6, 81–94 (1984).

- 70. Hendriksen, A. A., Pelt, D. M. & Batenburg, K. J. Noise2Inverse: self-supervised deep convolutional denoising for tomography. IEEE Trans. Comput. Imaging 6, 1320–1335 (2020).

- 71. Guermond, J.-L. & Quartapelle, L. A projection FEM for variable density incompressible flows. J. Comput. Phys. 165, 167–188 (2000).

- 72. Blatt, M. & Bastian, P. On the generic parallelisation of iterative solvers for the finite element method. Int. J. Comput. Sci. Eng. 4, 56–69 (2008).

- 73. Alkämper, M. & Klöfkorn, R. Distributed newest vertex bisection. J. Parallel Distrib. Comput. 104, 1–11 (2017).

- 74. Alkämper, M., Dedner, A., Klöfkorn, R. & Nolte, M. The DUNE-ALUGrid Module. Arch. Numer. Softw. 4, 1–28 (2016).

- 75. Bastian, P. et al. The Dune framework: basic concepts and recent developments. CAMWA 81, 75–112 (2021).

- 76. Dedner, A. & Klöfkorn, R. Extendible and efficient Python framework for solving evolution equations with stabilized discontinuous Galerkin Method. Commun. Appl. Math. Comput. 4, 657–696 (2022).

- 77. Alnæs, M. S., Logg, A., Ølgaard, K. B., Rognes, M. E. & Wells, G. N. Unified form language: a domain-specific language for weak formulations of partial differential equations. ACM Trans. Math. Softw. 40, 9:1–9:37 (2014).

- 78. He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Conference on Computer Vision and Pattern Recognition 770–778 (IEEE, 2016).

- 79. Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning (MIT Press, 2016).

- 80. Kodali, N., Abernethy, J., Hays, J. & Kira, Z. On convergence and stability of GANs. Preprint at https://arxiv.org/abs/1705.07215 (2017).

- 81. Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. Preprint at https://arxiv.org/abs/1412.6980 (2014).

- 82. Zhang, Y., Yao, Z., Klöfkorn, R., Ritschel, T. & Villanueva-Perez, P. 4D water droplet collision datasets. Figshare 10.6084/m9.figshare.28533098 (2025).

- 83. Zhang, Y. 4D-ONIX for reconstructing 3D movies from sparse X-ray projections via deep learning. Version 1.0. Zenodo 10.5281/zenodo.14956708 (2025).

Associated Data

Data Availability Statement

The water droplet collision data that support the findings of this study are available in figshare with the doi:10.6084/m9.figshare.28533098 (ref. 82). The additive manufacturing data that support the findings of this study are available in the PSI Public Data Repository with the doi:10.16907/d64d2e8c-b593-47b8-ab90-4ddbd19bedb5 (ref. 68).

The 4D-ONIX code is available at ref. 83.