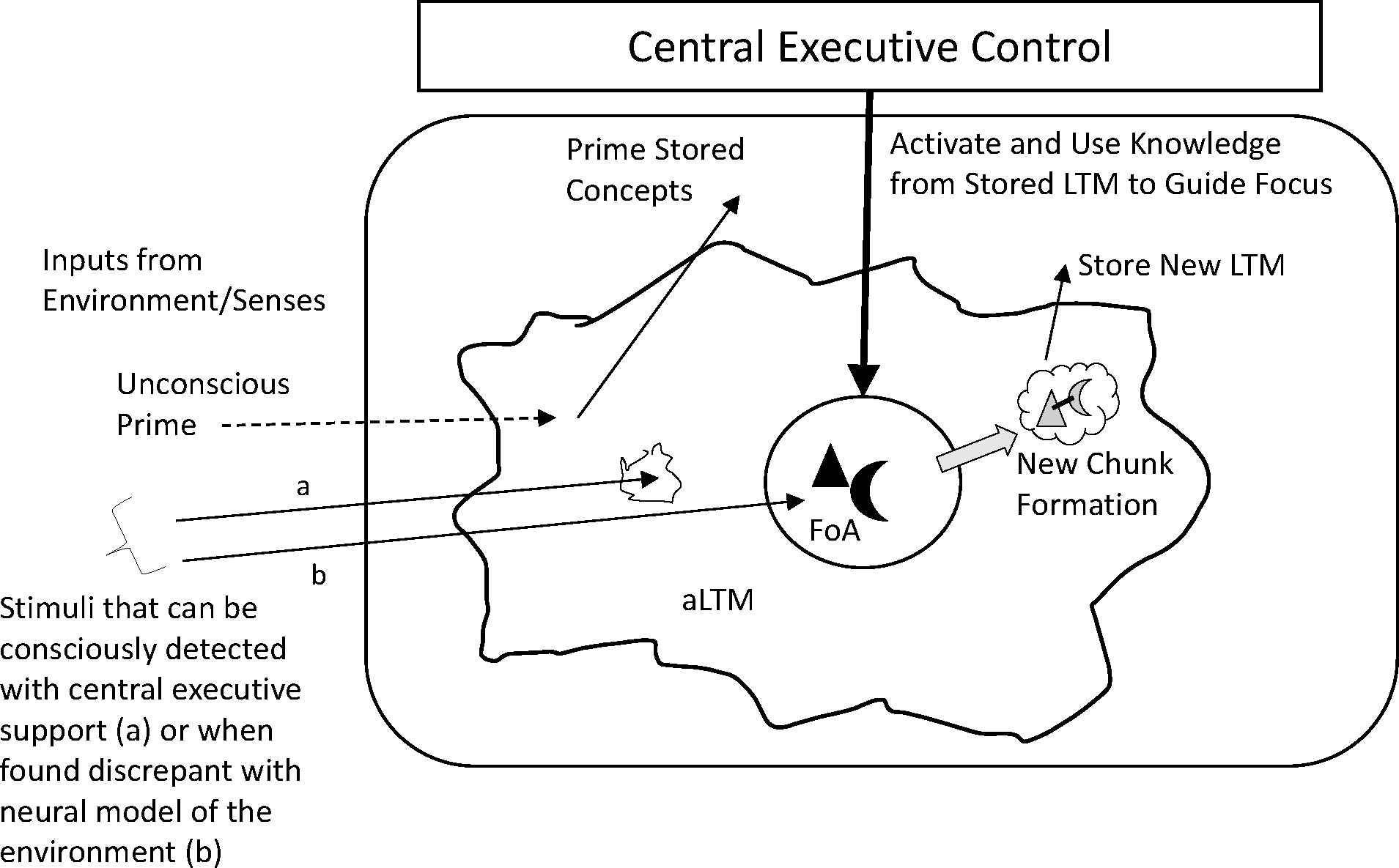

Figure 1.

Schematic representation of attention and long-term memory (LTM) in an embedded-processes view. Inputs from the environment pass into an activated subset of LTM (aLTM), represented by the large, irregular shape. Some subset of this information passes into the focus of attention (FoA), which is severely limited in capacity. Solid arrows from the environment represent information entering the FoA; this information is depicted as two shapes. Knowledge stored in LTM can be used to create structures (e.g., new chunks) from stimuli currently in the FoA. This allows the information to be offloaded from the FoA into aLTM (cloud with conjoined shapes) and stored as a new LTM representation. Primes presented either without conscious, explicit awareness (dashed-arrow input from the environment) or with awareness can activate stored concepts in LTM, which in turn allows related content to pass more easily into the FoA.